Giacomo D'Amicantonio

Mixture of Experts Guided by Gaussian Splatters Matters: A new Approach to Weakly-Supervised Video Anomaly Detection

Aug 08, 2025

Abstract: Video Anomaly Detection (VAD) is a challenging task due to the variability of anomalous events and the limited availability of labeled data. Under the Weakly-Supervised VAD (WSVAD) paradigm, only video-level labels are provided during training, while predictions are made at the frame level. Although state-of-the-art models perform well on simple anomalies (e.g., explosions), they struggle with complex real-world events (e.g., shoplifting). This difficulty stems from two key issues: (1) the inability of current models to address the diversity of anomaly types, as they process all categories with a shared model, overlooking category-specific features; and (2) the weak supervision signal, which lacks precise temporal information, limiting the ability to capture nuanced anomalous patterns blended with normal events. To address these challenges, we propose Gaussian Splatting-guided Mixture of Experts (GS-MoE), a novel framework that employs a set of expert models, each specialized in capturing specific anomaly types. These experts are guided by a temporal Gaussian splatting loss, enabling the model to leverage temporal consistency and enhance weak supervision. The Gaussian splatting approach encourages a more precise and comprehensive representation of anomalies by focusing on temporal segments most likely to contain abnormal events. The predictions from these specialized experts are integrated through a mixture-of-experts mechanism to model complex relationships across diverse anomaly patterns. Our approach achieves state-of-the-art performance, with a 91.58% AUC on the UCF-Crime dataset, and demonstrates superior results on XD-Violence and MSAD datasets. By leveraging category-specific expertise and temporal guidance, GS-MoE sets a new benchmark for VAD under weak supervision.

Just Dance with $π$! A Poly-modal Inductor for Weakly-supervised Video Anomaly Detection

May 19, 2025

Abstract: Weakly-supervised methods for video anomaly detection (VAD) are conventionally based merely on RGB spatio-temporal features, which continues to limit their reliability in real-world scenarios. This is because RGB features are not sufficiently distinctive to set apart categories such as shoplifting from visually similar events. Therefore, for robust VAD in complex real-world scenarios, it is essential to augment RGB spatio-temporal features with additional modalities. Motivated by this, we introduce the Poly-modal Induced framework for VAD: "PI-VAD", a novel approach that augments RGB representations with five additional modalities. Specifically, the modalities include sensitivity to fine-grained motion (Pose), three-dimensional scene and entity representation (Depth), surrounding objects (Panoptic masks), global motion (optical flow), as well as language cues (VLM). Each modality represents an axis of a polygon, streamlined to add salient cues to RGB. PI-VAD includes two plug-in modules, namely the Pseudo-modality Generation module and the Cross Modal Induction module, which generate modality-specific prototypical representations and thereby induce multi-modal information into RGB cues. These modules operate by performing anomaly-aware auxiliary tasks and require the five modality backbones only during training. Notably, PI-VAD achieves state-of-the-art accuracy on three prominent VAD datasets encompassing real-world scenarios, without requiring the computational overhead of five modality backbones at inference.

uTRAND: Unsupervised Anomaly Detection in Traffic Trajectories

Apr 19, 2024

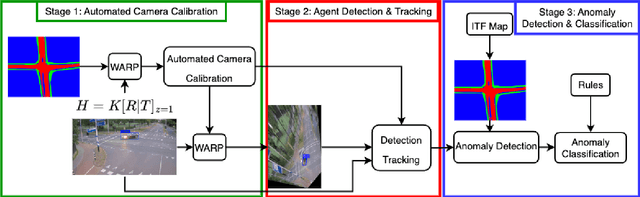

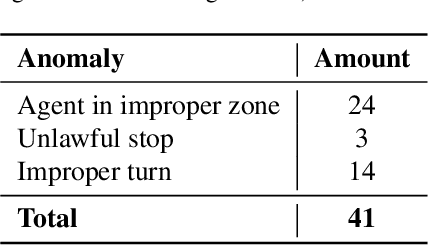

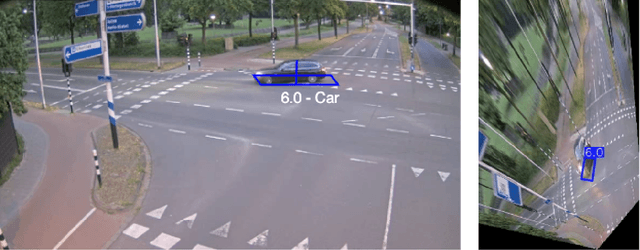

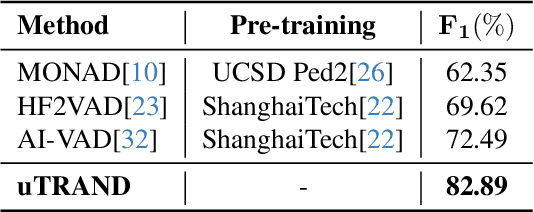

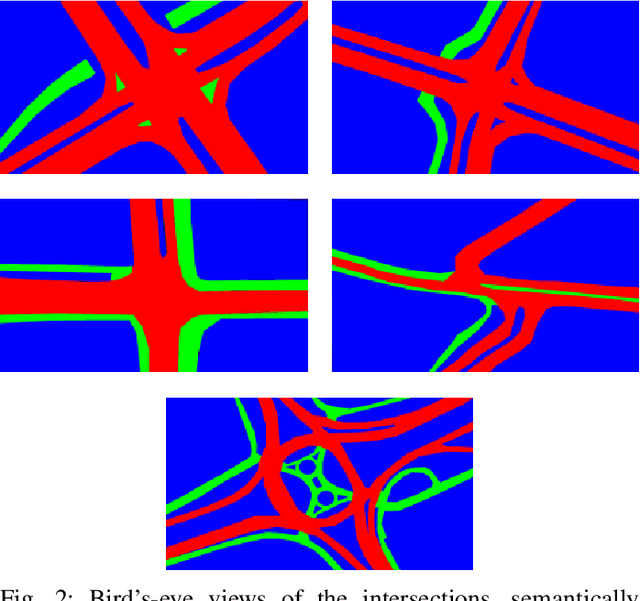

Abstract: Deep learning-based approaches have achieved significant improvements on public video anomaly datasets, but often do not perform well in real-world applications. This paper addresses two issues: the lack of labeled data and the difficulty of explaining the predictions of a neural network. To this end, we present a framework called uTRAND that shifts the problem of anomalous trajectory prediction from the pixel space to a semantic-topological domain. The framework detects and tracks all types of traffic agents in bird's-eye-view videos from traffic cameras mounted at an intersection. By conceptualizing the intersection as a patch-based graph, it is shown that the framework learns and models the normal behaviour of traffic agents without costly manual labeling. Furthermore, uTRAND allows simple rules to be formulated for classifying anomalous trajectories in a way suited to human interpretation. We show that uTRAND outperforms other state-of-the-art approaches on a dataset of anomalous trajectories collected in a real-world setting, while producing explainable detection results.

Automated Camera Calibration via Homography Estimation with GNNs

Nov 05, 2023

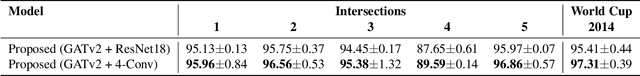

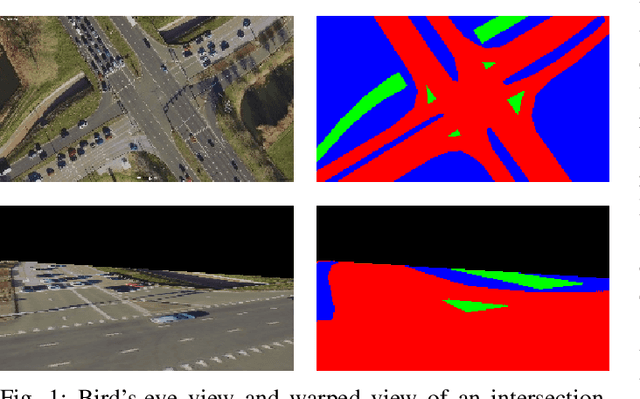

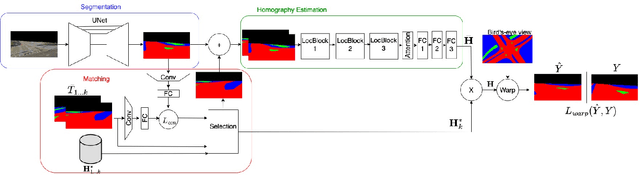

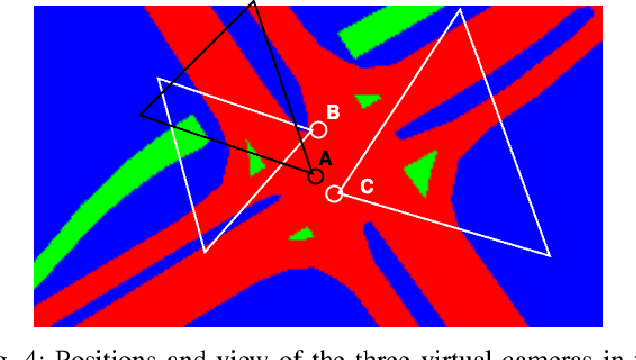

Abstract: Over the past few decades, a significant rise of camera-based applications for traffic monitoring has occurred. Governments and local administrations are increasingly relying on the data collected from these cameras to enhance road safety and optimize traffic conditions. However, for effective data utilization, it is imperative to ensure accurate and automated calibration of the involved cameras. This paper proposes a novel approach to address this challenge by leveraging the topological structure of intersections. We propose a framework involving the generation of a set of synthetic intersection viewpoint images from a bird's-eye-view image, framed as a graph of virtual cameras to model these images. Using the capabilities of Graph Neural Networks, we effectively learn the relationships within this graph, thereby facilitating the estimation of a homography matrix. This estimation leverages the neighbourhood representation for any real-world camera and is enhanced by exploiting multiple images instead of a single match. In turn, the homography matrix allows the retrieval of extrinsic calibration parameters. As a result, the proposed framework demonstrates superior performance on both synthetic datasets and real-world cameras, setting a new state-of-the-art benchmark.
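For readers unfamiliar with the term, a homography is a 3x3 matrix that maps points on one image plane to another (for example, from a camera view to a bird's-eye view). The following is a minimal sketch of that mapping only; it is not the paper's GNN-based estimation method, and the function name `apply_homography` and the example matrix are hypothetical, chosen purely for illustration.

```python
def apply_homography(H, point):
    """Map a 2D point through a 3x3 homography H (nested lists, row-major)."""
    x, y = point
    # Lift the point to homogeneous coordinates (x, y, 1) and multiply by H.
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity under this homography")
    # Divide by the third coordinate to return to 2D image coordinates.
    return (u / w, v / w)


# Hypothetical matrix (an assumption, not from the paper):
# scale by 2, then translate by (10, 5).
H_example = [
    [2.0, 0.0, 10.0],
    [0.0, 2.0, 5.0],
    [0.0, 0.0, 1.0],
]

mapped = apply_homography(H_example, (3.0, 4.0))
print(mapped)
```

In a general homography the bottom row is not `(0, 0, 1)`, so the division by `w` is what produces perspective effects.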

Homography Estimation in Complex Topological Scenes

Aug 02, 2023

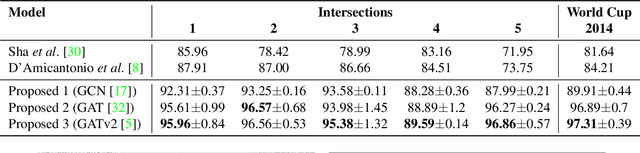

Abstract: Surveillance videos and images are used for a broad set of applications, ranging from traffic analysis to crime detection. Extrinsic camera calibration data is important for most analysis applications. However, security cameras are susceptible to environmental conditions and small camera movements, resulting in a need for an automated re-calibration method that can account for these varying conditions. In this paper, we present an automated camera-calibration process leveraging a dictionary-based approach that does not require prior knowledge of any camera settings. The method consists of a custom implementation of a Spatial Transformer Network (STN) and a novel topological loss function. Experiments reveal that the proposed method improves the IoU metric by up to 12% w.r.t. a state-of-the-art model across five synthetic datasets and the World Cup 2014 dataset.
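The IoU (intersection over union) metric mentioned above measures the overlap between two regions. The abstract does not say how the regions are represented, so the sketch below assumes two same-sized binary masks given as nested lists; the function name `mask_iou` and the example masks are hypothetical.

```python
def mask_iou(mask_a, mask_b):
    """Intersection over union of two same-sized binary masks (lists of 0/1 rows)."""
    if len(mask_a) != len(mask_b) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(mask_a, mask_b)
    ):
        raise ValueError("masks must have the same shape")
    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            if a and b:
                intersection += 1  # pixel set in both masks
            if a or b:
                union += 1  # pixel set in at least one mask
    # Two empty masks are treated as a perfect match (a convention, assumed here).
    return intersection / union if union else 1.0


# Hypothetical example: two 2x3 masks overlapping in the middle column.
predicted = [[1, 1, 0], [1, 1, 0]]
reference = [[0, 1, 1], [0, 1, 1]]
iou = mask_iou(predicted, reference)
print(iou)
```

Here the masks share 2 pixels and cover 6 pixels in total, so the IoU is 2/6.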
