uTRAND: Unsupervised Anomaly Detection in Traffic Trajectories


Apr 19, 2024

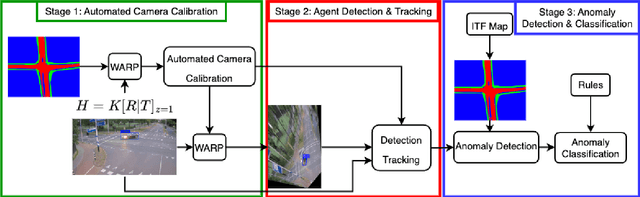

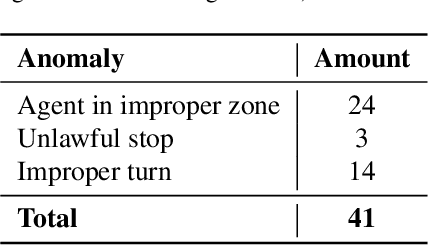

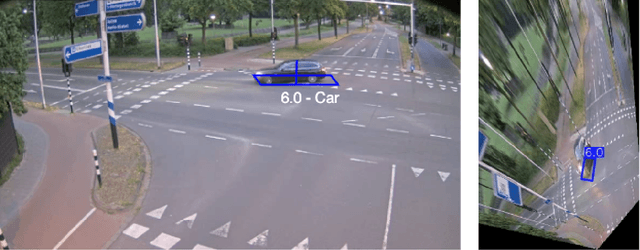

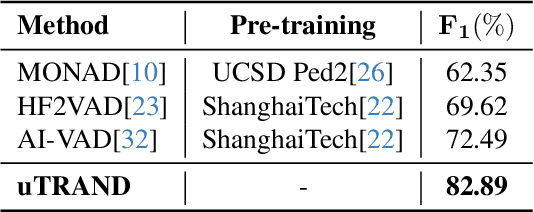

Deep learning-based approaches have achieved significant improvements on public video anomaly datasets, but often perform poorly in real-world applications. This paper addresses two issues: the lack of labeled data and the difficulty of explaining the predictions of a neural network. To this end, we present uTRAND, a framework that shifts the problem of anomalous trajectory prediction from the pixel space to a semantic-topological domain. The framework detects and tracks all types of traffic agents in bird's-eye-view videos from traffic cameras mounted at an intersection. We show that, by conceptualizing the intersection as a patch-based graph, the framework learns and models the normal behaviour of traffic agents without costly manual labeling. Furthermore, uTRAND allows simple rules to be formulated that classify anomalous trajectories in a way suited to human interpretation. We show that uTRAND outperforms other state-of-the-art approaches on a dataset of anomalous trajectories collected in a real-world setting, while producing explainable detection results.
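To make the patch-based graph idea more concrete, the following is a minimal sketch and not the uTRAND implementation. It assumes a uniform square grid of patches, trajectories given as lists of `(x, y)` positions in bird's-eye-view coordinates, and a simple "transition seen fewer than `min_count` times" rule; all function names, the `patch_size` parameter, and the threshold are hypothetical choices made for illustration only.

```python
from collections import Counter


def to_patch(point, patch_size):
    """Map an (x, y) position to the (col, row) index of its grid patch."""
    x, y = point
    return (int(x // patch_size), int(y // patch_size))


def patch_sequence(trajectory, patch_size):
    """Convert a trajectory into the patches it visits, dropping consecutive repeats."""
    seq = []
    for point in trajectory:
        patch = to_patch(point, patch_size)
        if not seq or seq[-1] != patch:
            seq.append(patch)
    return seq


def learn_transitions(trajectories, patch_size):
    """Count directed patch-to-patch transitions (graph edges) in unlabeled trajectories."""
    edges = Counter()
    for trajectory in trajectories:
        seq = patch_sequence(trajectory, patch_size)
        edges.update(zip(seq, seq[1:]))
    return edges


def rare_transitions(trajectory, edges, patch_size, min_count=1):
    """Return the transitions of a trajectory observed fewer than min_count times.

    An empty list means the trajectory only uses edges seen during training.
    """
    seq = patch_sequence(trajectory, patch_size)
    return [(a, b) for a, b in zip(seq, seq[1:]) if edges[(a, b)] < min_count]


# Hypothetical data: agents normally cross the scene from left to right.
normal = [[(1, 5), (11, 5), (21, 5)], [(2, 6), (12, 6), (22, 6)]]
edges = learn_transitions(normal, patch_size=10)

wrong_way = [(21, 5), (11, 5), (1, 5)]  # same lane, opposite direction
flagged = rare_transitions(wrong_way, edges, patch_size=10)
```

Under these assumptions, the returned list of flagged transitions doubles as a human-readable explanation: it names the exact patch-to-patch moves that were not observed in normal traffic.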
