Geoff McDonald

Whisper Leak: a side-channel attack on Large Language Models

Nov 05, 2025

Abstract: Large Language Models (LLMs) are increasingly deployed in sensitive domains including healthcare, legal services, and confidential communications, where privacy is paramount. This paper introduces Whisper Leak, a side-channel attack that infers user prompt topics from encrypted LLM traffic by analyzing packet size and timing patterns in streaming responses. Despite TLS encryption protecting content, these metadata patterns leak sufficient information to enable topic classification. We demonstrate the attack across 28 popular LLMs from major providers, achieving near-perfect classification (often >98% AUPRC) and high precision even at extreme class imbalance (10,000:1 noise-to-target ratio). For many models, we achieve 100% precision in identifying sensitive topics like "money laundering" while recovering 5-20% of target conversations. This industry-wide vulnerability poses significant risks for users under network surveillance by ISPs, governments, or local adversaries. We evaluate three mitigation strategies - random padding, token batching, and packet injection - finding that while each reduces attack effectiveness, none provides complete protection. Through responsible disclosure, we have collaborated with providers to implement initial countermeasures. Our findings underscore the need for LLM providers to address metadata leakage as AI systems handle increasingly sensitive information.
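To make the 10,000:1 class-imbalance figure in the Whisper Leak abstract concrete, the following minimal sketch computes precision from a classifier's true positive rate, its false positive rate, and the noise-to-target ratio. The function name and the operating points are hypothetical illustrations, not values or code from the paper; only the standard definition of precision is assumed.

```python
def precision_at_imbalance(tpr: float, fpr: float, noise_to_target: float) -> float:
    """Precision when there are `noise_to_target` negatives per positive.

    Per one target conversation we expect `tpr` true positives and
    `fpr * noise_to_target` false positives.
    """
    true_pos = tpr
    false_pos = fpr * noise_to_target
    if true_pos + false_pos == 0:
        raise ValueError("classifier flags nothing; precision is undefined")
    return true_pos / (true_pos + false_pos)


if __name__ == "__main__":
    # Hypothetical operating points: 10% recall at a 10,000:1 ratio.
    for fpr in (0.0, 1e-5, 1e-3):
        p = precision_at_imbalance(tpr=0.10, fpr=fpr, noise_to_target=10_000)
        print(f"FPR={fpr:g} -> precision={p:.4f}")
```

Under these assumed numbers, a false positive rate of one in 100,000 already drops precision to about one half, which is why 100% precision at this ratio implies essentially no false positives on the noise traffic.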

AutoAttacker: A Large Language Model Guided System to Implement Automatic Cyber-attacks

Mar 02, 2024

Abstract: Large language models (LLMs) have demonstrated impressive results on natural language tasks, and security researchers are beginning to employ them in both offensive and defensive systems. In cyber-security, multiple research efforts have applied LLMs to the pre-breach stage of attacks, such as phishing and malware generation. However, so far there has been no comprehensive study of whether LLM-based systems can be leveraged to simulate the post-breach stage of attacks that are typically human-operated, or "hands-on-keyboard" attacks, under various attack techniques and environments. As LLMs inevitably advance, they may be able to automate both the pre- and post-breach attack stages. This shift may transform organizational attacks from rare, expert-led events to frequent, automated operations requiring no expertise and executed at automation speed and scale. This risks fundamentally changing global computer security and correspondingly causing substantial economic impacts. A goal of this work is to better understand these risks now so that we can better prepare for the inevitable, ever-more-capable LLMs on the horizon. On the immediate impact side, this research serves three purposes. First, an automated LLM-based, post-breach exploitation framework can help analysts quickly test and continually improve their organization's network security posture against previously unseen attacks. Second, an LLM-based penetration test system can extend the effectiveness of red teams with a limited number of human analysts. Finally, this research can help defensive systems and teams learn to detect novel attack behaviors preemptively before their use in the wild....

ScriptNet: Neural Static Analysis for Malicious JavaScript Detection

Apr 01, 2019

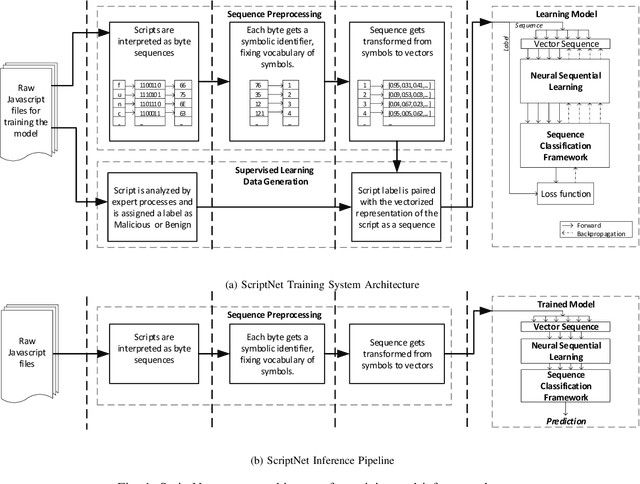

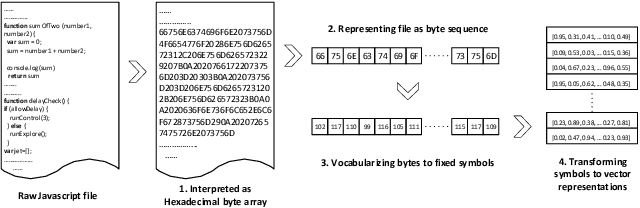

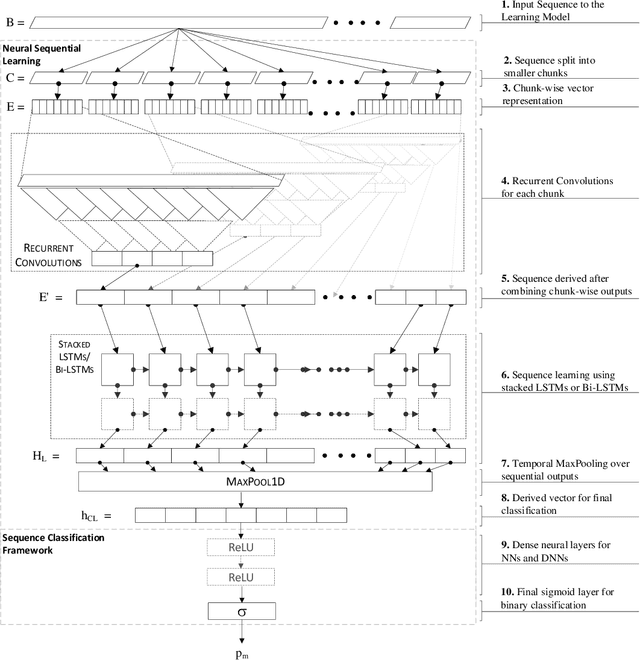

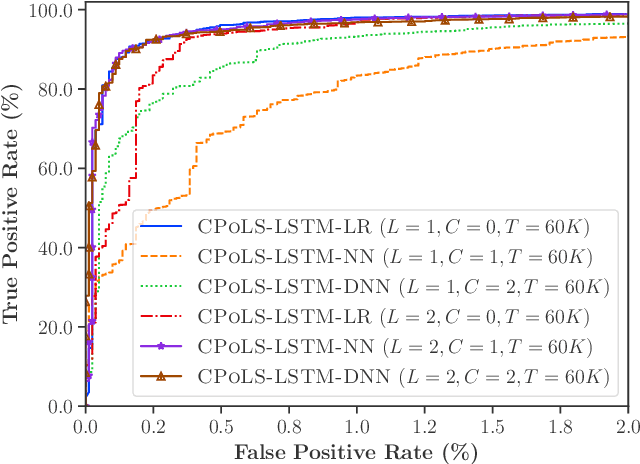

Abstract: Malicious scripts are an important computer infection threat vector in the wild. For web-scale processing, static analysis offers substantial computing efficiencies. We propose the ScriptNet system for neural malicious JavaScript detection which is based on static analysis. We use the Convoluted Partitioning of Long Sequences (CPoLS) model, which processes JavaScript files as byte sequences. Lower layers capture the sequential nature of these byte sequences while higher layers classify the resulting embedding as malicious or benign. Unlike previously proposed solutions, our model variants are trained in an end-to-end fashion allowing discriminative training even for the sequential processing layers. Evaluating this model on a large corpus of 212,408 JavaScript files indicates that the best performing CPoLS model offers a 97.20% true positive rate (TPR) for the first 60K byte subsequence at a false positive rate (FPR) of 0.50%. The best performing CPoLS model significantly outperforms several baseline models.
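The ScriptNet abstract reports a true positive rate at a fixed false positive rate. As a rough illustration of how such a figure can be read off a set of classifier scores, the sketch below picks the lowest score threshold whose false positive rate on benign samples stays within the target, then measures the detection rate on malicious samples. The function name, the toy scores, and the labels are hypothetical; this is not the authors' evaluation code.

```python
import math


def tpr_at_fpr(scores, labels, target_fpr):
    """Return (tpr, threshold) with the FPR on benign samples <= target_fpr.

    `labels` are truthy for malicious samples; a sample is flagged when its
    score is strictly greater than the threshold.
    """
    benign = sorted((s for s, y in zip(scores, labels) if not y), reverse=True)
    malicious = [s for s, y in zip(scores, labels) if y]
    if not benign or not malicious:
        raise ValueError("need at least one benign and one malicious sample")
    # Number of benign samples we may flag without exceeding the target FPR.
    k = math.floor(target_fpr * len(benign))
    # At most k benign scores lie strictly above the (k+1)-th highest one.
    threshold = benign[k] if k < len(benign) else float("-inf")
    detected = sum(s > threshold for s in malicious)
    return detected / len(malicious), threshold


if __name__ == "__main__":
    # Toy data: four benign scores followed by three malicious scores.
    scores = [0.1, 0.2, 0.3, 0.4, 0.35, 0.9, 0.5]
    labels = [0, 0, 0, 0, 1, 1, 1]
    print(tpr_at_fpr(scores, labels, target_fpr=0.25))
    print(tpr_at_fpr(scores, labels, target_fpr=0.0))
```

With a corpus on the scale described in the abstract, a 0.50% target would allow only a small fraction of the benign files to score above the threshold, so the choice of threshold dominates the reported TPR.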

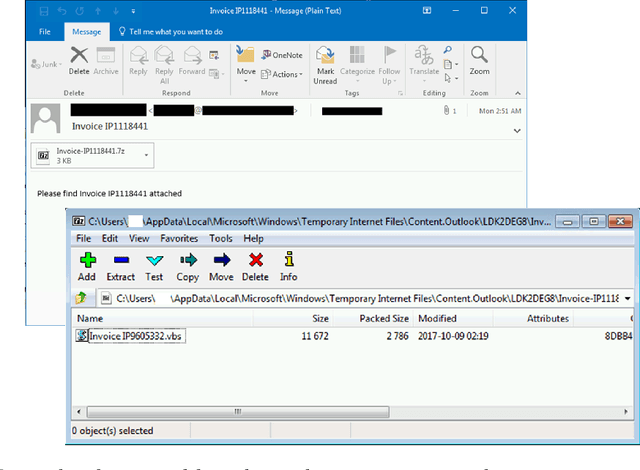

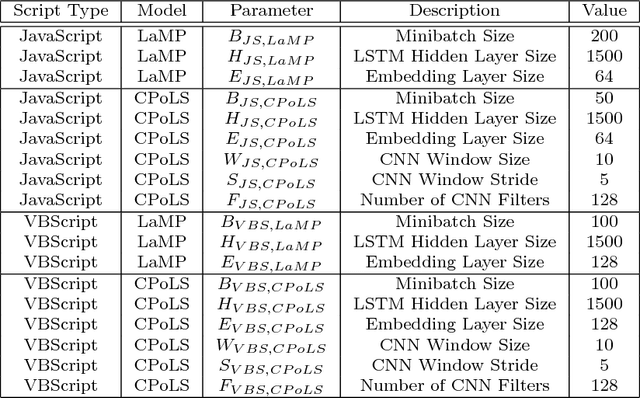

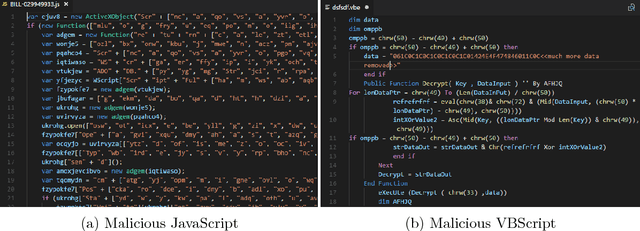

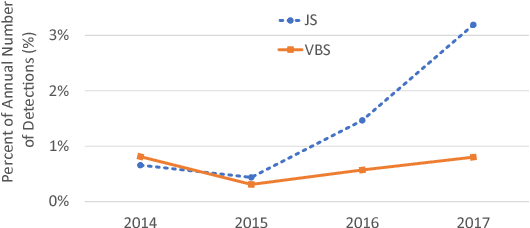

Neural Classification of Malicious Scripts: A study with JavaScript and VBScript

May 15, 2018

Abstract: Malicious scripts are an important computer infection threat vector. Our analysis reveals that the two most prevalent types of malicious scripts are JavaScript and VBScript. The percentage of detected JavaScript attacks is on the rise. To address these threats, we investigate two deep recurrent models, LaMP (LSTM and Max Pooling) and CPoLS (Convoluted Partitioning of Long Sequences), which process JavaScript and VBScript as byte sequences. Lower layers capture the sequential nature of these byte sequences while higher layers classify the resulting embedding as malicious or benign. Unlike previously proposed solutions, our models are trained in an end-to-end fashion allowing discriminative training even for the sequential processing layers. Evaluating these models on a large corpus of 296,274 JavaScript files indicates that the best performing LaMP model has a 65.9% true positive rate (TPR) at a false positive rate (FPR) of 1.0%. Similarly, the best CPoLS model has a TPR of 45.3% at an FPR of 1.0%. LaMP and CPoLS yield a TPR of 69.3% and 67.9%, respectively, at an FPR of 1.0% on a collection of 240,504 VBScript files.
