Gaeun Kim

StablePrompt: Automatic Prompt Tuning using Reinforcement Learning for Large Language Models

Oct 10, 2024

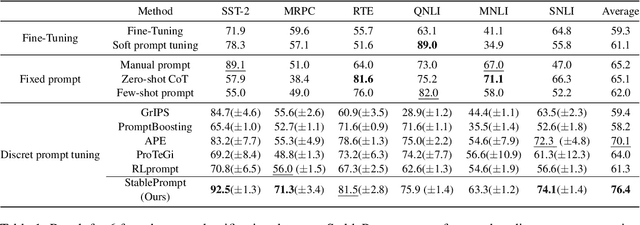

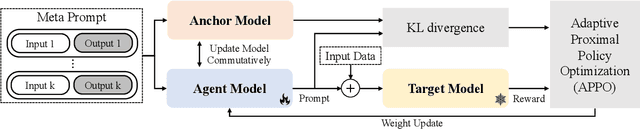

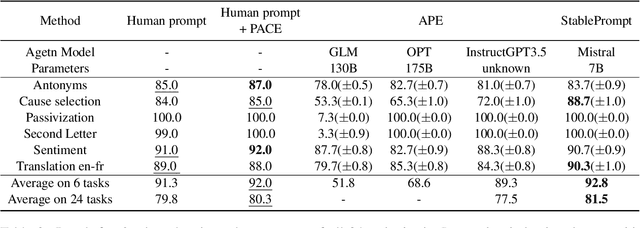

Abstract: Finding appropriate prompts for a specific task has become an important issue as the use of Large Language Models (LLMs) has expanded. Reinforcement Learning (RL) is widely used for prompt tuning, but its inherent instability and environmental dependency make it difficult to use in practice. In this paper, we propose StablePrompt, which strikes a balance between training stability and search space, mitigating the instability of RL and producing high-performance prompts. We formulate prompt tuning as an online RL problem between the agent and the target LLM and introduce Adaptive Proximal Policy Optimization (APPO). APPO introduces an LLM anchor model to adaptively adjust the rate of policy updates. This allows for flexible prompt search while preserving the linguistic ability of the pre-trained LLM. StablePrompt outperforms previous methods on various tasks, including text classification, question answering, and text generation. Our code is available on GitHub.

Team HYU ASML ROBOVOX SP Cup 2024 System Description

Jul 16, 2024

Abstract: This report describes the submission of the HYU ASML team to the IEEE Signal Processing Cup 2024 (SP Cup 2024). This challenge, titled "ROBOVOX: Far-Field Speaker Recognition by a Mobile Robot," focuses on speaker recognition using a mobile robot in noisy and reverberant conditions. Our solution combines the results of deep residual neural networks and time-delay neural network-based speaker embedding models. These models were trained on a diverse dataset that includes French speech. To account for the challenging evaluation environment characterized by high noise, reverberation, and short speech conditions, we focused on data augmentation and training speech duration for the speaker embedding model. Our submission achieved second place on the SP Cup 2024 public leaderboard, with a detection cost function of 0.5245 and an equal error rate of 6.46%.

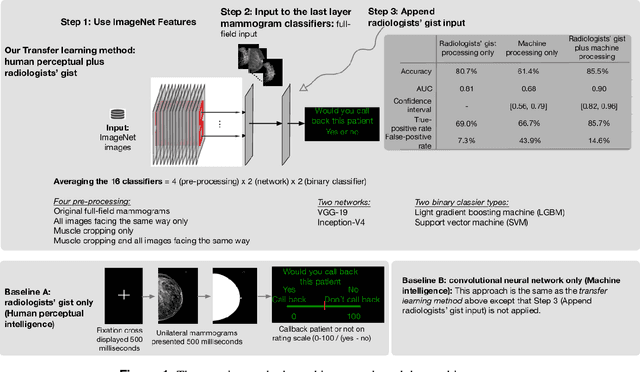

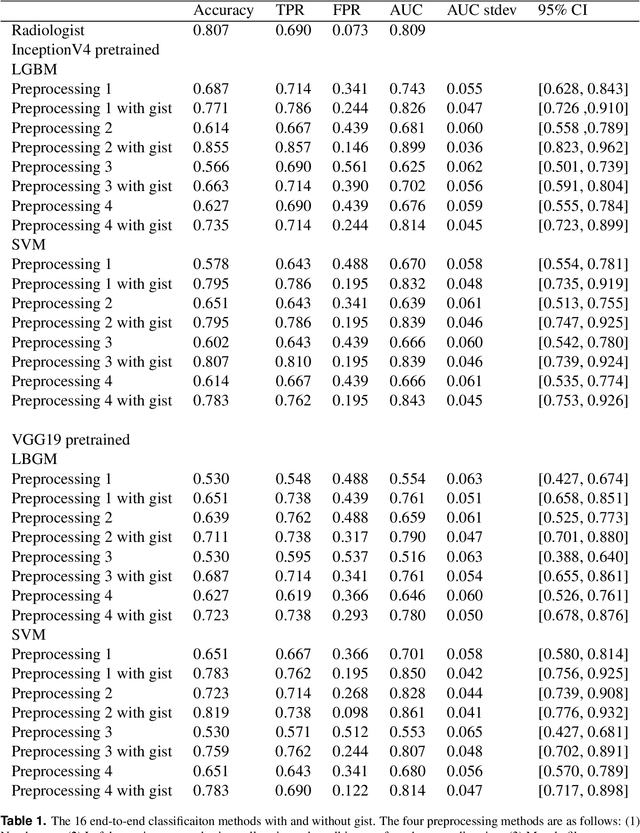

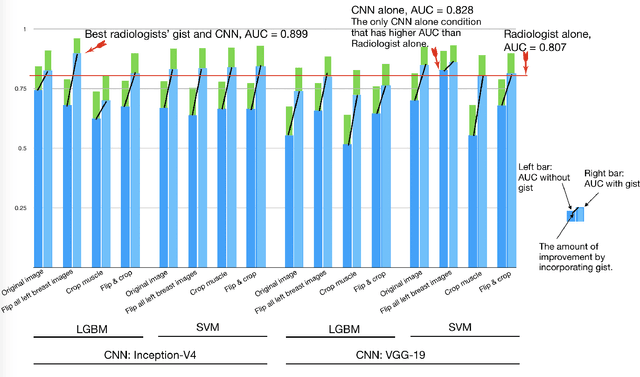

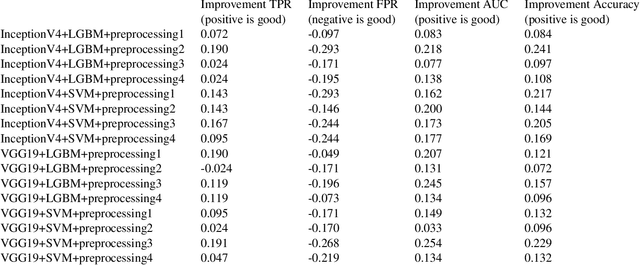

Human Gist Processing Augments Deep Learning Breast Cancer Risk Assessment

Nov 28, 2019

Abstract: Radiologists can classify a mammogram as normal or abnormal at better-than-chance levels after less than a second's exposure to the images. In this work, we integrate these radiologists' gist inputs into pre-trained machine learning models to validate that combining gist with a CNN model can achieve an AUC (area under the curve) statistically significantly higher than either the gist perception of radiologists or the model without gist input.
