Fumin Guo

Enhanced Self-supervised Learning for Multi-modality MRI Segmentation and Classification: A Novel Approach Avoiding Model Collapse

Jul 15, 2024

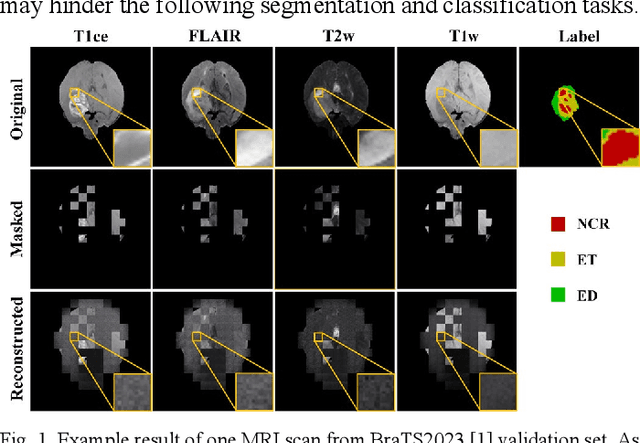

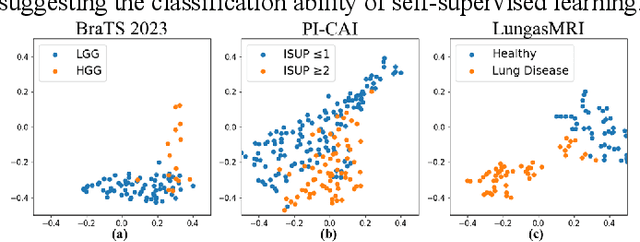

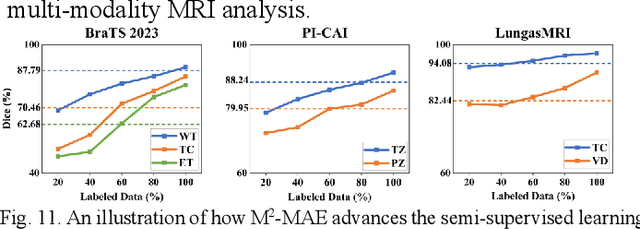

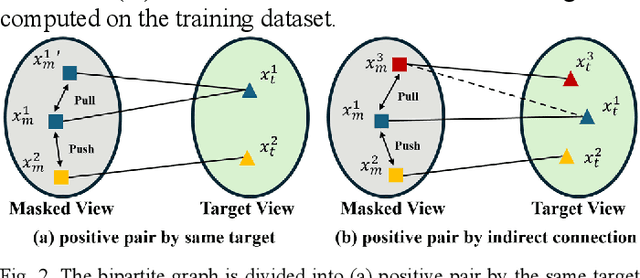

Abstract:Multi-modality magnetic resonance imaging (MRI) can provide complementary information for computer-aided diagnosis. Traditional deep learning algorithms are suitable for identifying specific anatomical structures segmenting lesions and classifying diseases with magnetic resonance images. However, manual labels are limited due to high expense, which hinders further improvement of model accuracy. Self-supervised learning (SSL) can effectively learn feature representations from unlabeled data by pre-training and is demonstrated to be effective in natural image analysis. Most SSL methods ignore the similarity of multi-modality MRI, leading to model collapse. This limits the efficiency of pre-training, causing low accuracy in downstream segmentation and classification tasks. To solve this challenge, we establish and validate a multi-modality MRI masked autoencoder consisting of hybrid mask pattern (HMP) and pyramid barlow twin (PBT) module for SSL on multi-modality MRI analysis. The HMP concatenates three masking steps forcing the SSL to learn the semantic connections of multi-modality images by reconstructing the masking patches. We have proved that the proposed HMP can avoid model collapse. The PBT module exploits the pyramidal hierarchy of the network to construct barlow twin loss between masked and original views, aligning the semantic representations of image patches at different vision scales in latent space. Experiments on BraTS2023, PI-CAI, and lung gas MRI datasets further demonstrate the superiority of our framework over the state-of-the-art. The performance of the segmentation and classification is substantially enhanced, supporting the accurate detection of small lesion areas. The code is available at https://github.com/LinxuanHan/M2-MAE.

Encoding Enhanced Complex CNN for Accurate and Highly Accelerated MRI

Jun 21, 2023

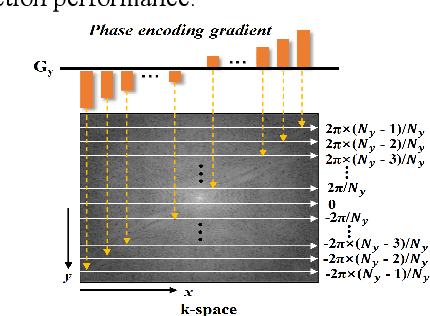

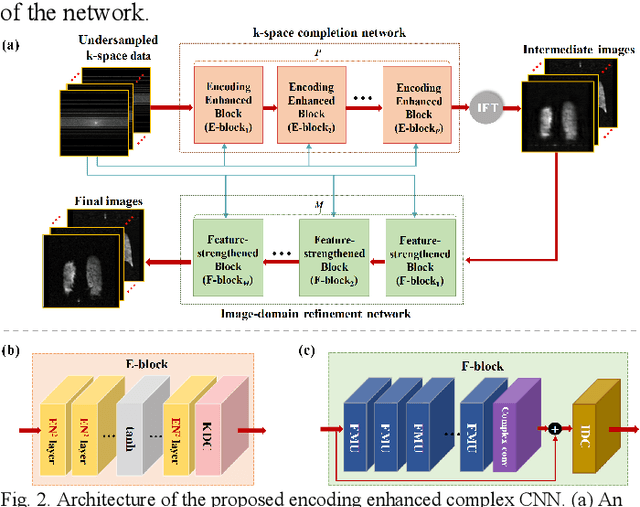

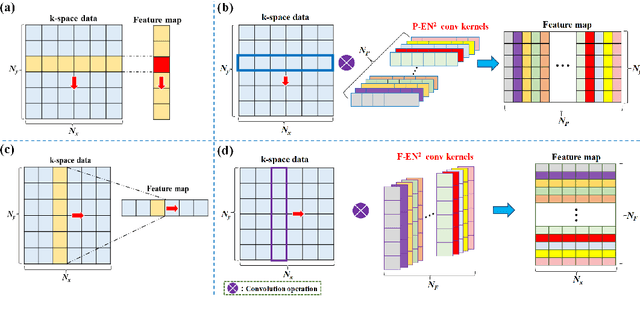

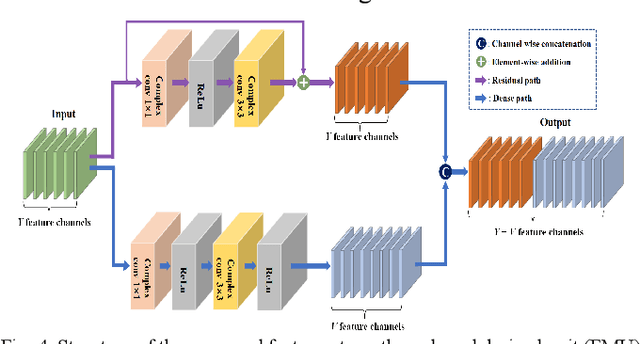

Abstract:Magnetic resonance imaging (MRI) using hyperpolarized noble gases provides a way to visualize the structure and function of human lung, but the long imaging time limits its broad research and clinical applications. Deep learning has demonstrated great potential for accelerating MRI by reconstructing images from undersampled data. However, most existing deep conventional neural networks (CNN) directly apply square convolution to k-space data without considering the inherent properties of k-space sampling, limiting k-space learning efficiency and image reconstruction quality. In this work, we propose an encoding enhanced (EN2) complex CNN for highly undersampled pulmonary MRI reconstruction. EN2 employs convolution along either the frequency or phase-encoding direction, resembling the mechanisms of k-space sampling, to maximize the utilization of the encoding correlation and integrity within a row or column of k-space. We also employ complex convolution to learn rich representations from the complex k-space data. In addition, we develop a feature-strengthened modularized unit to further boost the reconstruction performance. Experiments demonstrate that our approach can accurately reconstruct hyperpolarized 129Xe and 1H lung MRI from 6-fold undersampled k-space data and provide lung function measurements with minimal biases compared with fully-sampled image. These results demonstrate the effectiveness of the proposed algorithmic components and indicate that the proposed approach could be used for accelerated pulmonary MRI in research and clinical lung disease patient care.

Estimating Uncertainty in Neural Networks for Cardiac MRI Segmentation: A Benchmark Study

Dec 31, 2020

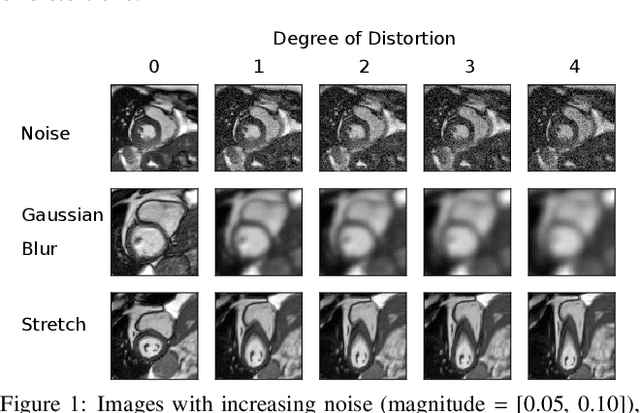

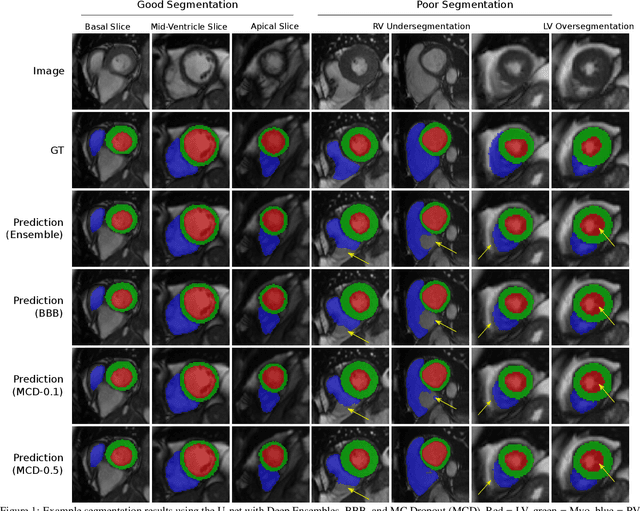

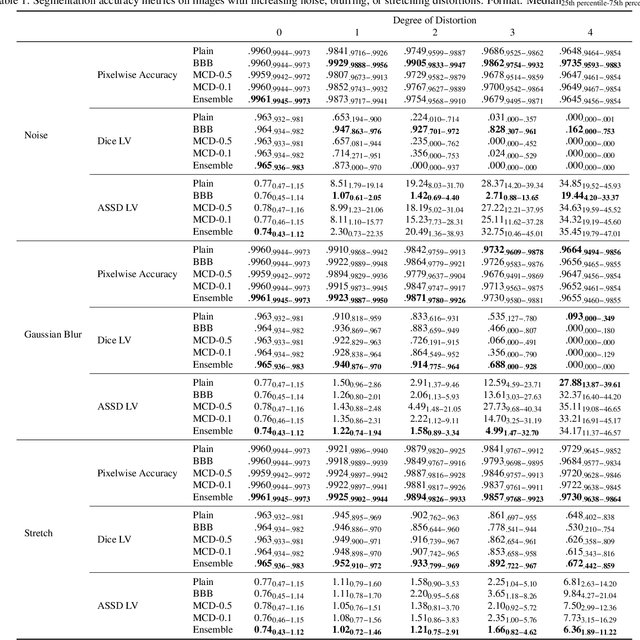

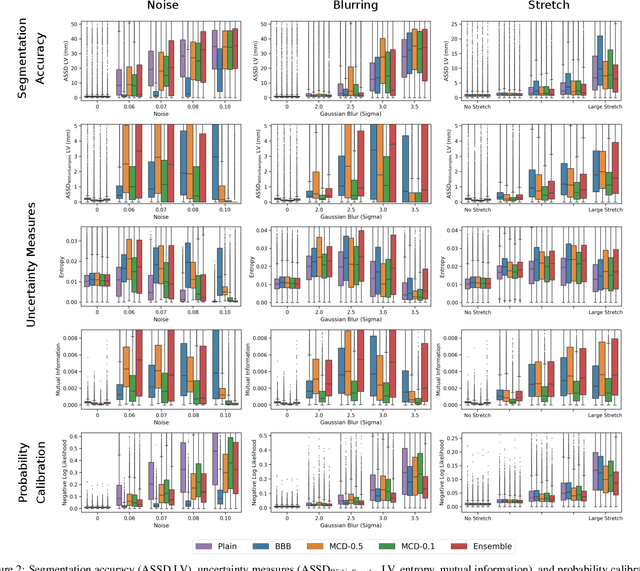

Abstract:Convolutional neural networks (CNNs) have demonstrated promise in automated cardiac magnetic resonance imaging segmentation. However, when using CNNs in a large real world dataset, it is important to quantify segmentation uncertainty in order to know which segmentations could be problematic. In this work, we performed a systematic study of Bayesian and non-Bayesian methods for estimating uncertainty in segmentation neural networks. We evaluated Bayes by Backprop (BBB), Monte Carlo (MC) Dropout, and Deep Ensembles in terms of segmentation accuracy, probability calibration, uncertainty on out-of-distribution images, and segmentation quality control. We tested these algorithms on datasets with various distortions and observed that Deep Ensembles outperformed the other methods except for images with heavy noise distortions. For segmentation quality control, we showed that segmentation uncertainty is correlated with segmentation accuracy. With the incorporation of uncertainty estimates, we were able to reduce the percentage of poor segmentation to 5% by flagging 31% to 48% of the most uncertain images for manual review, substantially lower than random review of the results without using neural network uncertainty.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge