Frank Kramer

Classifying Mitotic Figures in the MIDOG25 Challenge with Deep Ensemble Learning and Rule Based Refinement

Aug 28, 2025

Abstract: Mitotic figures (MFs) are relevant biomarkers in tumor grading. Differentiating atypical MFs (AMFs) from normal MFs (NMFs) remains difficult, as manual annotation is time-consuming and subjective. In this work, an ensemble of ConvNeXtBase models was trained with AUCMEDI and extended with a rule-based refinement (RBR) module. On the MIDOG25 preliminary test set, the ensemble achieved a balanced accuracy of 84.02%. While the RBR increased specificity, it reduced sensitivity and overall performance. The results show that deep ensembles perform well for AMF classification. RBR can improve individual metrics but requires further research.
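The trade-off described in this abstract can be illustrated with a minimal sketch of balanced accuracy, which is the mean of sensitivity and specificity. The function name and all counts below are hypothetical and are not taken from the paper; they only show how a gain in specificity can be outweighed by a loss in sensitivity.

```python
def balanced_accuracy(tp: int, fn: int, tn: int, fp: int) -> float:
    """Mean of sensitivity (recall on positives) and specificity (recall on negatives)."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return (sensitivity + specificity) / 2


# Hypothetical confusion counts, chosen only for illustration.
# "baseline" stands for an ensemble alone, "refined" for the same
# ensemble after a refinement step that trades sensitivity for specificity.
baseline = balanced_accuracy(tp=80, fn=20, tn=90, fp=10)  # sens 0.80, spec 0.90
refined = balanced_accuracy(tp=70, fn=30, tn=95, fp=5)    # sens 0.70, spec 0.95

print(f"baseline: {baseline:.3f}, refined: {refined:.3f}")
```

In this made-up case specificity rises by 5 points while sensitivity falls by 10, so balanced accuracy drops.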

Infherno: End-to-end Agent-based FHIR Resource Synthesis from Free-form Clinical Notes

Jul 16, 2025

Abstract: For clinical data integration and healthcare services, the HL7 FHIR standard has established itself as a desirable format for interoperability between complex health data. Previous attempts at automating the translation from free-form clinical notes into structured FHIR resources rely on modular, rule-based systems or LLMs with instruction tuning and constrained decoding. Since they frequently suffer from limited generalizability and structural inconformity, we propose an end-to-end framework powered by LLM agents, code execution, and healthcare terminology database tools to address these issues. Our solution, called Infherno, is designed to adhere to the FHIR document schema and competes well with a human baseline in predicting FHIR resources from unstructured text. The implementation features a front end for custom and synthetic data and both local and proprietary models, supporting clinical data integration processes and interoperability across institutions.

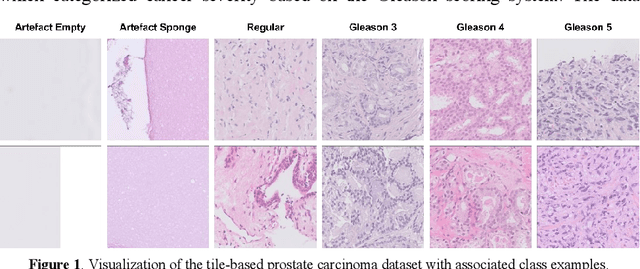

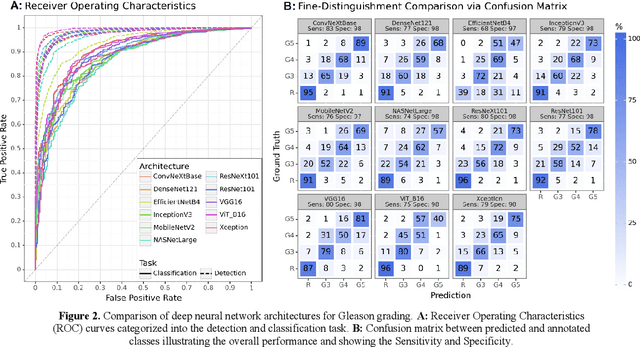

Assessing the Performance of Deep Learning for Automated Gleason Grading in Prostate Cancer

Mar 25, 2024

Abstract: Prostate cancer is a dominant health concern that calls for advanced diagnostic tools. Using digital pathology and artificial intelligence, this study explores the potential of 11 deep neural network architectures for automated Gleason grading in prostate carcinoma, with a focus on comparing traditional and recent architectures. A standardized image classification pipeline, based on the AUCMEDI framework, facilitated robust evaluation using an in-house dataset consisting of 34,264 annotated tissue tiles. The results indicated varying sensitivity across architectures, with ConvNeXt demonstrating the strongest performance. Notably, newer architectures achieved superior performance, albeit with difficulties in differentiating closely related Gleason grades. The ConvNeXt model was capable of learning a balance between complexity and generalizability. Overall, this study lays the groundwork for enhanced Gleason grading systems, potentially improving diagnostic efficiency for prostate cancer.

Towards Automated COVID-19 Presence and Severity Classification

May 15, 2023

Abstract: COVID-19 presence classification and severity prediction via (3D) thorax computed tomography scans have become important tasks in recent times. Especially for capacity planning of intensive care units, predicting the future severity of a COVID-19 patient is crucial. The presented approach follows state-of-the-art techniques to aid medical professionals in these situations. It comprises an ensemble learning strategy via 5-fold cross-validation that includes transfer learning and combines pre-trained 3D versions of ResNet34 and DenseNet121 for COVID-19 classification and severity prediction, respectively. Further, domain-specific preprocessing was applied to optimize model performance. In addition, medical information such as the infection-lung ratio, patient age, and sex was included. The presented model achieves an AUC of 79.0% for predicting COVID-19 severity and an AUC of 83.7% for classifying the presence of an infection, which is comparable with other currently popular methods. This approach is implemented using the AUCMEDI framework and relies on well-known network architectures to ensure robustness and reproducibility.

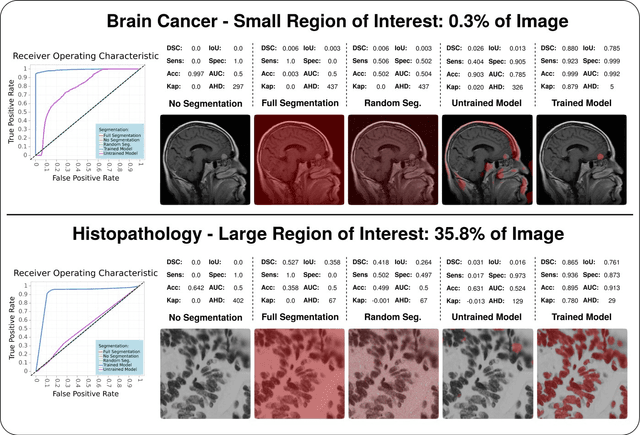

MISm: A Medical Image Segmentation Metric for Evaluation of weak labeled Data

Oct 24, 2022

Abstract: Performance measures are an important tool for assessing and comparing different medical image segmentation algorithms. Unfortunately, the current measures have weaknesses when it comes to assessing certain edge cases. These limitations arise when images with a very small region of interest or without a region of interest at all are assessed. As a solution to these limitations, we propose a new medical image segmentation metric: MISm. To evaluate MISm, the popular metrics in medical image segmentation and MISm were compared using magnetic resonance tomography images from several scenarios. To allow application in the community and reproducibility of experimental results, we included MISm in the publicly available evaluation framework MISeval: https://github.com/frankkramer-lab/miseval/tree/master/miseval
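The edge case named in this abstract can be made concrete with a small sketch. It uses the standard Dice similarity coefficient on flattened binary masks, not MISm itself, whose definition is not given here. The helper name `dice` and the toy masks are assumptions for illustration.

```python
import math


def dice(truth, pred):
    """Dice similarity coefficient for two flat binary masks of equal length."""
    tp = sum(1 for t, p in zip(truth, pred) if t and p)
    fp = sum(1 for t, p in zip(truth, pred) if not t and p)
    fn = sum(1 for t, p in zip(truth, pred) if t and not p)
    denom = 2 * tp + fp + fn
    # With no foreground in either mask the ratio is 0/0, i.e. undefined.
    return math.nan if denom == 0 else 2 * tp / denom


n_pixels = 64 * 64
empty_truth = [0] * n_pixels                    # image without a region of interest
empty_pred = [0] * n_pixels                     # perfect prediction: nothing segmented
one_false_pixel = [1] + [0] * (n_pixels - 1)    # a single false-positive pixel
all_false = [1] * n_pixels                      # every pixel wrongly segmented

print(dice(empty_truth, empty_pred))       # undefined (nan)
print(dice(empty_truth, one_false_pixel))  # 0.0
print(dice(empty_truth, all_false))        # 0.0
```

When the ground truth has no region of interest, Dice is undefined for the perfect prediction and gives the same score to one stray pixel as to a completely wrong mask.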

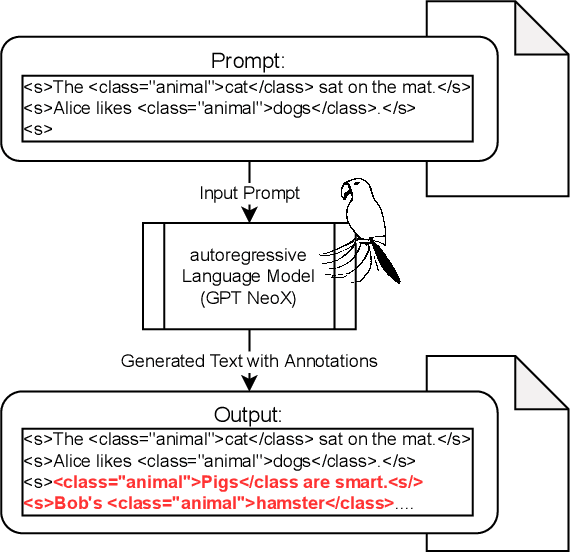

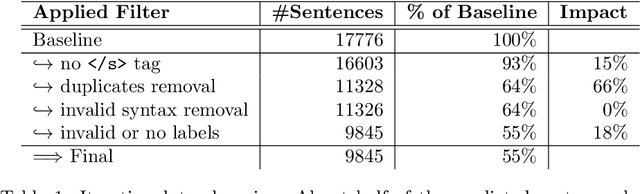

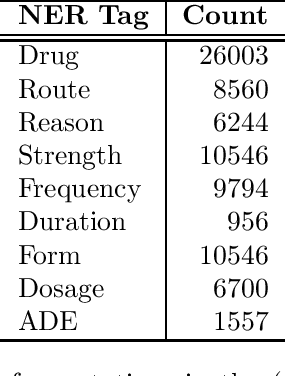

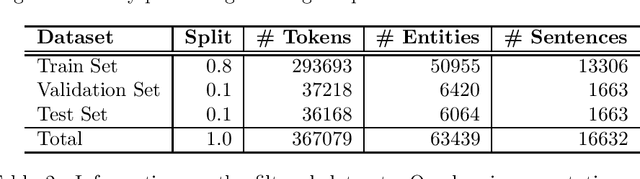

Annotated Dataset Creation through General Purpose Language Models for non-English Medical NLP

Aug 30, 2022

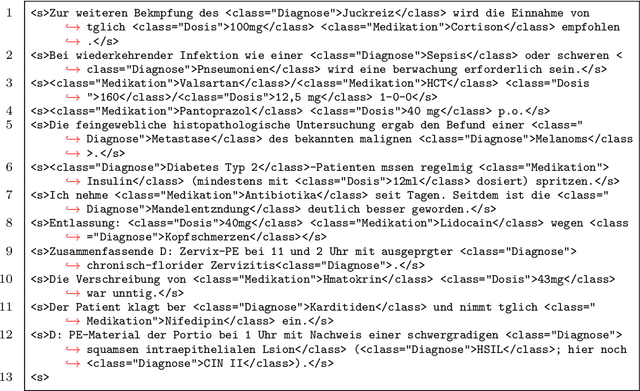

Abstract: Obtaining text datasets with semantic annotations is an effortful process, yet crucial for supervised training in natural language processing (NLP). In general, developing and applying new NLP pipelines in domain-specific contexts often requires custom-designed datasets to address NLP tasks in a supervised machine learning fashion. When operating in non-English languages for medical data processing, this exposes several minor and major interconnected problems, such as a lack of task-matching datasets and of task-specific pre-trained models. In our work, we suggest leveraging pre-trained language models for training data acquisition in order to retrieve sufficiently large datasets for training smaller and more efficient models for use-case-specific tasks. To demonstrate the effectiveness of our approach, we create a custom dataset which we use to train a medical NER model for German texts, GPTNERMED, yet our method remains language-independent in principle. Our obtained dataset as well as our pre-trained models are publicly available at: https://github.com/frankkramer-lab/GPTNERMED

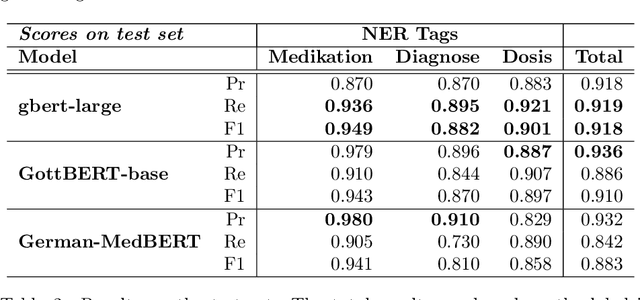

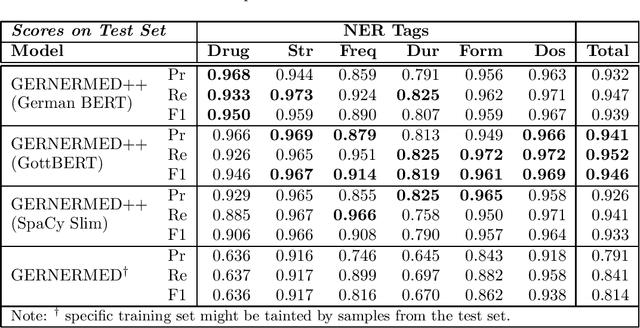

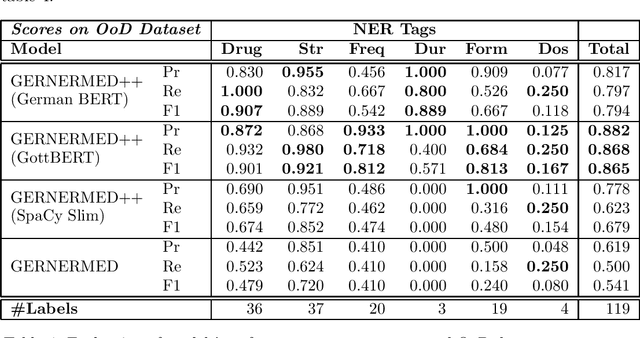

GERNERMED++: Transfer Learning in German Medical NLP

Jun 29, 2022

Abstract: We present a statistical model for German medical natural language processing trained for named entity recognition (NER) as an open, publicly available model. The work serves as a refined successor to our first GERNERMED model, which is substantially outperformed by our work. We demonstrate the effectiveness of combining multiple techniques to achieve strong entity recognition performance by means of transfer learning on pre-trained deep language models (LM), word alignment, and neural machine translation. Because open, public medical entity recognition models for German texts are scarce, this work offers benefits to the German research community on medical NLP as a baseline model. Since our model is based on public English data, its weights are provided without legal restrictions on usage and distribution. The sample code and the statistical model are available at: https://github.com/frankkramer-lab/GERNERMED-pp

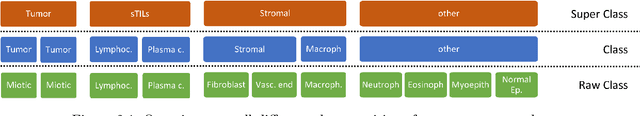

Nucleus Segmentation and Analysis in Breast Cancer with the MIScnn Framework

Jun 16, 2022

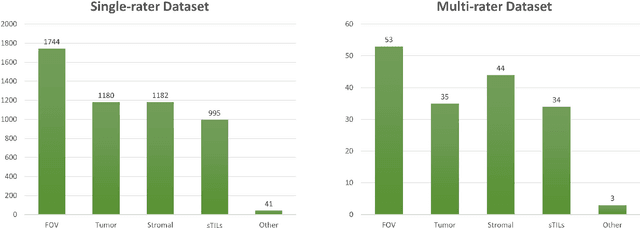

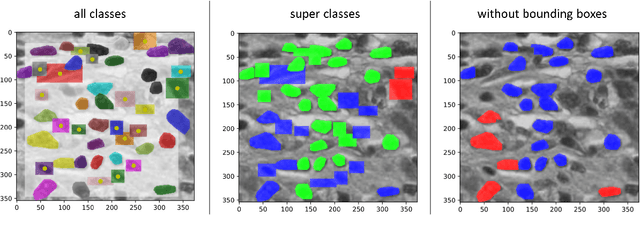

Abstract: The NuCLS dataset contains over 220,000 annotations of cell nuclei in breast cancers. We show how to use these data to create a multi-rater model with the MIScnn framework to automate the analysis of cell nuclei. For the model creation, we use the widespread U-Net approach embedded in a pipeline. Besides the high-performance convolutional neural network, this pipeline provides several preprocessing techniques and an extended data exploration. The final model is tested in the evaluation phase using a wide variety of metrics with a subsequent visualization. Finally, the results are compared with those of the NuCLS study and interpreted. As an outlook, we give pointers that are important for the future development of models in the context of cell nuclei.

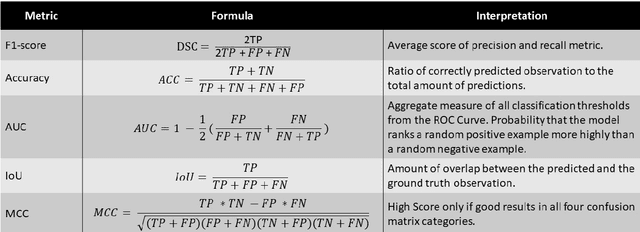

Towards a Guideline for Evaluation Metrics in Medical Image Segmentation

Feb 10, 2022

Abstract: In the last decade, research on artificial intelligence has seen rapid growth with deep learning models, especially in the field of medical image segmentation. Various studies demonstrated that these models have powerful prediction capabilities and achieve results similar to those of clinicians. However, recent studies revealed that the evaluation in image segmentation studies lacks reliable model performance assessment and shows statistical bias due to incorrect metric implementation or usage. Thus, this work provides an overview and interpretation guide on the following metrics for medical image segmentation evaluation in binary as well as multi-class problems: Dice similarity coefficient, Jaccard, Sensitivity, Specificity, Rand index, ROC curves, Cohen's Kappa, and Hausdorff distance. As a summary, we propose a guideline for standardized medical image segmentation evaluation to improve evaluation quality, reproducibility, and comparability in the research field.
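Several of the metrics listed in this abstract follow directly from the four confusion counts of a binary segmentation. The sketch below uses the textbook formulas; the function name and the example counts are hypothetical and do not come from the paper or from any particular library.

```python
def segmentation_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Overlap and agreement metrics from binary confusion counts."""
    n = tp + fp + fn + tn
    observed = (tp + tn) / n  # observed agreement (pixel accuracy)
    # Agreement expected by chance, from the marginal foreground/background rates.
    expected = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / n**2
    return {
        "dice": 2 * tp / (2 * tp + fp + fn),
        "jaccard": tp / (tp + fp + fn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "kappa": (observed - expected) / (1 - expected),
    }


# Hypothetical 100x100 image with a small region of interest (100 pixels).
m = segmentation_metrics(tp=80, fp=20, fn=20, tn=9880)
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```

With these made-up counts, specificity stays close to 1 because background pixels dominate, while Dice and Jaccard reflect the overlap on the small foreground region. Dice and Jaccard are tied by J = D / (2 - D).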

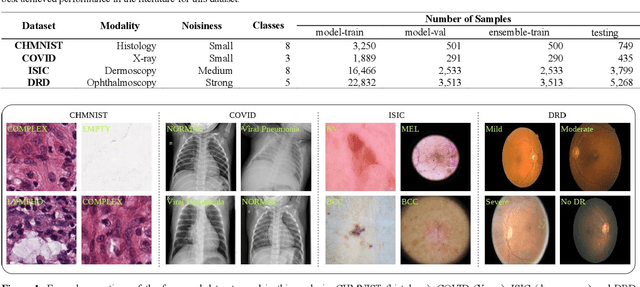

An Analysis on Ensemble Learning optimized Medical Image Classification with Deep Convolutional Neural Networks

Jan 27, 2022

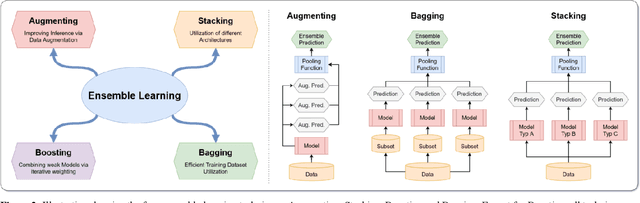

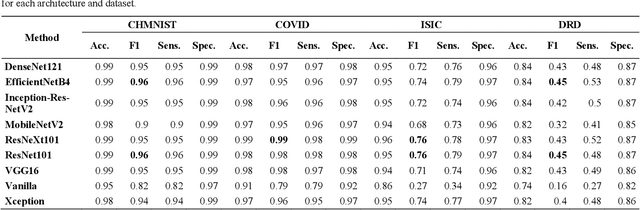

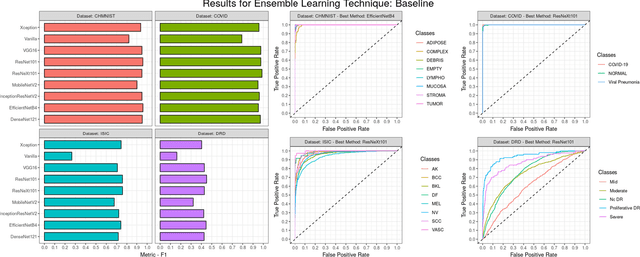

Abstract: Novel and high-performance medical image classification pipelines rely heavily on ensemble learning strategies. The idea of ensemble learning is to assemble diverse models or multiple predictions and, thus, boost prediction performance. However, it is still an open question to what extent and which ensemble learning strategies are beneficial in deep learning based medical image classification pipelines. In this work, we proposed a reproducible medical image classification pipeline for analyzing the performance impact of the following ensemble learning techniques: Augmenting, Stacking, and Bagging. The pipeline consists of state-of-the-art preprocessing and image augmentation methods as well as 9 deep convolutional neural network architectures. It was applied to four popular medical imaging datasets of varying complexity. Furthermore, 12 pooling functions for combining multiple predictions were analyzed, ranging from simple statistical functions like unweighted averaging up to more complex learning-based functions like support vector machines. Our results revealed that Stacking achieved the largest performance gain, with an F1-score increase of up to 13%. Augmenting showed consistent improvement capabilities of up to 4% and is also applicable to single-model pipelines. Cross-validation based Bagging proved to be the most complex ensemble learning method and resulted in an F1-score decrease in all analyzed datasets (up to -10%). Furthermore, we demonstrated that simple statistical pooling functions are equal to or often even better than more complex pooling functions. We concluded that the integration of Stacking and Augmenting ensemble learning techniques is a powerful method for any medical image classification pipeline to improve robustness and boost performance.
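A pooling function in this sense takes the class probabilities predicted by several models and merges them into one prediction. The sketch below shows unweighted averaging, which the abstract names, next to majority voting, which is added here only for contrast and is an assumption about what a second simple pooling function might look like. All names and probabilities are hypothetical.

```python
def mean_pooling(predictions):
    """Unweighted average of class probabilities over all models."""
    n_models = len(predictions)
    n_classes = len(predictions[0])
    return [sum(model[c] for model in predictions) / n_models
            for c in range(n_classes)]


def majority_vote(predictions):
    """Each model votes for its most probable class; the most frequent vote wins."""
    votes = [max(range(len(model)), key=model.__getitem__) for model in predictions]
    return max(set(votes), key=votes.count)


# Hypothetical softmax outputs of three models for one image (two classes).
preds = [
    [0.60, 0.40],
    [0.55, 0.45],
    [0.10, 0.90],
]

pooled = mean_pooling(preds)
mean_class = max(range(len(pooled)), key=pooled.__getitem__)
vote_class = majority_vote(preds)

print(pooled, mean_class, vote_class)
```

In this made-up example the two functions disagree: two weakly confident models outvote the third under majority voting, while averaging lets the third model's high confidence decide.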
