Francisco Valentini

CLIRudit: Cross-Lingual Information Retrieval of Scientific Documents

Apr 22, 2025

Abstract:Cross-lingual information retrieval (CLIR) consists in finding relevant documents in a language that differs from the language of the queries. This paper presents CLIRudit, a new dataset created to evaluate cross-lingual academic search, focusing on English queries and French documents. The dataset is built using bilingual article metadata from Érudit, a Canadian publishing platform, and is designed to represent scenarios in which researchers search for scholarly content in languages other than English. We perform a comprehensive benchmarking of different zero-shot first-stage retrieval methods on the dataset, including dense and sparse retrievers, query and document machine translation, and state-of-the-art multilingual retrievers. Our results show that large dense retrievers, not necessarily trained for the cross-lingual retrieval task, can achieve zero-shot performance comparable to using ground truth human translations, without the need for machine translation. Sparse retrievers, such as BM25 or SPLADE, combined with document translation, show competitive results, providing an efficient alternative to large dense models. This research advances the understanding of cross-lingual academic information retrieval and provides a framework that others can use to build comparable datasets across different languages and disciplines. By making the dataset and code publicly available, we aim to facilitate further research that will help make scientific knowledge more accessible across language barriers.

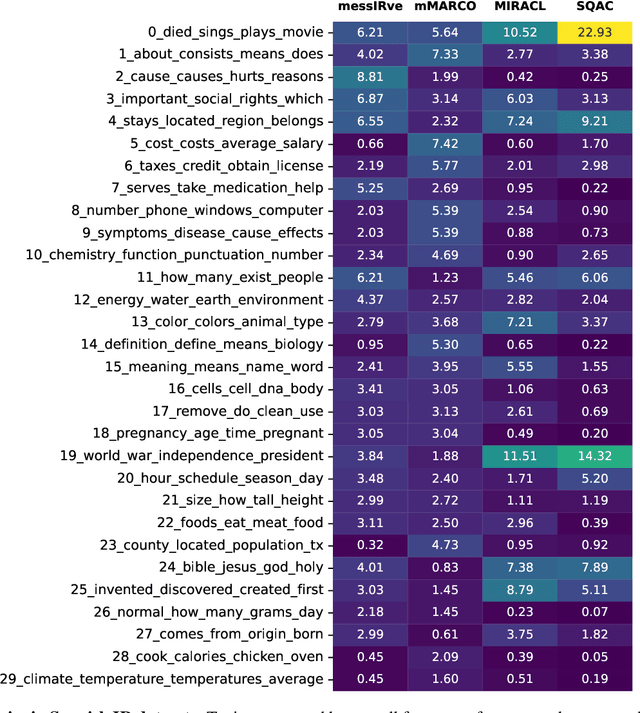

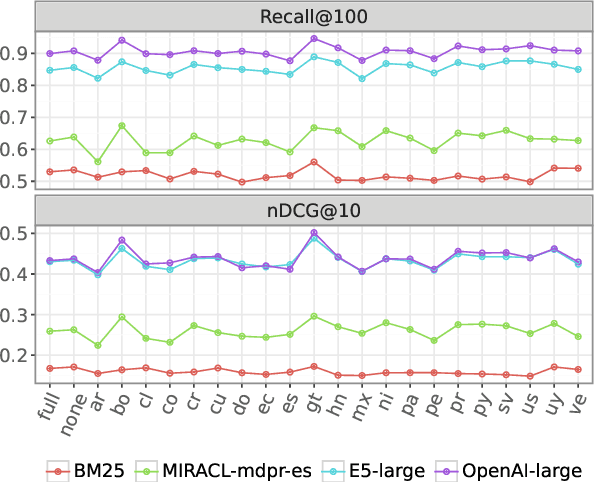

MessIRve: A Large-Scale Spanish Information Retrieval Dataset

Sep 09, 2024

Abstract:Information retrieval (IR) is the task of finding relevant documents in response to a user query. Although Spanish is the second most spoken native language, current IR benchmarks lack Spanish data, hindering the development of information access tools for Spanish speakers. We introduce MessIRve, a large-scale Spanish IR dataset with around 730 thousand queries from Google's autocomplete API and relevant documents sourced from Wikipedia. MessIRve's queries reflect diverse Spanish-speaking regions, unlike other datasets that are translated from English or do not consider dialectal variations. The large size of the dataset allows it to cover a wide variety of topics, unlike smaller datasets. We provide a comprehensive description of the dataset, comparisons with existing datasets, and baseline evaluations of prominent IR models. Our contributions aim to advance Spanish IR research and improve information access for Spanish speakers.

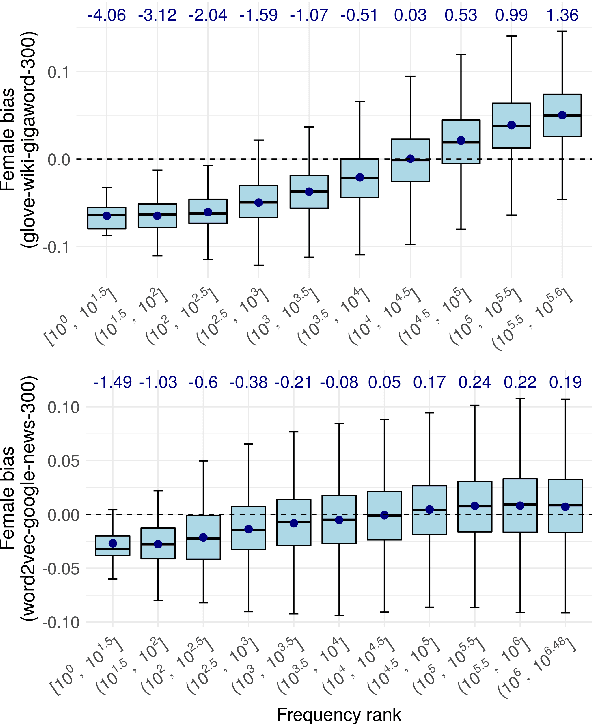

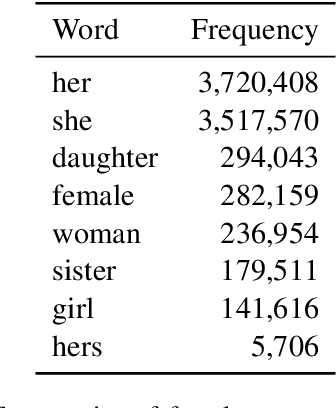

The Undesirable Dependence on Frequency of Gender Bias Metrics Based on Word Embeddings

Jan 02, 2023

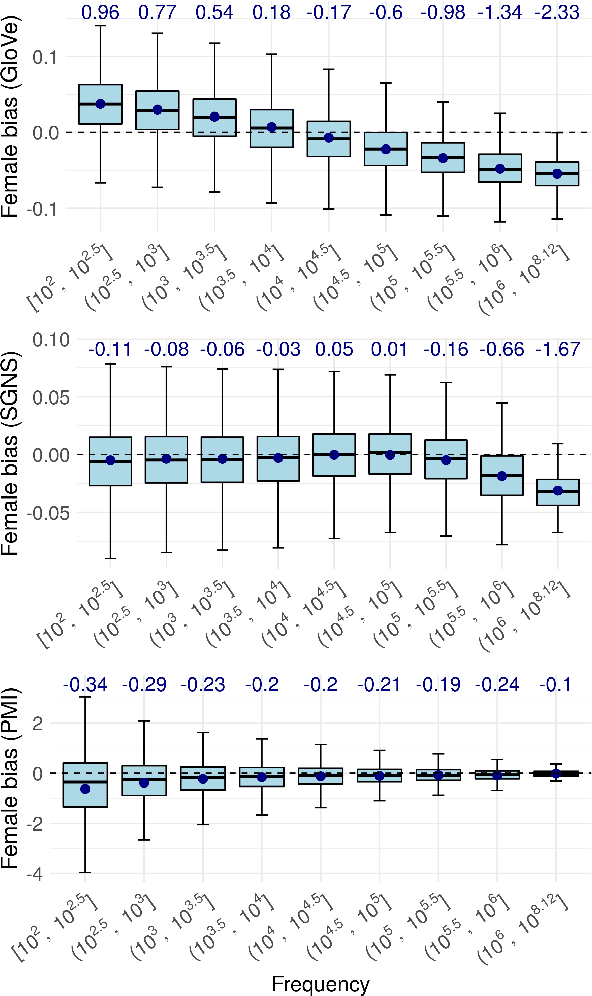

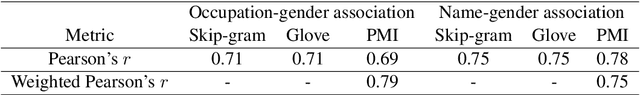

Abstract:Numerous works use word embedding-based metrics to quantify societal biases and stereotypes in texts. Recent studies have found that word embeddings can capture semantic similarity but may be affected by word frequency. In this work we study the effect of frequency when measuring female vs. male gender bias with word embedding-based bias quantification methods. We find that Skip-gram with negative sampling and GloVe tend to detect male bias in high frequency words, while GloVe tends to return female bias in low frequency words. We show these behaviors still exist when words are randomly shuffled. This proves that the frequency-based effect observed in unshuffled corpora stems from properties of the metric rather than from word associations. The effect is spurious and problematic since bias metrics should depend exclusively on word co-occurrences and not individual word frequencies. Finally, we compare these results with the ones obtained with an alternative metric based on Pointwise Mutual Information. We find that this metric does not show a clear dependence on frequency, even though it is slightly skewed towards male bias across all frequencies.

The Dependence on Frequency of Word Embedding Similarity Measures

Nov 15, 2022

Abstract:Recent research has shown that static word embeddings can encode word frequency information. However, little has been studied about this phenomenon and its effects on downstream tasks. In the present work, we systematically study the association between frequency and semantic similarity in several static word embeddings. We find that Skip-gram, GloVe and FastText embeddings tend to produce higher semantic similarity between high-frequency words than between other frequency combinations. We show that the association between frequency and similarity also appears when words are randomly shuffled. This proves that the patterns found are not due to real semantic associations present in the texts, but are an artifact produced by the word embeddings. Finally, we provide an example of how word frequency can strongly impact the measurement of gender bias with embedding-based metrics. In particular, we carry out a controlled experiment that shows that biases can even change sign or reverse their order by manipulating word frequencies.
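The shuffling control mentioned in the abstract can be illustrated with a minimal sketch. It assumes the corpus is a list of tokenized sentences and that tokens are shuffled across the whole corpus while sentence lengths are kept; the paper's exact procedure may differ, and the function name `shuffle_corpus` is hypothetical. The point is that shuffling destroys real co-occurrence structure but leaves every word's frequency unchanged, so any frequency-related pattern that survives cannot come from genuine semantic associations.

```python
import random
from collections import Counter


def shuffle_corpus(sentences, seed=0):
    """Shuffle tokens across the whole corpus, keeping sentence lengths.

    Assumption: `sentences` is a list of token lists. Word frequencies are
    preserved exactly; co-occurrence patterns are randomized.
    """
    rng = random.Random(seed)
    tokens = [tok for sent in sentences for tok in sent]
    rng.shuffle(tokens)

    shuffled, start = [], 0
    for sent in sentences:
        shuffled.append(tokens[start:start + len(sent)])
        start += len(sent)
    return shuffled


corpus = [
    ["she", "is", "a", "nurse"],
    ["he", "is", "an", "engineer"],
    ["the", "nurse", "is", "here"],
]
shuffled = shuffle_corpus(corpus, seed=42)

# Unigram frequencies are identical before and after shuffling.
original_counts = Counter(tok for sent in corpus for tok in sent)
shuffled_counts = Counter(tok for sent in shuffled for tok in sent)
```

Embeddings trained on the shuffled corpus would then serve as the baseline against which frequency effects in the unshuffled corpus are compared.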

On the interpretation and significance of bias metrics in texts: a PMI-based approach

Apr 13, 2021

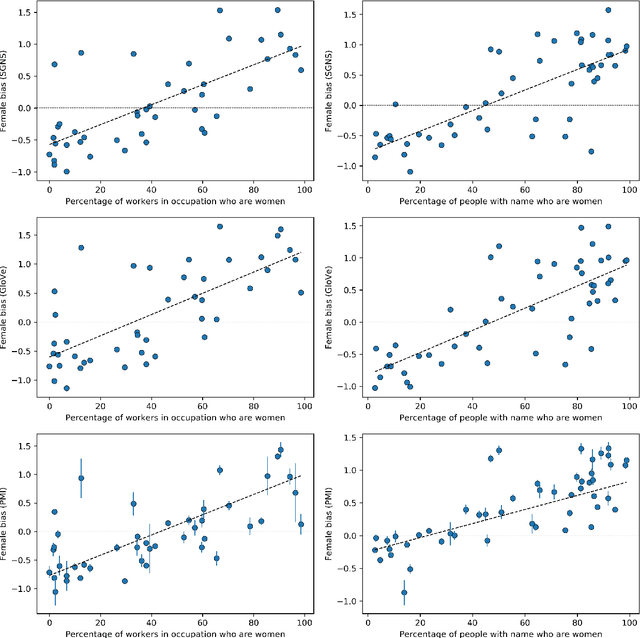

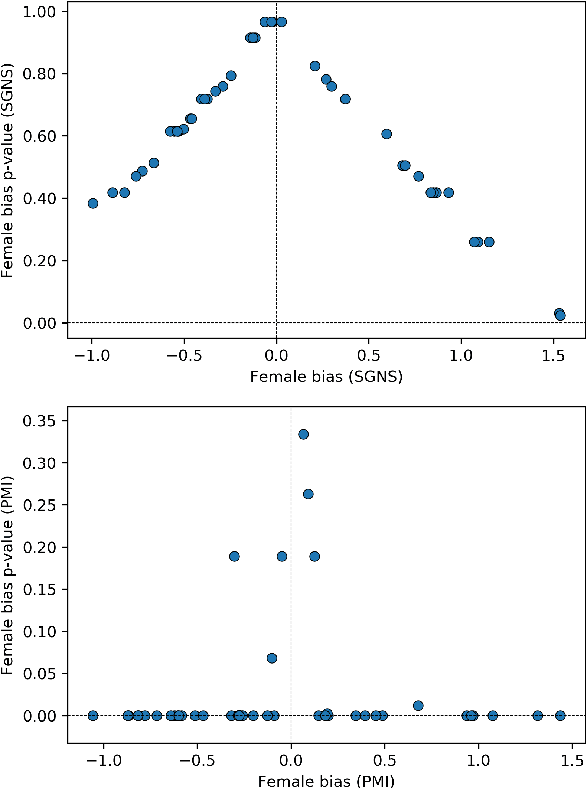

Abstract:In recent years, the use of word embeddings has become popular to measure the presence of biases in texts. Despite the fact that these measures have been shown to be effective in detecting a wide variety of biases, metrics based on word embeddings lack transparency, explainability and interpretability. In this study, we propose a PMI-based metric to quantify biases in texts. We show that this metric can be approximated by an odds ratio, which allows estimating the confidence interval and statistical significance of textual bias. We also show that this PMI-based measure can be expressed as a function of conditional probabilities, providing a simple interpretation in terms of word co-occurrences. Our approach produces a performance comparable to GloVe-based and Skip-gram-based metrics in experiments of gender-occupation and gender-name associations. We discuss the advantages and disadvantages of using methods based on first-order vs second-order co-occurrences, from the point of view of the interpretability of the metric and the sparseness of the data.
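As a rough illustration of the idea in this abstract, the sketch below computes a PMI-based bias as a difference of log conditional probabilities and compares it with a log odds ratio and its Wald confidence interval. The count layout, the function names, and the toy numbers are assumptions made for illustration, not the paper's implementation: `c_w_a` is the number of co-occurrences of word `w` with the context words of group A, and `c_a` is the total number of co-occurrences involving group A (likewise for B).

```python
import math


def pmi_bias(c_w_a, c_a, c_w_b, c_b):
    """PMI(w, A) - PMI(w, B) = log P(w | A) - log P(w | B)."""
    return math.log((c_w_a / c_a) / (c_w_b / c_b))


def log_odds_ratio_ci(c_w_a, c_a, c_w_b, c_b, z=1.96):
    """Log odds ratio with a Wald confidence interval (z=1.96 for ~95%)."""
    a, b = c_w_a, c_a - c_w_a  # w with A, other words with A
    c, d = c_w_b, c_b - c_w_b  # w with B, other words with B
    log_or = math.log((a / b) / (c / d))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return log_or, log_or - z * se, log_or + z * se


# Toy counts (made up): w co-occurs 30 times with group A and 10 times with
# group B, out of 10,000 co-occurrences for each group.
bias = pmi_bias(30, 10_000, 10, 10_000)
log_or, ci_low, ci_high = log_odds_ratio_ci(30, 10_000, 10, 10_000)
```

When `w` is rare relative to the group totals, the odds ratio and the ratio of conditional probabilities are nearly equal, which is why the log odds ratio works as an approximation. An interval that excludes zero suggests the measured bias is statistically distinguishable from no association.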
