Francisco Messina

Mitigating data replication in text-to-audio generative diffusion models through anti-memorization guidance

Sep 18, 2025

Abstract: A persistent challenge in generative audio models is data replication, where the model unintentionally generates parts of its training data during inference. In this work, we address this issue in text-to-audio diffusion models by exploring the use of anti-memorization strategies. We adopt Anti-Memorization Guidance (AMG), a technique that modifies the sampling process of pre-trained diffusion models to discourage memorization. Our study explores three types of guidance within AMG, each designed to reduce replication while preserving generation quality. We use Stable Audio Open as our backbone, leveraging its fully open-source architecture and training dataset. Our comprehensive experimental analysis suggests that AMG significantly mitigates memorization in diffusion-based text-to-audio generation without compromising audio fidelity or semantic alignment.

Preserving Privacy in GANs Against Membership Inference Attack

Nov 06, 2023

Abstract: Generative Adversarial Networks (GANs) have been widely used to generate synthetic data when the available real-world dataset is small or when data holders are unwilling to share their data samples. Recent works showed that GANs, due to overfitting and memorization, might leak information about their training data samples. This makes GANs vulnerable to Membership Inference Attacks (MIAs). Several defense strategies have been proposed in the literature to mitigate this privacy issue. Unfortunately, defense strategies based on differential privacy have been shown to substantially reduce the quality of the synthetic data points. On the other hand, more recent frameworks such as PrivGAN and PAR-GAN are not suitable for small training datasets. In the present work, overfitting in GANs is studied in terms of the discriminator, and a more general measure of overfitting based on the Bhattacharyya coefficient is defined. Then, inspired by Fano's inequality, our first defense mechanism against MIAs is proposed. This framework, which requires only a simple modification of the GAN loss function, is referred to as the maximum entropy GAN (MEGAN) and significantly improves the robustness of GANs to MIAs. As a second defense strategy, a more heuristic model based on minimizing the information leaked from generated samples about the training data points is presented. This approach is referred to as mutual information minimization GAN (MIMGAN) and uses a variational representation of the mutual information to minimize the information that a synthetic sample might leak about the whole training data set. Applying the proposed frameworks to some commonly used data sets against state-of-the-art MIAs reveals that the proposed methods can reduce the accuracy of the adversaries to the level of random guessing with a small reduction in the quality of the synthetic data samples.
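The abstract does not spell out how the overfitting measure is built, so the following is only background: a minimal sketch of the standard Bhattacharyya coefficient between two discrete distributions, BC(p, q) = sum_i sqrt(p_i * q_i). The function name and the example histograms are hypothetical and are not taken from the paper.

```python
import math


def bhattacharyya_coefficient(p, q):
    """Standard Bhattacharyya coefficient of two discrete distributions.

    p and q are sequences of probabilities over the same bins.
    The result is 1.0 for identical distributions and 0.0 for
    distributions with disjoint support.
    """
    if len(p) != len(q):
        raise ValueError("p and q must have the same number of bins")
    return sum(math.sqrt(a * b) for a, b in zip(p, q))


# Hypothetical histograms (illustrative numbers only).
hist_a = [0.1, 0.2, 0.7]
hist_b = [0.3, 0.3, 0.4]
print(bhattacharyya_coefficient(hist_a, hist_b))
```

A value close to 1 means the two histograms overlap heavily; how the paper maps such an overlap onto discriminator overfitting is not stated in the abstract.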

MEAD: A Multi-Armed Approach for Evaluation of Adversarial Examples Detectors

Jun 30, 2022

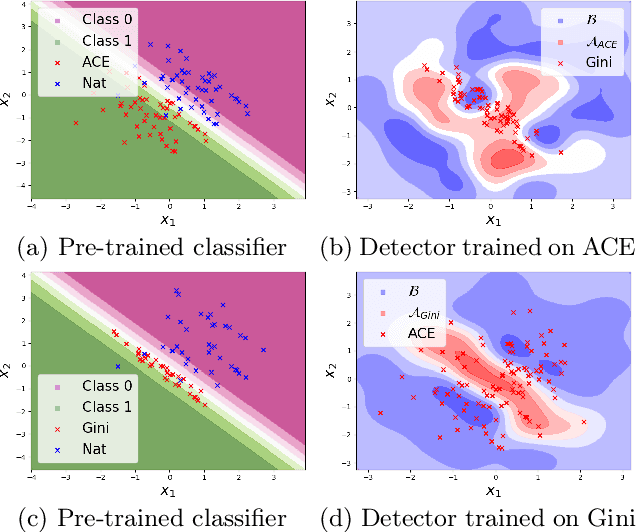

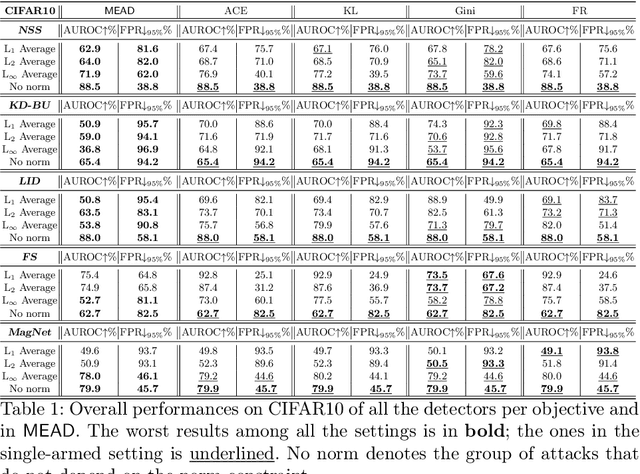

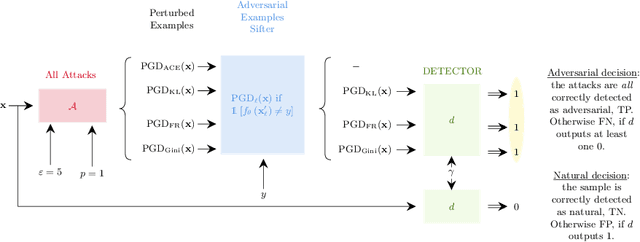

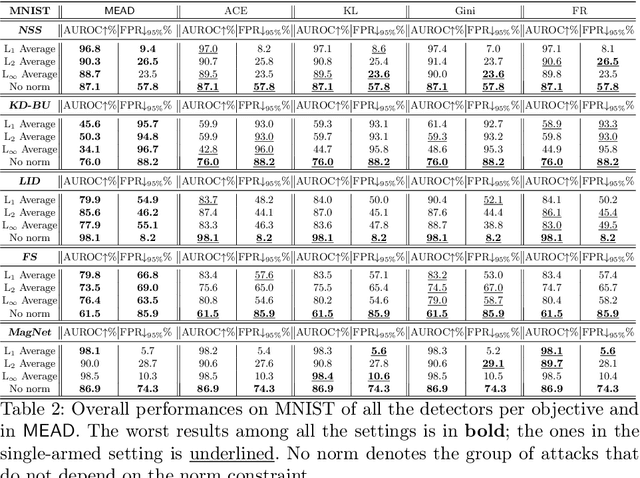

Abstract: Detection of adversarial examples has been a hot topic in recent years due to its importance for safely deploying machine learning algorithms in critical applications. However, detection methods are generally validated by assuming a single, implicitly known attack strategy, which does not necessarily account for real-life threats. Indeed, this can lead to an overoptimistic assessment of the detectors' performance and may bias the comparison between competing detection schemes. To overcome this limitation, we propose a novel multi-armed framework, called MEAD, for evaluating detectors based on several attack strategies. Among them, we make use of three new objectives to generate attacks. The proposed performance metric is based on the worst-case scenario: detection is successful if and only if all different attacks are correctly recognized. Empirically, we show the effectiveness of our approach. Moreover, the poor performance obtained for state-of-the-art detectors opens a new exciting line of research.
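The worst-case rule stated in the abstract can be illustrated with a short sketch. This is a simplified reading, not the MEAD implementation: it assumes each attack yields one boolean per input ("was the adversarial example flagged?"), and the function name and data layout are hypothetical.

```python
def worst_case_detection_rate(detected_by_attack):
    """Fraction of inputs for which every attack was detected.

    detected_by_attack maps an attack name to a list of booleans,
    one per input, all lists having the same length. An input counts
    as a success only if all attacks on it were flagged.
    """
    rows = list(detected_by_attack.values())
    if not rows or not rows[0]:
        raise ValueError("need at least one attack and one input")
    n = len(rows[0])
    if any(len(r) != n for r in rows):
        raise ValueError("all attacks must cover the same inputs")
    successes = sum(all(r[i] for r in rows) for i in range(n))
    return successes / n


# Hypothetical detector outcomes for two attacks on four inputs.
outcomes = {
    "attack_a": [True, True, False, True],
    "attack_b": [True, False, False, True],
}
print(worst_case_detection_rate(outcomes))  # inputs 0 and 3 succeed -> 0.5
```

Evaluated against each attack separately, the same detector would score 0.75 and 0.5, which is the kind of optimistic single-attack figure the abstract warns about.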

Forced Oscillation Identification and Filtering from Multi-Channel Time-Frequency Representation

Aug 19, 2021

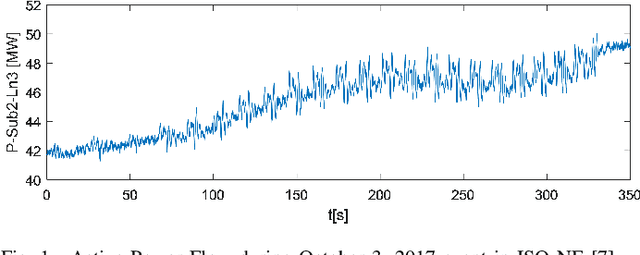

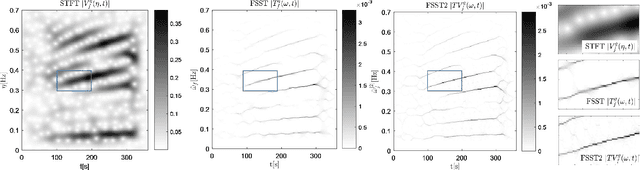

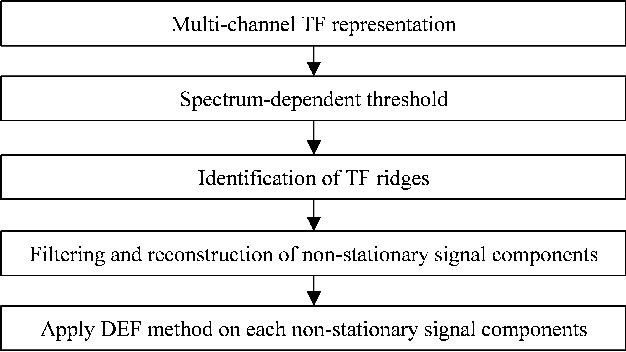

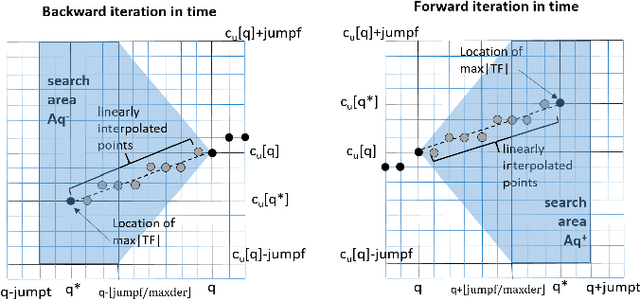

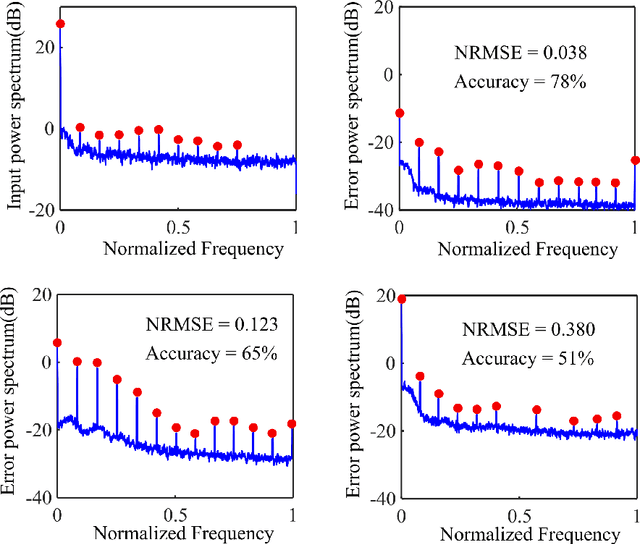

Abstract: Locating the sources of non-stationary forced oscillations (FOs) can be a challenging task, especially under resonance with natural system modes, where the magnitudes of the oscillations can be greater at locations far from the source. Therefore, it is of interest to construct a global time-frequency (TF) representation (TFR) of the system that captures the oscillatory components present in it. In this paper we develop a systematic methodology for frequency identification and component filtering of non-stationary power system FOs based on a multi-channel TFR. The frequencies of the oscillatory components are identified on the TF plane by applying a modified ridge estimation algorithm. Then, the components are filtered on the TF plane by applying the anti-transform functions to the individual TFRs around the identified ridges. This step constitutes an initial stage for the application of the Dissipating Energy Flow (DEF) method used to locate FO sources. In addition, we compare three TF approaches: the short-time Fourier transform (STFT), the STFT-based synchrosqueezing transform (FSST), and the second-order FSST (FSST2). Simulated signals and signals from real operation are used to show that the proposed method provides a systematic framework for identifying and filtering non-stationary forced oscillations in power systems.

Learning Sparse Privacy-Preserving Representations for Smart Meters Data

Jul 17, 2021

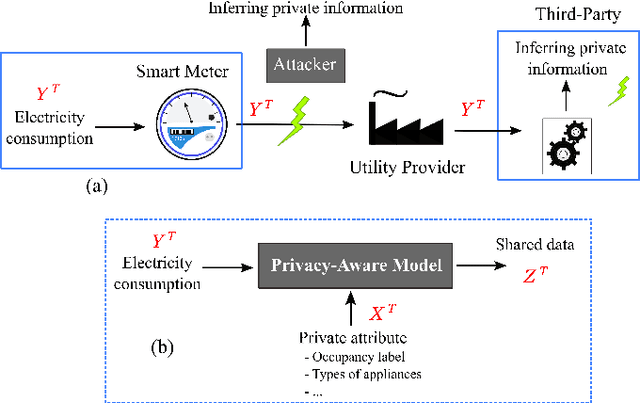

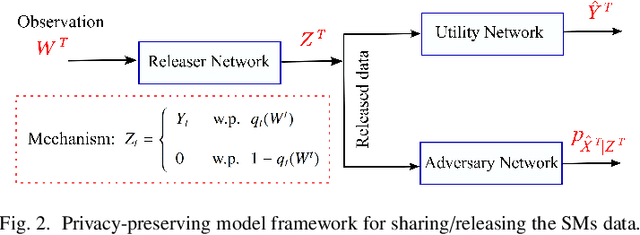

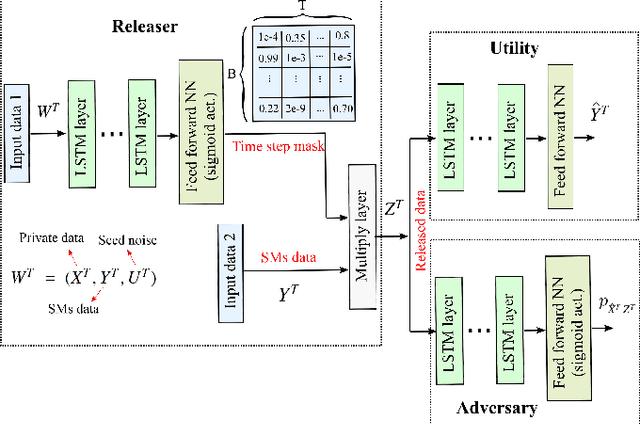

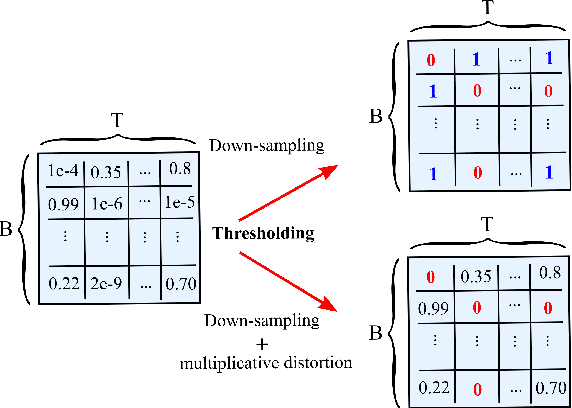

Abstract: Fine-grained recording and communication of Smart Meter (SM) data has enabled several features of Smart Grids (SGs), such as power quality monitoring, load forecasting, and fault detection. In addition, it has benefited users by giving them more control over their electricity consumption. However, it is well known that it also discloses sensitive information about the users, i.e., an attacker can infer users' private information by analyzing the SM data. In this study, we propose a privacy-preserving approach based on non-uniform down-sampling of SM data. We formulate this as the problem of learning a sparse representation of SM data with minimum information leakage and maximum utility. The architecture is composed of a releaser, which is a recurrent neural network (RNN) trained to generate the sparse representation by masking the SM data, and a utility network and an adversary network (also RNNs), which help the releaser minimize the leakage of information about the private attribute while keeping the reconstruction error of the SM data to a minimum (i.e., maximum utility). The performance of the proposed technique is assessed on actual SM data and compared with uniform down-sampling, random (non-uniform) down-sampling, and the state of the art in privacy-preserving methods that use a data manipulation approach. It is shown that our method achieves a better privacy-utility trade-off while releasing much less data, thus also being more efficient.

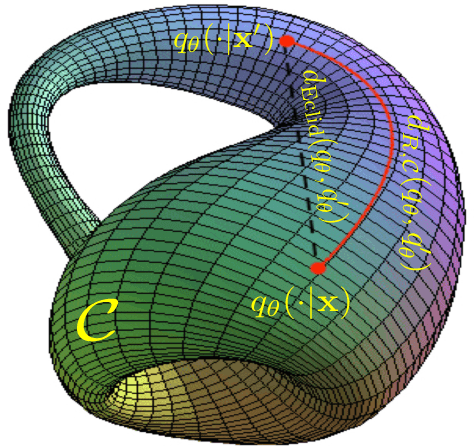

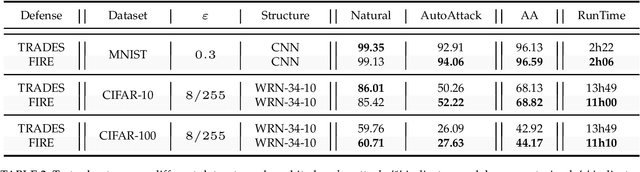

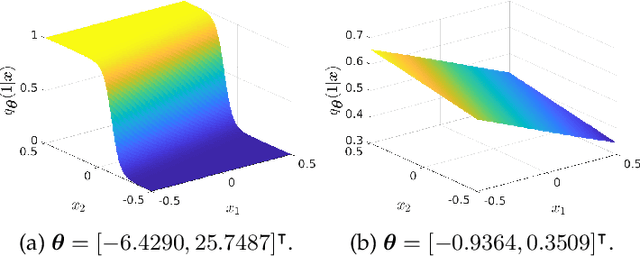

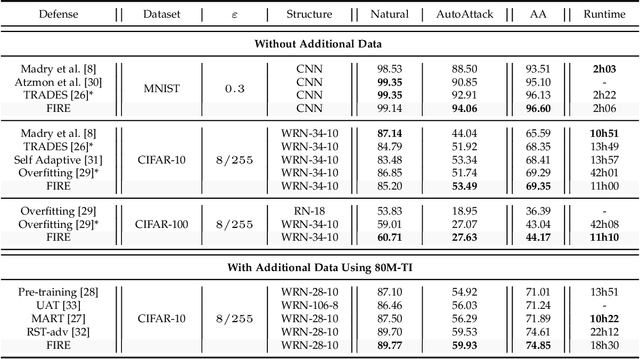

Adversarial Robustness via Fisher-Rao Regularization

Jun 12, 2021

Abstract: Adversarial robustness has become a topic of growing interest in machine learning since it was observed that neural networks tend to be brittle. We propose an information-geometric formulation of adversarial defense and introduce FIRE, a new Fisher-Rao regularization for the categorical cross-entropy loss, which is based on the geodesic distance between natural and perturbed input features. Based on the information-geometric properties of the class of softmax distributions, we derive an explicit characterization of the Fisher-Rao Distance (FRD) for the binary and multiclass cases, and draw some interesting properties as well as connections with standard regularization metrics. Furthermore, for a simple linear and Gaussian model, we show that all Pareto-optimal points in the accuracy-robustness region can be reached by FIRE while other state-of-the-art methods fail. Empirically, we evaluate the performance of various classifiers trained with the proposed loss on standard datasets, showing up to 2% of improvements in terms of robustness while reducing the training time by 20% over the best-performing methods.
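As an illustration of the distance mentioned above, the sketch below uses the well-known closed form of the Fisher-Rao distance between two categorical distributions, d(p, q) = 2 arccos(sum_i sqrt(p_i * q_i)), applied to softmax outputs. It is an assumption that this matches the paper's multiclass characterization exactly; the function names and logits are hypothetical, and the FIRE regularizer itself is not reproduced here.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fisher_rao_distance(p, q):
    """Fisher-Rao geodesic distance between two categorical distributions."""
    if len(p) != len(q):
        raise ValueError("p and q must have the same number of classes")
    overlap = sum(math.sqrt(a * b) for a, b in zip(p, q))
    # Clamp to [0, 1] to guard against floating-point rounding before acos.
    overlap = min(1.0, max(0.0, overlap))
    return 2.0 * math.acos(overlap)


# Hypothetical logits for a natural input and a perturbed version of it.
natural = softmax([2.0, 0.5, -1.0])
perturbed = softmax([1.2, 1.0, -0.8])
print(fisher_rao_distance(natural, perturbed))
```

The distance is zero when the two predictive distributions coincide and reaches its maximum, pi, when they have disjoint support.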

Deep Directed Information-Based Learning for Privacy-Preserving Smart Meter Data Release

Nov 20, 2020

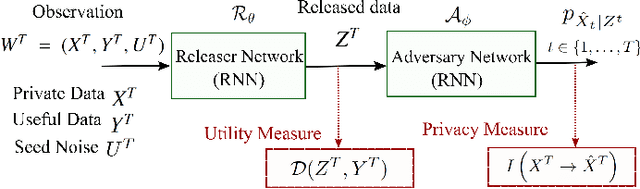

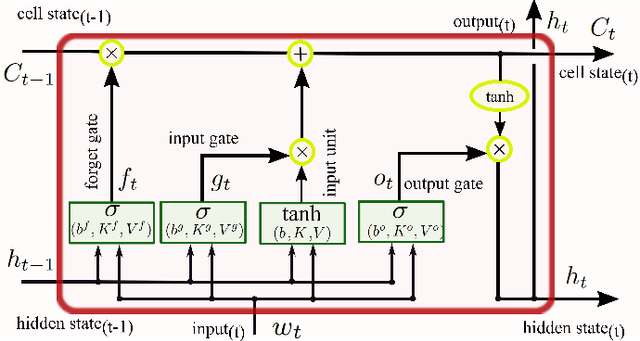

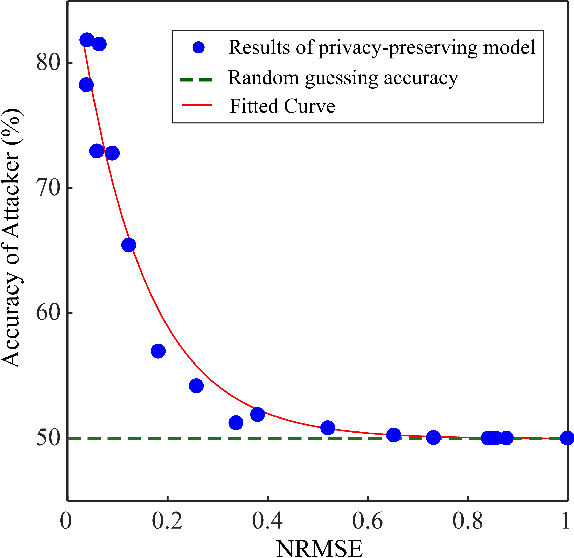

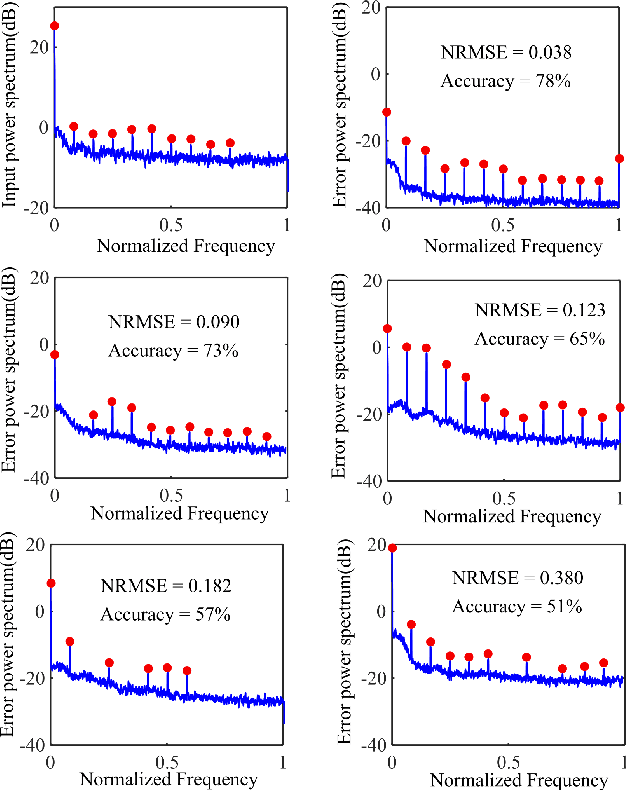

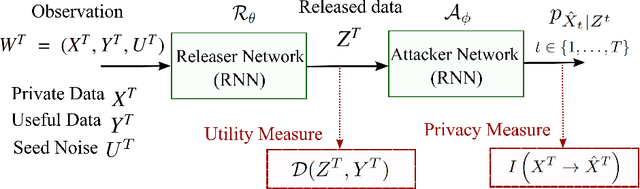

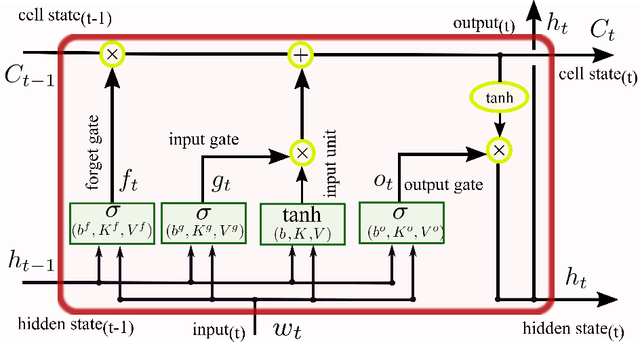

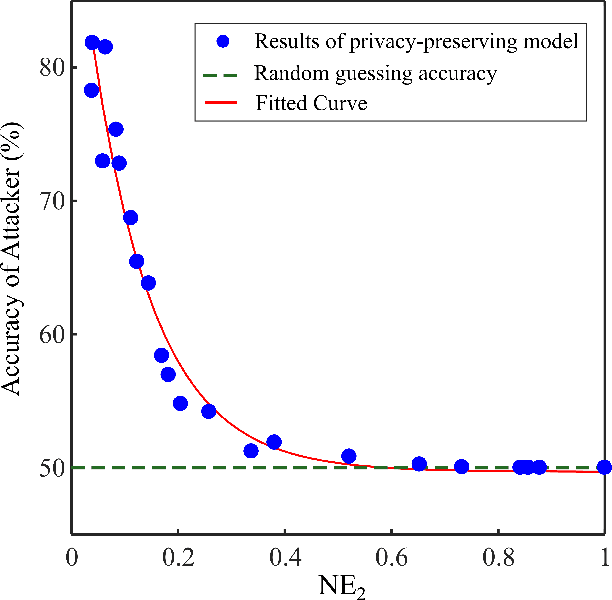

Abstract: The explosion of data collection has raised serious privacy concerns among users due to the possibility that sharing data may also reveal sensitive information. The main goal of a privacy-preserving mechanism is to prevent a malicious third party from inferring sensitive information while keeping the shared data useful. In this paper, we study this problem in the context of time series data, and smart meter (SM) power consumption measurements in particular. Although Mutual Information (MI) between private and released variables has been used as a common information-theoretic privacy measure, it fails to capture the causal time dependencies present in power consumption time series data. To overcome this limitation, we introduce Directed Information (DI) as a more meaningful measure of privacy in the considered setting and propose a novel loss function. The optimization is then performed using an adversarial framework where two Recurrent Neural Networks (RNNs), referred to as the releaser and the adversary, are trained with opposite goals. Our empirical studies on real-world SM data sets, in the worst-case scenario where an attacker has access to the entire training data set used by the releaser, validate the proposed method and show the existing trade-offs between privacy and utility.

Deep Recurrent Adversarial Learning for Privacy-Preserving Smart Meter Data Release

Jun 14, 2019

Abstract: Smart Meters (SMs) are an important component of smart electrical grids, but they have also generated serious concerns about the privacy of consumers' data. In this paper, we present a general formulation of the privacy-preserving problem in SMs from an information-theoretic perspective. In order to capture the causal time series structure of the power measurements, we employ Directed Information (DI) as an adequate measure of privacy. On the other hand, to cope with a variety of potential applications of SM data, we study different distortion measures along with the standard squared-error distortion. This formulation leads to a quite general training objective (or loss), which is optimized under a deep learning adversarial framework where two Recurrent Neural Networks (RNNs), referred to as the releaser and the attacker, are trained with opposite goals. An exhaustive empirical study is then performed to validate the proposed approach for different privacy problems on three actual data sets. Finally, we study the impact of the data mismatch problem, which occurs when the releaser and the attacker have different training data sets, and show that privacy may not require a large level of distortion in real-world scenarios.
