Florian Richoux

Injecting Combinatorial Optimization into MCTS: Application to the Board Game boop

Jun 13, 2024

Abstract: Games, including abstract board games, constitute a convenient ground to create, design, and improve new AI methods. In this field, Monte Carlo Tree Search is a popular algorithm family, aiming to build game trees and explore them efficiently. Combinatorial Optimization, on the other hand, aims to model and solve problems with an objective to optimize and constraints to satisfy, and is less common in Game AI. We believe, however, that both methods can be combined efficiently, by injecting Combinatorial Optimization into Monte Carlo Tree Search to help the tree search, leading to a novel combination of these two techniques. Tested on the board game boop., our method beats the Monte Carlo Tree Search baseline 96% of the time. We conducted an ablation study to isolate and analyze which injections and combinations of injections lead to this performance. Finally, we pitted our AI method against human players on the Board Game Arena platform, where it reached a 373 Elo rating after 51 boop. games, with a 69% win rate, finishing ranked 56th worldwide out of 5,316 boop. players on the platform.

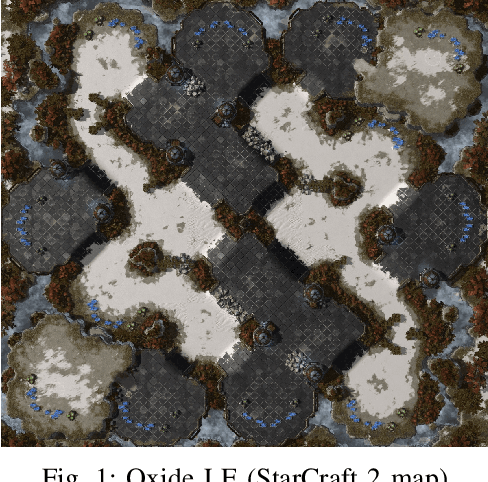

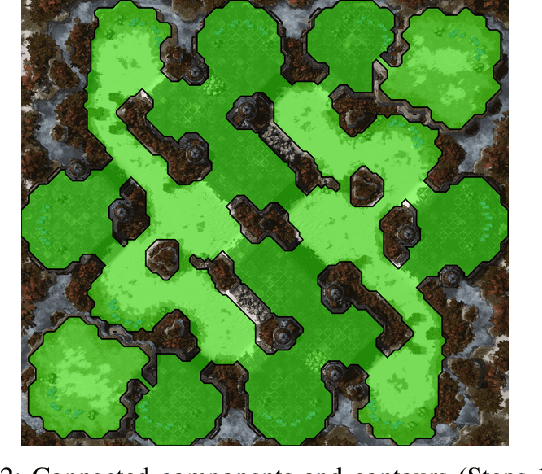

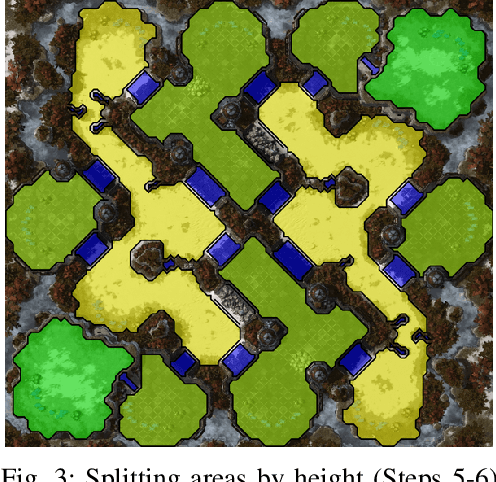

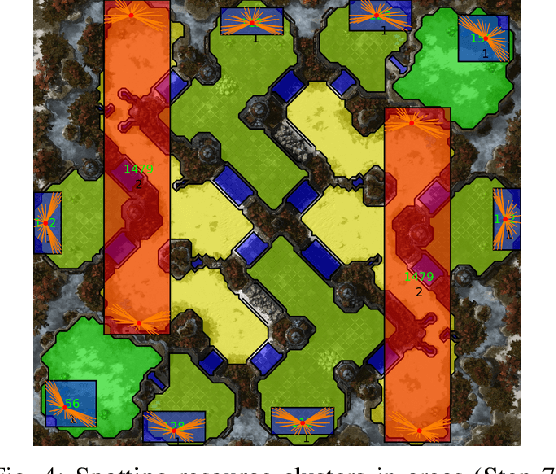

Terrain Analysis in StarCraft 1 and 2 as Combinatorial Optimization

May 18, 2022

Abstract: Terrain analysis in Real-Time Strategy games is a necessary step to allow spatial reasoning. The goal of terrain analysis is to gather and process data about the map topology and properties to obtain a qualitative spatial representation. For StarCraft games, all previous works on terrain analysis propose a crisp analysis based on connected component detection, Voronoi diagram computation and pruning, and region merging. Those methods have been implemented as game-specific libraries, and they can only offer the same kind of analysis for all maps and all users. In this paper, we propose a way to consider terrain analysis as a combinatorial optimization problem. Our method allows different kinds of analysis by changing constraints or the objective function in the problem model. We also present a library, Taunt, implementing our method and able to handle both StarCraft 1 and StarCraft 2 maps. This makes our library a universal tool for StarCraft bots with different spatial representation needs. We believe our library unlocks the possibility of having truly adaptive AIs playing StarCraft, and can be the starting point of a new wave of bots.

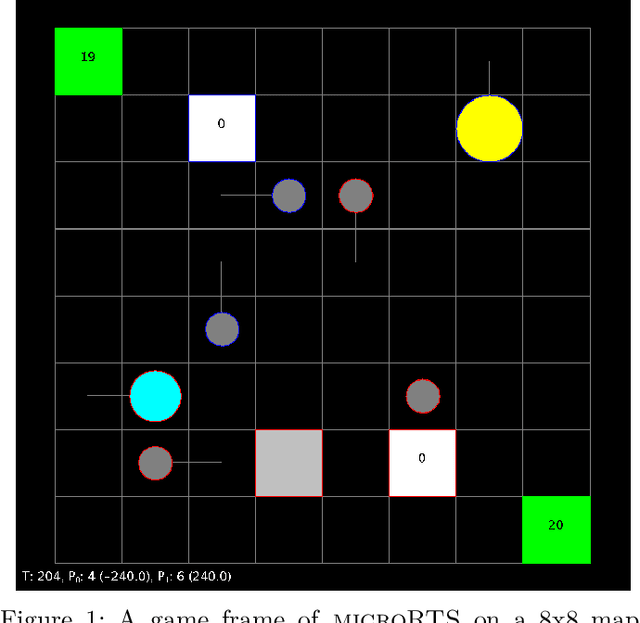

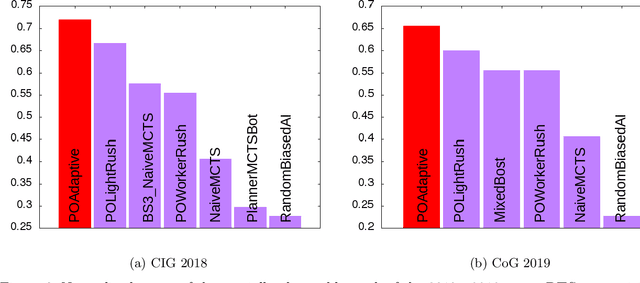

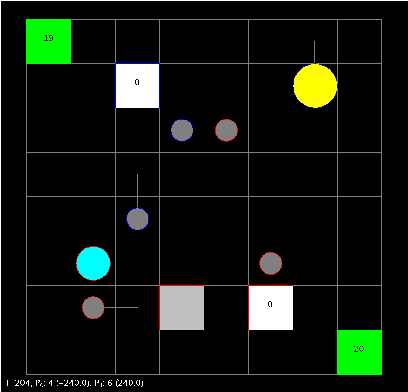

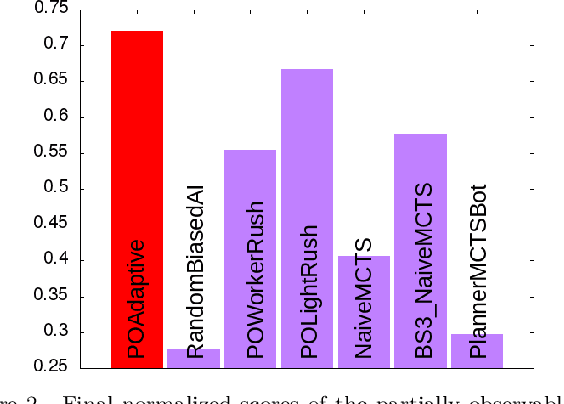

microPhantom: Playing microRTS under uncertainty and chaos

Jun 17, 2020

Abstract: This competition paper presents microPhantom, a bot playing microRTS and participating in the 2020 microRTS AI competition. microPhantom is based on our previous bot POAdaptive, which won the partially observable track of the 2018 and 2019 microRTS AI competitions. In this paper, we focus on decision-making under uncertainty, by tackling the Unit Production Problem with a method based on a combination of Constraint Programming and decision theory. We show that using our method to decide which units to train significantly improves the win rate against the second-best microRTS bot from the partially observable track. We also show that our method is resilient in chaotic environments, with only a very small loss of efficiency. To allow replicability and to facilitate further research, the source code of microPhantom is available, as well as the Constraint Programming toolkit it uses.

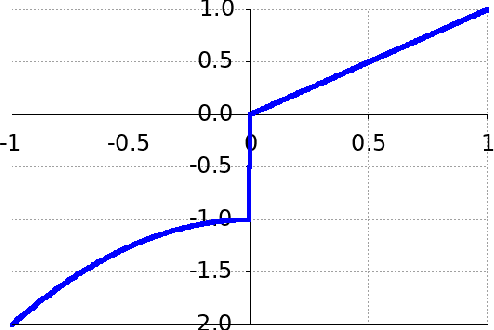

Automatic Cost Function Learning with Interpretable Compositional Networks

Feb 23, 2020

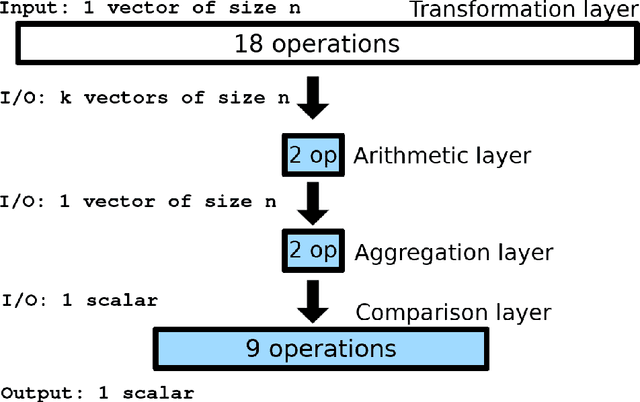

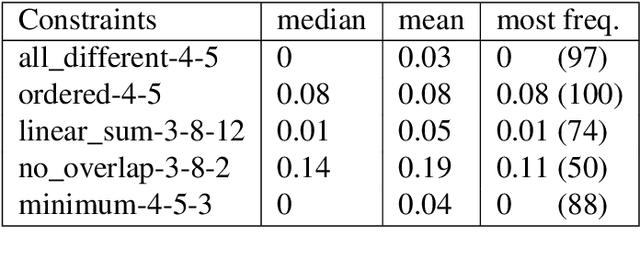

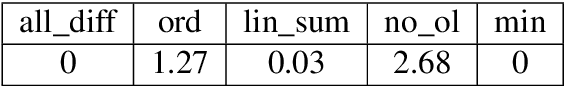

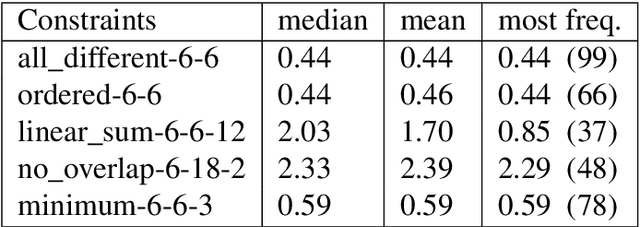

Abstract: Cost Function Networks (CFN) are a formalism in Constraint Programming to model combinatorial satisfaction or optimization problems. By associating a function to each constraint type to evaluate the quality of an assignment, it extends the expressivity of regular CSP/COP formalisms, but at the price of making problem modeling harder. Indeed, in addition to the regular sets of variables, domains, and constraints, one must provide a set of cost functions that are not always easy to define. Here we propose a method to automatically learn a cost function of a constraint, given a function deciding if assignments are valid or not. This is, to the best of our knowledge, the first attempt to automatically learn cost functions. Our method aims to learn cost functions in a supervised fashion, trying to reproduce the Hamming distance, by using a variation of neural networks we named Interpretable Compositional Networks, allowing us to get explainable results, unlike regular artificial neural networks. We test it on 5 different constraints to show its versatility. Experiments show that functions learned on small dimensions scale to high dimensions, outputting a perfect or near-perfect Hamming distance for most constraints. Our system can be used to automatically generate cost functions, thereby offering the expressivity of CFN with the same modeling effort as for CSP/COP.
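To make the learning target concrete, here is a minimal brute-force sketch of a Hamming-distance cost function derived from a validity predicate. It assumes the Hamming distance is read as the smallest number of variables that must change to reach a valid assignment. The names `hamming_cost` and `all_different` are illustrative and not taken from the paper, and this is a reference computation for tiny instances, not the learned network.

```python
from itertools import product
from typing import Callable, Iterable, Optional, Sequence, Tuple


def hamming_cost(
    assignment: Sequence[int],
    domain: Iterable[int],
    is_valid: Callable[[Tuple[int, ...]], bool],
) -> Optional[int]:
    """Smallest number of variables to change so that `is_valid` holds.

    Enumerates every assignment over `domain` (exponential; toy sizes only).
    Returns None when no valid assignment exists at all.
    """
    values = list(domain)
    best = None
    for candidate in product(values, repeat=len(assignment)):
        if not is_valid(candidate):
            continue
        # Hamming distance: count of positions where the two tuples differ.
        distance = sum(a != b for a, b in zip(assignment, candidate))
        if best is None or distance < best:
            best = distance
    return best


def all_different(values: Sequence[int]) -> bool:
    """Hypothetical example constraint: all variables take distinct values."""
    return len(set(values)) == len(values)


if __name__ == "__main__":
    for assignment in [(1, 2, 3), (1, 1, 2), (1, 1, 1)]:
        print(assignment, hamming_cost(assignment, range(1, 4), all_different))
```

A cost of 0 means the assignment already satisfies the constraint; larger values indicate how far it is from doing so, which is the kind of graded signal a cost function adds over a plain valid/invalid predicate.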

Comparing two deep learning sequence-based models for protein-protein interaction prediction

Jan 15, 2019

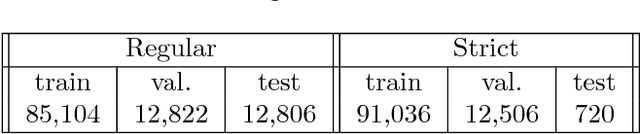

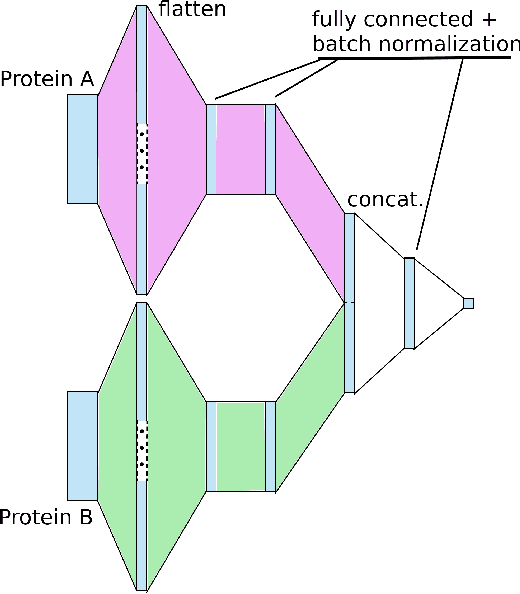

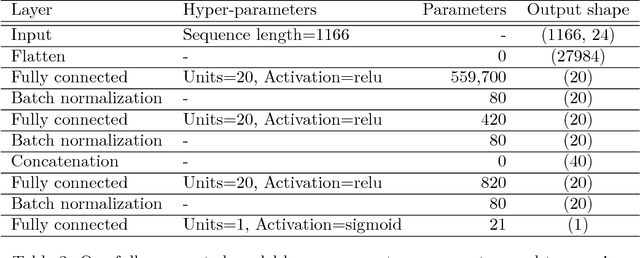

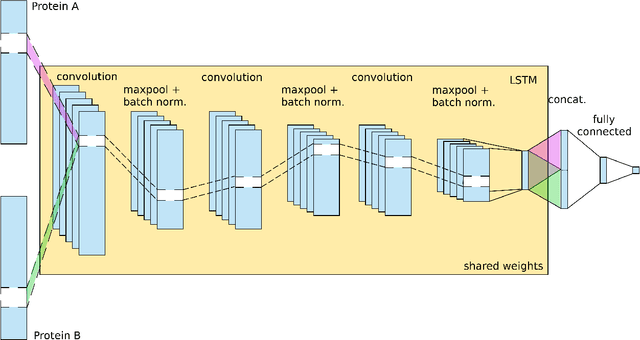

Abstract: Biological data are extremely diverse and complex, but also quite sparse. The recent developments in deep learning methods are offering new possibilities for the analysis of complex data. However, it is easy to get a deep learning model that seems to have good results but is in fact overfitting either the training data or the validation data. In particular, overfitting the validation data, called "information leak", is almost never addressed in papers proposing deep learning models to predict protein-protein interactions (PPI). In this work, we compare two carefully designed deep learning models and show pitfalls to avoid while predicting PPIs through machine learning methods. Our best model accurately predicts more than 78% of human PPI, under very strict conditions for both training and testing. The methodology we propose here allows us to have strong confidence in the ability of a model to scale up to larger datasets. This would allow sharper models when larger datasets become available, rather than current models prone to information leaks. Our solid methodological foundations should be applicable to more organisms and to whole-proteome network predictions.

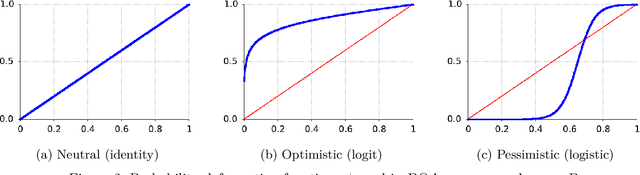

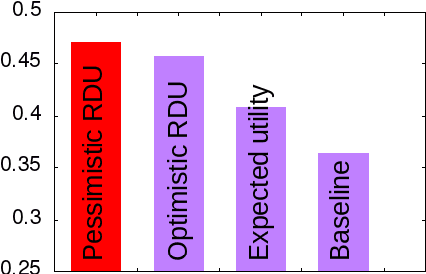

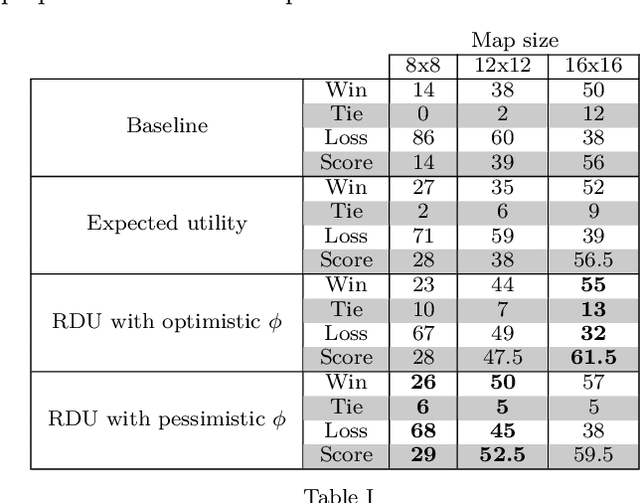

Constrained optimization under uncertainty for decision-making problems: Application to Real-Time Strategy games

Jan 07, 2019

Abstract: Decision-making problems can be modeled as combinatorial optimization problems with Constraint Programming formalisms such as Constrained Optimization Problems. However, few Constraint Programming formalisms can deal with both optimization and uncertainty at the same time, and none of them is convenient for modeling the problems we tackle in this paper. Here, we propose a way to deal with combinatorial optimization problems under uncertainty within the classical Constrained Optimization Problems formalism by injecting the Rank Dependent Utility from decision theory. We also propose a proof of concept of our method to show it is implementable and can solve concrete decision-making problems using a regular constraint solver, and propose a bot that won the partially observable track of the 2018 μRTS AI competition. Our result shows it is possible to handle uncertainty with regular Constraint Programming solvers, without having to define a new formalism or to develop dedicated solvers. This brings a new perspective for tackling uncertainty in Constraint Programming.
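As a rough illustration of the decision-theory ingredient named above, the sketch below evaluates a discrete lottery with the standard textbook form of Rank Dependent Utility: outcomes are ranked from worst to best, and each utility is weighted by the difference of a probability-weighting function applied to decumulative probabilities. The function names and the weighting functions are assumptions chosen for illustration; the way the paper encodes this inside a constraint model is not shown here.

```python
from typing import Callable, Sequence


def rank_dependent_utility(
    outcomes: Sequence[float],
    probabilities: Sequence[float],
    utility: Callable[[float], float],
    weight: Callable[[float], float],
) -> float:
    """Rank Dependent Utility of a discrete lottery.

    `weight` is assumed to be non-decreasing with weight(0) == 0 and
    weight(1) == 1. With the identity weight this is plain expected utility.
    """
    if len(outcomes) != len(probabilities):
        raise ValueError("outcomes and probabilities must have the same length")
    # Rank outcomes from worst to best according to their utility.
    ranked = sorted(zip(outcomes, probabilities), key=lambda op: utility(op[0]))
    total = 0.0
    tail = 1.0  # probability of obtaining the current outcome or a better one
    for outcome, p in ranked:
        next_tail = max(tail - p, 0.0)  # probability of a strictly better one
        total += utility(outcome) * (weight(tail) - weight(next_tail))
        tail = next_tail
    return total


if __name__ == "__main__":
    lottery = ([0.0, 10.0], [0.5, 0.5])
    # Identity weighting: ordinary expected utility.
    print(rank_dependent_utility(*lottery, utility=lambda x: x, weight=lambda p: p))
    # Convex weighting: a pessimistic decision-maker discounts good outcomes.
    print(rank_dependent_utility(*lottery, utility=lambda x: x, weight=lambda p: p * p))
```

Changing only the weighting function shifts the evaluation between risk-neutral and pessimistic attitudes, while the result stays a single number that can serve as an objective value.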

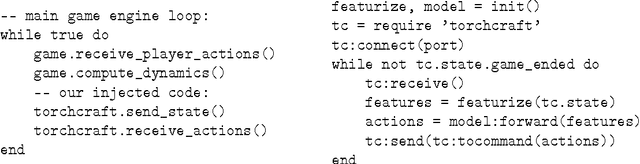

TorchCraft: a Library for Machine Learning Research on Real-Time Strategy Games

Nov 03, 2016

Abstract: We present TorchCraft, a library that enables deep learning research on Real-Time Strategy (RTS) games such as StarCraft: Brood War, by making it easier to control these games from a machine learning framework, here Torch. This white paper argues for using RTS games as a benchmark for AI research, and describes the design and components of TorchCraft.

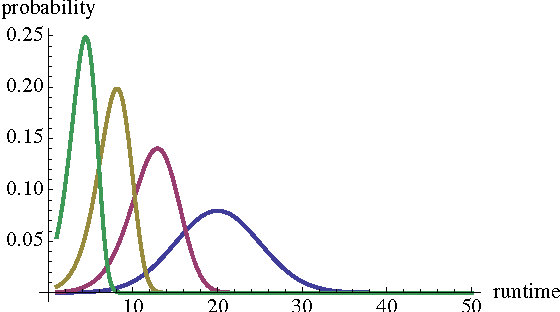

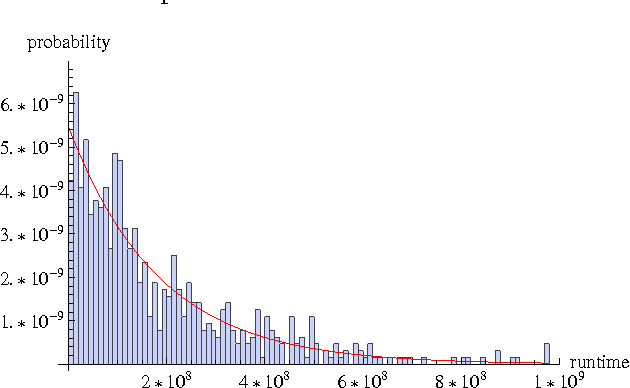

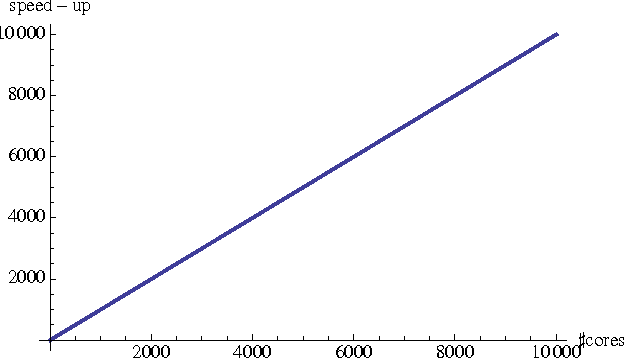

Prediction of Parallel Speed-ups for Las Vegas Algorithms

Dec 18, 2012

Abstract: We propose a probabilistic model for the parallel execution of Las Vegas algorithms, i.e., randomized algorithms whose runtime might vary from one execution to another, even with the same input. This model aims at predicting the parallel performance (i.e., speedups) by analyzing the runtime distribution of the sequential runs of the algorithm. Then, we study in practice the case of a particular Las Vegas algorithm for combinatorial optimization, on three classical problems, and compare with an actual parallel implementation up to 256 cores. We show that the prediction can be quite accurate, matching the actual speedups very well up to 100 parallel cores and then with a deviation of about 20% up to 256 cores.
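The idea of predicting speedups from sequential runtimes can be sketched as follows, under the assumption that the parallel scheme runs independent copies of the algorithm and stops as soon as the first one finishes, so that the parallel runtime on n cores is the minimum of n independent sequential runtimes. The sketch uses the empirical distribution of observed runtimes directly instead of fitting a parametric law, and the function names are illustrative rather than the paper's.

```python
from typing import Sequence


def expected_min_runtime(samples: Sequence[float], cores: int) -> float:
    """Expected minimum of `cores` independent draws from the empirical
    distribution of `samples` (sequential runtimes)."""
    if not samples or cores < 1:
        raise ValueError("need at least one sample and one core")
    xs = sorted(samples)
    m = len(xs)
    total = 0.0
    for i, x in enumerate(xs):
        # Probability that every draw lands on position i or later ...
        p_at_least = ((m - i) / m) ** cores
        # ... minus the probability that every draw lands strictly later.
        p_strictly_after = ((m - i - 1) / m) ** cores
        total += x * (p_at_least - p_strictly_after)
    return total


def predicted_speedup(samples: Sequence[float], cores: int) -> float:
    """Mean sequential runtime divided by the expected parallel runtime."""
    mean_runtime = sum(samples) / len(samples)
    return mean_runtime / expected_min_runtime(samples, cores)


if __name__ == "__main__":
    runtimes = [1.0, 2.0, 3.0, 4.0]  # made-up sequential runtimes, in seconds
    for n in (1, 2, 4):
        print(n, round(predicted_speedup(runtimes, n), 3))
```

With few samples, the empirical minimum saturates at the fastest observed run, so predictions for many cores are only meaningful when the sample of sequential runs is large or when a fitted distribution is used instead.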
