Fernando Vieira

Automatic Prediction of Amyotrophic Lateral Sclerosis Progression using Longitudinal Speech Transformer

Jun 26, 2024

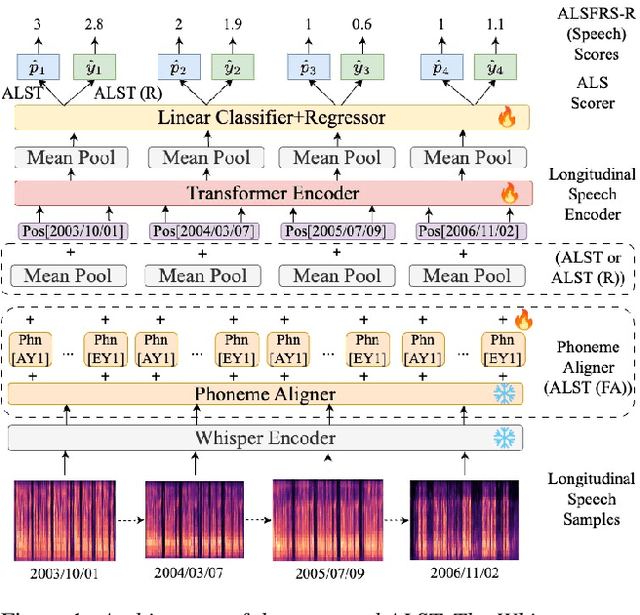

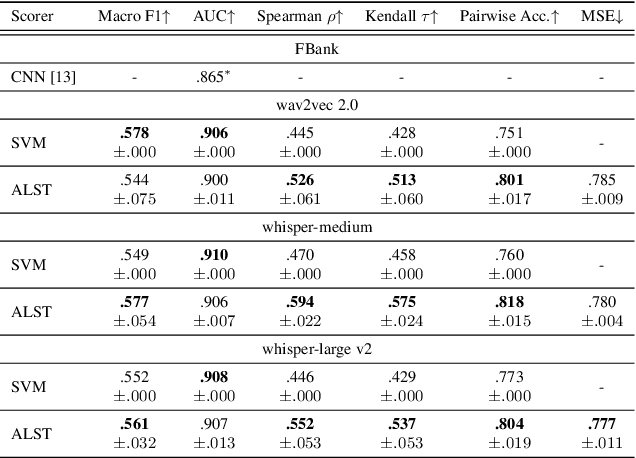

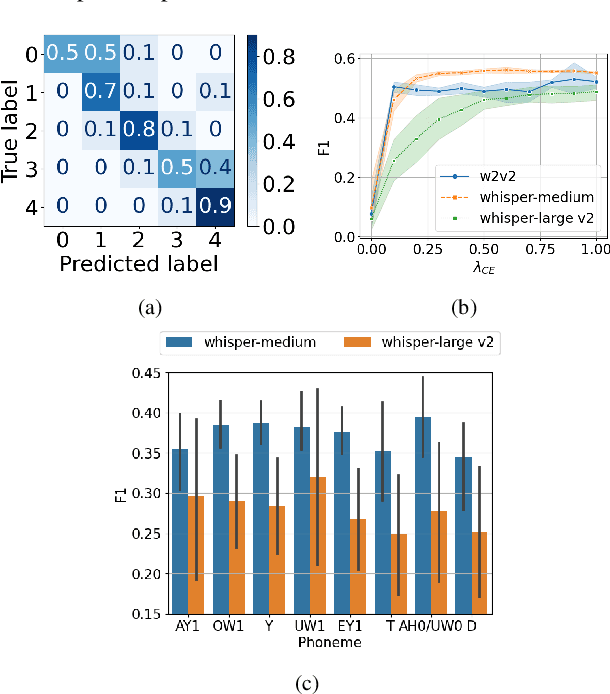

Abstract: Automatic prediction of amyotrophic lateral sclerosis (ALS) disease progression provides a more efficient and objective alternative to manual approaches. We propose the ALS longitudinal speech transformer (ALST), a neural network-based automatic predictor of ALS disease progression from longitudinal speech recordings of ALS patients. By taking advantage of high-quality pretrained speech features and longitudinal information in the recordings, our best model achieves 91.0% AUC, a 5.6% relative improvement over the previous best model on the ALS TDI dataset. Careful analysis reveals that ALST is capable of fine-grained and interpretable predictions of ALS progression, especially for distinguishing between rarer and more severe cases. Code is publicly available.

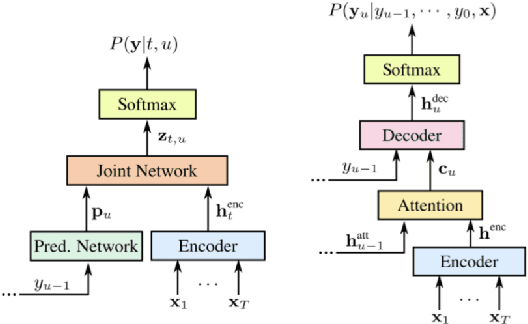

Personalizing ASR for Dysarthric and Accented Speech with Limited Data

Jul 31, 2019

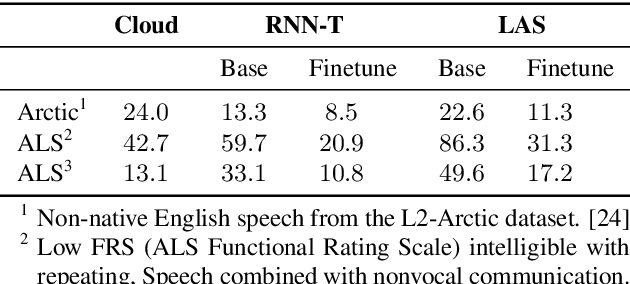

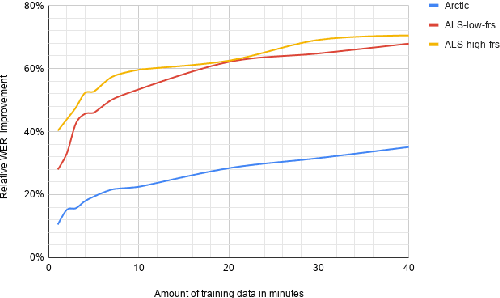

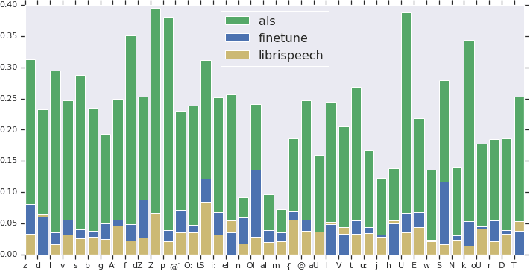

Abstract: Automatic speech recognition (ASR) systems have dramatically improved over the last few years. ASR systems are most often trained on 'typical' speech, which means that underrepresented groups don't experience the same level of improvement. In this paper, we present and evaluate finetuning techniques to improve ASR for users with non-standard speech. We focus on two types of non-standard speech: speech from people with amyotrophic lateral sclerosis (ALS) and accented speech. We train personalized models that achieve 62% and 35% relative WER improvement on these two groups, respectively, bringing the absolute WER for ALS speakers, on a test set of message bank phrases, down to 10% for mild dysarthria and 20% for more serious dysarthria. We show that 71% of the improvement comes from only 5 minutes of training data. Finetuning a particular subset of layers (with many fewer parameters) often gives better results than finetuning the entire model. This is the first step towards building state-of-the-art ASR models for dysarthric speech.
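The abstract reports both relative and absolute WER, which are easy to conflate. As a minimal sketch, relative WER improvement is usually computed as the fraction of the baseline error that the new model removes. The function name and the numbers below are hypothetical illustrations, not values or code from the paper.

```python
def relative_wer_improvement(baseline_wer: float, personalized_wer: float) -> float:
    """Return the fraction of the baseline WER removed by the personalized model."""
    if baseline_wer <= 0:
        raise ValueError("baseline_wer must be positive")
    return (baseline_wer - personalized_wer) / baseline_wer


# Hypothetical WERs chosen only for illustration (not taken from the paper).
baseline = 0.40      # WER of the unadapted model
personalized = 0.10  # WER after finetuning on the speaker's data

improvement = relative_wer_improvement(baseline, personalized)
print(f"relative WER improvement: {improvement:.0%}")  # prints "relative WER improvement: 75%"
```

Under this definition, the same absolute drop in WER counts as a larger relative improvement when the baseline WER is lower, which is why the abstract states the absolute WER alongside the relative figures.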
