Yishu Gong

Can Diffusion Models Disentangle? A Theoretical Perspective

Mar 31, 2025

Abstract: This paper presents a novel theoretical framework for understanding how diffusion models can learn disentangled representations. Within this framework, we establish identifiability conditions for general disentangled latent variable models, analyze training dynamics, and derive sample complexity bounds for disentangled latent subspace models. To validate our theory, we conduct disentanglement experiments across diverse tasks and modalities, including subspace recovery in latent subspace Gaussian mixture models, image colorization, image denoising, and voice conversion for speech classification. Additionally, our experiments show that training strategies inspired by our theory, such as style guidance regularization, consistently enhance disentanglement performance.

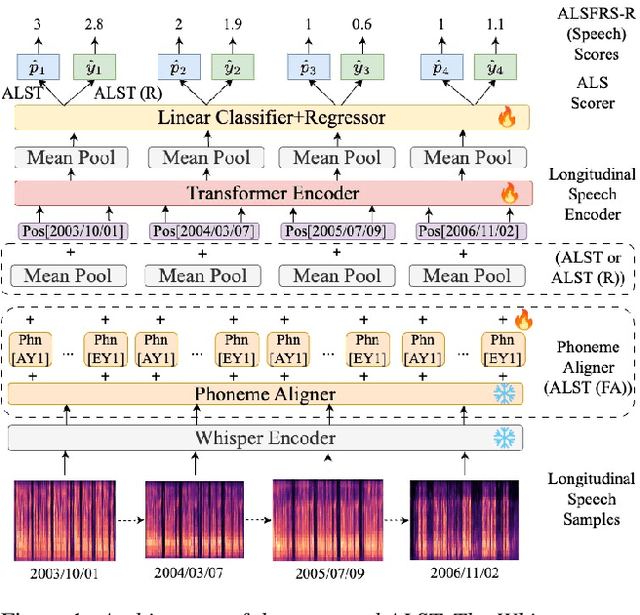

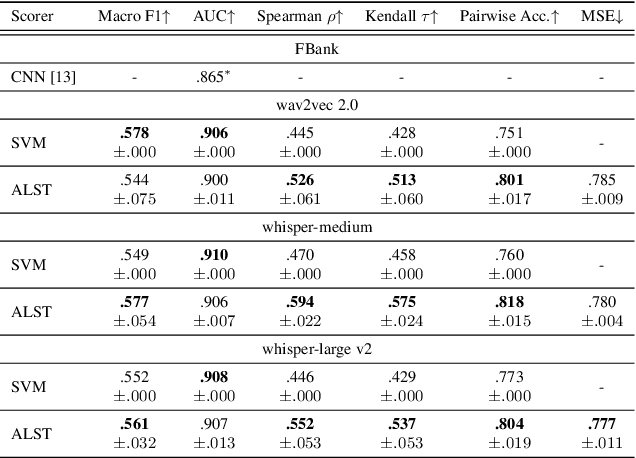

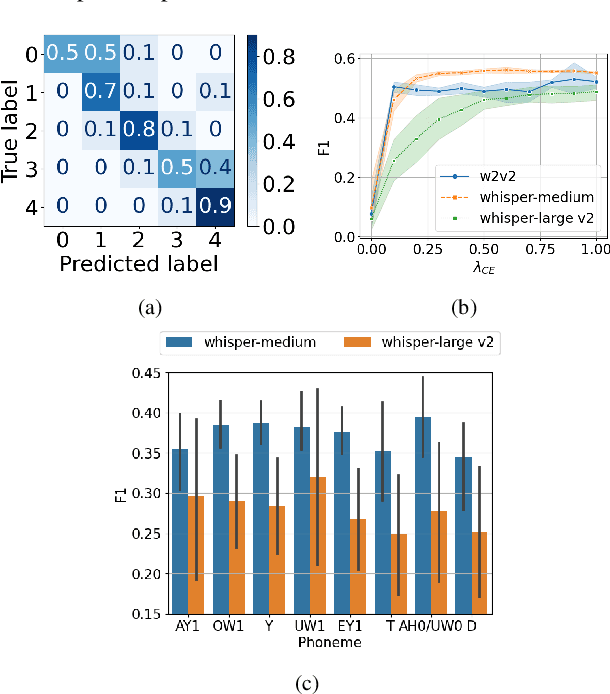

Automatic Prediction of Amyotrophic Lateral Sclerosis Progression using Longitudinal Speech Transformer

Jun 26, 2024

Abstract: Automatic prediction of amyotrophic lateral sclerosis (ALS) disease progression offers a more efficient and objective alternative to manual approaches. We propose the ALS longitudinal speech transformer (ALST), a neural network-based automatic predictor of ALS disease progression from longitudinal speech recordings of ALS patients. By taking advantage of high-quality pretrained speech features and longitudinal information in the recordings, our best model achieves 91.0% AUC on the ALS TDI dataset, a 5.6% relative improvement over the previous best model. Careful analysis reveals that ALST is capable of fine-grained and interpretable predictions of ALS progression, especially for distinguishing between rarer and more severe cases. Code is publicly available.
