Fengyang Sun

ESS-ReduNet: Enhancing Subspace Separability of ReduNet via Dynamic Expansion with Bayesian Inference

Nov 27, 2024

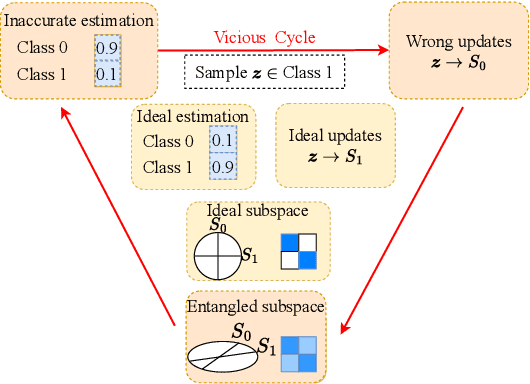

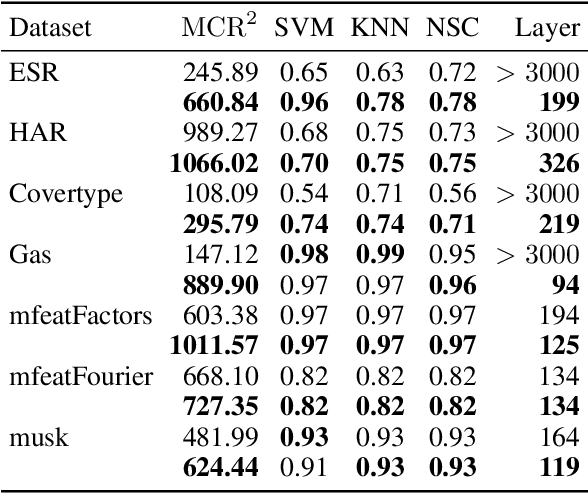

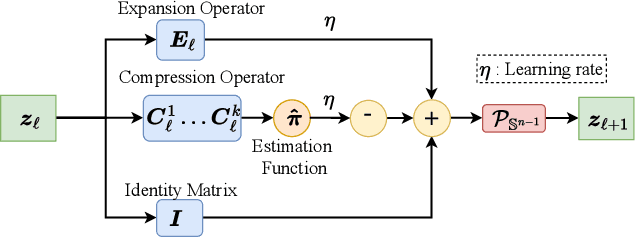

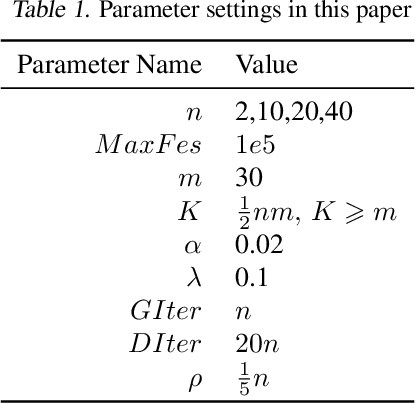

Abstract: ReduNet is a deep neural network model that leverages the principle of maximal coding rate reduction to transform original data samples into a low-dimensional, linearly discriminative feature representation. Unlike traditional deep learning frameworks, ReduNet constructs its parameters explicitly layer by layer, with each layer's parameters derived from the features transformed by the preceding layer. Rather than using labels directly, ReduNet uses the similarity between each category's spanned subspace and the data samples for feature updates at each layer. This may cause features to be updated in the wrong direction, impairing the correct construction of network parameters and reducing the network's convergence speed. To address this issue, and based on the geometric interpretation of the network parameters, this paper presents ESS-ReduNet, which enhances the separability of each category's subspace by dynamically controlling the expansion of the overall space spanned by the samples. Meanwhile, label knowledge is incorporated through Bayesian inference to encourage the decoupling of subspaces. Finally, stability, as assessed by the condition number, serves as an auxiliary criterion for halting training. Experiments on the ESR, HAR, Covertype, and Gas datasets demonstrate that ESS-ReduNet achieves more than a 10x improvement in convergence compared to ReduNet. Notably, on the ESR dataset, the features transformed by ESS-ReduNet achieve a 47% improvement in SVM classification accuracy.
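The abstract names the coding rate reduction objective without writing it out. The sketch below computes it in the form commonly used in the maximal coding rate reduction literature, which is assumed here rather than taken from the abstract: the rate of the whole feature set minus the size-weighted rates of the per-class subsets. The function names, the `eps` default, and the toy data are illustrative assumptions, and this is not the ESS-ReduNet update itself.

```python
import numpy as np

def coding_rate(Z, eps=0.5):
    """Rate R(Z) = 1/2 * logdet(I + d / (m * eps^2) * Z Z^T) for Z of shape (d, m)."""
    d, m = Z.shape
    gram = np.eye(d) + (d / (m * eps ** 2)) * (Z @ Z.T)
    # slogdet is numerically safer than log(det(...)) for larger matrices.
    _, logdet = np.linalg.slogdet(gram)
    return 0.5 * logdet

def coding_rate_reduction(Z, labels, eps=0.5):
    """Rate of all features minus the size-weighted sum of per-class rates."""
    _, m = Z.shape
    per_class = 0.0
    for c in np.unique(labels):
        Zc = Z[:, labels == c]          # columns belonging to class c
        per_class += (Zc.shape[1] / m) * coding_rate(Zc, eps)
    return coding_rate(Z, eps) - per_class

# Toy check (hypothetical data): two classes on orthogonal axes versus
# two classes collapsed onto the same axis.
labels = np.array([0, 0, 1, 1])
separated = np.array([[1.0, 1.0, 0.0, 0.0],
                      [0.0, 0.0, 1.0, 1.0]])
collapsed = np.array([[1.0, 1.0, 1.0, 1.0],
                      [0.0, 0.0, 0.0, 0.0]])
delta_separated = coding_rate_reduction(separated, labels)
delta_collapsed = coding_rate_reduction(collapsed, labels)
```

In this toy case the reduction is larger when the two classes occupy orthogonal directions than when they share one, which is the sense in which the objective rewards separable subspaces.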

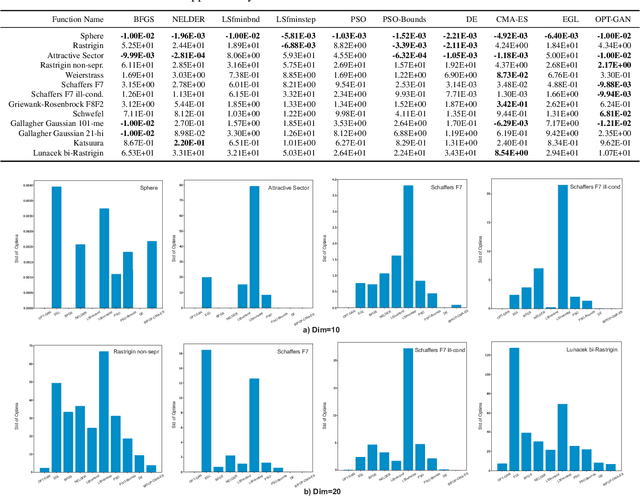

Black-Box Optimization via Generative Adversarial Nets

Feb 07, 2021

Abstract: Black-box optimization (BBO) algorithms are concerned with finding the best solutions for problems whose analytical details are missing. Most classical methods for such problems are based on strong and fixed a priori assumptions, such as a Gaussian distribution. However, many complex real-world problems are far from the a priori distribution, which creates unexpected obstacles for these methods. In this paper, we present an optimizer using generative adversarial nets (OPT-GAN) to guide the search on black-box problems by estimating the distribution of optima. The method learns the extensive distribution of the optimal region dominated by selective candidates. Experiments demonstrate that OPT-GAN outperforms other classical BBO algorithms, in particular the ones with Gaussian assumptions.

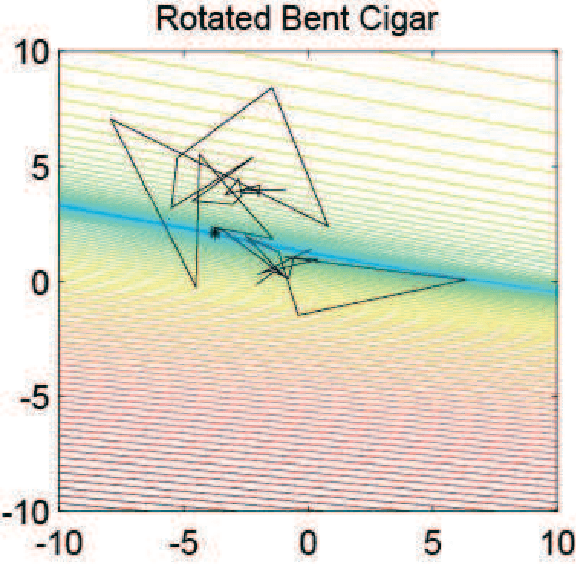

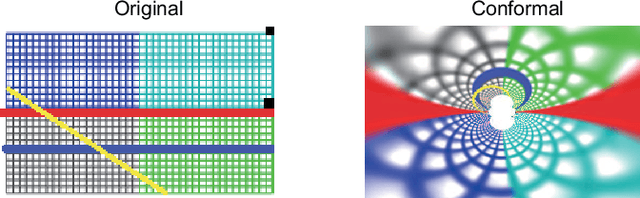

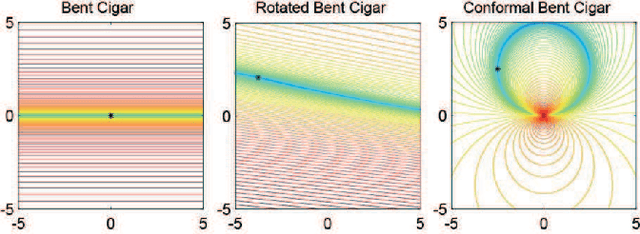

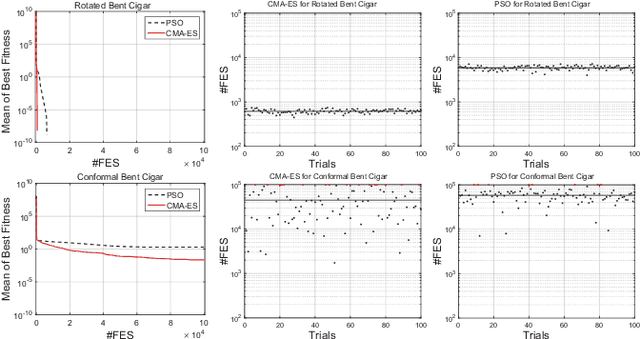

A Novel Graphic Bending Transformation on Benchmark

Apr 21, 2020

Abstract: Classical benchmark problems utilize multiple transformation techniques to increase optimization difficulty, e.g., shift to counter the centering effect and rotation to counter dimension sensitivity. Although they test transformation invariance, such operations do not really change the landscape's "shape" but rather change the "viewpoint". For instance, after rotation, ill-conditioned problems are turned around in terms of orientation but still keep proportional components, which, to some extent, does not create much of an obstacle in optimization. In this paper, inspired by image processing, we investigate a novel graphic conformal mapping transformation on benchmark problems to deform the function shape. The bending operation does not alter the function's basic properties, e.g., a unimodal function can almost maintain its unimodality after being bent, but it can modify the shape of the area of interest in the search space. Experiments indicate that the same optimizer spends more search budget and encounters more failures on the conformally bent functions than on the rotated versions. Several parameters of the proposed function are also analyzed to reveal the performance sensitivity of the evolutionary algorithms.
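The claim that shift and rotation change the "viewpoint" but not the "shape" can be illustrated numerically. The following is a minimal sketch, assuming an ill-conditioned ellipsoid as the base function and a random orthogonal matrix as the rotation; the function names, the condition number of 1e6, and the dimension are illustrative choices, not the benchmark definitions used in the paper, and the bending transformation itself is not implemented here.

```python
import numpy as np

def ellipsoid_scales(n, cond=1e6):
    """Axis scalings growing geometrically from 1 to `cond`."""
    return cond ** (np.arange(n) / (n - 1))

def ellipsoid(x, scales):
    """Ill-conditioned quadratic f(x) = sum_i scales[i] * x[i]^2."""
    return float(np.sum(scales * x ** 2))

def random_orthogonal(n, rng):
    """Random orthogonal matrix from the QR factorization of a Gaussian matrix."""
    Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
    return Q

def shifted_rotated(scales, shift, R):
    """Classical transformation: evaluate the base function at R @ (x - shift)."""
    return lambda x: ellipsoid(R @ (x - shift), scales)

rng = np.random.default_rng(0)
n = 5
scales = ellipsoid_scales(n)
shift = rng.uniform(-1.0, 1.0, size=n)
R = random_orthogonal(n, rng)
f_transformed = shifted_rotated(scales, shift, R)

# Hessians before and after the transformation: 2*D and 2 * R^T D R.
H_base = 2.0 * np.diag(scales)
H_rot = R.T @ H_base @ R
cond_base = np.linalg.cond(H_base)
cond_rot = np.linalg.cond(H_rot)
```

The optimum moves to `shift` and the principal axes are reoriented, yet the Hessian keeps the same eigenvalues, so `cond_base` and `cond_rot` agree up to floating-point error. This is the sense in which the rotated problem still keeps proportional components.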

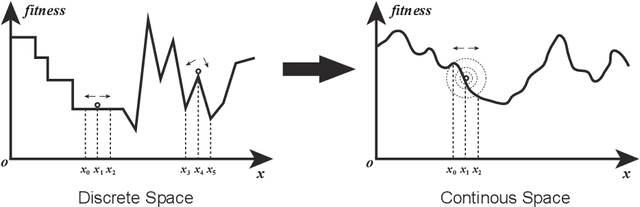

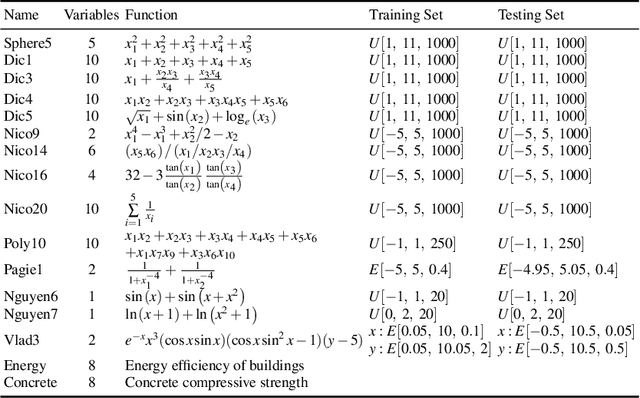

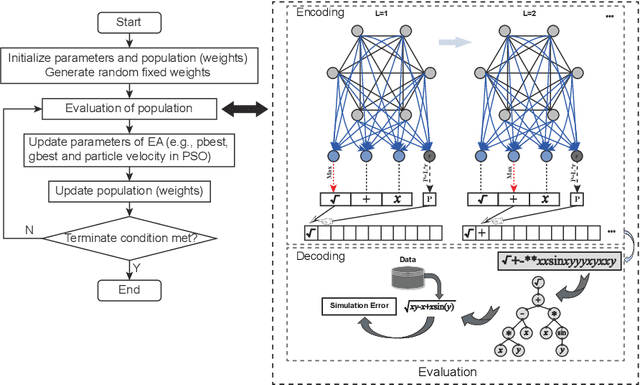

A Novel Continuous Representation of Genetic Programmings using Recurrent Neural Networks for Symbolic Regression

Apr 06, 2019

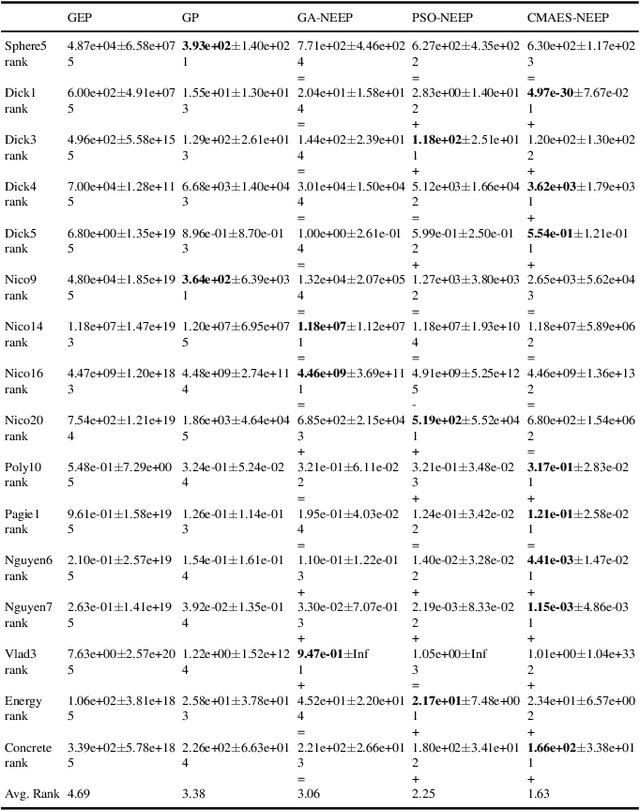

Abstract: This paper proposes neuro-encoded expression programming, which aims to offer a novel continuous representation of combinatorial encoding for genetic programming methods. Genetic programming with linear representation uses nature-inspired operators to tune expressions and ultimately find the best explicit function to fit the data. The encoding mechanism is essential for genetic programming to find a desirable solution efficiently. However, linear representation methods manipulate the expression tree in a discrete solution space, where a small change in the input can cause a large change in the output. The resulting non-smooth landscapes destroy local information and make the search difficult. Neuro-encoded expression programming constructs the gene string with a recurrent neural network (RNN), and the weights of the network are optimized by powerful continuous evolutionary algorithms. The neural network mappings smooth the sharp fitness landscape and provide rich neighborhood information for finding the best expression. The experiments indicate that the novel approach improves test accuracy and efficiency on several well-known symbolic regression problems.
