Black-Box Optimization via Generative Adversarial Nets

Feb 07, 2021

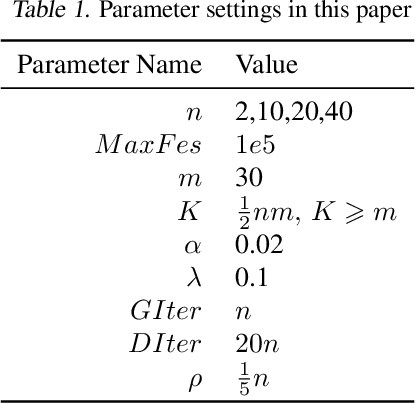

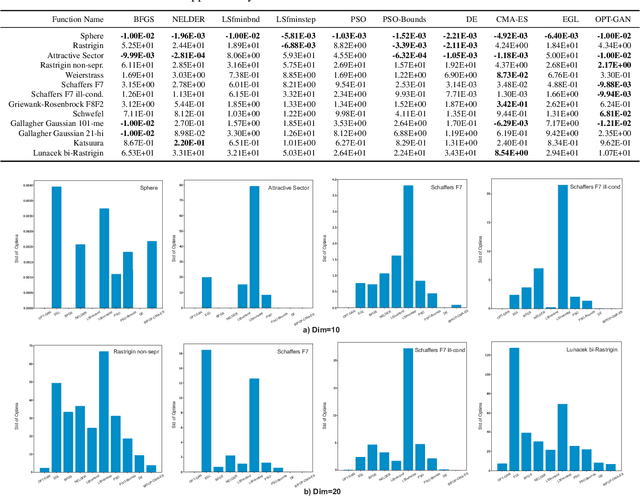

Black-box optimization (BBO) algorithms seek the best solutions to problems whose analytical details are unavailable. Most classical methods for such problems rely on strong, fixed *a priori* assumptions, such as a Gaussian distribution. However, many complex real-world problems deviate substantially from the assumed *a priori* distribution, which creates unexpected obstacles for these methods. In this paper, we present an optimizer using generative adversarial nets (OPT-GAN) that guides the search on black-box problems by estimating the distribution of optima. The method learns a broad distribution of the optimal region, as characterized by selected candidate solutions. Experiments demonstrate that OPT-GAN outperforms other classical BBO algorithms, in particular those with Gaussian assumptions.
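The abstract contrasts OPT-GAN with classical methods that fit a fixed Gaussian to promising solutions. As a hedged illustration of that classical baseline (not of OPT-GAN itself), the sketch below implements a minimal Gaussian estimation-of-distribution loop in pure Python. Each iteration selects the best candidates, fits a per-coordinate Gaussian to them, and samples the next population from it. In OPT-GAN, as the abstract describes it, this fixed Gaussian would be replaced by a GAN generator trained on the selected candidates. The function names (`sphere`, `gaussian_eda`), the search box [-5, 5], and all parameter values are illustrative assumptions.

```python
import random
import statistics
from typing import Callable, List, Sequence

Vector = List[float]


def sphere(x: Sequence[float]) -> float:
    """Toy objective to minimize; the optimizer only ever sees its output."""
    return sum(v * v for v in x)


def gaussian_eda(
    f: Callable[[Sequence[float]], float],
    dim: int,
    pop_size: int = 50,
    elite_frac: float = 0.2,
    iters: int = 60,
    seed: int = 0,
) -> Vector:
    """Classical Gaussian estimation-of-distribution loop (illustrative only).

    The diagonal Gaussian fitted to the elite set plays the role that a
    learned generator would play in a GAN-based optimizer.
    """
    rng = random.Random(seed)
    # Initial population drawn uniformly from a hypothetical box [-5, 5]^dim.
    pop = [[rng.uniform(-5.0, 5.0) for _ in range(dim)] for _ in range(pop_size)]
    n_elite = max(2, int(elite_frac * pop_size))
    best = min(pop, key=f)
    for _ in range(iters):
        # Select the best candidates: they stand in for the optimal region.
        elite = sorted(pop, key=f)[:n_elite]
        if f(elite[0]) < f(best):
            best = elite[0]
        # Fit a per-coordinate Gaussian: the fixed a priori assumption.
        mu = [statistics.fmean(c[d] for c in elite) for d in range(dim)]
        # A tiny floor keeps the sampling width strictly positive.
        sigma = [statistics.pstdev([c[d] for c in elite]) + 1e-12 for d in range(dim)]
        # Sample the next population from the fitted distribution.
        pop = [[rng.gauss(mu[d], sigma[d]) for d in range(dim)] for _ in range(pop_size)]
    # The last sampled population has not been checked yet.
    return min(pop + [best], key=f)
```

For example, `gaussian_eda(sphere, dim=3)` returns a point near the origin. Swapping the Gaussian fit for a more expressive generative model is the step OPT-GAN targets, since a single Gaussian cannot represent multimodal or irregularly shaped optimal regions.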
