Lizhi Peng

ESS-ReduNet: Enhancing Subspace Separability of ReduNet via Dynamic Expansion with Bayesian Inference

Nov 27, 2024

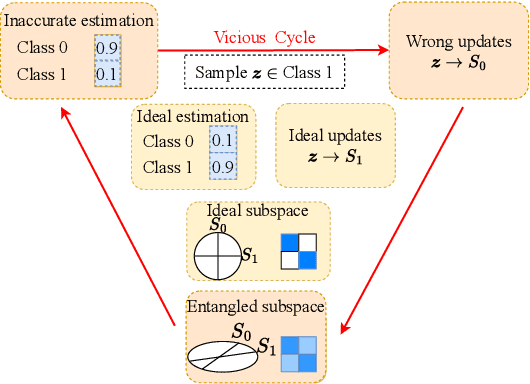

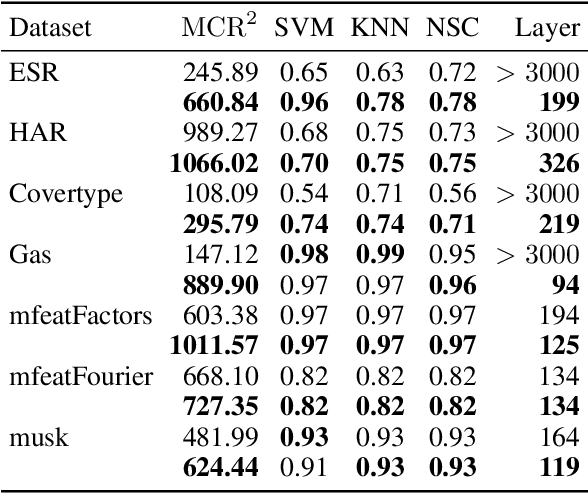

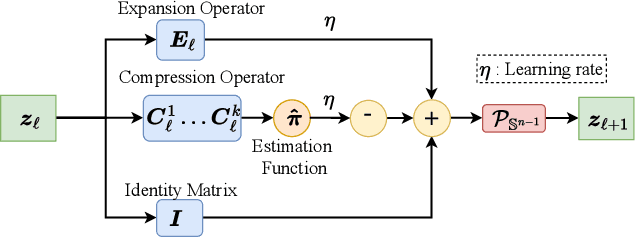

Abstract: ReduNet is a deep neural network that applies the principle of maximal coding rate reduction, from which it takes its name, to transform raw data samples into low-dimensional, linear discriminative feature representations. Unlike conventional deep learning frameworks, ReduNet constructs its parameters explicitly, layer by layer, deriving each layer's parameters from the features produced by the preceding layer. Rather than using labels directly, ReduNet updates features at each layer according to the similarity between the data samples and the subspace spanned by each category. This can push features in the wrong direction, which impairs the correct construction of network parameters and slows convergence. To address this issue, and building on a geometric interpretation of the network parameters, this paper presents ESS-ReduNet, which enhances the separability of each category's subspace by dynamically controlling the expansion of the overall space spanned by the samples. In addition, label knowledge is incorporated through Bayesian inference to encourage the decoupling of subspaces. Finally, stability, measured by the condition number, serves as an auxiliary criterion for halting training. Experiments on the ESR, HAR, Covertype, and Gas datasets show that ESS-ReduNet achieves more than a 10x improvement in convergence speed over ReduNet. Notably, on the ESR dataset, the features transformed by ESS-ReduNet yield a 47% improvement in SVM classification accuracy.
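For readers unfamiliar with the objective, the following is a minimal NumPy sketch of the coding rate reduction ΔR = R(Z, ε) − R_c(Z, ε | Π) from the maximal coding rate reduction (MCR²) formulation that ReduNet builds on. It assumes samples are stored as the columns of Z (shape d × m), hard class labels, and a distortion ε = 0.5 chosen only for illustration; the function names are hypothetical. It does not implement ESS-ReduNet's dynamic expansion control, Bayesian label inference, or condition-number stopping criterion.

```python
import numpy as np


def coding_rate(Z: np.ndarray, eps: float) -> float:
    """R(Z, eps) = 1/2 logdet(I + d / (m eps^2) Z Z^T) for Z of shape (d, m)."""
    d, m = Z.shape
    gram = np.eye(d) + (d / (m * eps**2)) * (Z @ Z.T)
    _, logdet = np.linalg.slogdet(gram)  # gram is positive definite, so sign is +1
    return 0.5 * logdet


def compressed_coding_rate(Z: np.ndarray, labels: np.ndarray, eps: float) -> float:
    """Sum of per-class coding rates, each weighted by the class's share of samples."""
    d, m = Z.shape
    total = 0.0
    for c in np.unique(labels):
        Zc = Z[:, labels == c]  # columns (samples) belonging to class c
        mc = Zc.shape[1]
        gram = np.eye(d) + (d / (mc * eps**2)) * (Zc @ Zc.T)
        _, logdet = np.linalg.slogdet(gram)
        total += (mc / (2 * m)) * logdet
    return total


def rate_reduction(Z: np.ndarray, labels: np.ndarray, eps: float = 0.5) -> float:
    """Delta R = R(Z) - R_c(Z | labels); larger when classes span separate subspaces."""
    return coding_rate(Z, eps) - compressed_coding_rate(Z, labels, eps)


if __name__ == "__main__":
    labels = np.array([0, 0, 1, 1])
    # Columns are samples: both classes on one axis vs. on orthogonal axes.
    same_axis = np.array([[1.0, 1.0, 1.0, 1.0], [0.0, 0.0, 0.0, 0.0]])
    orthogonal = np.array([[1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]])
    print(rate_reduction(same_axis, labels))   # ≈ 0
    print(rate_reduction(orthogonal, labels))  # ≈ 0.511, i.e. log(5/3)
```

The toy example shows why subspace separability matters: when both classes lie on the same axis, ΔR is zero, and it becomes positive once the classes occupy orthogonal directions.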

Video traffic identification with novel feature extraction and selection method

Mar 06, 2023

Abstract: In recent years, the rapid rise of video applications has led to an explosion of Internet video traffic, posing severe challenges to network management. Effectively identifying and managing video traffic has therefore become an urgent problem. However, existing video traffic feature extraction methods mainly target traditional packet-level and flow-level features, and their video traffic identification accuracy is low. Video traffic identification also often involves high-dimensional data, which calls for an effective way to select the features most relevant to the identification task. Although numerous studies have used feature selection to improve identification performance, none has focused on measuring feature distributions so as to find features whose class distributions do not overlap or overlap only slightly. First, this study extracts video-related features to construct a large-scale feature set for identifying video traffic. Second, to reduce the cost of video traffic identification and select an effective feature subset, it proposes an adaptive distribution distance-based feature selection (ADDFS) method, which uses the Wasserstein distance to measure the distance between feature distributions. To test the effectiveness of the proposal, we collected video traffic from different platforms in a campus network environment and conducted a set of experiments on these datasets. Experimental results suggest that the proposed method achieves high identification performance for both video scene traffic and cloud gaming video traffic. Lastly, a comparison of ADDFS with other feature selection methods shows that ADDFS is a practical feature selection technique not only for video traffic identification but also for general classification tasks.
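The abstract does not describe how ADDFS adapts its scoring, so the following is only a simplified stand-in for the underlying idea, not the ADDFS algorithm: score each feature by the Wasserstein distance between its class-conditional distributions, so that features whose distributions overlap little score highly, then keep the top k. It assumes numeric features, z-score standardization, and the mean pairwise first-order (W1) distance across classes; the function names are hypothetical.

```python
import numpy as np


def wasserstein_1d(u, v) -> float:
    """W1 distance between two 1-D empirical samples, computed as the
    area between their empirical CDFs."""
    u = np.sort(np.asarray(u, dtype=float))
    v = np.sort(np.asarray(v, dtype=float))
    grid = np.sort(np.concatenate([u, v]))
    widths = np.diff(grid)
    # Empirical CDF of each sample at the left edge of every grid interval.
    u_cdf = np.searchsorted(u, grid[:-1], side="right") / u.size
    v_cdf = np.searchsorted(v, grid[:-1], side="right") / v.size
    return float(np.sum(np.abs(u_cdf - v_cdf) * widths))


def rank_features_by_wasserstein(X, y, k):
    """Score each column of X by the mean pairwise W1 distance between its
    class-conditional distributions; return the top-k indices and all scores."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    # Standardize so distances are comparable across features with different units.
    std = X.std(axis=0)
    std[std == 0] = 1.0
    Xs = (X - X.mean(axis=0)) / std
    classes = np.unique(y)
    scores = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        dists = [
            wasserstein_1d(Xs[y == a, j], Xs[y == b, j])
            for i, a in enumerate(classes)
            for b in classes[i + 1:]
        ]
        scores[j] = np.mean(dists) if dists else 0.0
    order = np.argsort(-scores, kind="stable")  # highest score first
    return order[:k], scores


if __name__ == "__main__":
    # Toy data: feature 0 separates the two classes, feature 1 does not.
    X = np.array([[0, 0], [1, 1], [2, 2], [10, 0], [11, 1], [12, 2]], dtype=float)
    y = np.array([0, 0, 0, 1, 1, 1])
    selected, scores = rank_features_by_wasserstein(X, y, k=1)
    print(selected)  # [0]
```

`scipy.stats.wasserstein_distance` computes the same 1-D distance; it is written out with NumPy here only to keep the sketch self-contained.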