Felipe Moraes

Qini curve estimation under clustered network interference

Feb 27, 2025

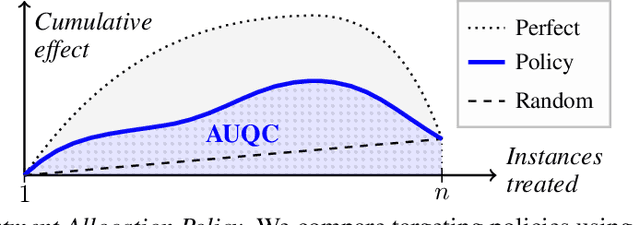

Abstract: Qini curves are a widely used tool for assessing treatment policies under allocation constraints as they visualize the incremental gain of a new treatment policy versus the cost of its implementation. Standard Qini curve estimation assumes no interference between units: that is, that treating one unit does not influence the outcome of any other unit. In many real-life applications such as public policy or marketing, however, the presence of interference is common. Ignoring interference in these scenarios can lead to systematically biased Qini curves that over- or under-estimate a treatment policy's cost-effectiveness. In this paper, we address the problem of Qini curve estimation under clustered network interference, where interfering units form independent clusters. We propose a formal description of the problem setting with an experimental study design under which we can account for clustered network interference. Within this framework, we introduce three different estimation strategies suited for different conditions. Moreover, we introduce a marketplace simulator that emulates clustered network interference in a typical e-commerce setting. From both theoretical and empirical insights, we provide recommendations in choosing the best estimation strategy by identifying an inherent bias-variance trade-off among the estimation strategies.
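
For readers unfamiliar with the term, the following is a minimal sketch of a standard Qini curve computed from a randomized experiment. It assumes no interference between units, so it illustrates the baseline estimator that the abstract describes as biased under interference, not any of the three strategies proposed in the paper. The function name `qini_curve`, its arguments, and the unit-cost assumption (cost equals the number of units targeted) are hypothetical choices made for illustration.

```python
def qini_curve(scores, treated, outcomes):
    """Return a list of (units_targeted, incremental_gain) points.

    Assumptions (illustrative, not from the paper):
    - data come from a randomized experiment with no interference,
    - each targeted unit costs one unit of budget,
    - units are targeted in descending order of `scores`.
    """
    # Rank units from highest to lowest predicted score.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)

    n_t = n_c = 0        # treated / control counts among the top-k units
    y_t = y_c = 0.0      # summed outcomes of treated / control units
    curve = [(0, 0.0)]   # the curve starts at the origin

    for k, i in enumerate(order, start=1):
        if treated[i]:
            n_t += 1
            y_t += outcomes[i]
        else:
            n_c += 1
            y_c += outcomes[i]
        # Rescale control outcomes to the treated group size before
        # subtracting; with no control units yet, subtract nothing.
        baseline = y_c * n_t / n_c if n_c else 0.0
        curve.append((k, y_t - baseline))
    return curve


# Toy data: six units, alternating treatment assignment.
scores = [0.9, 0.8, 0.7, 0.6, 0.2, 0.1]
treated = [1, 0, 1, 0, 1, 0]
outcomes = [1, 0, 1, 1, 0, 0]
curve = qini_curve(scores, treated, outcomes)
```

Each point gives the estimated incremental outcome from treating the top-k ranked units. When units within a cluster influence one another, the control outcomes used as the baseline are themselves affected by treatment, which is one way the bias mentioned in the abstract can arise.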

Metalearners for Ranking Treatment Effects

May 03, 2024

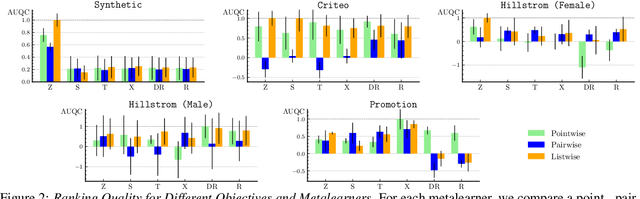

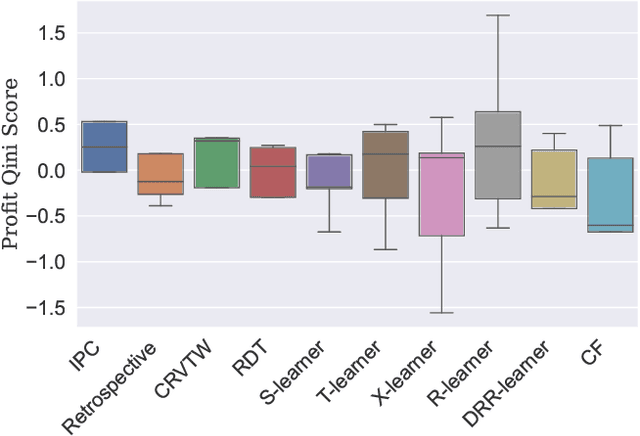

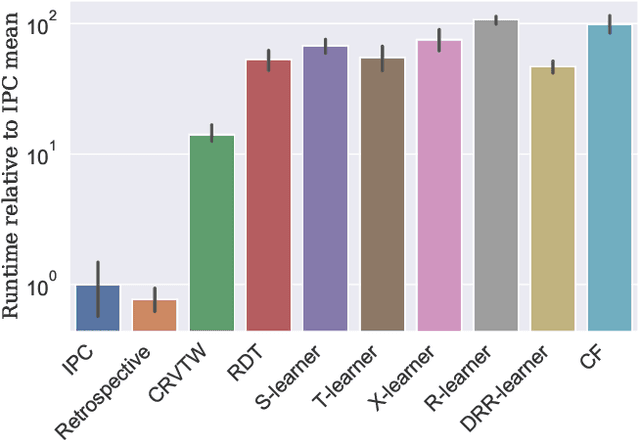

Abstract: Efficiently allocating treatments with a budget constraint constitutes an important challenge across various domains. In marketing, for example, the use of promotions to target potential customers and boost conversions is limited by the available budget. While much research focuses on estimating causal effects, there is relatively limited work on learning to allocate treatments while considering the operational context. Existing methods for uplift modeling or causal inference primarily estimate treatment effects, without considering how this relates to a profit maximizing allocation policy that respects budget constraints. The potential downside of using these methods is that the resulting predictive model is not aligned with the operational context. Therefore, prediction errors are propagated to the optimization of the budget allocation problem, subsequently leading to a suboptimal allocation policy. We propose an alternative approach based on learning to rank. Our proposed methodology directly learns an allocation policy by prioritizing instances in terms of their incremental profit. We propose an efficient sampling procedure for the optimization of the ranking model to scale our methodology to large-scale data sets. Theoretically, we show how learning to rank can maximize the area under a policy's incremental profit curve. Empirically, we validate our methodology and show its effectiveness in practice through a series of experiments on both synthetic and real-world data.

Uplift Modeling: from Causal Inference to Personalization

Aug 17, 2023

Abstract: Uplift modeling is a collection of machine learning techniques for estimating causal effects of a treatment at the individual or subgroup levels. Over the last years, causality and uplift modeling have become key trends in personalization at online e-commerce platforms, enabling the selection of the best treatment for each user in order to maximize the target business metric. Uplift modeling can be particularly useful for personalized promotional campaigns, where the potential benefit caused by a promotion needs to be weighed against the potential costs. In this tutorial we will cover basic concepts of causality and introduce the audience to state-of-the-art techniques in uplift modeling. We will discuss the advantages and the limitations of different approaches and dive into the unique setup of constrained uplift modeling. Finally, we will present real-life applications and discuss challenges in implementing these models in production.

Incremental Profit per Conversion: a Response Transformation for Uplift Modeling in E-Commerce Promotions

Jun 23, 2023

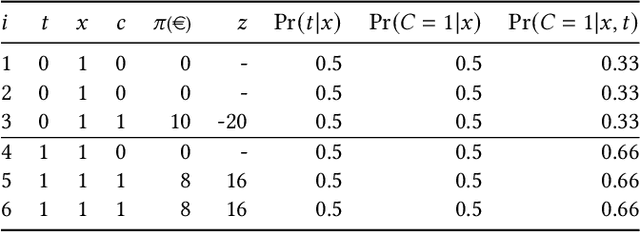

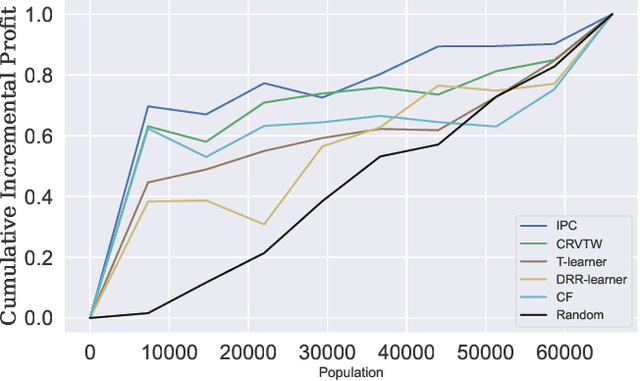

Abstract: Promotions play a crucial role in e-commerce platforms, and various cost structures are employed to drive user engagement. This paper focuses on promotions with response-dependent costs, where expenses are incurred only when a purchase is made. Such promotions include discounts and coupons. While existing uplift model approaches aim to address this challenge, these approaches often necessitate training multiple models, like meta-learners, or encounter complications when estimating profit due to zero-inflated values stemming from non-converted individuals with zero cost and profit. To address these challenges, we introduce Incremental Profit per Conversion (IPC), a novel uplift measure of promotional campaigns' efficiency in unit economics. Through a proposed response transformation, we demonstrate that IPC requires only converted data, its propensity, and a single model to be estimated. As a result, IPC resolves the issues mentioned above while mitigating the noise typically associated with the class imbalance in conversion datasets and biases arising from the many-to-one mapping between search and purchase data. Lastly, we validate the efficacy of our approach by presenting results obtained from a synthetic simulation of a discount coupon campaign.

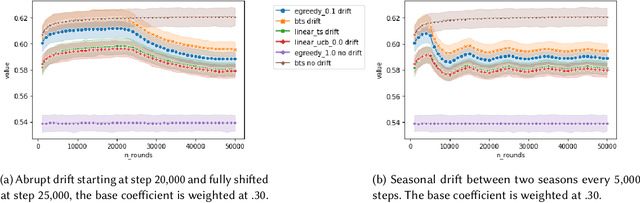

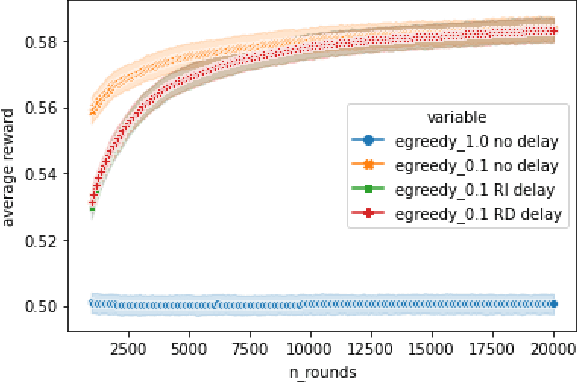

Extending Open Bandit Pipeline to Simulate Industry Challenges

Sep 09, 2022

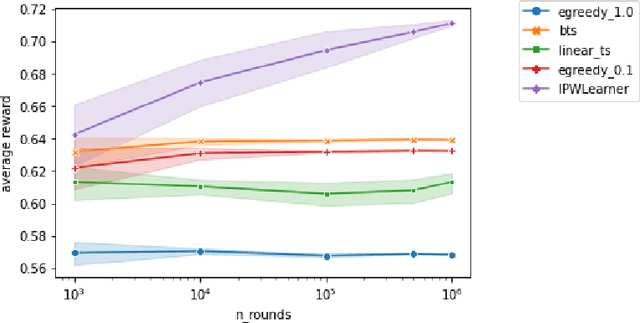

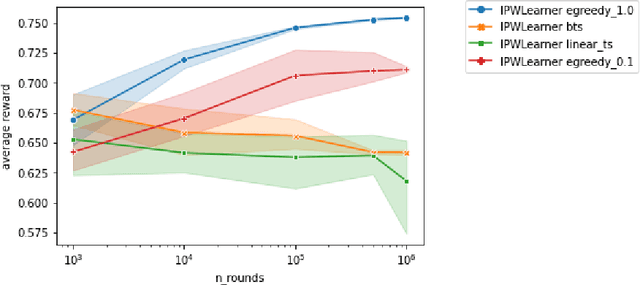

Abstract: Bandit algorithms are often used in the e-commerce industry to train Machine Learning (ML) systems when pre-labeled data is unavailable. However, the industry setting poses various challenges that make implementing bandit algorithms in practice non-trivial. In this paper, we elaborate on the challenges of off-policy optimisation, delayed reward, concept drift, reward design, and business rules constraints that practitioners at Booking.com encounter when applying bandit algorithms. Our main contribution is an extension to the Open Bandit Pipeline (OBP) framework. We provide simulation components for some of the above-mentioned challenges to provide future practitioners, researchers, and educators with a resource to address challenges encountered in the e-commerce industry.
