Fei He

SegRap2025: A Benchmark of Gross Tumor Volume and Lymph Node Clinical Target Volume Segmentation for Radiotherapy Planning of Nasopharyngeal Carcinoma

Jan 28, 2026

Abstract:Accurate delineation of Gross Tumor Volume (GTV), Lymph Node Clinical Target Volume (LN CTV), and Organ-at-Risk (OAR) from Computed Tomography (CT) scans is essential for precise radiotherapy planning in Nasopharyngeal Carcinoma (NPC). Building upon SegRap2023, which focused on OAR and GTV segmentation using single-center paired non-contrast CT (ncCT) and contrast-enhanced CT (ceCT) scans, the SegRap2025 challenge aims to enhance the generalizability and robustness of segmentation models across imaging centers and modalities. SegRap2025 comprises two tasks: Task01 addresses GTV segmentation using paired CT from the SegRap2023 dataset, with an additional external testing set to evaluate cross-center generalization, and Task02 focuses on LN CTV segmentation using multi-center training data and an unseen external testing set, where each case contains paired CT scans or a single modality, emphasizing both cross-center and cross-modality robustness. This paper presents the challenge setup and provides a comprehensive analysis of the solutions submitted by ten participating teams. For the GTV segmentation task, the top-performing models achieved average Dice Similarity Coefficients (DSC) of 74.61% and 56.79% on the internal and external testing cohorts, respectively. For the LN CTV segmentation task, the highest average DSC values reached 60.24%, 60.50%, and 57.23% on the paired CT, ceCT-only, and ncCT-only subsets, respectively. SegRap2025 establishes a large-scale multi-center, multi-modality benchmark for evaluating generalization and robustness in radiotherapy target segmentation, providing valuable insights toward clinically applicable automated radiotherapy planning systems. The benchmark is available at: https://hilab-git.github.io/SegRap2025_Challenge.
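
For readers unfamiliar with the DSC figures quoted above, the following is a minimal sketch of how a Dice Similarity Coefficient can be computed for two binary masks. The function name `dice_coefficient`, the flat 0/1 mask representation, and the empty-mask convention are assumptions made for illustration; the challenge's actual evaluation code and protocol are not described in the abstract.

```python
def dice_coefficient(pred, target):
    """Dice Similarity Coefficient between two binary masks.

    Both masks are flat sequences of 0/1 voxel labels (an illustrative
    representation; real pipelines usually work on 3D arrays).
    """
    if len(pred) != len(target):
        raise ValueError("masks must contain the same number of voxels")
    # Voxels labelled foreground in both masks.
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    # Total foreground voxels across the two masks.
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    if total == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0
    return 2.0 * intersection / total


pred = [0, 1, 1, 1, 0, 0]
target = [0, 0, 1, 1, 1, 0]
print(f"DSC = {100 * dice_coefficient(pred, target):.2f}%")
```

In this toy case the masks share two of their three foreground voxels each, so the DSC is 2·2 / (3 + 3), roughly 66.67%.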

AlignDrive: Aligned Lateral-Longitudinal Planning for End-to-End Autonomous Driving

Jan 05, 2026

Abstract:End-to-end autonomous driving has rapidly progressed, enabling joint perception and planning in complex environments. In the planning stage, state-of-the-art (SOTA) end-to-end autonomous driving models decouple planning into parallel lateral and longitudinal predictions. While effective, this parallel design can lead to i) coordination failures between the planned path and speed, and ii) underutilization of the drive path as a prior for longitudinal planning, thus redundantly encoding static information. To address this, we propose a novel cascaded framework that explicitly conditions longitudinal planning on the drive path, enabling coordinated and collision-aware lateral and longitudinal planning. Specifically, we introduce a path-conditioned formulation that explicitly incorporates the drive path into longitudinal planning. Building on this, the model predicts longitudinal displacements along the drive path rather than full 2D trajectory waypoints. This design simplifies longitudinal reasoning and more tightly couples it with lateral planning. Additionally, we introduce a planning-oriented data augmentation strategy that simulates rare safety-critical events, such as vehicle cut-ins, by adding agents and relabeling longitudinal targets to avoid collision. Evaluated on the challenging Bench2Drive benchmark, our method sets a new SOTA, achieving a driving score of 89.07 and a success rate of 73.18%, demonstrating significantly improved coordination and safety.
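
The idea of predicting displacements along a drive path, rather than free 2D waypoints, can be illustrated with a small geometric sketch. It assumes the path is a polyline of `(x, y)` points and that each predicted displacement is an arc length measured from the start of the path; the function `point_along_path` is hypothetical and is not taken from the AlignDrive implementation.

```python
import math


def point_along_path(path, s):
    """Return the 2D point at arc length s along a polyline path.

    path: list of (x, y) vertices; s: distance travelled from path[0].
    Distances beyond the end of the path are clamped to the last vertex.
    """
    if len(path) < 2:
        raise ValueError("path needs at least two points")
    s = max(0.0, s)
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        seg = math.hypot(x1 - x0, y1 - y0)
        if seg > 0 and s <= seg:
            r = s / seg  # fraction of the current segment covered
            return (x0 + r * (x1 - x0), y0 + r * (y1 - y0))
        s -= seg  # move on to the next segment
    return path[-1]


drive_path = [(0.0, 0.0), (3.0, 0.0), (3.0, 4.0)]
displacements = [1.0, 5.0, 10.0]  # hypothetical longitudinal outputs
waypoints = [point_along_path(drive_path, s) for s in displacements]
print(waypoints)
```

Because every waypoint is obtained by walking along the path, the resulting trajectory cannot leave the planned lateral geometry, which is one way to read the coupling described in the abstract.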

Retrieving Filter Spectra in CNN for Explainable Sleep Stage Classification

Feb 10, 2025

Abstract:Despite significant advances in deep learning-based sleep stage classification, the clinical adoption of automatic classification models remains slow. One key challenge is the lack of explainability, as many models function as black boxes with millions of parameters. In response, recent work has increasingly focussed on enhancing model explainability. This study contributes to these efforts by globally explaining spectral processing of individual EEG channels. Specifically, we introduce a method to retrieve the filter spectrum of low-level convolutional feature extraction and compare it with the classification-relevant spectral information in the data. We evaluate our approach on the MSA-CNN model using the ISRUC-S3 and Sleep-EDF-20 datasets. Our findings show that spectral processing plays a significant role in the lower frequency bands. In addition, comparing the correlation between filter spectrum and data-based spectral information with univariate performance indicates that the model naturally prioritises the most informative channels in a multimodal setting. We specify how these insights can be leveraged to enhance model performance. The code for the filter spectrum retrieval and its analysis is available at https://github.com/sgoerttler/MSA-CNN.

MSA-CNN: A Lightweight Multi-Scale CNN with Attention for Sleep Stage Classification

Jan 06, 2025

Abstract:Recent advancements in machine learning-based signal analysis, coupled with open data initiatives, have fuelled efforts in automatic sleep stage classification. Despite the proliferation of classification models, few have prioritised reducing model complexity, which is a crucial factor for practical applications. In this work, we introduce Multi-Scale and Attention Convolutional Neural Network (MSA-CNN), a lightweight architecture featuring as few as ~10,000 parameters. MSA-CNN leverages a novel multi-scale module employing complementary pooling to eliminate redundant filter parameters and dense convolutions. Model complexity is further reduced by separating temporal and spatial feature extraction and using cost-effective global spatial convolutions. This separation of tasks not only reduces model complexity but also mirrors the approach used by human experts in sleep stage scoring. We evaluated both small and large configurations of MSA-CNN against nine state-of-the-art baseline models across three public datasets, treating univariate and multivariate models separately. Our evaluation, based on repeated cross-validation and re-evaluation of all baseline models, demonstrated that the large MSA-CNN outperformed all baseline models on all three datasets in terms of accuracy and Cohen's kappa, despite its significantly reduced parameter count. Lastly, we explored various model variants and conducted an in-depth analysis of the key modules and techniques, providing deeper insights into the underlying mechanisms. The code for our models, baselines, and evaluation procedures is available at https://github.com/sgoerttler/MSA-CNN.
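
Since the comparison above is reported in terms of accuracy and Cohen's kappa, a minimal sketch of the kappa statistic may help. It uses the standard definition (observed agreement corrected for chance agreement); the function name `cohens_kappa` and the toy sleep-stage labels are illustrative assumptions and are not taken from the MSA-CNN repository.

```python
from collections import Counter


def cohens_kappa(y_true, y_pred):
    """Cohen's kappa between reference labels and predicted labels."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("label sequences must be non-empty and equal length")
    # Observed agreement: fraction of epochs labelled identically.
    observed = sum(1 for a, b in zip(y_true, y_pred) if a == b) / n
    # Chance agreement from the marginal label frequencies.
    true_counts = Counter(y_true)
    pred_counts = Counter(y_pred)
    expected = sum(true_counts[c] * pred_counts[c] for c in true_counts) / (n * n)
    if expected == 1.0:
        # Degenerate case: only one class is present in both sequences.
        return 1.0
    return (observed - expected) / (1.0 - expected)


y_true = ["W", "N1", "N2", "N2", "N3", "REM", "REM", "W"]
y_pred = ["W", "N2", "N2", "N2", "N3", "REM", "N1", "W"]
print(f"kappa = {cohens_kappa(y_true, y_pred):.2f}")
```

Here six of eight epochs agree (accuracy 0.75), chance agreement is 14/64, and the resulting kappa is 0.68, which shows how kappa discounts agreement that the class frequencies alone would produce.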

EEG-GMACN: Interpretable EEG Graph Mutual Attention Convolutional Network

Dec 15, 2024

Abstract:Electroencephalography (EEG) is a valuable technique for recording the brain's electrical activity through electrodes placed on the scalp. Analyzing EEG signals contributes to the understanding of neurological conditions and to the development of brain-computer interfaces. Graph Signal Processing (GSP) has emerged as a promising method for EEG spatial-temporal analysis, as it additionally considers the topological relationships between electrodes. However, existing GSP studies offer neither an interpretable measure of electrode importance nor credible prediction confidence. This work proposes an EEG Graph Mutual Attention Convolutional Network (EEG-GMACN), which introduces an 'Inverse Graph Weight Module' to output interpretable electrode graph weights, enhancing the clinical credibility and interpretability of EEG classification results. Additionally, we incorporate a mutual attention mechanism module into the model to improve its capability to distinguish critical electrodes, and we introduce credibility calibration to assess the uncertainty of prediction results. This study enhances the transparency and effectiveness of EEG analysis, paving the way for its widespread use in clinical and neuroscience research.

NonSysId: A nonlinear system identification package with improved model term selection for NARMAX models

Nov 25, 2024

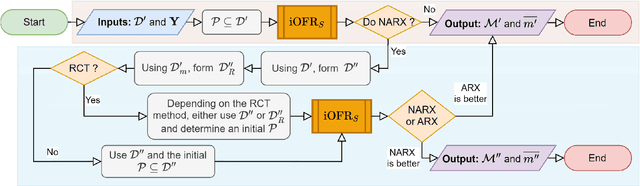

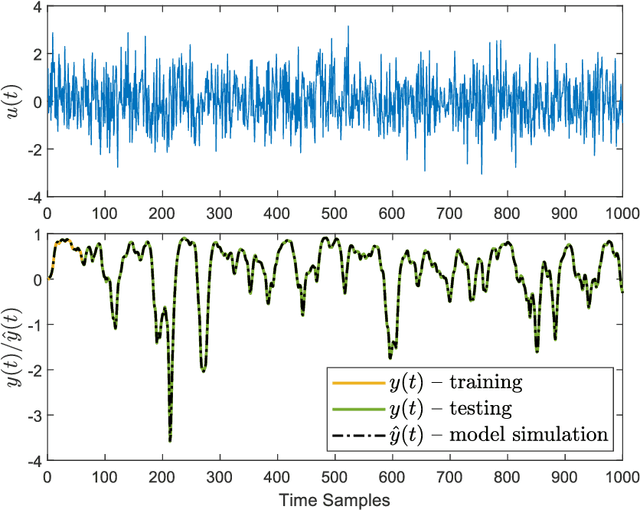

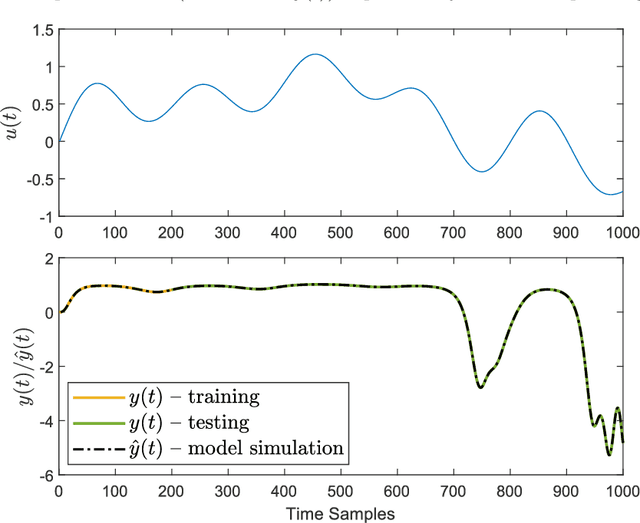

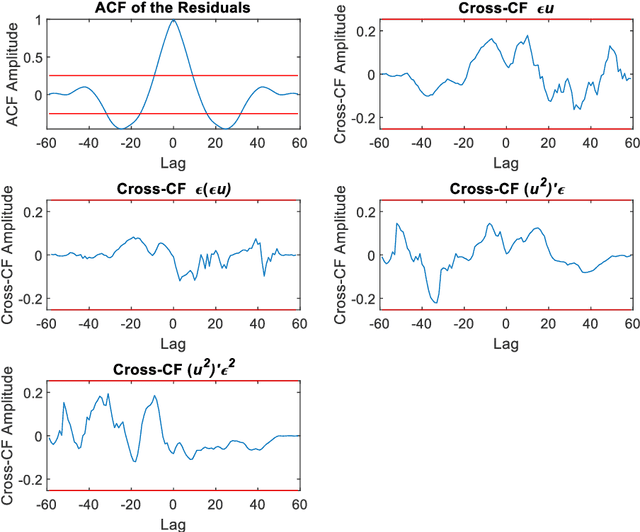

Abstract:System identification involves constructing mathematical models of dynamic systems using input-output data, enabling analysis and prediction of system behaviour in both time and frequency domains. This approach can model the entire system or capture specific dynamics within it. For meaningful analysis, it is essential for the model to accurately reflect the underlying system's behaviour. This paper introduces NonSysId, an open-source MATLAB software package designed for nonlinear system identification, specifically focusing on NARMAX models. The software incorporates an advanced term selection methodology that prioritises simulation (free-run) accuracy while preserving model parsimony. A key feature is the integration of iterative Orthogonal Forward Regression (iOFR) with Predicted Residual Sum of Squares (PRESS) statistic-based term selection, facilitating robust model generalisation without the need for a separate validation dataset. Furthermore, techniques for reducing computational overheads are implemented. These features make NonSysId particularly suitable for real-time applications such as structural health monitoring, fault diagnosis, and biomedical signal processing, where it is a challenge to capture the signals under consistent conditions, resulting in limited or no validation data.

Balancing Spectral, Temporal and Spatial Information for EEG-based Alzheimer's Disease Classification

Feb 21, 2024

Abstract:The prospect of future treatment warrants the development of cost-effective screening for Alzheimer's disease (AD). A promising candidate in this regard is electroencephalography (EEG), as it is one of the most economical imaging modalities. Recent efforts in EEG analysis have shifted towards leveraging spatial information, employing novel frameworks such as graph signal processing or graph neural networks. Here, we systematically investigate the importance of spatial information relative to spectral or temporal information by varying the proportion of each dimension for AD classification. To do so, we test various dimension resolution configurations on two routine EEG datasets. We find that spatial information is consistently more relevant than temporal information and as relevant as spectral information. These results emphasise the necessity to consider spatial information for EEG-based AD classification. On our second dataset, we further find that well-balanced feature resolutions boost classification accuracy by up to 1.6%. Our resolution-based feature extraction has the potential to improve AD classification specifically, and multivariate signal classification generally.

Stochastic Graph Heat Modelling for Diffusion-based Connectivity Retrieval

Feb 20, 2024

Abstract:Heat diffusion describes the process by which heat flows from areas with higher temperatures to ones with lower temperatures. This concept was previously adapted to graph structures, whereby heat flows between nodes of a graph depending on the graph topology. Here, we combine the graph heat equation with the stochastic heat equation, which ultimately yields a model for multivariate time signals on a graph. We show theoretically how the model can be used to directly compute the diffusion-based connectivity structure from multivariate signals. Unlike other connectivity measures, our heat model-based approach is inherently multivariate and yields an absolute scaling factor, namely the graph thermal diffusivity, which captures the extent of heat-like graph propagation in the data. On two datasets, we show how the graph thermal diffusivity can be used to characterise Alzheimer's disease. We find that the graph thermal diffusivity is lower for Alzheimer's patients than healthy controls and correlates with dementia scores, suggesting structural impairment in patients in line with previous findings.
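
As background for the graph heat equation mentioned above, the sketch below simulates deterministic heat flow on a small graph using the common form dx/dt = -α L x, where L is the graph Laplacian and α plays the role of a thermal diffusivity. This covers only the deterministic part; the stochastic term and the connectivity retrieval described in the abstract are not reproduced. The function names, the explicit Euler scheme, and the three-node path graph are assumptions chosen for illustration.

```python
def graph_laplacian(adjacency):
    """Combinatorial Laplacian L = D - A of a weighted, undirected graph."""
    n = len(adjacency)
    return [
        [(sum(adjacency[i]) if i == j else 0.0) - adjacency[i][j] for j in range(n)]
        for i in range(n)
    ]


def heat_step(x, laplacian, diffusivity, dt):
    """One explicit Euler step of dx/dt = -diffusivity * L x."""
    n = len(x)
    return [
        x[i] - dt * diffusivity * sum(laplacian[i][j] * x[j] for j in range(n))
        for i in range(n)
    ]


# Path graph 0 - 1 - 2 with all heat initially on node 0.
adjacency = [
    [0.0, 1.0, 0.0],
    [1.0, 0.0, 1.0],
    [0.0, 1.0, 0.0],
]
laplacian = graph_laplacian(adjacency)
x = [1.0, 0.0, 0.0]
for _ in range(2000):  # 2000 steps of dt = 0.01, i.e. t = 20
    x = heat_step(x, laplacian, diffusivity=1.0, dt=0.01)
print([round(v, 4) for v in x])
```

Heat spreads along the edges until every node holds the same amount, while the total stays constant. A larger diffusivity reaches this equilibrium faster, which gives some intuition for why the diffusivity can act as a scaling factor for graph propagation.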

Deep Automated Mechanism Design for Integrating Ad Auction and Allocation in Feed

Jan 03, 2024

Abstract:E-commerce platforms usually present an ordered list, mixed with several organic items and an advertisement, in response to each user's page view request. This list, the outcome of ad auction and allocation processes, directly impacts the platform's ad revenue and gross merchandise volume (GMV). Specifically, the ad auction determines which ad is displayed and the corresponding payment, while the ad allocation decides the display positions of the advertisement and organic items. The prevalent methods of segregating the ad auction and allocation into two distinct stages face two problems: 1) Ad auction does not consider externalities, such as the influence of actual display position and context on ad Click-Through Rate (CTR); 2) The ad allocation, which utilizes the auction-winning ad's payment to determine the display position dynamically, fails to maintain incentive compatibility (IC) for the advertisement. For instance, in the auction stage employing the traditional Generalized Second Price (GSP), even if the winning ad increases its bid, its payment remains unchanged. This implies that the advertisement cannot secure a better position and thus loses the opportunity to achieve higher utility in the subsequent ad allocation stage. Previous research often focused on one of the two stages, neglecting the two-stage problem, which may result in suboptimal outcomes...
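
The GSP observation in the abstract above (a winner's payment does not change when it raises its bid) can be checked with a small sketch. It assumes a single ad slot and ranks purely by bid, so the winner pays the second-highest bid; production systems typically rank by bid weighted with predicted CTR, which is omitted here. The function `gsp_single_slot` and the advertiser names are hypothetical.

```python
def gsp_single_slot(bids):
    """Single-slot second-price auction.

    bids: dict mapping advertiser name to bid.
    Returns (winner, payment), where payment is the second-highest bid.
    """
    if len(bids) < 2:
        raise ValueError("need at least two bidders")
    ranked = sorted(bids.items(), key=lambda kv: kv[1], reverse=True)
    winner = ranked[0][0]
    payment = ranked[1][1]  # price set by the runner-up's bid
    return winner, payment


baseline = gsp_single_slot({"ad_a": 5.0, "ad_b": 3.0, "ad_c": 2.0})
raised = gsp_single_slot({"ad_a": 8.0, "ad_b": 3.0, "ad_c": 2.0})
print(baseline, raised)
```

Both calls return the same winner and the same payment of 3.0. If the later allocation stage positions the ad based on that payment alone, the higher bid cannot earn a better position, which is the incentive problem the abstract describes.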

Understanding Concepts in Graph Signal Processing for Neurophysiological Signal Analysis

Dec 06, 2023

Abstract:Multivariate signals, which are measured simultaneously over time and acquired by sensor networks, are becoming increasingly common. The emerging field of graph signal processing (GSP) promises to analyse spectral characteristics of these multivariate signals, while at the same time taking the spatial structure between the time signals into account. A central idea in GSP is the graph Fourier transform, which projects a multivariate signal onto frequency-ordered graph Fourier modes, and can therefore be regarded as a spatial analog of the temporal Fourier transform. This chapter derives and discusses key concepts in GSP, with a specific focus on how the various concepts relate to one another. The experimental section focuses on the role of graph frequency in data classification, with applications to neuroimaging. To address the limited sample size of neurophysiological datasets, we introduce a minimalist simulation framework that can generate arbitrary amounts of data. Using this artificial data, we find that lower graph frequency signals are less suitable for classifying neurophysiological data as compared to higher graph frequency signals. Finally, we introduce a baseline testing framework for GSP. Employing this framework, our results suggest that GSP applications may attenuate spectral characteristics in the signals, highlighting current limitations of GSP for neuroimaging.
