Faezeh Faez

Logic Synthesis Optimization with Predictive Self-Supervision via Causal Transformers

Sep 16, 2024

Abstract: Contemporary hardware design benefits from the abstraction provided by high-level logic gates, streamlining the implementation of logic circuits. Logic Synthesis Optimization (LSO) operates at one level of abstraction within the Electronic Design Automation (EDA) workflow, targeting improvements in logic circuits with respect to performance metrics such as size and speed in the final layout. Recent trends in the field show a growing interest in leveraging Machine Learning (ML) for EDA, notably through ML-guided logic synthesis utilizing policy-based Reinforcement Learning (RL) methods. Despite these advancements, existing models face challenges such as overfitting and limited generalization, attributed to the limited number of public circuits and the expressiveness limitations of graph encoders. To address these hurdles and tackle data scarcity, we introduce LSOformer, a novel approach that harnesses autoregressive transformer models and predictive self-supervised learning (SSL) to predict the trajectory of Quality of Results (QoR). LSOformer integrates cross-attention modules to merge insights from circuit graphs and optimization sequences, thereby enhancing prediction accuracy for QoR metrics. Experimental studies validate the effectiveness of LSOformer, showcasing its superior performance over baseline architectures in QoR prediction tasks, where it achieves improvements of 5.74%, 4.35%, and 17.06% on the EPFL, OABCD, and proprietary circuits datasets, respectively, in the inductive setup.

MTLSO: A Multi-Task Learning Approach for Logic Synthesis Optimization

Sep 09, 2024

Abstract: Electronic Design Automation (EDA) is essential for IC design and has recently benefited from AI-based techniques to improve efficiency. Logic synthesis, a key EDA stage, transforms high-level hardware descriptions into optimized netlists. Recent research has employed machine learning to predict Quality of Results (QoR) for pairs of And-Inverter Graphs (AIGs) and synthesis recipes. However, the severe scarcity of data due to a very limited number of available AIGs results in overfitting, significantly hindering performance. Additionally, the complexity and large number of nodes in AIGs make plain GNNs less effective for learning expressive graph-level representations. To tackle these challenges, we propose MTLSO, a Multi-Task Learning approach for Logic Synthesis Optimization. On one hand, it maximizes the use of limited data by training the model across different tasks. This includes introducing an auxiliary task of binary multi-label graph classification alongside the primary regression task, allowing the model to benefit from diverse supervision sources. On the other hand, we employ a hierarchical graph representation learning strategy to improve the model's capacity for learning expressive graph-level representations of large AIGs, surpassing traditional plain GNNs. Extensive experiments across multiple datasets and against state-of-the-art baselines demonstrate the superiority of our method, achieving an average performance gain of 8.22% for delay and 5.95% for area.

Todyformer: Towards Holistic Dynamic Graph Transformers with Structure-Aware Tokenization

Feb 02, 2024

Abstract: Temporal Graph Neural Networks have garnered substantial attention for their capacity to model evolving structural and temporal patterns while exhibiting impressive performance. However, it is known that these architectures are encumbered by issues that constrain their performance, such as over-squashing and over-smoothing. Meanwhile, Transformers have demonstrated exceptional computational capacity to effectively address challenges related to long-range dependencies. Consequently, we introduce Todyformer, a novel Transformer-based neural network tailored for dynamic graphs. It unifies the local encoding capacity of Message-Passing Neural Networks (MPNNs) with the global encoding of Transformers through i) a novel patchifying paradigm for dynamic graphs to alleviate over-squashing, ii) a structure-aware parametric tokenization strategy leveraging MPNNs, iii) a Transformer with temporal positional encoding to capture long-range dependencies, and iv) an encoding architecture that alternates between local and global contextualization, mitigating over-smoothing in MPNNs. Experimental evaluations on public benchmark datasets demonstrate that Todyformer consistently outperforms the state-of-the-art methods for downstream tasks. Furthermore, we illustrate the underlying aspects of the proposed model in effectively capturing extensive temporal dependencies in dynamic graphs.

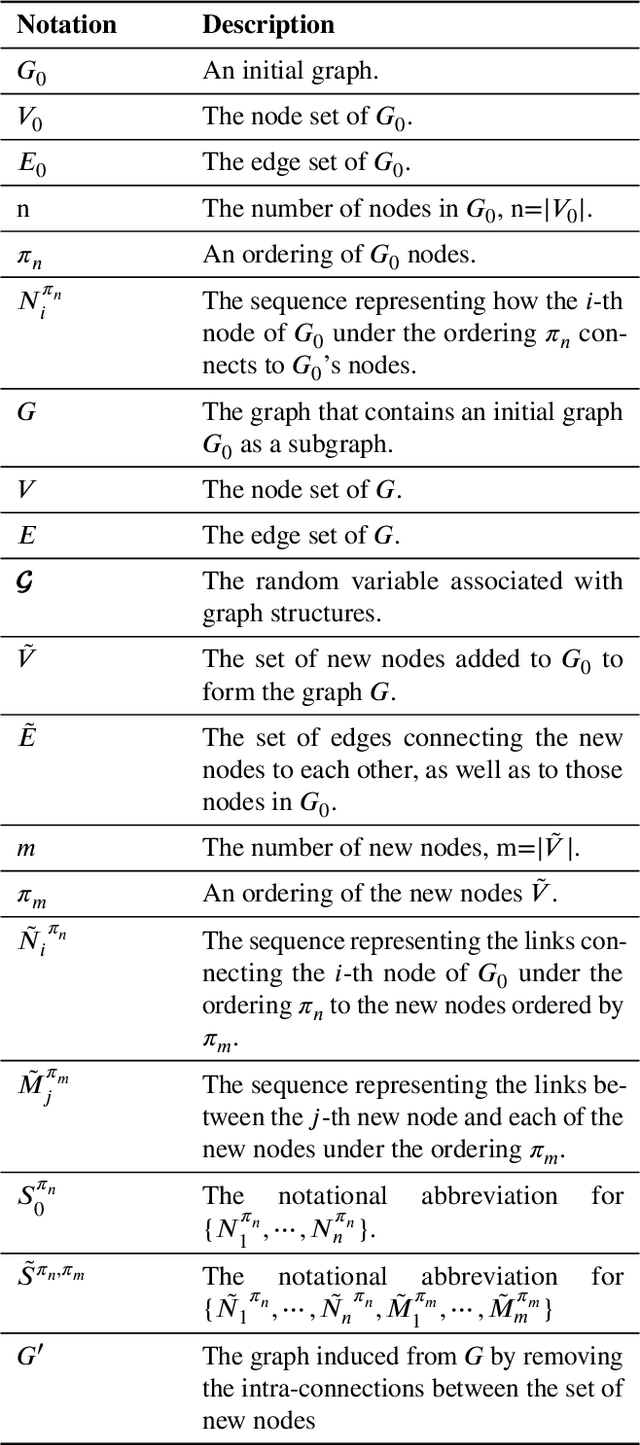

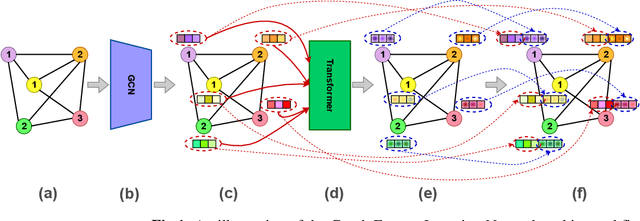

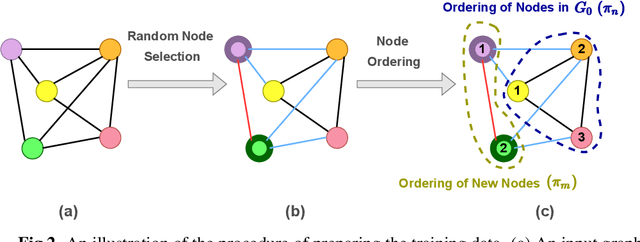

SCGG: A Deep Structure-Conditioned Graph Generative Model

Sep 20, 2022

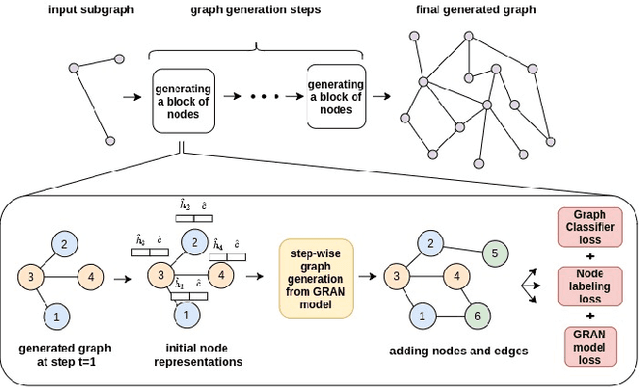

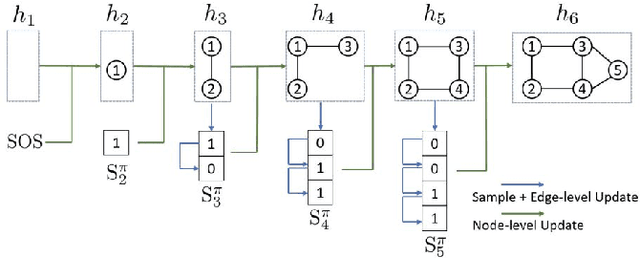

Abstract: Deep learning-based graph generation approaches have remarkable capacities for graph data modeling, allowing them to solve a wide range of real-world problems. Enabling these methods to take different conditions into account during generation further increases their effectiveness by empowering them to generate new graph samples that meet the desired criteria. This paper presents a conditional deep graph generation method called SCGG that considers a particular type of structural condition. Specifically, our proposed SCGG model takes an initial subgraph and autoregressively generates new nodes and their corresponding edges on top of the given conditioning substructure. The architecture of SCGG consists of a graph representation learning network and an autoregressive generative model, which is trained end-to-end. Using this model, we can address graph completion, a widespread and inherently difficult problem of recovering missing nodes and their associated edges in partially observed graphs. Experimental results on both synthetic and real-world datasets demonstrate the superiority of our method compared with state-of-the-art baselines.

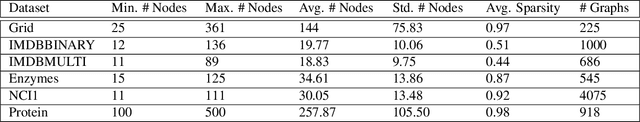

CCGG: A Deep Autoregressive Model for Class-Conditional Graph Generation

Oct 07, 2021

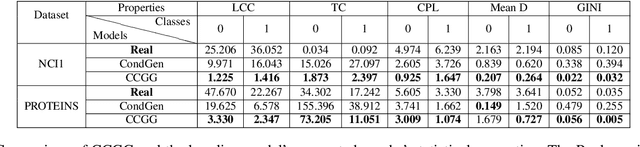

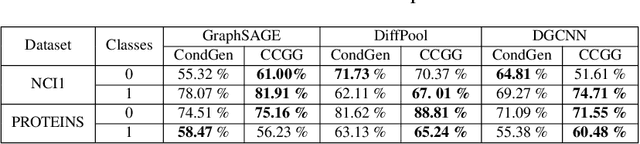

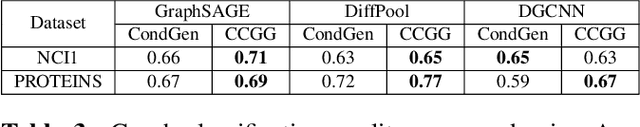

Abstract: Graph data structures are fundamental for studying connected entities. With an increase in the number of applications where data is represented as graphs, the problem of graph generation has recently become a hot topic in many signal processing areas. However, despite its significance, conditional graph generation, which creates graphs with desired features, remains relatively underexplored in previous studies. This paper addresses the problem of class-conditional graph generation, which uses class labels as generation constraints, by introducing the Class Conditioned Graph Generator (CCGG). We built CCGG by adding the class information as an additional input to a graph generator model and including a classification loss in its total loss along with a gradient passing trick. Our experiments show that CCGG outperforms existing conditional graph generation methods on various datasets. It also manages to maintain the quality of the generated graphs in terms of distribution-based evaluation metrics.

Deep Graph Generators: A Survey

Dec 31, 2020

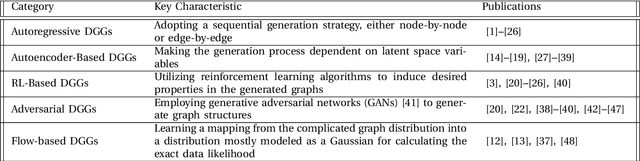

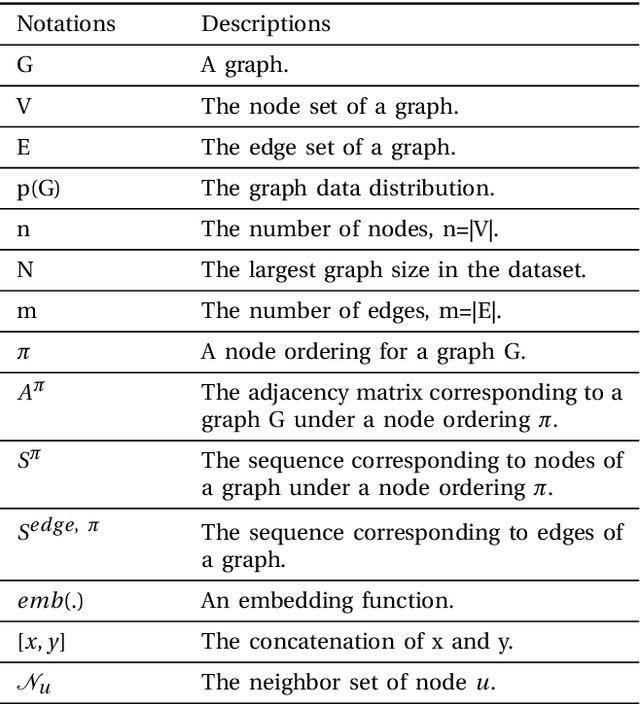

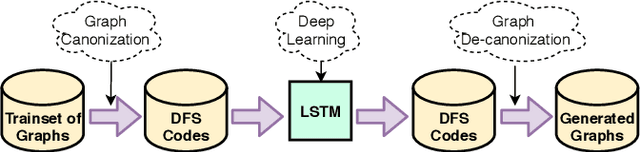

Abstract: Deep generative models have achieved great success in areas such as image, speech, and natural language processing in the past few years. Thanks to the advances in graph-based deep learning, and in particular graph representation learning, deep graph generation methods have recently emerged with new applications ranging from discovering novel molecular structures to modeling social networks. This paper conducts a comprehensive survey on deep learning-based graph generation approaches and classifies them into five broad categories, namely, autoregressive, autoencoder-based, RL-based, adversarial, and flow-based graph generators, providing readers with a detailed description of the methods in each class. We also present publicly available source code, commonly used datasets, and the most widely utilized evaluation metrics. Finally, we highlight the existing challenges and discuss future research directions.
