Fabien Alibart

Expanding memory in recurrent spiking networks

Oct 29, 2023

Abstract:Recurrent spiking neural networks (RSNNs) are notoriously difficult to train because of the vanishing gradient problem that is enhanced by the binary nature of the spikes. In this paper, we review the ability of the current state-of-the-art RSNNs to solve long-term memory tasks, and show that they have strong constraints both in performance, and for their implementation on hardware analog neuromorphic processors. We present a novel spiking neural network that circumvents these limitations. Our biologically inspired neural network uses synaptic delays, branching factor regularization and a novel surrogate derivative for the spiking function. The proposed network proves to be more successful in using the recurrent connections on memory tasks.

Hardware-aware Training Techniques for Improving Robustness of Ex-Situ Neural Network Transfer onto Passive TiO2 ReRAM Crossbars

May 29, 2023

Abstract:Passive resistive random access memory (ReRAM) crossbar arrays, a promising emerging technology used for analog matrix-vector multiplications, are far superior to their active (1T1R) counterparts in terms of the integration density. However, current transfers of neural network weights into the conductance state of the memory devices in the crossbar architecture are accompanied by significant losses in precision due to hardware variabilities such as sneak path currents, biasing scheme effects and conductance tuning imprecision. In this work, training approaches that adapt techniques such as dropout, the reparametrization trick and regularization to TiO2 crossbar variabilities are proposed in order to generate models that are better adapted to their hardware transfers. The viability of this approach is demonstrated by comparing the outputs and precision of the proposed hardware-aware network with those of a regular fully connected network over a few thousand weight transfers using the half moons dataset in a simulation based on experimental data. For the neural network trained using the proposed hardware-aware method, 79.5% of the test set's data points can be classified with an accuracy of 95% or higher, while only 18.5% of the test set's data points can be classified with this accuracy by the regularly trained neural network.
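The idea of exposing a network to transfer imprecision during training can be illustrated with a minimal sketch. It is not the method from the paper: the function name `sample_transferred_weights`, the Gaussian noise model, and the parameters `sigma`, `p_stuck` and `stuck_value` are all assumptions chosen for illustration. It perturbs each weight as `w + sigma * eps` (the reparametrization form, with `eps` drawn from a standard normal) and optionally forces a random fraction of weights to a fixed value, in the spirit of dropout.

```python
import random


def sample_transferred_weights(weights, sigma=0.05, p_stuck=0.0,
                               stuck_value=0.0, rng=None):
    """Return one hypothetical noisy 'hardware' copy of the ideal weights.

    Assumptions (not taken from the paper): tuning imprecision is modeled
    as additive Gaussian noise, and a fraction p_stuck of devices is
    forced to stuck_value, similar to a dropout mask.
    """
    if rng is None:
        rng = random.Random()
    noisy = []
    for w in weights:
        if rng.random() < p_stuck:
            # Dropout-like failure: the device ignores the target weight.
            noisy.append(stuck_value)
        else:
            # Reparametrization form: deterministic weight plus scaled noise.
            eps = rng.gauss(0.0, 1.0)
            noisy.append(w + sigma * eps)
    return noisy


ideal = [0.1, -0.2, 0.3]
print(sample_transferred_weights(ideal, sigma=0.05, rng=random.Random(0)))
```

In a training loop, a fresh noisy copy would be drawn for each forward pass so that the learned weights tolerate the spread; a realistic noise model would be fitted to measured device data rather than assumed Gaussian.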

Voltage-Dependent Synaptic Plasticity (VDSP): Unsupervised probabilistic Hebbian plasticity rule based on neurons membrane potential

Apr 14, 2022

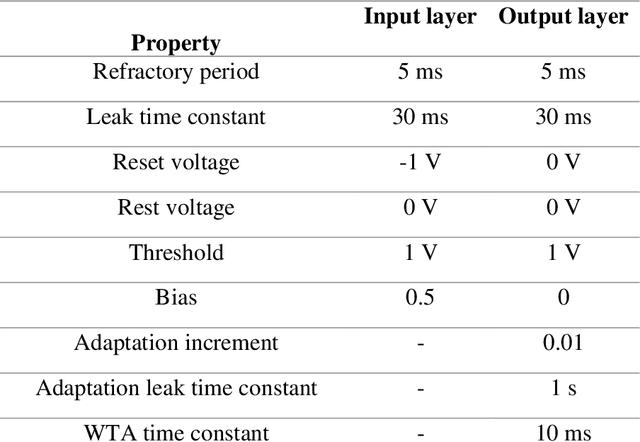

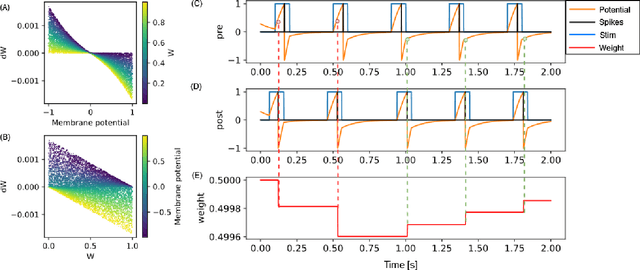

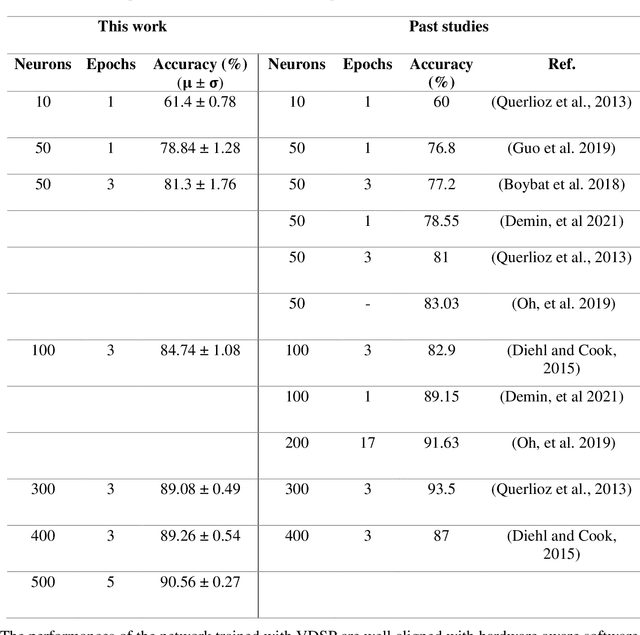

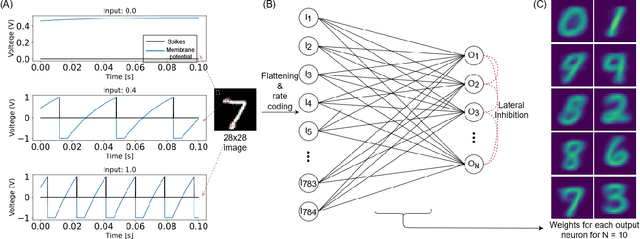

Abstract:This study proposes voltage-dependent synaptic plasticity (VDSP), a novel brain-inspired unsupervised local learning rule for the online implementation of Hebb's plasticity mechanism on neuromorphic hardware. The proposed VDSP learning rule updates the synaptic conductance on the spike of the postsynaptic neuron only, which reduces by a factor of two the number of updates with respect to standard spike-timing-dependent plasticity (STDP). This update is dependent on the membrane potential of the presynaptic neuron, which is readily available as part of neuron implementation and hence does not require additional memory for storage. Moreover, the update is also regularized on synaptic weight and prevents explosion or vanishing of weights on repeated stimulation. Rigorous mathematical analysis is performed to draw an equivalence between VDSP and STDP. To validate the system-level performance of VDSP, we train a single-layer spiking neural network (SNN) for the recognition of handwritten digits. We report 85.01 $ \pm $ 0.76% (Mean $ \pm $ S.D.) accuracy for a network of 100 output neurons on the MNIST dataset. The performance improves when scaling the network size (89.93 $ \pm $ 0.41% for 400 output neurons, 90.56 $ \pm $ 0.27% for 500 neurons), which validates the applicability of the proposed learning rule for large-scale computer vision tasks. Interestingly, the learning rule adapts better than STDP to the frequency of the input signal and does not require hand-tuning of hyperparameters.
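The three properties described in the abstract (updates only on a postsynaptic spike, dependence on the presynaptic membrane potential, and weight-dependent regularization) can be sketched as follows. This is an illustrative toy rule, not the equations from the paper: the function name `vdsp_like_update`, the sign convention (negative presynaptic potential potentiates, non-negative depresses), the linear dependence on `abs(v)`, and the soft bounds `w_min` and `w_max` are all assumptions.

```python
def vdsp_like_update(weights, v_pre, post_spiked, lr=0.01,
                     w_min=0.0, w_max=1.0):
    """Toy VDSP-style update for the synapses onto one postsynaptic neuron.

    weights[i] is the synapse from presynaptic neuron i, and v_pre[i] is
    that neuron's membrane potential relative to rest (assumed convention).
    """
    if not post_spiked:
        # No postsynaptic spike, so no update at all.
        return list(weights)
    updated = []
    for w, v in zip(weights, v_pre):
        step = lr * abs(v)
        if v < 0.0:
            # Assumed: hyperpolarized presynaptic neuron -> potentiation,
            # scaled by the remaining headroom to w_max.
            dw = step * (w_max - w)
        else:
            # Assumed: depolarized presynaptic neuron -> depression,
            # scaled by the distance from w_min.
            dw = -step * (w - w_min)
        updated.append(min(w_max, max(w_min, w + dw)))
    return updated


print(vdsp_like_update([0.5, 0.5], [-1.0, 1.0], post_spiked=True))
```

Because each change is scaled by the distance to the nearest bound, repeated stimulation drives weights toward `w_min` or `w_max` without overshooting, which is one simple way to obtain the kind of regularization the abstract mentions.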

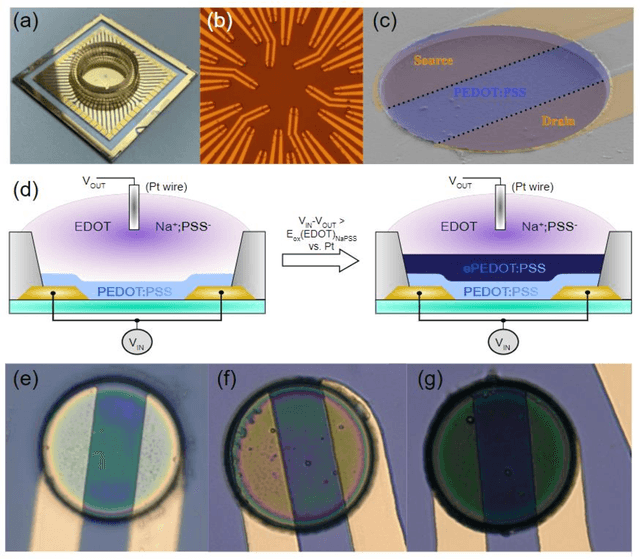

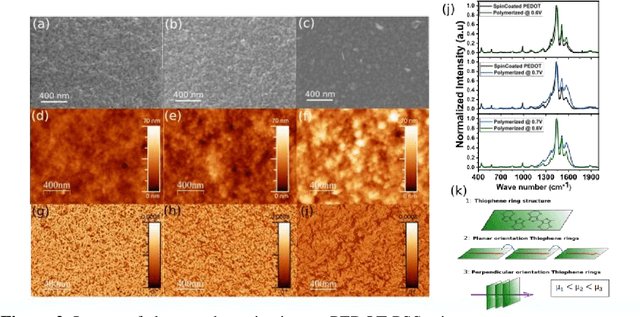

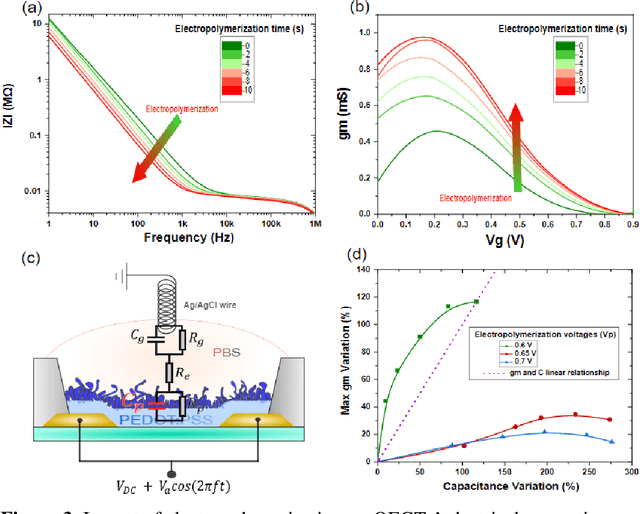

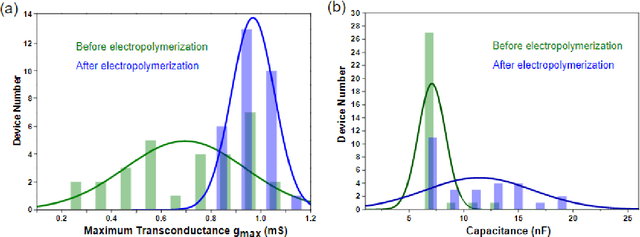

Bio-inspired adaptive sensing through electropolymerization of organic electrochemical transistors

Aug 30, 2021

Abstract:Organic electrochemical transistors (OECTs) are considered today a key technology for interacting with biological media through their intrinsic ionic-electronic coupling. In this paper, we show how this coupling can be finely tuned (in operando) post-microfabrication via an electropolymerization technique. This strategy exploits the concept of adaptive sensing, where both transconductance and impedance are tunable and can be modified on demand to match different sensing requirements. Material investigation through Raman spectroscopy, atomic force microscopy and scanning electron microscopy reveals that electropolymerization can lead to fine control of the organization of PEDOT microdomains, which directly affects the iono-electronic properties of OECTs. We further highlight how the volumetric capacitance and effective mobility of PEDOT:PSS distinctly influence the transconductance and impedance of OECTs. This approach is shown to improve the transconductance by 150% while reducing variability by 60% in comparison with standard spin-coated OECTs. Finally, we show how the technique can influence voltage spike rate hardware classification, with direct interest in bio-signal sorting applications.

Signals to Spikes for Neuromorphic Regulated Reservoir Computing and EMG Hand Gesture Recognition

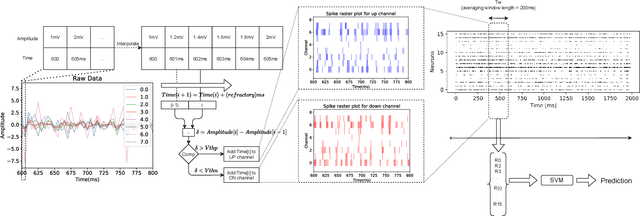

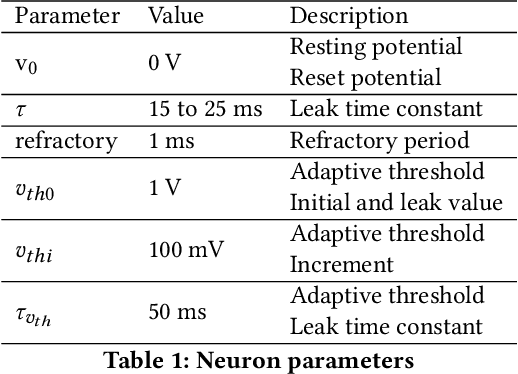

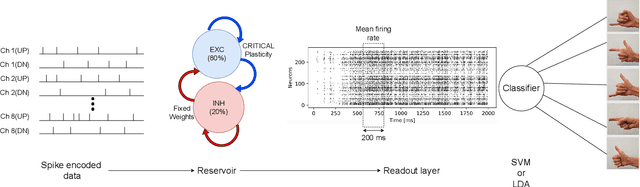

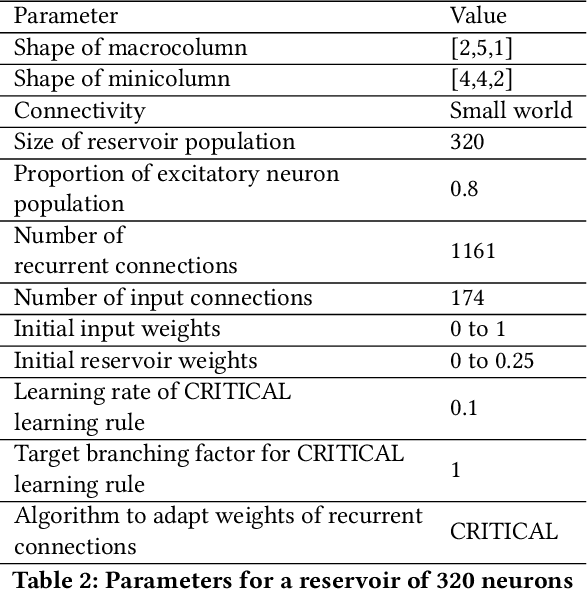

Jul 04, 2021

Abstract:Surface electromyogram (sEMG) signals result from muscle movement and hence they are an ideal candidate for benchmarking event-driven sensing and computing. We propose a simple yet novel approach for optimizing the spike encoding algorithm's hyper-parameters inspired by the readout layer concept in reservoir computing. Using a simple machine learning algorithm after spike encoding, we report performance higher than the state-of-the-art spiking neural networks on two open-source datasets for hand gesture recognition. The spike encoded data is processed through a spiking reservoir with a biologically inspired topology and neuron model. When trained with the unsupervised activity regulation CRITICAL algorithm to operate at the edge of chaos, the reservoir yields better performance than state-of-the-art convolutional neural networks. The reservoir performance with regulated activity was found to be 89.72% for the Roshambo EMG dataset and 70.6% for the EMG subset of sensor fusion dataset. Therefore, the biologically-inspired computing paradigm, which is known for being power efficient, also proves to have a great potential when compared with conventional AI algorithms.

Exploiting the Short-term to Long-term Plasticity Transition in Memristive Nanodevice Learning Architectures

Jun 27, 2016

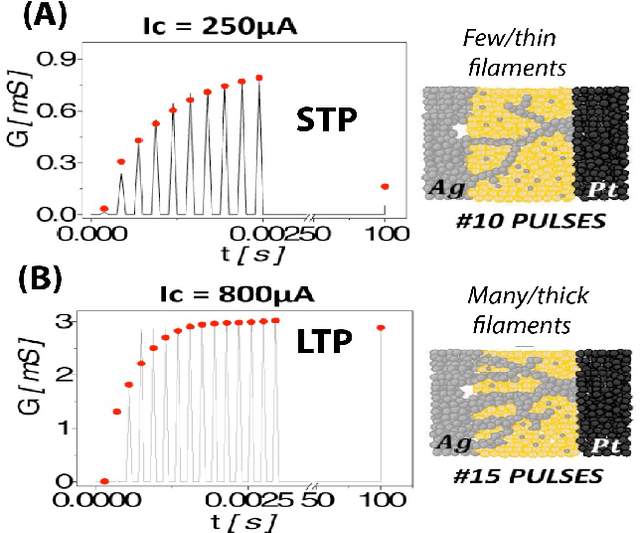

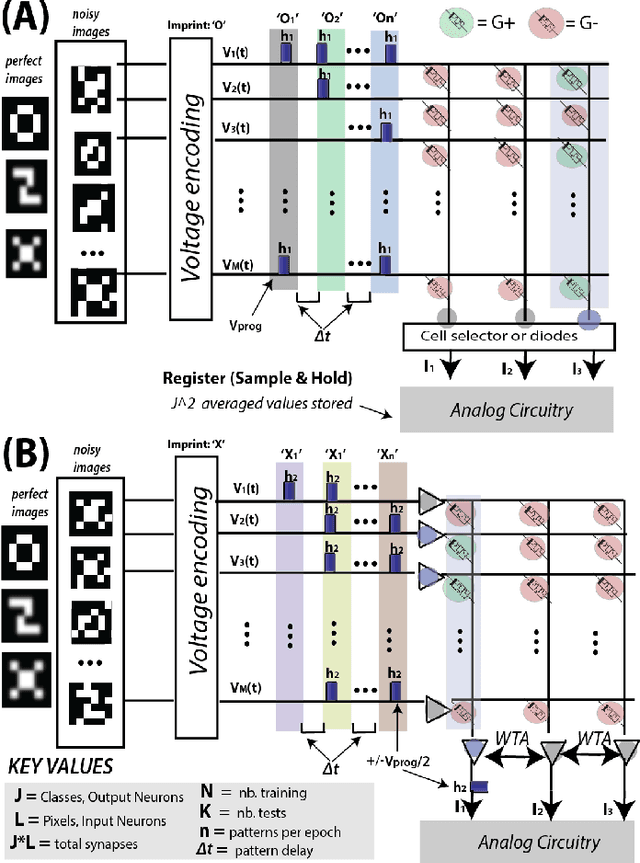

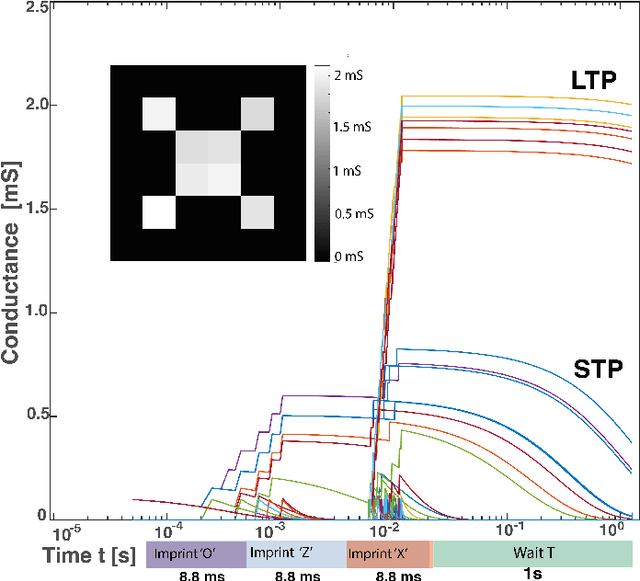

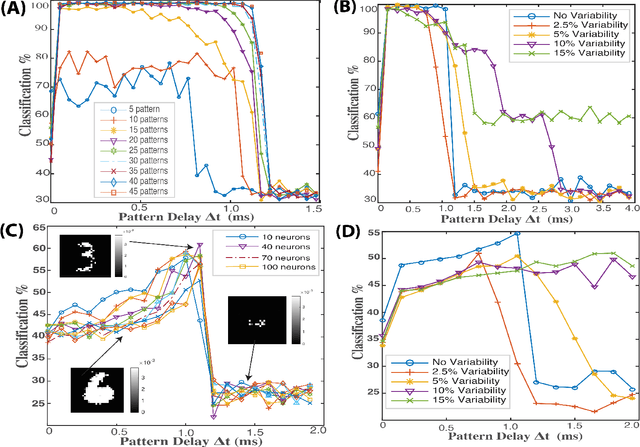

Abstract:Memristive nanodevices offer new frontiers for computing systems that unite arithmetic and memory operations on-chip. Here, we explore the integration of electrochemical metallization cell (ECM) nanodevices with tunable filamentary switching in nanoscale learning systems. Such devices offer a natural transition between short-term plasticity (STP) and long-term plasticity (LTP). In this work, we show that this property can be exploited to efficiently solve noisy classification tasks. A single crossbar learning scheme is first introduced and evaluated. Perfect classification is possible only for simple input patterns, within critical timing parameters, and when device variability is weak. To overcome these limitations, a dual-crossbar learning system partly inspired by the extreme learning machine (ELM) approach is then introduced. This approach outperforms a conventional ELM-inspired system when the first layer is imprinted before training and testing, and especially so when variability in device timing evolution is considered: variability is therefore transformed from an issue to a feature. In attempting to classify the MNIST database under the same conditions, conventional ELM obtains 84% classification, the imprinted, uniform device system obtains 88% classification, and the imprinted, variable device system reaches 92% classification. We discuss benefits and drawbacks of both systems in terms of energy, complexity, area imprint, and speed. All these results highlight that tuning and exploiting intrinsic device timing parameters may be of central interest to future bio-inspired approximate computing systems.
