Exploiting the Short-term to Long-term Plasticity Transition in Memristive Nanodevice Learning Architectures


Jun 27, 2016

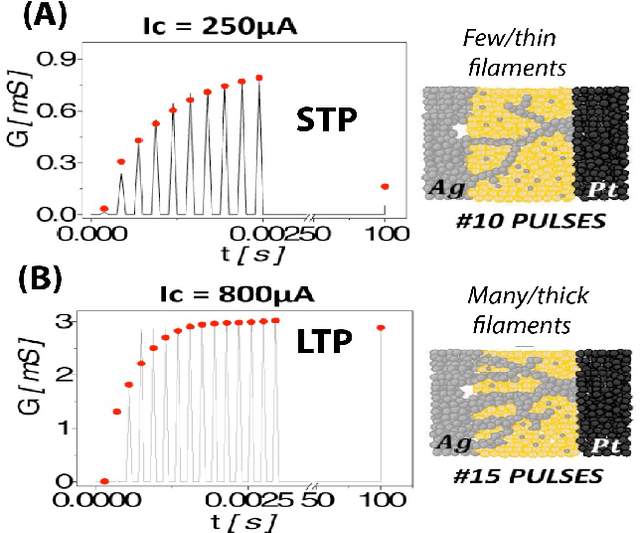

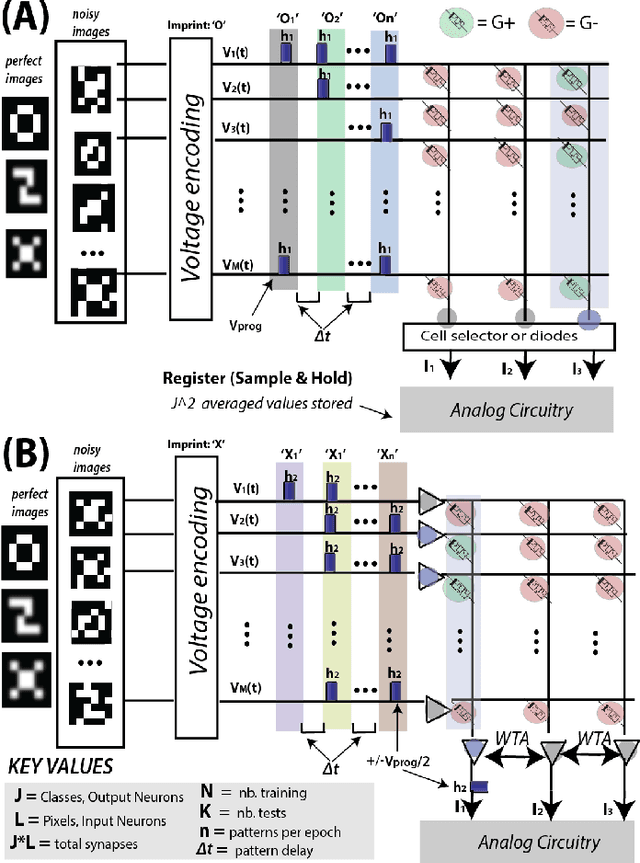

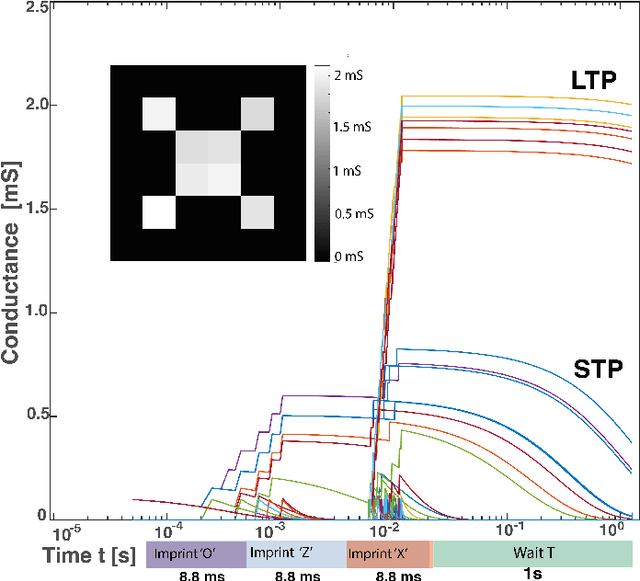

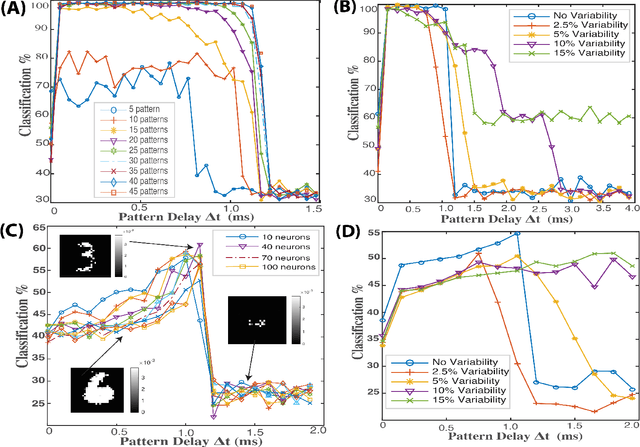

Memristive nanodevices open new frontiers for computing systems that unite arithmetic and memory operations on-chip. Here, we explore the integration of electrochemical metallization cell (ECM) nanodevices with tunable filamentary switching into nanoscale learning systems. Such devices offer a natural transition between short-term plasticity (STP) and long-term plasticity (LTP). In this work, we show that this property can be exploited to efficiently solve noisy classification tasks. A single-crossbar learning scheme is first introduced and evaluated. Perfect classification is possible only for simple input patterns, within critical timing parameters, and when device variability is weak. To overcome these limitations, a dual-crossbar learning system partly inspired by the extreme learning machine (ELM) approach is then introduced. This approach outperforms a conventional ELM-inspired system when the first layer is imprinted before training and testing, and especially so when variability in device timing evolution is considered; variability is thus transformed from an issue into a feature. When classifying the MNIST database under the same conditions, conventional ELM reaches 84% classification accuracy, the imprinted system with uniform devices reaches 88%, and the imprinted system with variable devices reaches 92%. We discuss the benefits and drawbacks of both systems in terms of energy, complexity, area footprint, and speed. Together, these results highlight that tuning and exploiting intrinsic device timing parameters may be of central interest to future bio-inspired approximate computing systems.
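The toy sketch below illustrates the STP-to-LTP transition described above. It is not the device model used in this work. The saturating update rule, the exponential relaxation, the parameters `alpha`, `tau` and `theta`, the class `ToyECMDevice` and the helper `drive` are all hypothetical assumptions chosen for illustration.

The model works as follows:
- Each voltage pulse grows a normalized filament conductance `w`.
- While `w` is below the consolidation threshold `theta`, the filament is short-term and relaxes between pulses.
- Once `w` reaches `theta`, the filament is treated as long-term and no longer decays.

```python
import math
from dataclasses import dataclass


@dataclass
class ToyECMDevice:
    """Hypothetical toy model of STP -> LTP in a filamentary (ECM) device.

    All parameters are illustrative assumptions, not fitted device values.
    """
    alpha: float = 0.2   # assumed fraction of remaining headroom gained per pulse
    tau: float = 10.0    # assumed STP relaxation time constant (arbitrary units)
    theta: float = 0.6   # assumed consolidation threshold for STP -> LTP
    w: float = 0.0       # normalized conductance: 0 = no filament, 1 = fully formed

    def pulse(self) -> None:
        # Saturating growth: each pulse closes a fixed fraction of the gap to 1.
        self.w += self.alpha * (1.0 - self.w)

    def relax(self, dt: float) -> None:
        # Below threshold the filament is unstable and decays exponentially;
        # at or above threshold it is treated as consolidated (no decay).
        if self.w < self.theta:
            self.w *= math.exp(-dt / self.tau)

    @property
    def is_long_term(self) -> bool:
        return self.w >= self.theta


def drive(interval: float, n_pulses: int, **params: float) -> ToyECMDevice:
    """Apply n_pulses identical pulses separated by `interval` time units."""
    dev = ToyECMDevice(**params)
    for _ in range(n_pulses):
        dev.pulse()
        dev.relax(interval)
    return dev


fast = drive(interval=1.0, n_pulses=20)   # closely spaced pulses
slow = drive(interval=20.0, n_pulses=20)  # same pulses, widely spaced
print(fast.is_long_term, slow.is_long_term)
```

With these assumed parameters, closely spaced pulses push the device past `theta` into the long-term regime. The same number of widely spaced pulses produces only transient conductance changes that decay between pulses. This kind of intrinsic timing selectivity is what the abstract proposes to exploit for learning.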
