Eugene Valassakis

HandDGP: Camera-Space Hand Mesh Prediction with Differentiable Global Positioning

Jul 22, 2024

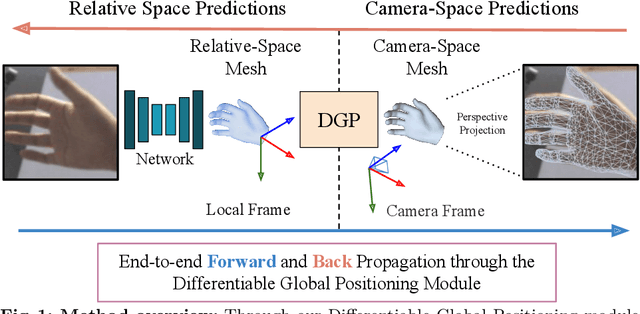

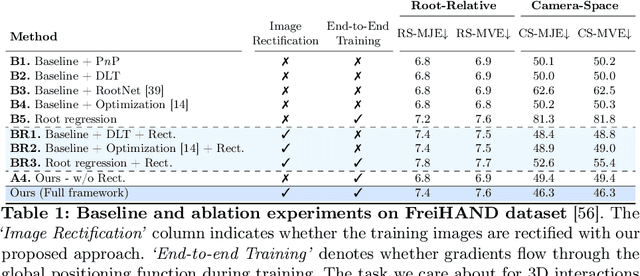

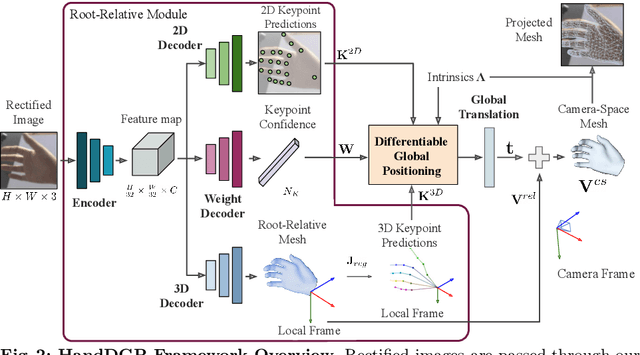

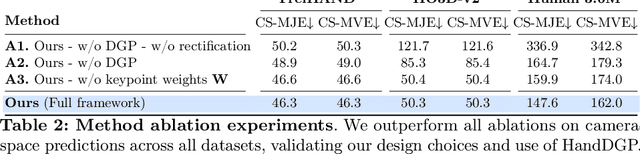

Abstract: Predicting camera-space hand meshes from single RGB images is crucial for enabling realistic hand interactions in 3D virtual and augmented worlds. Previous work typically divided the task into two stages: given a cropped image of the hand, predict meshes in relative coordinates, followed by lifting these predictions into camera space in a separate and independent stage, often resulting in the loss of valuable contextual and scale information. To prevent the loss of these cues, we propose unifying these two stages into an end-to-end solution that addresses the 2D-3D correspondence problem. This solution enables back-propagation from camera space outputs to the rest of the network through a new differentiable global positioning module. We also introduce an image rectification step that harmonizes both the training dataset and the input image as if they were acquired with the same camera, helping to alleviate the inherent scale-depth ambiguity of the problem. We validate the effectiveness of our framework in evaluations against several baselines and state-of-the-art approaches across three public benchmarks.
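As an illustration of the 2D-3D correspondence idea mentioned in the abstract, the sketch below recovers a camera-space translation from root-relative 3D points and their 2D pixel locations by linear least squares. It is a minimal sketch under stated assumptions, not the paper's module: the function names (`solve_translation`, `project`), the pinhole intrinsics `fx, fy, cx, cy`, and the unweighted formulation are all hypothetical choices made for this example. Because the solve uses only arithmetic operations, the same computation written in an autodiff framework would let gradients flow from the recovered translation back to the predicted points.

```python
def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(m, rhs):
    """Solve the 3x3 system m @ t = rhs with Cramer's rule."""
    d = _det3(m)
    if abs(d) < 1e-12:
        raise ValueError("degenerate correspondences: system is singular")
    solution = []
    for k in range(3):
        mk = [row[:] for row in m]
        for i in range(3):
            mk[i][k] = rhs[i]
        solution.append(_det3(mk) / d)
    return solution


def project(point, fx, fy, cx, cy):
    """Pinhole projection of a camera-space point to pixel coordinates."""
    X, Y, Z = point
    return (fx * X / Z + cx, fy * Y / Z + cy)


def solve_translation(points_rel, pixels, fx, fy, cx, cy):
    """Least-squares translation t so that points_rel + t projects to pixels.

    Each correspondence contributes two linear equations in (tx, ty, tz):
        tx - x * tz = x * Z - X
        ty - y * tz = y * Z - Y
    where (x, y) are the normalized image coordinates of the pixel.
    """
    ata = [[0.0] * 3 for _ in range(3)]  # accumulates A^T A
    atb = [0.0] * 3                      # accumulates A^T b
    for (X, Y, Z), (u, v) in zip(points_rel, pixels):
        x = (u - cx) / fx
        y = (v - cy) / fy
        rows = (([1.0, 0.0, -x], x * Z - X),
                ([0.0, 1.0, -y], y * Z - Y))
        for a, b in rows:
            for i in range(3):
                atb[i] += a[i] * b
                for j in range(3):
                    ata[i][j] += a[i] * a[j]
    return _solve3(ata, atb)
```

A possible usage: project a few relative points shifted by a known translation with `project`, then pass the relative points and resulting pixels to `solve_translation` to recover that translation.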

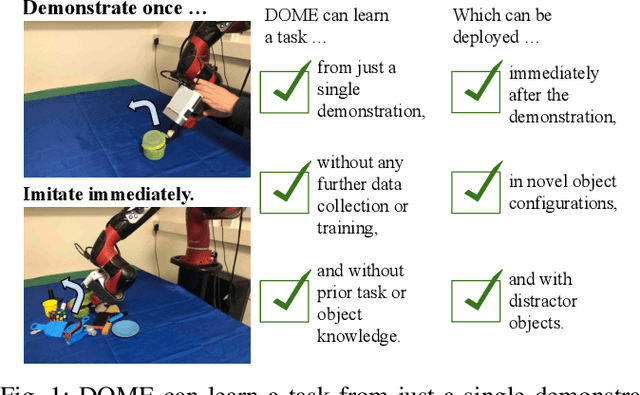

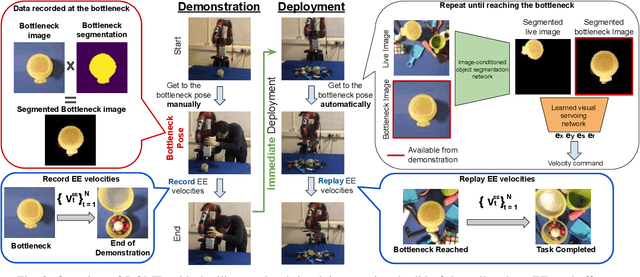

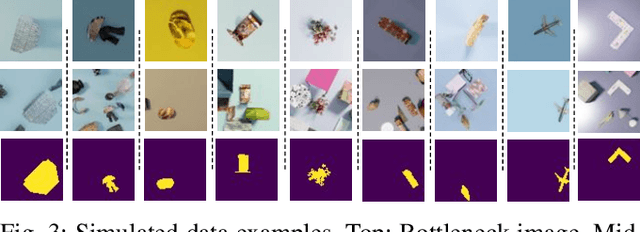

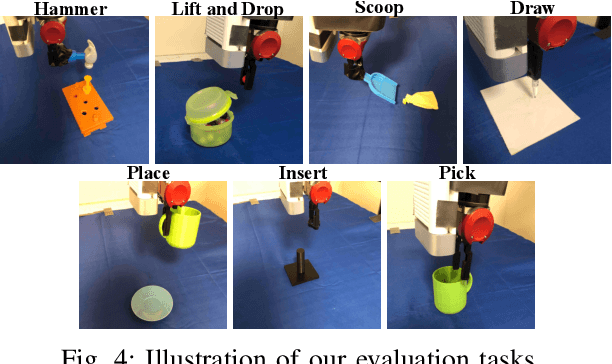

Demonstrate Once, Imitate Immediately (DOME): Learning Visual Servoing for One-Shot Imitation Learning

Apr 06, 2022

Abstract: We present DOME, a novel method for one-shot imitation learning, where a task can be learned from just a single demonstration and then be deployed immediately, without any further data collection or training. DOME does not require prior task or object knowledge, and can perform the task in novel object configurations and with distractors. At its core, DOME uses an image-conditioned object segmentation network followed by a learned visual servoing network, to move the robot's end-effector to the same relative pose to the object as during the demonstration, after which the task can be completed by replaying the demonstration's end-effector velocities. We show that DOME achieves near 100% success rate on 7 real-world everyday tasks, and we perform several studies to thoroughly understand each individual component of DOME.

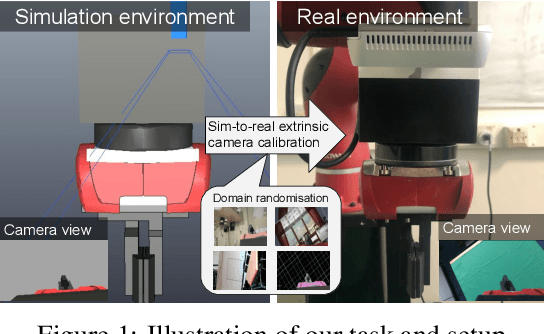

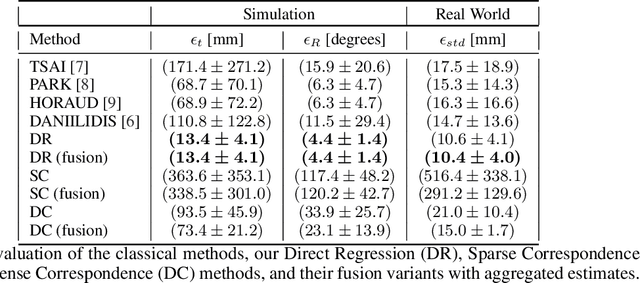

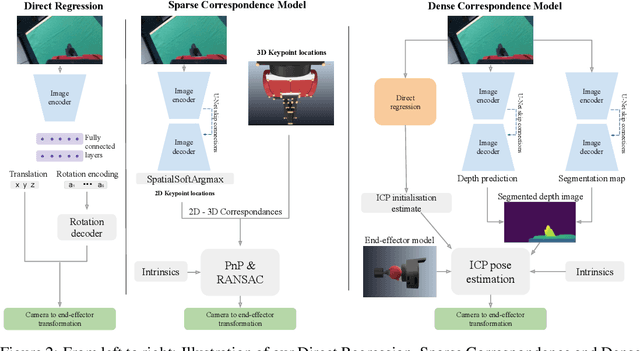

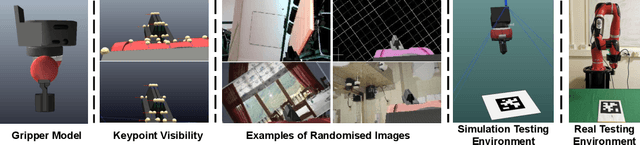

Learning Eye-in-Hand Camera Calibration from a Single Image

Nov 03, 2021

Abstract: Eye-in-hand camera calibration is a fundamental and long-studied problem in robotics. We present a study on using learning-based methods for solving this problem online from a single RGB image, whilst training our models with entirely synthetic data. We study three main approaches: one direct regression model that directly predicts the extrinsic matrix from an image, one sparse correspondence model that regresses 2D keypoints and then uses PnP, and one dense correspondence model that uses regressed depth and segmentation maps to enable ICP pose estimation. In our experiments, we benchmark these methods against each other and against well-established classical methods, to find the surprising result that direct regression outperforms other approaches, and we perform noise-sensitivity analysis to gain further insights into these results.

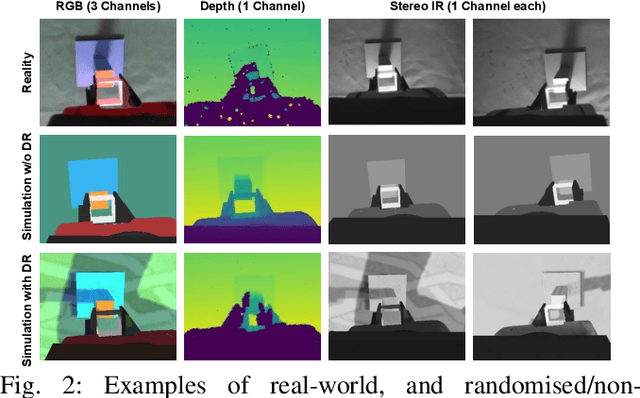

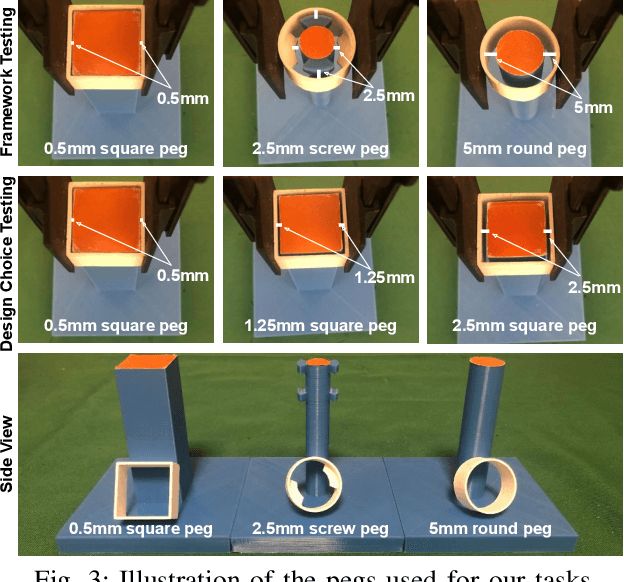

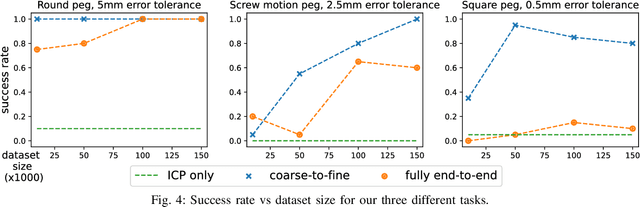

Coarse-to-Fine for Sim-to-Real: Sub-Millimetre Precision Across the Workspace

May 24, 2021

Abstract: When training control policies for robot manipulation via deep learning, sim-to-real transfer can help satisfy the large data requirements. In this paper, we study the problem of zero-shot sim-to-real when the task requires both highly precise control, with sub-millimetre error tolerance, and full workspace generalisation. Our framework involves a coarse-to-fine controller, where trajectories initially begin with classical motion planning based on pose estimation, and transition to an end-to-end controller which maps images to actions and is trained in simulation with domain randomisation. In this way, we achieve precise control whilst also generalising the controller across the workspace and keeping the generality and robustness of vision-based, end-to-end control. Real-world experiments on a range of different tasks show that, by exploiting the best of both worlds, our framework significantly outperforms purely motion planning methods, and purely learning-based methods. Furthermore, we answer a range of questions on best practices for precise sim-to-real transfer, such as how different image sensor modalities and image feature representations perform.

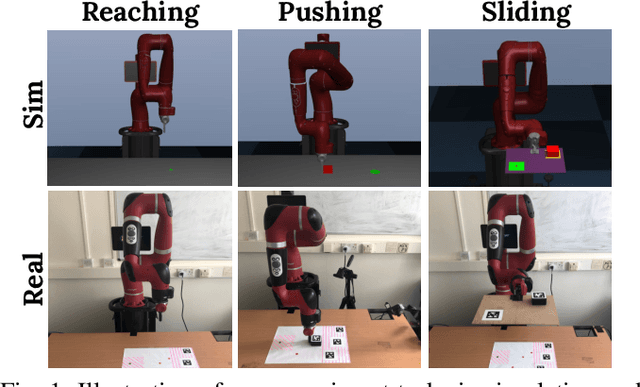

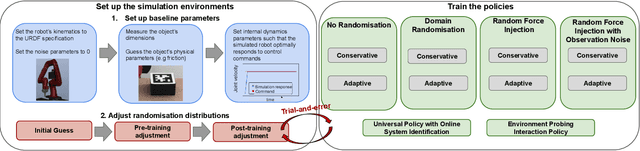

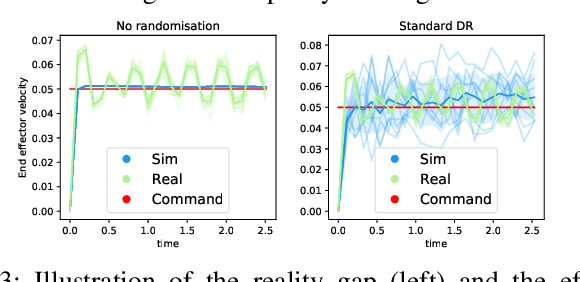

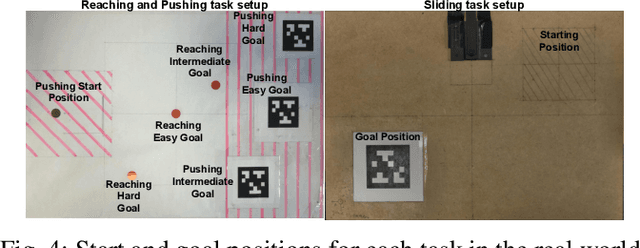

Crossing The Gap: A Deep Dive into Zero-Shot Sim-to-Real Transfer for Dynamics

Aug 15, 2020

Abstract: Zero-shot sim-to-real transfer of tasks with complex dynamics is a highly challenging and unsolved problem. A number of solutions have been proposed in recent years, but we have found that many works do not present a thorough evaluation in the real world, or underplay the significant engineering effort and task-specific fine tuning that is required to achieve the published results. In this paper, we dive deeper into the sim-to-real transfer challenge, investigate why this is such a difficult problem, and present objective evaluations of a number of transfer methods across a range of real-world tasks. Surprisingly, we found that a method which simply injects random forces into the simulation performs just as well as more complex methods, such as those which randomise the simulator's dynamics parameters, or adapt a policy online using recurrent network architectures.
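To make the idea of injecting random forces into a simulation concrete, here is a toy sketch using a 1D point mass. It is only an illustration under assumptions: the function name `simulate_with_random_forces`, the Gaussian noise model, the semi-implicit Euler integrator, and all parameter names are hypothetical and are not taken from the paper, which evaluates real robot tasks in a full physics simulator.

```python
import random


def simulate_with_random_forces(steps, dt, mass, control_force,
                                force_std, seed=0):
    """Roll out a 1D point mass while perturbing it with random forces.

    At every step a zero-mean Gaussian force with standard deviation
    force_std (an assumed noise model) is added to the control force.
    Returns the final (position, velocity).
    """
    rng = random.Random(seed)  # local generator keeps rollouts reproducible
    position, velocity = 0.0, 0.0
    for _ in range(steps):
        disturbance = rng.gauss(0.0, force_std)
        total_force = control_force + disturbance
        # Semi-implicit Euler: update velocity first, then position
        velocity += (total_force / mass) * dt
        position += velocity * dt
    return position, velocity
```

Setting `force_std` to zero gives the unperturbed rollout, so the same function can produce both nominal and perturbed training episodes; a policy trained on the perturbed episodes sees a wider range of dynamics than the nominal simulator alone provides.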
