Coarse-to-Fine for Sim-to-Real: Sub-Millimetre Precision Across the Workspace

Paper and Code

May 24, 2021

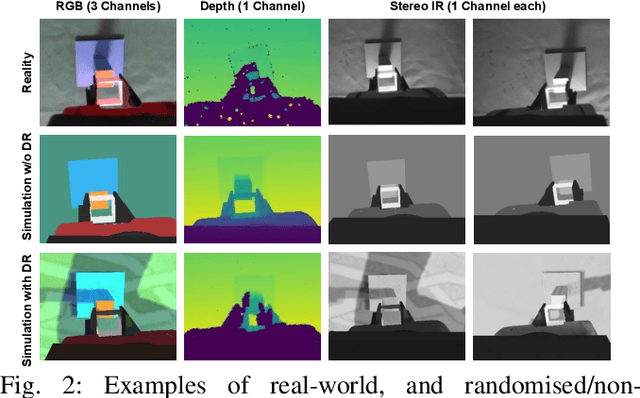

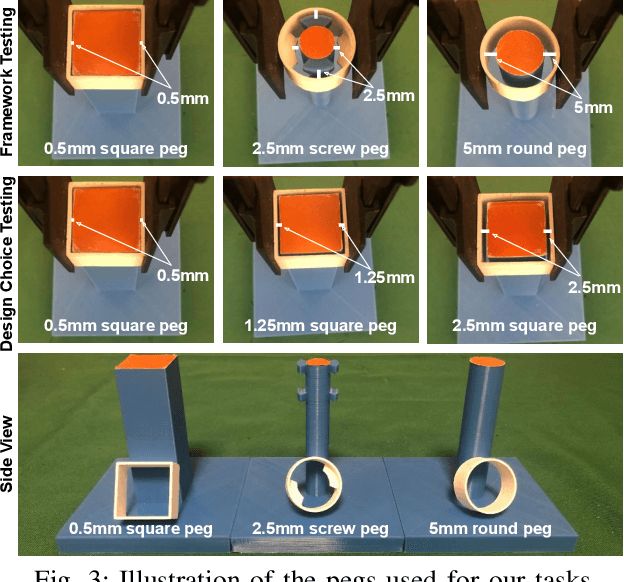

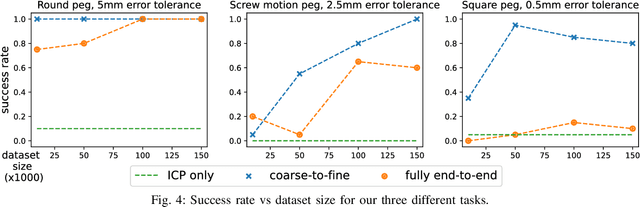

When training control policies for robot manipulation via deep learning, sim-to-real transfer can help satisfy the large data requirements. In this paper, we study the problem of zero-shot sim-to-real transfer when the task requires both highly precise control, with sub-millimetre error tolerance, and generalisation across the full workspace. Our framework uses a coarse-to-fine controller: each trajectory begins with classical motion planning based on pose estimation, then transitions to an end-to-end controller which maps images to actions and is trained in simulation with domain randomisation. In this way, we achieve precise control whilst also generalising the controller across the workspace and keeping the generality and robustness of vision-based, end-to-end control. Real-world experiments on a range of different tasks show that, by exploiting the best of both worlds, our framework significantly outperforms both pure motion-planning methods and purely learning-based methods. Furthermore, we answer a range of questions on best practices for precise sim-to-real transfer, such as how different image sensor modalities and image feature representations perform.
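The coarse-to-fine hand-off described above can be illustrated with a minimal sketch. This is not the paper's implementation: every name here (`coarse_stage`, `fine_stage`, `run_coarse_to_fine`, `switch_dist`) is hypothetical, the motion planner is replaced by a straight-line interpolation towards a noisy pose estimate, and the learned image-to-action policy is replaced by a simple proportional controller acting on a stand-in observation. It only shows how a coarse stage can bring the end-effector close to the target and a closed-loop stage can then remove the residual pose-estimation error.

```python
import math
from typing import Callable, List, Tuple

Vec = Tuple[float, float, float]  # end-effector position in metres


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def norm(a: Vec) -> float:
    return math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)


def coarse_stage(start: Vec, est_target: Vec, switch_dist: float, step: float) -> List[Vec]:
    """Assumed stand-in for a motion planner: move in a straight line towards
    the *estimated* target and stop `switch_dist` metres short of it."""
    path = [start]
    pos = start
    while True:
        to_target = sub(est_target, pos)
        remaining = norm(to_target) - switch_dist
        if remaining <= 1e-12:
            break
        move = min(step, remaining)
        pos = add(pos, scale(to_target, move / norm(to_target)))
        path.append(pos)
    return path


def fine_stage(pos: Vec, observe: Callable[[Vec], object],
               policy: Callable[[object], Vec],
               max_steps: int, stop_tol: float) -> List[Vec]:
    """Closed-loop stage: repeatedly observe and apply the policy's action.
    In the paper's setting the observation is an image and the policy is a
    network trained in simulation; here both are placeholders."""
    path: List[Vec] = []
    for _ in range(max_steps):
        action = policy(observe(pos))
        if norm(action) < stop_tol:
            break
        pos = add(pos, action)
        path.append(pos)
    return path


def run_coarse_to_fine(start: Vec, est_target: Vec,
                       observe: Callable[[Vec], object],
                       policy: Callable[[object], Vec],
                       switch_dist: float = 0.05, step: float = 0.01,
                       max_steps: int = 100, stop_tol: float = 1e-6):
    coarse = coarse_stage(start, est_target, switch_dist, step)
    fine = fine_stage(coarse[-1], observe, policy, max_steps, stop_tol)
    return coarse, fine


if __name__ == "__main__":
    true_target = (0.0, 0.0, 0.0)
    est_target = (0.004, -0.003, 0.002)  # pose estimate with a few mm of error
    observe = lambda pos: sub(true_target, pos)  # placeholder for a camera image
    policy = lambda obs: scale(obs, 0.5)         # placeholder for the learned policy
    coarse, fine = run_coarse_to_fine((0.3, 0.0, 0.2), est_target, observe, policy)
    print("final error (m):", norm(sub(true_target, fine[-1])))
```

In this toy setting the coarse stage alone would inherit the millimetre-scale error of the pose estimate, whereas the closed-loop stage keeps correcting against what it observes. The switching distance is an arbitrary value chosen for illustration.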
