Eric Wengrowski

Why Don't You Clean Your Glasses? Perception Attacks with Dynamic Optical Perturbations

Jul 27, 2023

Abstract: Camera-based autonomous systems that emulate human perception are increasingly being integrated into safety-critical platforms. Consequently, an established body of literature has emerged that explores adversarial attacks targeting the underlying machine learning models. Adapting adversarial attacks to the physical world is desirable for the attacker, as this removes the need to compromise digital systems. However, the real world poses challenges related to the "survivability" of adversarial manipulations given environmental noise in perception pipelines and the dynamicity of autonomous systems. In this paper, we take a sensor-first approach. We present EvilEye, a man-in-the-middle perception attack that leverages transparent displays to generate dynamic physical adversarial examples. EvilEye exploits the camera's optics to induce misclassifications under a variety of illumination conditions. To generate dynamic perturbations, we formalize the projection of a digital attack into the physical domain by modeling the transformation function of the captured image through the optical pipeline. Our extensive experiments show that EvilEye's generated adversarial perturbations are much more robust across varying environmental light conditions relative to existing physical perturbation frameworks, achieving a high attack success rate (ASR) while bypassing state-of-the-art physical adversarial detection frameworks. We demonstrate that the dynamic nature of EvilEye enables attackers to adapt adversarial examples across a variety of objects with a significantly higher ASR compared to state-of-the-art physical world attack frameworks. Finally, we discuss mitigation strategies against the EvilEye attack.
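To make "modeling the transformation function of the captured image through the optical pipeline" concrete, here is a deliberately simple toy forward model. It is not EvilEye's model. It assumes 1-D grayscale signals in [0, 1], a see-through display that passes a fixed fraction of scene light (`transmittance`), and an emitted pattern that is out of focus, approximated by a box blur. All function names and parameter values are hypothetical. An attacker would, in principle, optimize `pattern` through a model like this so that the predicted sensor image, not the raw pattern, fools the classifier.

```python
# Toy forward model of a see-through display placed in front of a camera lens.
# ASSUMPTIONS (hypothetical, not EvilEye's actual model): 1-D grayscale signals
# in [0, 1], the display transmits a fixed fraction of scene light, and its
# emitted pattern is out of focus, approximated here by a box blur.

def box_blur(signal, radius):
    """Average each sample with its neighbours within `radius` (window clipped at edges)."""
    n = len(signal)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        window = signal[lo:hi]
        out.append(sum(window) / len(window))
    return out


def captured_image(scene, pattern, transmittance=0.8, gain=0.5, radius=1):
    """Predict the sensor signal: attenuated scene light plus blurred display light, clipped to [0, 1]."""
    if len(scene) != len(pattern):
        raise ValueError("scene and pattern must have the same length")
    blurred = box_blur(pattern, radius)
    return [min(1.0, max(0.0, transmittance * s + gain * p))
            for s, p in zip(scene, blurred)]


# Example: a single bright display pixel spreads over its neighbours after defocus.
scene = [0.2, 0.4, 0.6, 0.8]
pattern = [0.0, 0.0, 1.0, 0.0]
print(captured_image(scene, pattern))
```

The additive, blurred term is the point of the sketch: the perturbation the camera records differs from the one shown on the display, which is why a digital attack has to be projected through an optical model before it is displayed.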

Reading Between the Pixels: Photographic Steganography for Camera Display Messaging

Apr 06, 2016

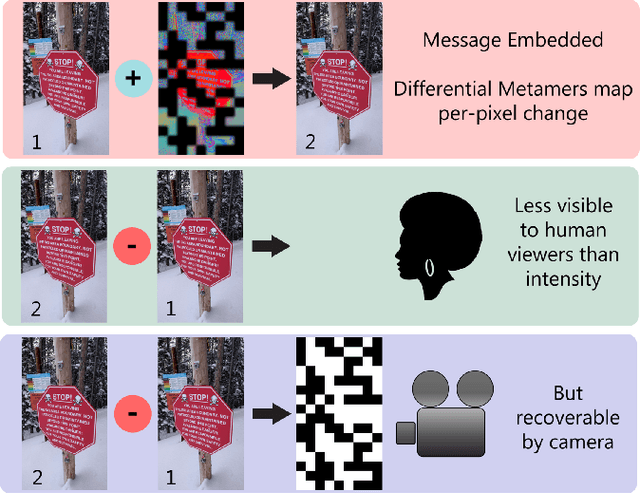

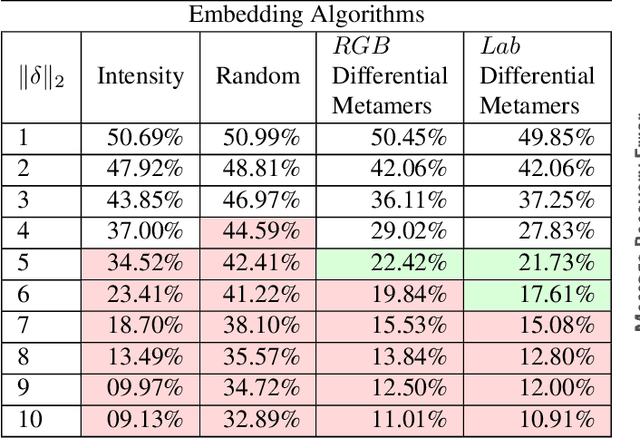

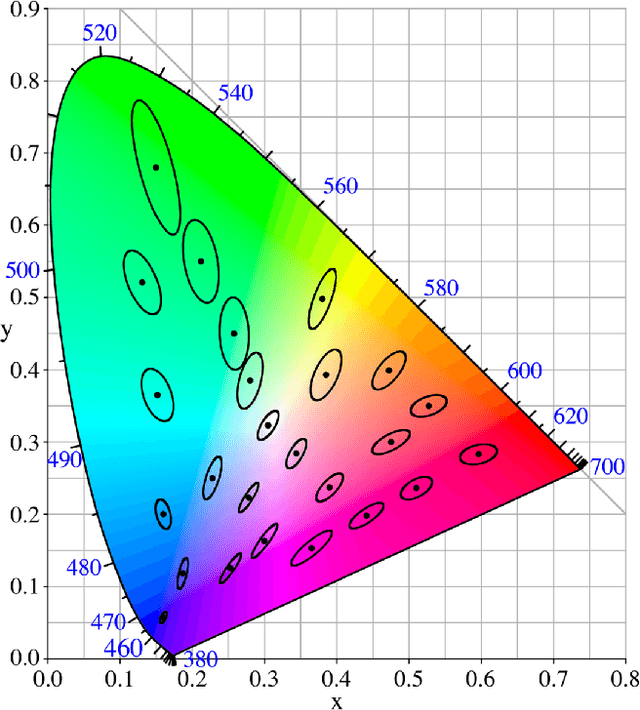

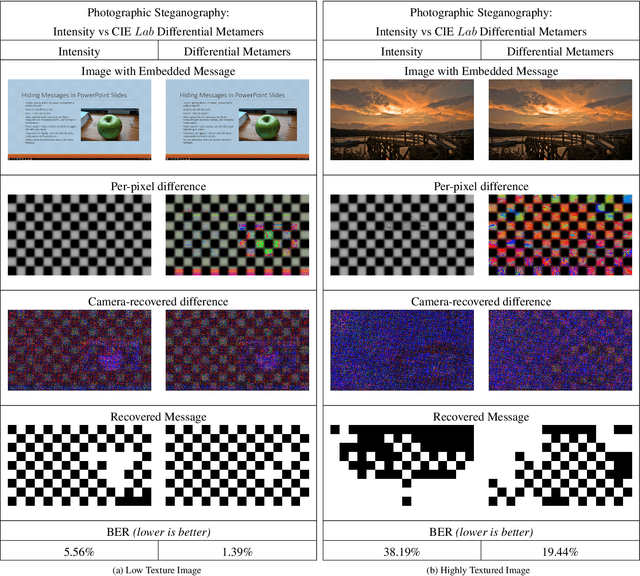

Abstract: We exploit human color metamers to send light-modulated messages that are less visible to the human eye but recoverable by cameras. These messages are a key component of camera-display messaging, such as handheld smartphones capturing information from electronic signage. Each color pixel in the display image is modified by a particular color gradient vector. The challenge is to find the color gradient that maximizes camera response while minimizing human response. The mismatch between human spectral sensitivity curves and camera sensitivity curves creates an opportunity for hidden messaging. Our approach does not require knowledge of these sensitivity curves; instead, we employ a data-driven method. We learn an ellipsoidal partitioning of the six-dimensional space of colors and color gradients. This partitioning creates metamer sets defined by the base color at the display pixel and the color gradient direction for message encoding. We sample from the resulting metamer sets to find color steps for each base color to embed a binary message into an arbitrary image with reduced visible artifacts. Unlike previous methods that rely on visually obtrusive intensity modulation, we embed with color so that the message is more hidden. Ordinary displays and cameras are used without the need for expensive LEDs or high-speed devices. The primary contribution of this work is a framework to map the pixels in an arbitrary image to a metamer pair for steganographic photo messaging.
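Two mechanics in this abstract can be illustrated in a few lines: testing whether a 6-D (base color, color gradient) point falls inside an ellipsoid, and encoding a bit as a small color step along a gradient direction. The sketch below is a toy under stated assumptions, not the paper's learned partition or encoder. The ellipsoid center and shape matrix are made-up numbers rather than values learned from data, the sign-of-step encoding and projection decoder are hypothetical choices, and the decoder assumes the receiver knows the base color.

```python
# Toy illustration of an ellipsoid membership test and colour-step bit embedding.
# ASSUMPTIONS (hypothetical): the ellipsoid centre/shape are made-up numbers,
# not a learned partition; a bit is encoded by the sign of a small colour step
# along a chosen gradient direction; the decoder knows the base colour.

def in_ellipsoid(x, centre, shape):
    """Return True if (x - c)^T A (x - c) <= 1, with A = `shape` (list of rows, assumed SPD)."""
    d = [xi - ci for xi, ci in zip(x, centre)]
    quad = sum(d[i] * shape[i][j] * d[j]
               for i in range(len(d)) for j in range(len(d)))
    return quad <= 1.0


def embed_bit(base_rgb, gradient, bit, step=0.02):
    """Shift a base colour by +step (bit 1) or -step (bit 0) along `gradient`, clipped to [0, 1]."""
    sign = 1.0 if bit else -1.0
    return [min(1.0, max(0.0, c + sign * step * g)) for c, g in zip(base_rgb, gradient)]


def recover_bit(observed_rgb, base_rgb, gradient):
    """Decode by projecting the colour difference onto the gradient direction."""
    proj = sum((o - b) * g for o, b, g in zip(observed_rgb, base_rgb, gradient))
    return 1 if proj > 0 else 0


base = [0.5, 0.5, 0.5]        # base colour at one display pixel (RGB in [0, 1])
gradient = [0.0, 0.6, -0.8]   # hypothetical unit-length colour-gradient direction
shape = [[0.5 if i == j else 0.0 for j in range(6)] for i in range(6)]  # made-up ellipsoid
centre = [0.5, 0.5, 0.5, 0.0, 0.0, 0.0]

# Only embed when the (colour, gradient) pair lies in the chosen 6-D region.
if in_ellipsoid(base + gradient, centre, shape):
    sent = embed_bit(base, gradient, 1)
    print(recover_bit(sent, base, gradient))
```

In the paper the gradient directions come from learned metamer sets chosen so the step is conspicuous to the camera but not to a viewer; here the direction is simply fixed by hand.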

Optimal Radiometric Calibration for Camera-Display Communication

Jan 08, 2015

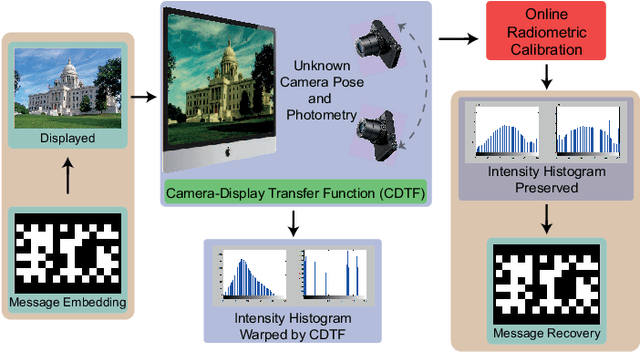

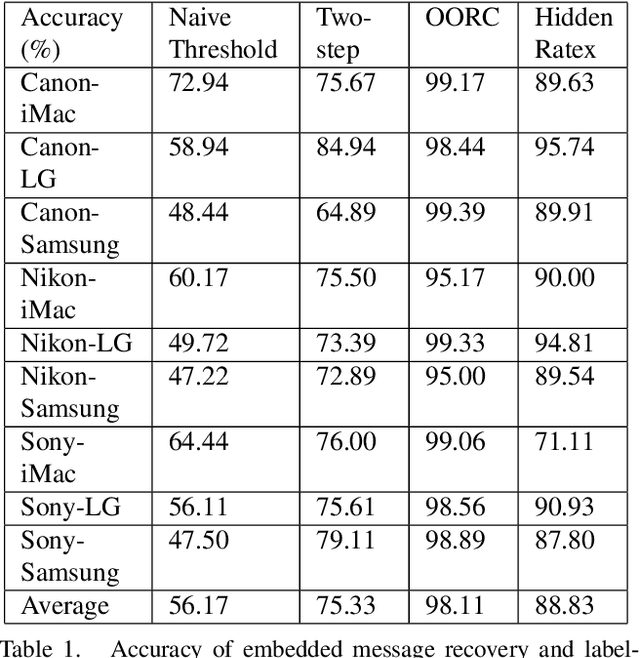

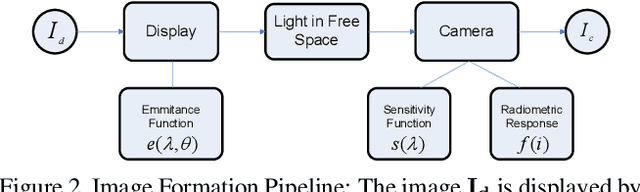

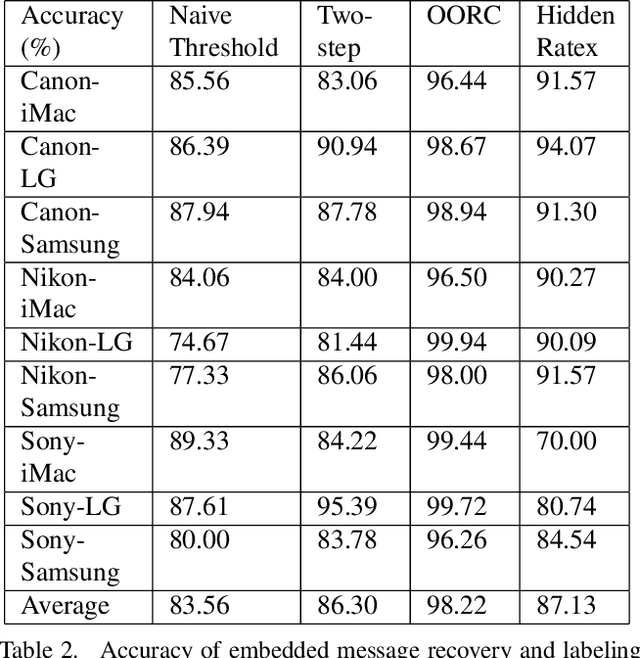

Abstract: We present a novel method for communicating between a camera and display by embedding and recovering hidden and dynamic information within a displayed image. A handheld camera pointed at the display can receive not only the display image, but also the underlying message. These active scenes are fundamentally different from traditional passive scenes like QR codes because image formation is based on display emittance, not surface reflectance. Detecting and decoding the message requires careful photometric modeling for computational message recovery. Unlike standard watermarking and steganography methods that lie outside the domain of computer vision, our message recovery algorithm uses illumination to optically communicate hidden messages in real world scenes. The key innovation of our approach is an algorithm that performs simultaneous radiometric calibration and message recovery in one convex optimization problem. By modeling the photometry of the system using a camera-display transfer function (CDTF), we derive a physics-based kernel function for support vector machine classification. We demonstrate that our method of optimal online radiometric calibration (OORC) leads to an efficient and robust algorithm for computational messaging between nine commercial cameras and displays.
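As a rough illustration of why a camera-display transfer function matters for decoding, the sketch below uses a simplified two-step stand-in: first fit a transfer curve from known calibration pixels, then invert it to decode message pixels. This is explicitly not OORC, which solves calibration and message recovery jointly in one convex problem with an SVM kernel. The power-law form `c = alpha * d**gamma`, the function names, and the "offset above a known base value" bit encoding are all hypothetical assumptions for illustration.

```python
import math

# Simplified two-step stand-in, NOT the paper's OORC (which solves calibration
# and message recovery jointly in one convex problem with an SVM kernel).
# ASSUMPTION (hypothetical): the camera-display transfer function is a power law
# c = alpha * d**gamma, with displayed d and captured c both in (0, 1].

def fit_power_law(displayed, captured):
    """Least-squares fit of log c = log alpha + gamma * log d; returns (alpha, gamma)."""
    xs = [math.log(d) for d in displayed]
    ys = [math.log(c) for c in captured]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)   # needs at least two distinct displayed values
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    gamma = sxy / sxx
    alpha = math.exp(my - gamma * mx)
    return alpha, gamma


def invert(captured_value, alpha, gamma):
    """Map a captured intensity back to an estimated displayed intensity."""
    return (captured_value / alpha) ** (1.0 / gamma)


def decode_bits(captured_msg, base_displayed, alpha, gamma):
    """Hypothetical encoding: bit is 1 if the estimated displayed value exceeds the known base."""
    return [1 if invert(c, alpha, gamma) > b else 0
            for c, b in zip(captured_msg, base_displayed)]


# Synthetic calibration pixels generated from a made-up transfer curve.
displayed = [0.1, 0.3, 0.5, 0.7, 0.9]
captured = [0.9 * d ** 2.2 for d in displayed]
alpha, gamma = fit_power_law(displayed, captured)

# Two message pixels: base 0.5 nudged up (bit 1) and down (bit 0).
msg = [0.9 * 0.52 ** 2.2, 0.9 * 0.48 ** 2.2]
print(decode_bits(msg, [0.5, 0.5], alpha, gamma))
```

Fitting in the log domain turns the power law into ordinary linear regression. The separation into "calibrate, then decode" is exactly the step the paper's joint formulation avoids.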
