Nils Ole Tippenhauer

Why Don't You Clean Your Glasses? Perception Attacks with Dynamic Optical Perturbations

Jul 27, 2023

Abstract: Camera-based autonomous systems that emulate human perception are increasingly being integrated into safety-critical platforms. Consequently, an established body of literature has emerged that explores adversarial attacks targeting the underlying machine learning models. Adapting adversarial attacks to the physical world is desirable for the attacker, as this removes the need to compromise digital systems. However, the real world poses challenges related to the "survivability" of adversarial manipulations given environmental noise in perception pipelines and the dynamicity of autonomous systems. In this paper, we take a sensor-first approach. We present EvilEye, a man-in-the-middle perception attack that leverages transparent displays to generate dynamic physical adversarial examples. EvilEye exploits the camera's optics to induce misclassifications under a variety of illumination conditions. To generate dynamic perturbations, we formalize the projection of a digital attack into the physical domain by modeling the transformation function of the captured image through the optical pipeline. Our extensive experiments show that EvilEye's generated adversarial perturbations are much more robust across varying environmental light conditions relative to existing physical perturbation frameworks, achieving a high attack success rate (ASR) while bypassing state-of-the-art physical adversarial detection frameworks. We demonstrate that the dynamic nature of EvilEye enables attackers to adapt adversarial examples across a variety of objects with a significantly higher ASR compared to state-of-the-art physical world attack frameworks. Finally, we discuss mitigation strategies against the EvilEye attack.

No Need to Know Physics: Resilience of Process-based Model-free Anomaly Detection for Industrial Control Systems

Dec 07, 2020

Abstract: In recent years, a number of process-based anomaly detection schemes for Industrial Control Systems were proposed. In this work, we provide the first systematic analysis of such schemes, and introduce a taxonomy of properties that are verified by those detection systems. We then present a novel general framework to generate adversarial spoofing signals that violate physical properties of the system, and use the framework to analyze four anomaly detectors published at top security conferences. We find that three of those detectors are susceptible to a number of adversarial manipulations (e.g., spoofing with precomputed patterns), which we call Synthetic Sensor Spoofing, and that one is resilient against our attacks. We investigate the root of its resilience and demonstrate that it comes from the properties that we introduced. Our attacks reduce the Recall (True Positive Rate) of the attacked schemes, leaving them unable to correctly detect anomalies. Thus, the vulnerabilities we discovered in the anomaly detectors show that, despite originally good detection performance, those detectors are not able to reliably learn physical properties of the system. Even attacks that prior work was expected to be resilient against (based on verified properties) were found to be successful. We argue that our findings demonstrate the need for both more complete attacks in datasets, and more critical analysis of process-based anomaly detectors. We plan to release our implementation as open-source, together with an extension of two public datasets with a set of Synthetic Sensor Spoofing attacks as generated by our framework.
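
As a reading aid for the Recall (True Positive Rate) metric mentioned in the abstract above, the following minimal sketch computes recall as TP / (TP + FN) over per-sample labels. The function name `recall` and the toy label vectors are illustrative assumptions, not data or code from the paper; they only show how missed detections lower the metric.

```python
def recall(y_true, y_pred):
    """Recall (True Positive Rate) = TP / (TP + FN), with 1 = anomaly, 0 = normal."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp + fn == 0:
        raise ValueError("recall is undefined when there are no true anomalies")
    return tp / (tp + fn)


# Toy, made-up labels (assumption): four anomalous samples out of six.
y_true = [0, 1, 1, 1, 1, 0]
pred_without_spoofing = [0, 1, 1, 1, 0, 0]  # detector flags 3 of 4 anomalies
pred_with_spoofing = [0, 1, 0, 0, 0, 0]     # spoofed readings hide 2 more

recall_before = recall(y_true, pred_without_spoofing)
recall_after = recall(y_true, pred_with_spoofing)
```

In this toy case the detector's recall falls when more anomalous samples go unflagged, which is the effect the abstract attributes to the spoofing attacks.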

Real-time Evasion Attacks with Physical Constraints on Deep Learning-based Anomaly Detectors in Industrial Control Systems

Jul 17, 2019

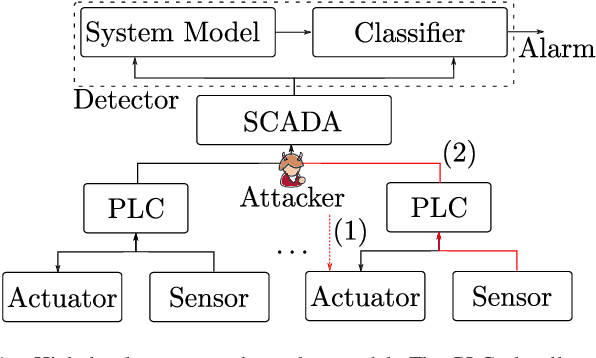

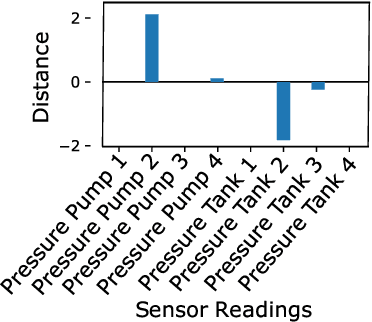

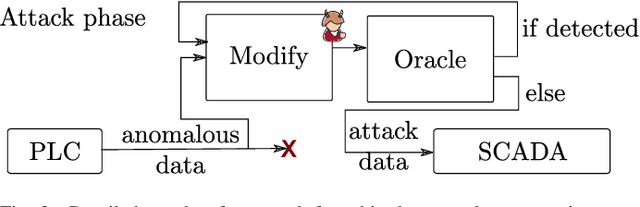

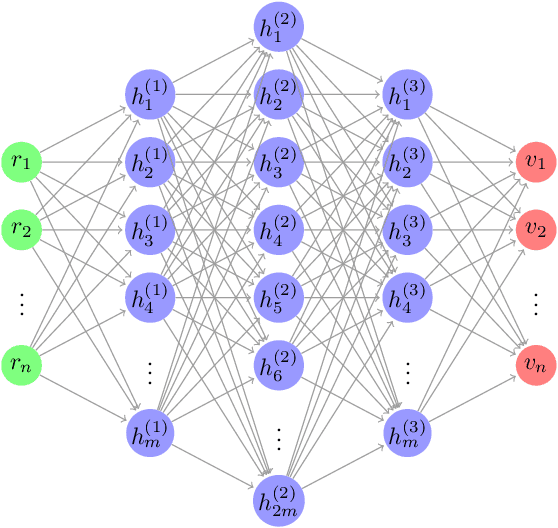

Abstract: Recently, a number of deep learning-based anomaly detection algorithms were proposed to detect attacks in dynamic industrial control systems. The detectors operate on measured sensor data, leveraging physical process models learned a priori. Evading detection by such systems is challenging, as an attacker needs to manipulate a constrained number of sensor readings in real-time with realistic perturbations according to the current state of the system. In this work, we propose a number of evasion attacks (with different assumptions on the attacker's knowledge), and compare the attacks' cost and efficiency against replay attacks. In particular, we show that a replay attack on a subset of sensor values can be detected easily as it violates physical constraints. In contrast, our proposed attacks leverage manipulated sensor readings that observe learned physical constraints of the system. Our proposed white box attacker uses an optimization approach with a detection oracle, while our black box attacker uses an autoencoder (or a convolutional neural network) to translate anomalous data into normal data. Our proposed approaches are implemented and evaluated on two different datasets pertaining to the domain of water distribution networks. We then demonstrate the efficacy of the real-time attack on a realistic testbed. Results show that the accuracy of the detection algorithms can be significantly reduced through real-time adversarial actions: for the BATADAL dataset, the attacker can reduce the detection accuracy from 0.6 to 0.14. In addition, we discuss and implement an Availability attack, in which the attacker introduces detection events with minimal changes of the reported data, in order to reduce confidence in the detector.

Detection of Unauthorized IoT Devices Using Machine Learning Techniques

Sep 14, 2017

Abstract: Security experts have demonstrated numerous risks imposed by Internet of Things (IoT) devices on organizations. Due to the widespread adoption of such devices, their diversity, standardization obstacles, and inherent mobility, organizations require an intelligent mechanism capable of automatically detecting suspicious IoT devices connected to their networks. In particular, devices not included in a white list of trustworthy IoT device types (allowed to be used within the organizational premises) should be detected. In this research, Random Forest, a supervised machine learning algorithm, was applied to features extracted from network traffic data with the aim of accurately identifying IoT device types from the white list. To train and evaluate multi-class classifiers, we collected and manually labeled network traffic data from 17 distinct IoT devices, representing nine types of IoT devices. Based on the classification of 20 consecutive sessions and the use of majority rule, IoT device types that are not on the white list were correctly detected as unknown in 96% of test cases (on average), and white listed device types were correctly classified by their actual types in 99% of cases. Some IoT device types were identified more quickly than others (e.g., sockets and thermostats were successfully detected within five TCP sessions of connecting to the network). Perfect detection of unauthorized IoT device types was achieved upon analyzing 110 consecutive sessions; perfect classification of white listed types required 346 consecutive sessions, 110 of which resulted in 99.49% accuracy. Further experiments demonstrated the successful applicability of classifiers trained in one location and tested on another. In addition, a discussion is provided regarding the resilience of our machine learning-based IoT white listing method to adversarial attacks.
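
The majority rule over consecutive sessions described in the abstract above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the abstract does not say how a single session is labeled "unknown", so the confidence `threshold`, the function names (`label_session`, `classify_device`), and the per-session probability dictionaries are all hypothetical. In practice the probabilities might come from a trained Random Forest, but no classifier is trained here.

```python
from collections import Counter

UNKNOWN = "unknown"  # label for device types not on the white list


def label_session(class_probs, threshold=0.5):
    """Label one session with its most probable white-listed type.

    Assumption: a session whose top probability is below `threshold`
    is labeled UNKNOWN. The threshold value is illustrative only.
    """
    best_type, best_prob = max(class_probs.items(), key=lambda kv: kv[1])
    return best_type if best_prob >= threshold else UNKNOWN


def classify_device(session_probs, window=20, threshold=0.5):
    """Apply majority rule over the first `window` consecutive sessions."""
    labels = [label_session(p, threshold) for p in session_probs[:window]]
    if not labels:
        raise ValueError("at least one session is required")
    # On a tie, Counter.most_common keeps the label seen first.
    return Counter(labels).most_common(1)[0][0]


# Hypothetical per-session class probabilities for two devices.
confident = {"thermostat": 0.9, "socket": 0.1}
uncertain = {"thermostat": 0.4, "socket": 0.35, "camera": 0.25}

listed_device = [confident] * 12 + [uncertain] * 8    # mostly confident
unlisted_device = [uncertain] * 15 + [confident] * 5  # mostly uncertain

listed_result = classify_device(listed_device)
unlisted_result = classify_device(unlisted_device)
```

Voting over a window smooths out individual misclassified sessions, which is consistent with the abstract's observation that longer session sequences improve detection, at the cost of waiting for more traffic before deciding.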
