Real-time Evasion Attacks with Physical Constraints on Deep Learning-based Anomaly Detectors in Industrial Control Systems


Jul 17, 2019

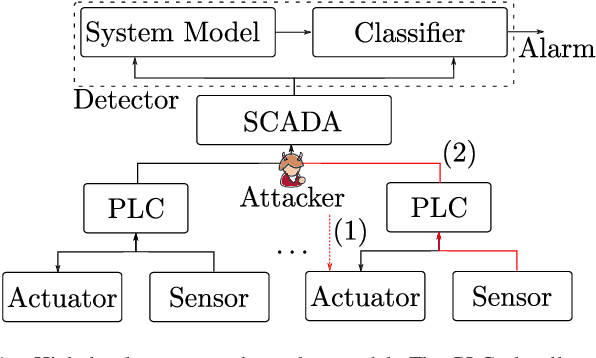

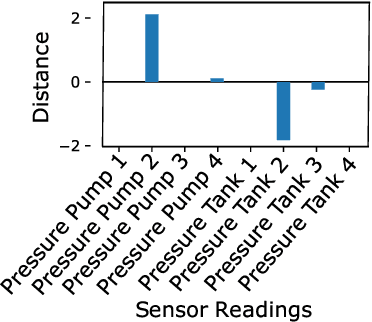

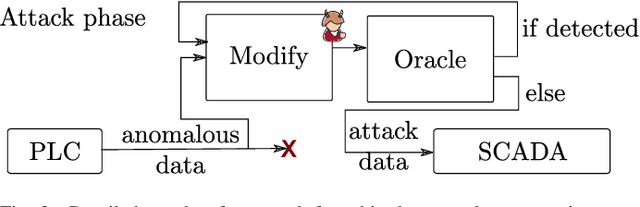

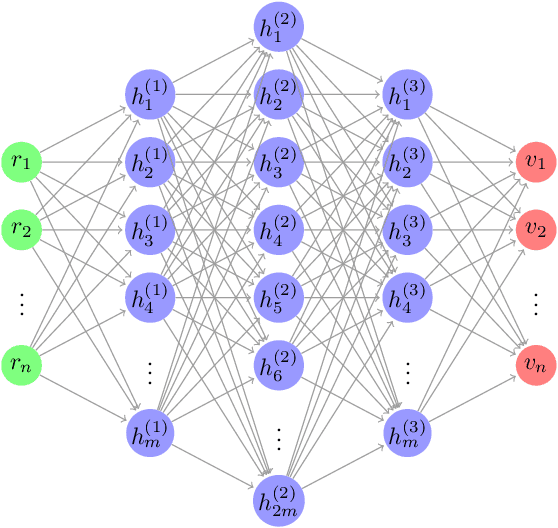

Recently, a number of deep learning-based anomaly detection algorithms have been proposed to detect attacks in dynamic industrial control systems. The detectors operate on measured sensor data, leveraging physical process models learned a priori. Evading detection by such systems is challenging, as an attacker needs to manipulate a constrained number of sensor readings in real time, with perturbations that are realistic given the current state of the system. In this work, we propose a number of evasion attacks (with different assumptions on the attacker's knowledge), and compare the attacks' cost and efficiency against replay attacks. In particular, we show that a replay attack on a subset of sensor values can be detected easily, as it violates physical constraints. In contrast, our proposed attacks leverage manipulated sensor readings that observe learned physical constraints of the system. Our proposed white box attacker uses an optimization approach with a detection oracle, while our black box attacker uses an autoencoder (or a convolutional neural network) to translate anomalous data into normal data. Our proposed approaches are implemented and evaluated on two different datasets pertaining to the domain of water distribution networks. We then demonstrate the efficacy of the real-time attack on a realistic testbed. Results show that the accuracy of the detection algorithms can be significantly reduced through real-time adversarial actions: for the BATADAL dataset, the attacker can reduce the detection accuracy from 0.6 to 0.14. In addition, we discuss and implement an availability attack, in which the attacker introduces detection events with minimal changes to the reported data, in order to reduce confidence in the detector.
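The claim that a replay attack on a subset of sensors violates physical constraints can be illustrated with a toy sketch. It is not the paper's detector or data: a one-component PCA (a linear stand-in for a deep autoencoder) learns how four synthetic sensors co-vary, and readings are flagged by reconstruction error. The sensor count, the `mixing` weights, the noise level, and the threshold quantile are all assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical process: 4 sensors driven by one hidden physical state.
MIXING = np.array([[1.0, 2.0, -1.0, 0.5]])

def simulate(n, rng):
    """Return n readings of 4 correlated sensors plus small measurement noise."""
    state = rng.uniform(0.0, 1.0, size=(n, 1))
    noise = 0.01 * rng.standard_normal((n, 4))
    return state @ MIXING + noise

# "Train" the detector on normal data: keep the top principal component.
train = simulate(500, rng)
mean = train.mean(axis=0)
_, _, vt = np.linalg.svd(train - mean, full_matrices=False)
components = vt[:1]  # shape (1, 4)

def reconstruction_error(x):
    """Mean squared residual after projecting onto the learned subspace."""
    centered = x - mean
    recon = centered @ components.T @ components
    return np.mean((centered - recon) ** 2, axis=1)

# Assumed alarm threshold: 99.5th percentile of the training error.
threshold = np.quantile(reconstruction_error(train), 0.995)

def is_anomalous(x):
    return reconstruction_error(x) > threshold

# Partial replay: overwrite sensors 0 and 1 with previously recorded values,
# while sensors 2 and 3 keep reporting the live state.
live = simulate(200, rng)
recorded = simulate(200, rng)
replayed = live.copy()
replayed[:, :2] = recorded[:, :2]

rate_normal = is_anomalous(live).mean()
rate_replay = is_anomalous(replayed).mean()
print(f"flagged (normal): {rate_normal:.2f}, flagged (partial replay): {rate_replay:.2f}")
```

Because the replayed sensors reflect an earlier state while the untouched sensors reflect the current one, the combined reading leaves the learned subspace and the residual grows. An evasion attack in the sense of the abstract would instead have to choose replacement values that stay consistent with the readings it does not control.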
