Elham Sagheb

Explainable Diagnosis Prediction through Neuro-Symbolic Integration

Oct 01, 2024

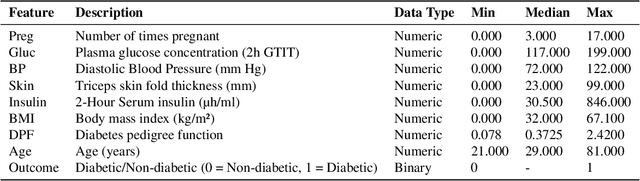

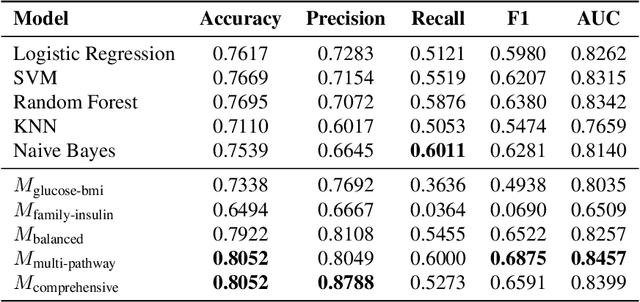

Abstract: Diagnosis prediction is a critical task in healthcare, where timely and accurate identification of medical conditions can significantly impact patient outcomes. Traditional machine learning and deep learning models have achieved notable success in this domain but often lack interpretability, which is a crucial requirement in clinical settings. In this study, we explore the use of neuro-symbolic methods, specifically Logical Neural Networks (LNNs), to develop explainable models for diagnosis prediction. We design and implement LNN-based models that integrate domain-specific knowledge through logical rules with learnable thresholds. Our models, particularly $M_{\text{multi-pathway}}$ and $M_{\text{comprehensive}}$, outperform traditional models such as Logistic Regression, SVM, and Random Forest, achieving higher accuracy (up to 80.52%) and AUROC scores (up to 0.8457) in the case study of diabetes prediction. The learned weights and thresholds within the LNN models provide direct insights into feature contributions, enhancing interpretability without compromising predictive power. These findings highlight the potential of neuro-symbolic approaches to bridge the gap between accuracy and explainability in healthcare AI applications. By offering transparent and adaptable diagnostic models, our work contributes to the advancement of precision medicine and supports the development of equitable healthcare solutions. Future research will focus on extending these methods to larger and more diverse datasets to further validate their applicability across different medical conditions and populations.

Give me a knee radiograph, I will tell you where the knee joint area is: a deep convolutional neural network adventure

Feb 11, 2022

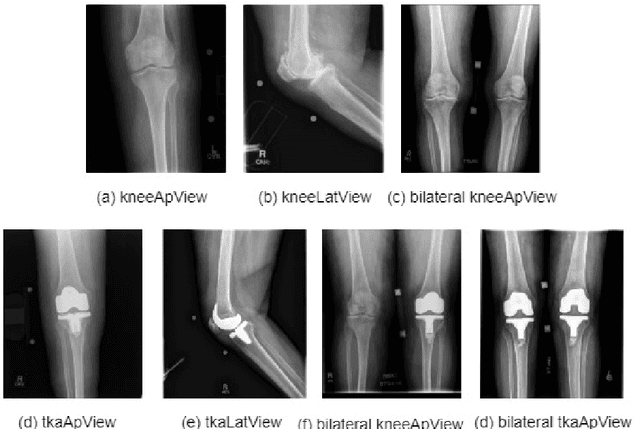

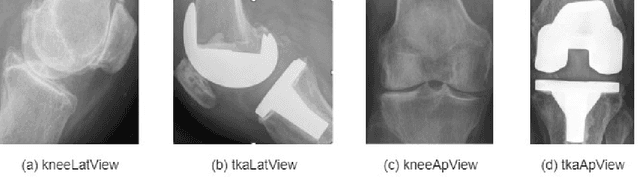

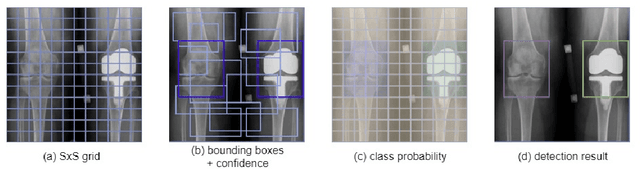

Abstract: Knee pain is undoubtedly the most common musculoskeletal symptom that impairs quality of life and limits mobility and function across all ages. Knee pain is clinically evaluated with routine radiographs; their widespread adoption and low cost make them the principal component in the assessment of knee pain and knee pathologies such as arthritis, trauma, and sports injuries. However, interpretation of knee radiographs is still highly subjective, and overlapping structures within the radiographs, together with the large volume of images that must be analyzed daily, make interpretation challenging for both naive and experienced practitioners. There is thus a need for an artificial intelligence strategy that objectively and automatically interprets knee radiographs, facilitating timely triage of abnormal radiographs. The current work proposes an accurate and effective pipeline for autonomous detection, localization, and classification of the knee joint area in plain radiographs, combining the You Only Look Once (YOLO v3) deep convolutional neural network with a large, fully annotated dataset of knee radiographs. The present work is expected to stimulate more interest from the deep learning computer vision community in this pragmatic clinical application.
