Eddie Huang

Graph Coarsening via Convolution Matching for Scalable Graph Neural Network Training

Dec 24, 2023

Abstract:Graph summarization as a preprocessing step is an effective and complementary technique for scalable graph neural network (GNN) training. In this work, we propose the Coarsening Via Convolution Matching (CONVMATCH) algorithm and a highly scalable variant, A-CONVMATCH, for creating summarized graphs that preserve the output of graph convolution. We evaluate CONVMATCH on six real-world link prediction and node classification graph datasets, and show it is efficient and preserves prediction performance while significantly reducing the graph size. Notably, CONVMATCH achieves up to 95% of the prediction performance of GNNs on node classification while trained on graphs summarized down to 1% the size of the original graph. Furthermore, on link prediction tasks, CONVMATCH consistently outperforms all baselines, achieving up to a 2x improvement.

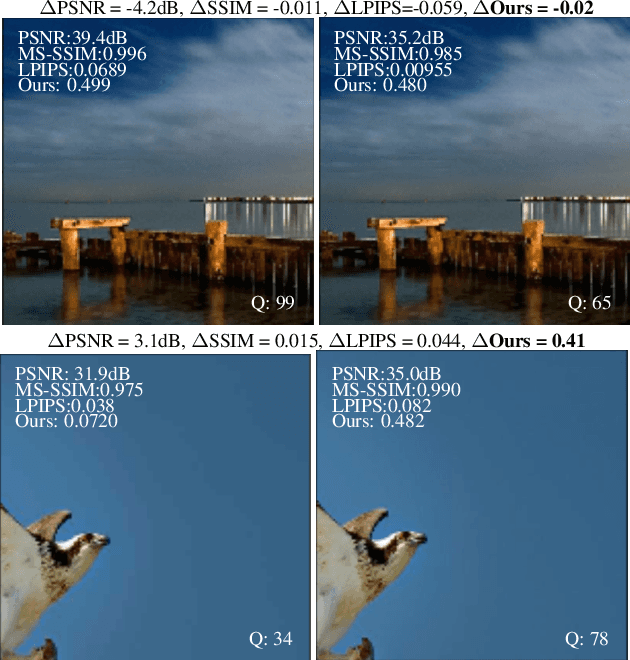

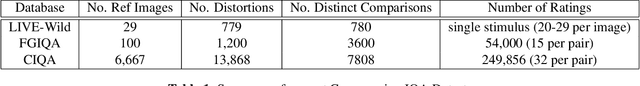

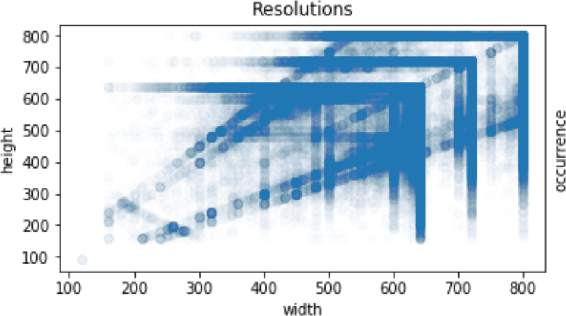

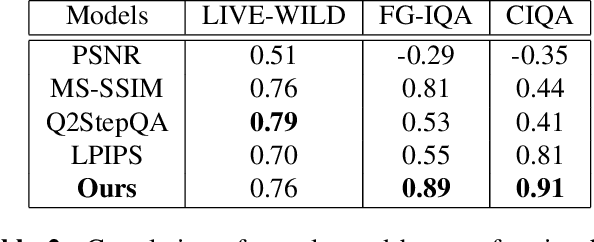

Deep Perceptual Image Quality Assessment for Compression

Mar 01, 2021

Abstract:Lossy Image compression is necessary for efficient storage and transfer of data. Typically the trade-off between bit-rate and quality determines the optimal compression level. This makes the image quality metric an integral part of any imaging system. While the existing full-reference metrics such as PSNR and SSIM may be less sensitive to perceptual quality, the recently introduced learning methods may fail to generalize to unseen data. In this paper we propose the largest image compression quality dataset to date with human perceptual preferences, enabling the use of deep learning, and we develop a full reference perceptual quality assessment metric for lossy image compression that outperforms the existing state-of-the-art methods. We show that the proposed model can effectively learn from thousands of examples available in the new dataset, and consequently it generalizes better to other unseen datasets of human perceptual preference.

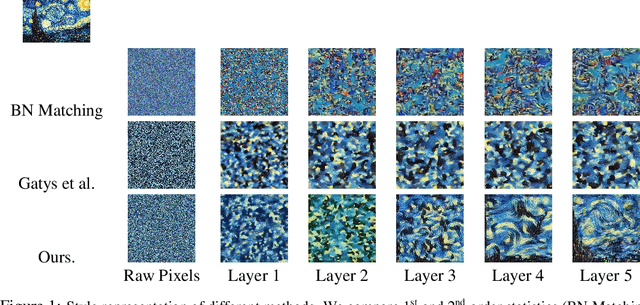

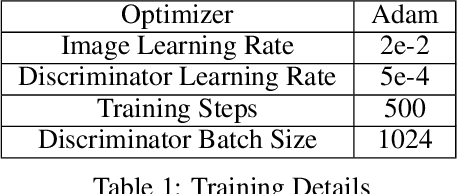

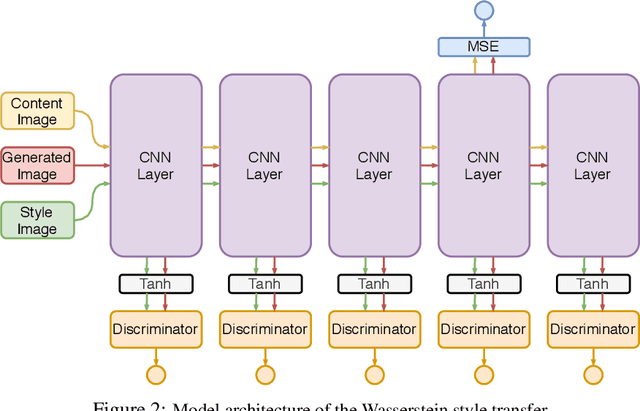

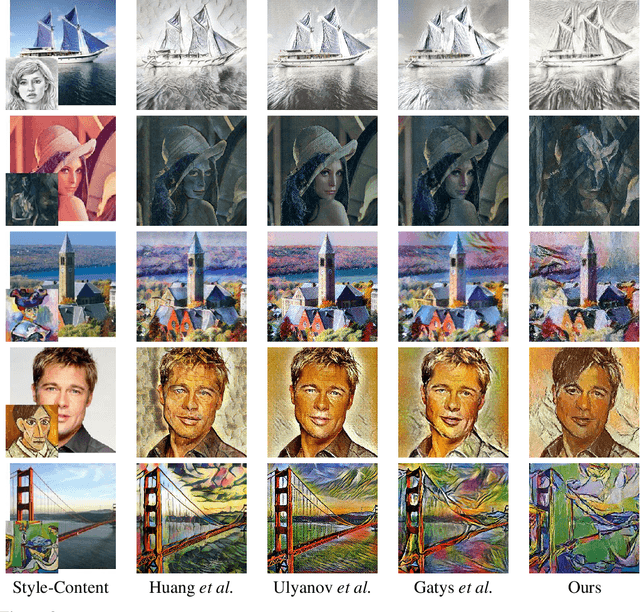

Style is a Distribution of Features

Jul 25, 2020

Abstract:Neural style transfer (NST) is a powerful image generation technique that uses a convolutional neural network (CNN) to merge the content of one image with the style of another. Contemporary methods of NST use first or second order statistics of the CNN's features to achieve transfers with relatively little computational cost. However, these methods cannot fully extract the style from the CNN's features. We present a new algorithm for style transfer that fully extracts the style from the features by redefining the style loss as the Wasserstein distance between the distribution of features. Thus, we set a new standard in style transfer quality. In addition, we state two important interpretations of NST. The first is a re-emphasis from Li et al., which states that style is simply the distribution of features. The second states that NST is a type of generative adversarial network (GAN) problem.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge