Eakta Jain

Towards mitigating uncann(eye)ness in face swaps via gaze-centric loss terms

Feb 05, 2024

Abstract:Advances in face swapping have enabled the automatic generation of highly realistic faces. Yet face swaps are perceived differently than when looking at real faces, with key differences in viewer behavior surrounding the eyes. Face swapping algorithms generally place no emphasis on the eyes, relying on pixel or feature matching losses that consider the entire face to guide the training process. We further investigate viewer perception of face swaps, focusing our analysis on the presence of an uncanny valley effect. We additionally propose a novel loss equation for the training of face swapping models, leveraging a pretrained gaze estimation network to directly improve representation of the eyes. We confirm that viewed face swaps do elicit uncanny responses from viewers. Our proposed improvements significantly reduce viewing angle errors between face swaps and their source material. Our method additionally reduces the prevalence of the eyes as a deciding factor when viewers perform deepfake detection tasks. Our findings have implications for face swapping for special effects, as digital avatars, as privacy mechanisms, and more; negative responses from users could limit effectiveness in said applications. Our gaze improvements are a first step towards alleviating negative viewer perceptions via a targeted approach.

Introducing Explicit Gaze Constraints to Face Swapping

May 25, 2023

Abstract:Face swapping combines one face's identity with another face's non-appearance attributes (expression, head pose, lighting) to generate a synthetic face. This technology is rapidly improving, but falls flat when reconstructing some attributes, particularly gaze. Image-based loss metrics that consider the full face do not effectively capture the perceptually important, yet spatially small, eye regions. Improving gaze in face swaps can improve naturalness and realism, benefiting applications in entertainment, human computer interaction, and more. Improved gaze will also directly improve Deepfake detection efforts, serving as ideal training data for classifiers that rely on gaze for classification. We propose a novel loss function that leverages gaze prediction to inform the face swap model during training and compare against existing methods. We find all methods to significantly benefit gaze in resulting face swaps.
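The abstract does not give the loss equation, so the following is only a minimal sketch of how a gaze term might be added to a full-face loss. It assumes a pretrained gaze estimator that returns (pitch, yaw) angles in radians. The angle-to-vector convention, the names `gaze_to_vector`, `angular_error`, and `total_loss`, and the `gaze_weight` parameter are all hypothetical and are not taken from the paper.

```python
import math


def gaze_to_vector(pitch, yaw):
    """Convert (pitch, yaw) in radians to a unit 3D gaze direction.

    The sign convention here is an assumption; gaze estimators differ.
    """
    return (
        -math.cos(pitch) * math.sin(yaw),
        -math.sin(pitch),
        -math.cos(pitch) * math.cos(yaw),
    )


def angular_error(gaze_a, gaze_b):
    """Angle in radians between two gaze directions given as (pitch, yaw)."""
    va = gaze_to_vector(*gaze_a)
    vb = gaze_to_vector(*gaze_b)
    dot = sum(a * b for a, b in zip(va, vb))
    # Clamp to guard against floating-point drift outside [-1, 1].
    dot = max(-1.0, min(1.0, dot))
    return math.acos(dot)


def total_loss(full_face_loss, reference_gaze, swap_gaze, gaze_weight=1.0):
    """Full-face loss plus a weighted gaze penalty (hypothetical weighting)."""
    return full_face_loss + gaze_weight * angular_error(reference_gaze, swap_gaze)
```

In this sketch `reference_gaze` stands for the gaze predicted on the image whose gaze the swap should reproduce, and `swap_gaze` for the gaze predicted on the generated face. In an actual training loop both would come from the gaze network, and the penalty would be computed on differentiable tensors rather than Python floats.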

Eye-tracked Virtual Reality: A Comprehensive Survey on Methods and Privacy Challenges

May 23, 2023

Abstract:Recent developments in computer hardware, sensor technologies, and artificial intelligence can make virtual reality (VR) and virtual spaces an important part of everyday life. Eye tracking offers not only a hands-free way of interaction but also the possibility of a deeper understanding of human visual attention and cognitive processes in VR. Despite these possibilities, eye-tracking data also reveal privacy-sensitive attributes of users when combined with information about the presented stimulus. To address these possibilities and potential privacy issues, in this survey, we first cover major works in eye tracking, VR, and privacy between 2012 and 2022. The eye tracking in VR part covers the complete pipeline of eye-tracking methodology, from pupil detection and gaze estimation to offline use and analyses; for privacy and security, we focus on eye-based authentication as well as computational methods to preserve the privacy of individuals and their eye-tracking data in VR. Finally, taking all of this into consideration, we outline three main directions for the research community, focusing mainly on privacy challenges. In summary, this survey provides an extensive literature review of the possibilities of eye tracking in VR and the privacy implications of those possibilities.

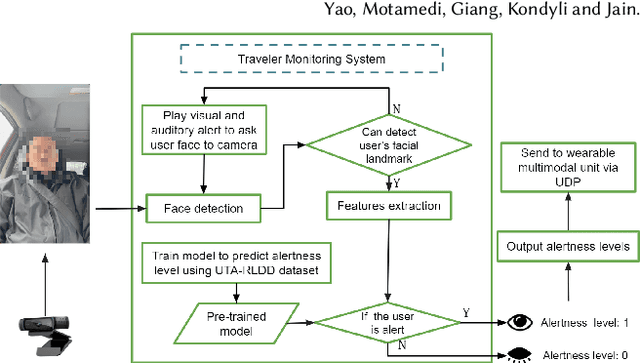

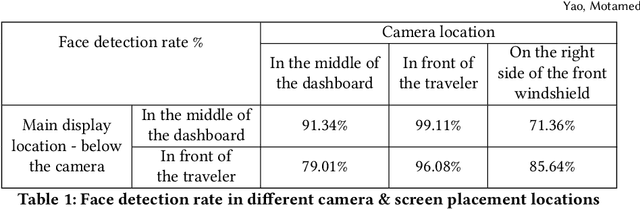

In-vehicle alertness monitoring for older adults

Aug 17, 2022

Abstract:Alertness monitoring in the context of driving improves safety and saves lives. Computer vision based alertness monitoring is an active area of research. However, the algorithms and datasets that exist for alertness monitoring are primarily aimed at younger adults (18-50 years old). We present a system for in-vehicle alertness monitoring for older adults. Through a design study, we ascertained the variables and parameters that are suitable for older adults traveling independently in Level 5 vehicles. We implemented a prototype traveler monitoring system and evaluated the alertness detection algorithm on ten older adults (70 years and older). We report on the system design and implementation at a level of detail that is suitable for the beginning researcher or practitioner. Our study suggests that dataset development is the foremost challenge for developing alertness monitoring systems targeted at older adults. This study is the first of its kind for a hitherto under-studied population and has implications for future work on algorithm development and system design through participatory methods.

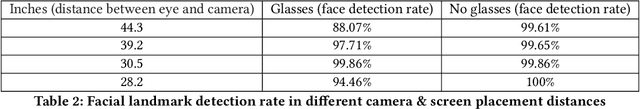

Practical Digital Disguises: Leveraging Face Swaps to Protect Patient Privacy

Apr 13, 2022

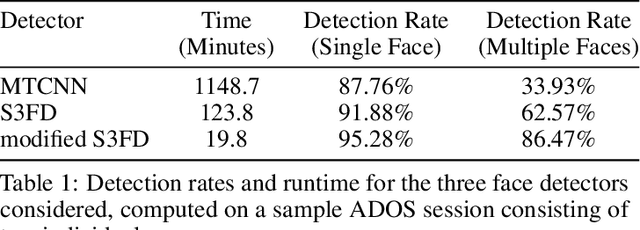

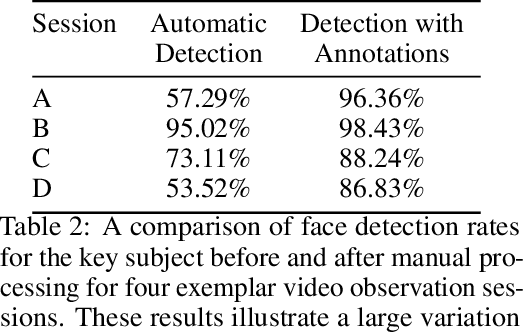

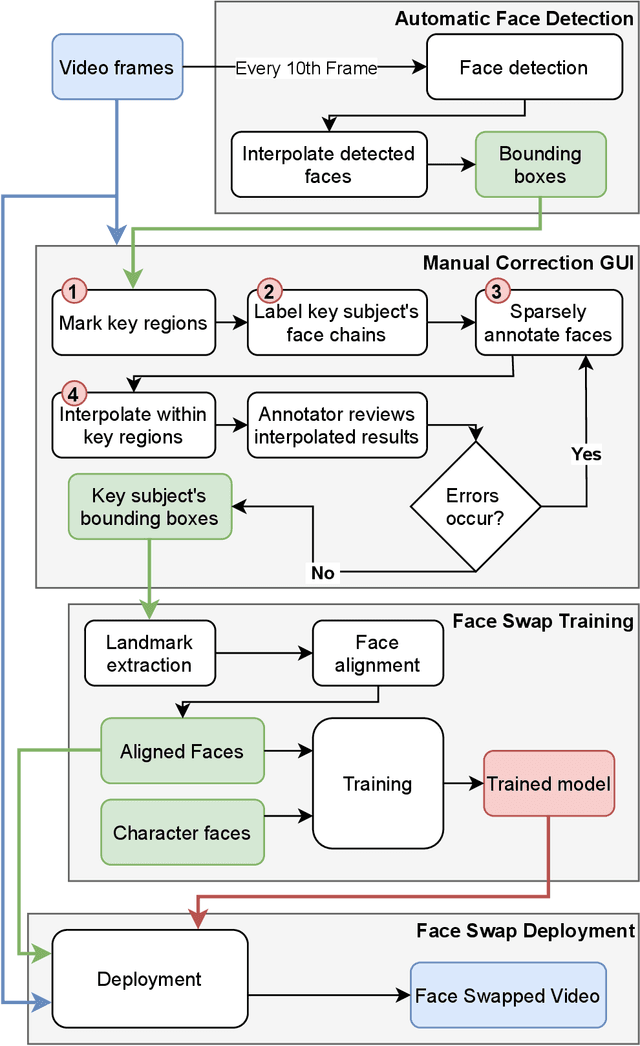

Abstract:With rapid advancements in image generation technology, face swapping for privacy protection has emerged as an active area of research. The ultimate benefit is improved access to video datasets, e.g. in healthcare settings. Recent literature has proposed deep network-based architectures to perform facial swaps and reported the associated reduction in facial recognition accuracy. However, there is not much reporting on how well these methods preserve the types of semantic information needed for the privatized videos to remain useful for their intended application. Our main contribution is a novel end-to-end face swapping pipeline for recorded videos of standardized assessments of autism symptoms in children. Through this design, we are the first to provide a methodology for assessing the privacy-utility trade-offs for the face swapping approach to patient privacy protection. Our methodology can show, for example, that current deep network based face swapping is bottle-necked by face detection in real world videos, and the extent to which gaze and expression information is preserved by face swaps relative to baseline privatization methods such as blurring.

Differential Privacy for Eye-Tracking Data

Apr 15, 2019

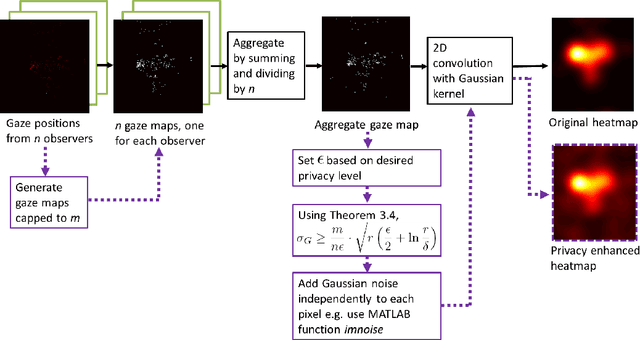

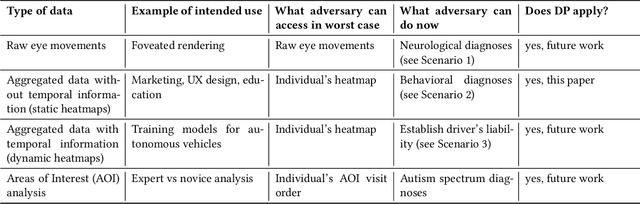

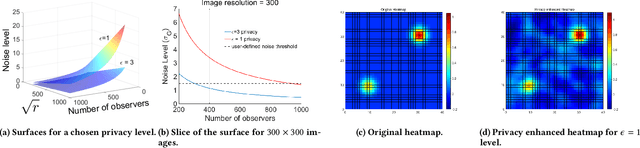

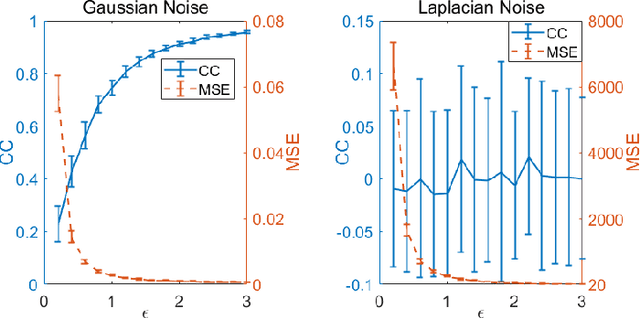

Abstract:As large eye-tracking datasets are created, data privacy is a pressing concern for the eye-tracking community. De-identifying data does not guarantee privacy because multiple datasets can be linked for inferences. A common belief is that aggregating individuals' data into composite representations such as heatmaps protects the individual. However, we analytically examine the privacy of (noise-free) heatmaps and show that they do not guarantee privacy. We further propose two noise mechanisms that guarantee privacy and analyze their privacy-utility tradeoff. Analysis reveals that our Gaussian noise mechanism is an elegant solution to preserve privacy for heatmaps. Our results have implications for interdisciplinary research to create differentially private mechanisms for eye tracking.
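As a rough illustration of adding Gaussian noise to a heatmap, the sketch below applies the textbook Gaussian mechanism, which calibrates the noise scale as sigma = sensitivity * sqrt(2 ln(1.25 / delta)) / epsilon for 0 < epsilon < 1. It is not the paper's mechanism or analysis. The function names are hypothetical, and the `sensitivity` value (how much one individual can change the heatmap in L2 norm) is an assumption the caller must supply.

```python
import math
import random


def gaussian_sigma(sensitivity, epsilon, delta):
    """Noise scale of the classic (epsilon, delta) Gaussian mechanism."""
    if not (0.0 < epsilon < 1.0):
        raise ValueError("this calibration assumes 0 < epsilon < 1")
    if not (0.0 < delta < 1.0):
        raise ValueError("delta must lie in (0, 1)")
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def privatize_heatmap(heatmap, sensitivity, epsilon, delta, rng=None):
    """Return a copy of a 2D heatmap with i.i.d. Gaussian noise on each cell."""
    rng = rng or random.Random()
    sigma = gaussian_sigma(sensitivity, epsilon, delta)
    return [[cell + rng.gauss(0.0, sigma) for cell in row] for row in heatmap]


# Example: a tiny aggregate heatmap with an assumed sensitivity of 1.0.
noisy = privatize_heatmap(
    [[3.0, 1.0], [0.0, 5.0]],
    sensitivity=1.0,
    epsilon=0.5,
    delta=1e-5,
    rng=random.Random(0),
)
```

A smaller epsilon or delta gives a larger sigma, which is the privacy-utility tradeoff in this setting: stronger guarantees produce a noisier heatmap.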
