Efe Bozkir

Exploring User Acceptance and Concerns toward LLM-powered Conversational Agents in Immersive Extended Reality

Dec 17, 2025

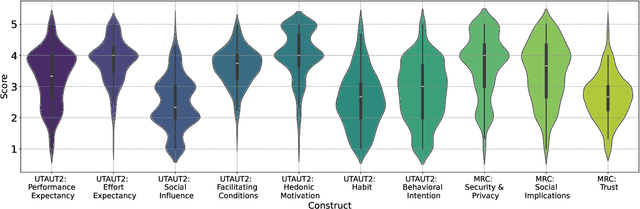

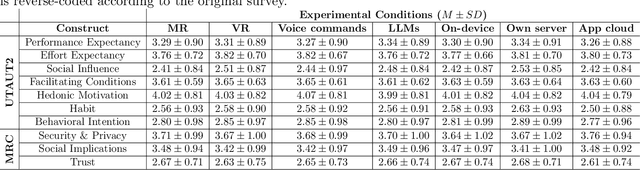

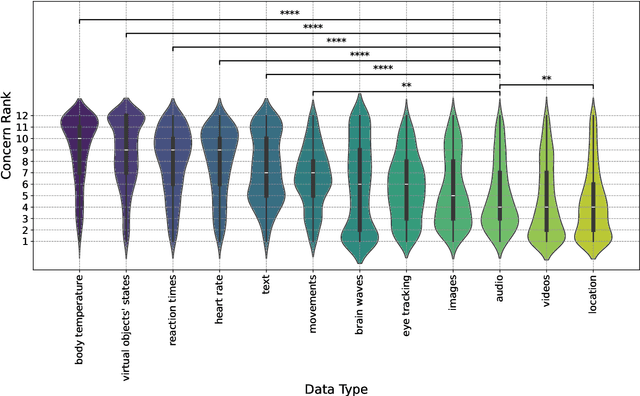

Abstract: The rapid development of generative artificial intelligence (AI) and large language models (LLMs), and the availability of services that make them accessible, have led the general public to begin incorporating them into everyday life. The extended reality (XR) community has also sought to integrate LLMs, particularly in the form of conversational agents, to enhance user experience and task efficiency. When interacting with such conversational agents, users may easily disclose sensitive information due to the naturalistic flow of the conversations, and combining such conversational data with fine-grained sensor data may lead to novel privacy issues. To address these issues, a user-centric understanding of technology acceptance and concerns is essential. To this end, we conducted a large-scale crowdsourcing study with 1036 participants, examining user decision-making processes regarding LLM-powered conversational agents in XR, across factors of XR setting type, speech interaction type, and data processing location. We found that while users generally accept these technologies, they express concerns related to security, privacy, social implications, and trust. Our results suggest that familiarity plays a crucial role, as daily generative AI use is associated with greater acceptance. In contrast, previous ownership of XR devices is linked to less acceptance, possibly due to existing familiarity with the settings. We also found that men report higher acceptance with fewer concerns than women. Regarding data type sensitivity, location data elicited the most significant concern, while body temperature and virtual object states were considered least sensitive. Overall, our study highlights the importance of practitioners effectively communicating their measures to users, who may remain distrustful. We conclude with implications and recommendations for LLM-powered XR.

Examining the Role of LLM-Driven Interactions on Attention and Cognitive Engagement in Virtual Classrooms

May 12, 2025

Abstract: Transforming educational technologies through the integration of large language models (LLMs) and virtual reality (VR) offers the potential for immersive and interactive learning experiences. However, the effects of LLMs on user engagement and attention in educational environments remain open questions. In this study, we utilized a fully LLM-driven virtual learning environment, where peers and teachers were LLM-driven, to examine how students behaved in such settings. Specifically, we investigated how peer question-asking behaviors influenced student engagement, attention, cognitive load, and learning outcomes. We found that, in conditions where LLM-driven peer learners asked questions, students exhibited more targeted visual scanpaths, with their attention directed toward the learning content, particularly in complex subjects. Our results suggest that peer questions did not introduce extraneous cognitive load directly, as the cognitive load is strongly correlated with increased attention to the learning material. Considering these findings, we provide design recommendations for optimizing VR learning spaces.

Multimodal Assessment of Classroom Discourse Quality: A Text-Centered Attention-Based Multi-Task Learning Approach

May 12, 2025

Abstract: Classroom discourse is an essential vehicle through which teaching and learning take place. Assessing different characteristics of discursive practices and linking them to student learning achievement enhances the understanding of teaching quality. Traditional assessments rely on manual coding of classroom observation protocols, which is time-consuming and costly. Despite many studies utilizing AI techniques to analyze classroom discourse at the utterance level, investigations into the evaluation of discursive practices throughout an entire lesson segment remain limited. To address this gap, our study proposes a novel text-centered multimodal fusion architecture to assess the quality of three discourse components grounded in the Global Teaching InSights (GTI) observation protocol: Nature of Discourse, Questioning, and Explanations. First, we employ attention mechanisms to capture inter- and intra-modal interactions from transcript, audio, and video streams. Second, a multi-task learning approach is adopted to jointly predict the quality scores of the three components. Third, we formulate the task as an ordinal classification problem to account for rating level order. The effectiveness of these designed elements is demonstrated through an ablation study on the GTI Germany dataset containing 92 videotaped math lessons. Our results highlight the dominant role of text modality in approaching this task. Integrating acoustic features enhances the model's consistency with human ratings, achieving an overall Quadratic Weighted Kappa score of 0.384, comparable to human inter-rater reliability (0.326). Our study lays the groundwork for the future development of automated discourse quality assessment to support teacher professional development through timely feedback on multidimensional discourse practices.
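For readers unfamiliar with the Quadratic Weighted Kappa reported in this abstract, the following is a minimal sketch of the standard metric in plain Python. It is not the authors' evaluation code: the function name `quadratic_weighted_kappa`, the encoding of rating levels as integers `0..num_levels-1`, and the handling of the degenerate single-category case are assumptions made for illustration.

```python
from typing import Sequence


def quadratic_weighted_kappa(
    rater_a: Sequence[int], rater_b: Sequence[int], num_levels: int
) -> float:
    """Agreement between two ordinal ratings, penalizing large disagreements more.

    Ratings are assumed to be integers in the range 0..num_levels-1.
    """
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("both raters need the same, non-zero number of ratings")
    if num_levels < 2:
        raise ValueError("at least two rating levels are required")

    # Observed confusion matrix: observed[i][j] counts items rated i by A and j by B.
    observed = [[0] * num_levels for _ in range(num_levels)]
    for a, b in zip(rater_a, rater_b):
        observed[a][b] += 1

    # Marginal histograms of each rater's ratings.
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(num_levels)) for j in range(num_levels)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(num_levels):
        for j in range(num_levels):
            # Quadratic disagreement weight: 0 on the diagonal, 1 at maximum distance.
            weight = (i - j) ** 2 / (num_levels - 1) ** 2
            # Count expected under chance agreement (independent raters).
            expected = hist_a[i] * hist_b[j] / n
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * expected

    if weighted_expected == 0.0:
        # Both raters used one identical category throughout; kappa is formally
        # undefined. Returning 1.0 is a convention chosen for this sketch.
        return 1.0
    return 1.0 - weighted_observed / weighted_expected


if __name__ == "__main__":
    # Hypothetical ratings on a 4-level scale, for illustration only.
    human = [0, 1, 2, 3, 2, 1]
    model = [0, 1, 2, 2, 2, 0]
    print(quadratic_weighted_kappa(human, model, num_levels=4))
```

A value of 1 means perfect agreement, 0 means agreement no better than chance, and negative values mean systematic disagreement.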

Automated Visual Attention Detection using Mobile Eye Tracking in Behavioral Classroom Studies

May 12, 2025

Abstract: Teachers' visual attention and its distribution across the students in classrooms can have important implications for student engagement, achievement, and professional teacher training. Despite that, inferring where teachers look and which student they focus on is not trivial. Mobile eye tracking can provide vital help to solve this issue; however, the use of mobile eye tracking alone requires a significant amount of manual annotations. To address this limitation, we present an automated processing pipeline concept that requires minimal manually annotated data to recognize which student the teachers focus on. To this end, we utilize state-of-the-art face detection models and face recognition feature embeddings to train face recognition models with transfer learning in the classroom context and combine these models with the teachers' gaze from mobile eye trackers. We evaluated our approach with data collected from four different classrooms, and our results show that while it is possible to estimate the visually focused students with reasonable performance in all of our classroom setups, U-shaped and small classrooms led to the best results with accuracies of approximately 0.7 and 0.9, respectively. While we did not evaluate our method for teacher-student interactions and focused on the validity of the technical approach, as our methodology does not require a vast amount of manually annotated data and offers a non-intrusive way of handling teachers' visual attention, it could help improve instructional strategies, enhance classroom management, and provide feedback for professional teacher development.
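The final step this abstract describes, combining recognized faces with the teacher's gaze, can be illustrated with a small Python sketch. It is a simplified assumption and not the authors' pipeline: the function name `focused_student`, the `(x1, y1, x2, y2)` pixel box format, and the tie-breaking rule (the face whose center is closest to the gaze point) are all hypothetical, and face detection and recognition are assumed to have already produced labeled boxes for the frame.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical box format: (x1, y1, x2, y2) in scene-camera pixel coordinates.
Box = Tuple[float, float, float, float]


def focused_student(
    gaze: Tuple[float, float],
    faces: Sequence[Tuple[str, Box]],
) -> Optional[str]:
    """Return the ID of the student whose face box contains the gaze point.

    If several boxes contain the gaze point, the one whose center is closest
    to the gaze wins. Returns None when the gaze hits no face.
    """
    gx, gy = gaze
    best_id: Optional[str] = None
    best_dist = float("inf")
    for student_id, (x1, y1, x2, y2) in faces:
        if x1 <= gx <= x2 and y1 <= gy <= y2:
            cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
            dist = (gx - cx) ** 2 + (gy - cy) ** 2  # squared distance is enough for ranking
            if dist < best_dist:
                best_id, best_dist = student_id, dist
    return best_id


if __name__ == "__main__":
    # Hypothetical recognized faces in one video frame.
    frame_faces = [
        ("student_1", (0.0, 0.0, 10.0, 10.0)),
        ("student_2", (5.0, 5.0, 20.0, 20.0)),
    ]
    print(focused_student((15.0, 15.0), frame_faces))
```

In practice the gaze signal is noisy, so a real system would likely aggregate such per-frame assignments over fixations rather than trust single frames.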

Exploring Context-aware and LLM-driven Locomotion for Immersive Virtual Reality

Apr 24, 2025

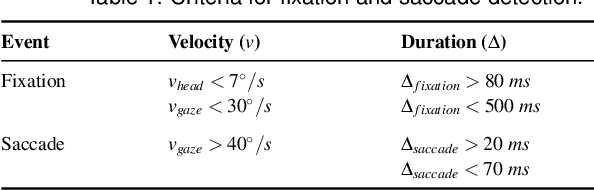

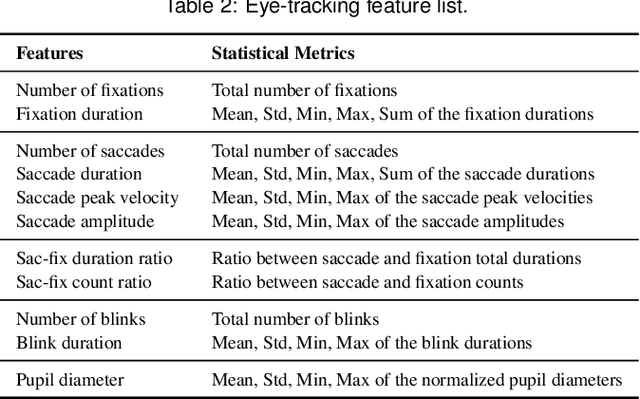

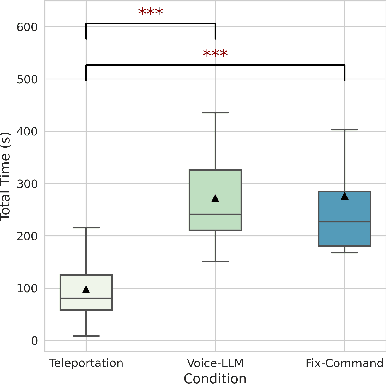

Abstract: Locomotion plays a crucial role in shaping the user experience within virtual reality environments. In particular, hands-free locomotion offers a valuable alternative by supporting accessibility and freeing users from reliance on handheld controllers. However, traditional speech-based methods often depend on rigid command sets, limiting the naturalness and flexibility of interaction. In this study, we propose a novel locomotion technique powered by large language models (LLMs), which allows users to navigate virtual environments using natural language with contextual awareness. We evaluate three locomotion methods: controller-based teleportation, voice-based steering, and our language model-driven approach. Our evaluation combines eye-tracking data analysis, including explainable machine learning through SHAP analysis, with standardized questionnaires for usability, presence, cybersickness, and cognitive load to examine user attention and engagement. Our findings indicate that the LLM-driven locomotion possesses comparable usability, presence, and cybersickness scores to established methods like teleportation, demonstrating its potential as a comfortable, natural language-based, hands-free alternative. In addition, it enhances user attention within the virtual environment, suggesting greater engagement. Complementary to these findings, SHAP analysis revealed that fixation, saccade, and pupil-related features vary across techniques, indicating distinct patterns of visual attention and cognitive processing. Overall, we state that our method can facilitate hands-free locomotion in virtual spaces, especially in supporting accessibility.

Trade-offs in Privacy-Preserving Eye Tracking through Iris Obfuscation: A Benchmarking Study

Apr 15, 2025

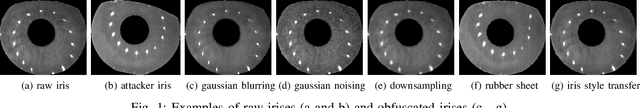

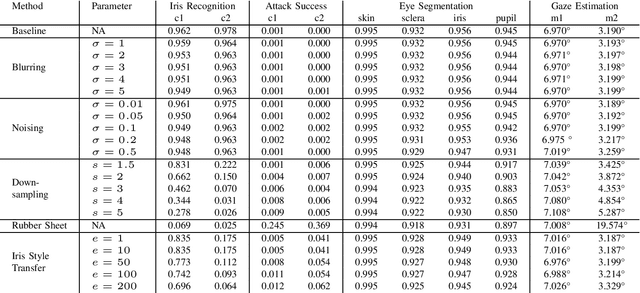

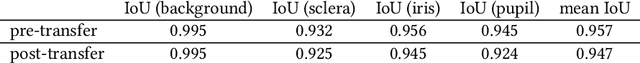

Abstract: Recent developments in hardware, computer graphics, and AI may soon enable AR/VR head-mounted displays (HMDs) to become everyday devices like smartphones and tablets. Eye trackers within HMDs provide a special opportunity for such setups as it is possible to facilitate gaze-based research and interaction. However, estimating users' gaze information often requires raw eye images and videos that contain iris textures, which are considered a gold standard biometric for user authentication, and this raises privacy concerns. Previous research in the eye-tracking community focused on obfuscating iris textures while keeping utility tasks such as gaze estimation accurate. Despite these attempts, there is no comprehensive benchmark that evaluates state-of-the-art approaches. In this paper, we benchmark blurring, noising, downsampling, rubber sheet model, and iris style transfer to obfuscate user identity, and compare their impact on image quality, privacy, utility, and risk of imposter attack on two datasets. We use eye segmentation and gaze estimation as utility tasks, reduction in iris recognition accuracy as a measure of privacy protection, and false acceptance rate to estimate risk of attack. Our experiments show that canonical image processing methods like blurring and noising cause a marginal impact on deep learning-based tasks. While downsampling, rubber sheet model, and iris style transfer are effective in hiding user identifiers, iris style transfer, with higher computation cost, outperforms others in both utility tasks, and is more resilient against spoof attacks. Our analyses indicate that there is no universal optimal approach to balance privacy, utility, and computation burden. Therefore, we recommend practitioners consider the strengths and weaknesses of each approach, and possible combinations of those to reach an optimal privacy-utility trade-off.

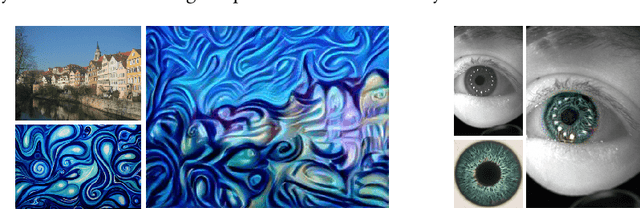

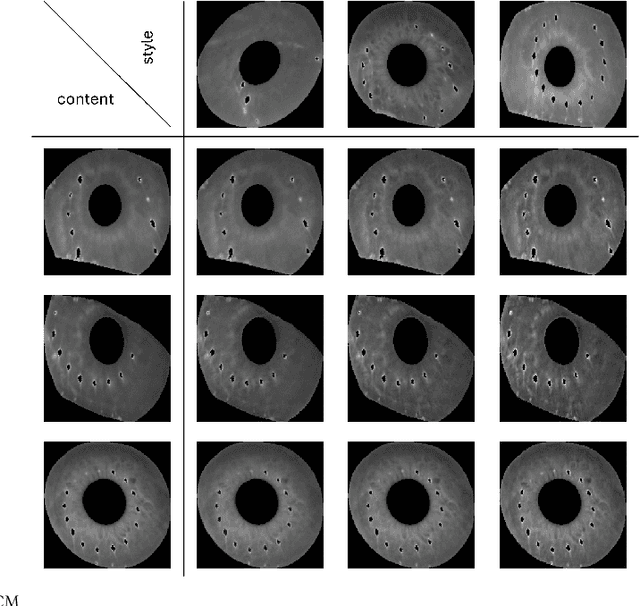

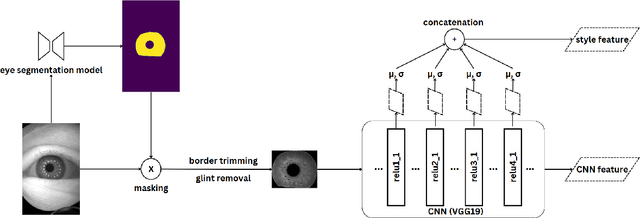

Iris Style Transfer: Enhancing Iris Recognition with Style Features and Privacy Preservation through Neural Style Transfer

Mar 06, 2025

Abstract: Iris texture is widely regarded as a gold standard biometric modality for authentication and identification. The demand for robust iris recognition methods, coupled with growing security and privacy concerns regarding iris attacks, has escalated recently. Inspired by neural style transfer, an advanced technique that leverages neural networks to separate content and style features, we hypothesize that iris texture's style features provide a reliable foundation for recognition and are more resilient to variations like rotation and perspective shifts than traditional approaches. Our experimental results support this hypothesis, showing a significantly higher classification accuracy compared to conventional features. Further, we propose using neural style transfer to mask identifiable iris style features, ensuring the protection of sensitive biometric information while maintaining the utility of eye images for tasks like eye segmentation and gaze estimation. This work opens new avenues for iris-oriented, secure, and privacy-aware biometric systems.

CUIfy the XR: An Open-Source Package to Embed LLM-powered Conversational Agents in XR

Nov 07, 2024

Abstract: Recent developments in computer graphics, machine learning, and sensor technologies enable numerous opportunities for extended reality (XR) setups for everyday life, from skills training to entertainment. With large corporations offering consumer-grade head-mounted displays (HMDs) in an affordable way, it is likely that XR will become pervasive, and HMDs will develop as personal devices like smartphones and tablets. However, having intelligent spaces and naturalistic interactions in XR is as important as technological advances so that users grow their engagement in virtual and augmented spaces. To this end, large language model (LLM)-powered non-player characters (NPCs) with speech-to-text (STT) and text-to-speech (TTS) models bring significant advantages over conventional or pre-scripted NPCs for facilitating more natural conversational user interfaces (CUIs) in XR. In this paper, we provide the community with an open-source, customizable, extensible, and privacy-aware Unity package, CUIfy, that facilitates speech-based NPC-user interaction with various LLMs, STT, and TTS models. Our package also supports multiple LLM-powered NPCs per environment and minimizes the latency between different computational models through streaming to achieve usable interactions between users and NPCs. We publish our source code in the following repository: https://gitlab.lrz.de/hctl/cuify

From Passive Watching to Active Learning: Empowering Proactive Participation in Digital Classrooms with AI Video Assistant

Sep 24, 2024

Abstract: In online education, innovative tools are crucial for enhancing learning outcomes. SAM (Study with AI Mentor) is an advanced platform that integrates educational videos with a context-aware chat interface powered by large language models. SAM encourages students to ask questions and explore unclear concepts in real-time, offering personalized, context-specific assistance, including explanations of formulas, slides, and images. In a crowdsourced user study involving 140 participants, SAM was evaluated through pre- and post-knowledge tests, comparing a group using SAM with a control group. The results demonstrated that SAM users achieved greater knowledge gains, with a 96.8% answer accuracy. Participants also provided positive feedback on SAM's usability and effectiveness. SAM's proactive approach to learning not only enhances learning outcomes but also empowers students to take full ownership of their educational experience, representing a promising future direction for online learning tools.

Evaluating Usability and Engagement of Large Language Models in Virtual Reality for Traditional Scottish Curling

Aug 17, 2024

Abstract: This paper explores the innovative application of Large Language Models (LLMs) in Virtual Reality (VR) environments to promote heritage education, focusing on traditional Scottish curling presented in the game "Scottish Bonspiel VR". Our study compares the effectiveness of LLM-based chatbots with pre-defined scripted chatbots, evaluating key criteria such as usability, user engagement, and learning outcomes. The results show that LLM-based chatbots significantly improve interactivity and engagement, creating a more dynamic and immersive learning environment. This integration helps document and preserve cultural heritage and enhances dissemination processes, which are crucial for safeguarding intangible cultural heritage (ICH) amid environmental changes. Furthermore, the study highlights the potential of novel technologies in education to provide immersive experiences that foster a deeper appreciation of cultural heritage. These findings support the wider application of LLMs and VR in cultural education to address global challenges and promote sustainable practices to preserve and enhance cultural heritage.
