Dylan Foster

Efficient Contextual Bandits with Knapsacks via Regression

Nov 14, 2022

Abstract: We consider contextual bandits with knapsacks (CBwK), a variant of the contextual bandit which places global constraints on budget consumption. We present a new algorithm that is simple, statistically optimal, and computationally efficient. Our algorithm combines LagrangeBwK (Immorlica et al., FOCS'19), a Lagrangian-based technique for CBwK, and SquareCB (Foster and Rakhlin, ICML'20), a regression-based technique for contextual bandits. Our analysis emphasizes the modularity of both techniques.

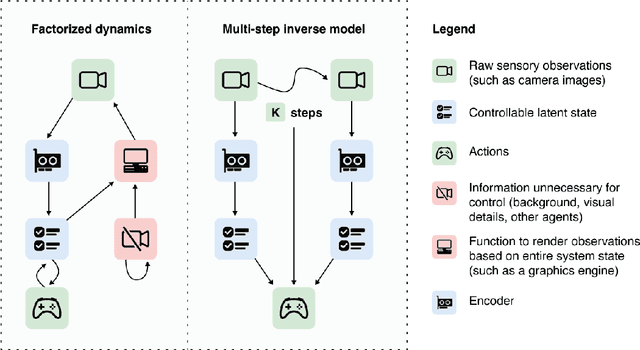

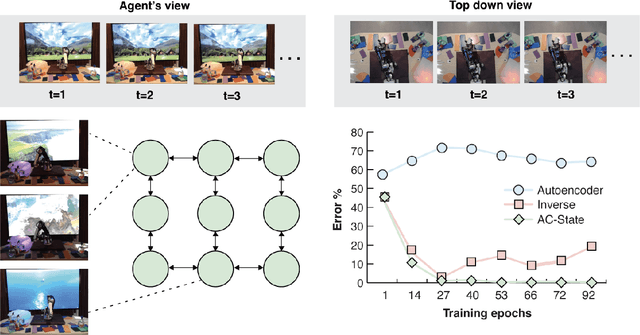

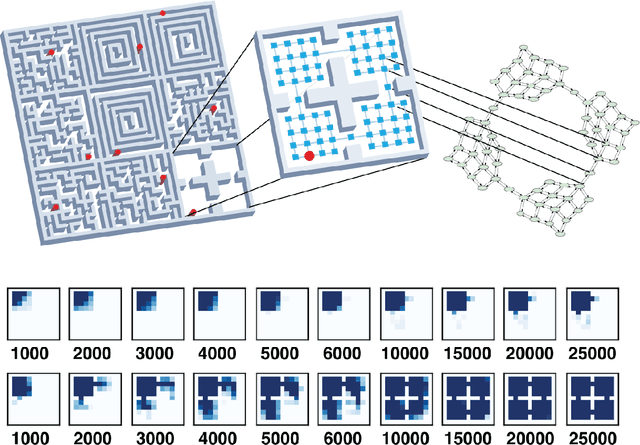

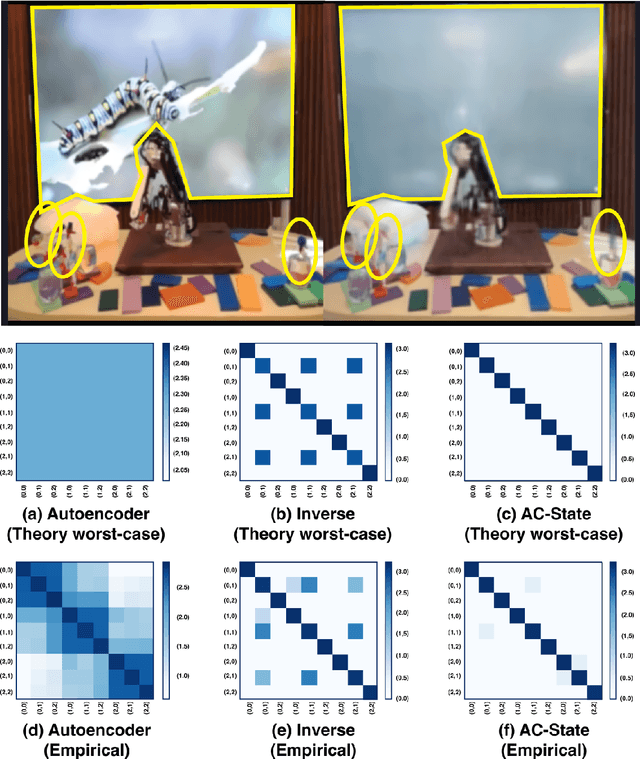

Guaranteed Discovery of Controllable Latent States with Multi-Step Inverse Models

Jul 17, 2022

Abstract: A person walking along a city street who tries to model all aspects of the world would quickly be overwhelmed by a multitude of shops, cars, and people moving in and out of view, following their own complex and inscrutable dynamics. Exploration and navigation in such an environment is an everyday task, requiring no vast exertion of mental resources. Is it possible to turn this fire hose of sensory information into a minimal latent state which is necessary and sufficient for an agent to successfully act in the world? We formulate this question concretely, and propose the Agent-Controllable State Discovery algorithm (AC-State), which has theoretical guarantees and is practically demonstrated to discover the minimal controllable latent state which contains all of the information necessary for controlling the agent, while fully discarding all irrelevant information. This algorithm consists of a multi-step inverse model (predicting actions from distant observations) with an information bottleneck. AC-State enables localization, exploration, and navigation without reward or demonstrations. We demonstrate the discovery of controllable latent state in three domains: localizing a robot arm with distractions (e.g., changing lighting conditions and background), exploring in a maze alongside other agents, and navigating in the Matterport house simulator.
