Dumitru-Clementin Cercel

Parameter Efficient Multimodal Instruction Tuning for Romanian Vision Language Models

Dec 16, 2025

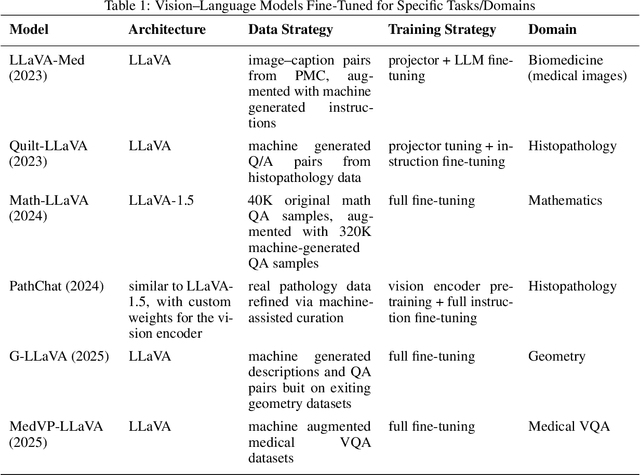

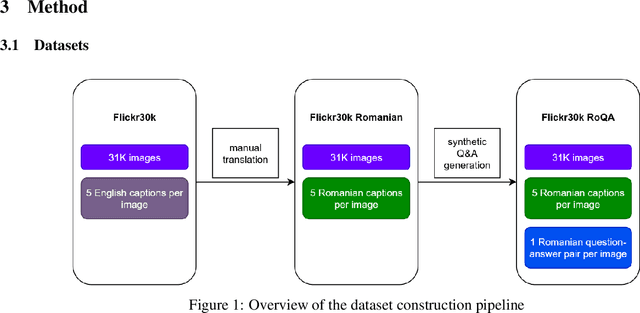

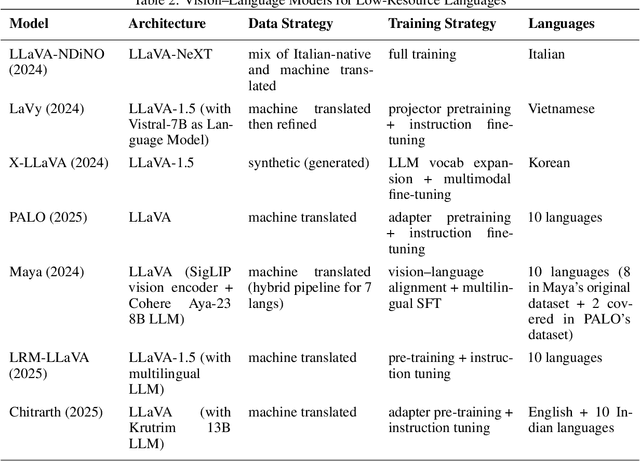

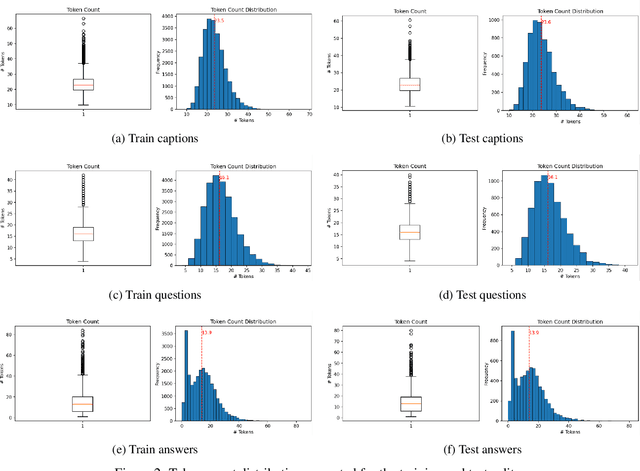

Abstract: Focusing on low-resource languages is an essential step toward democratizing generative AI. In this work, we contribute to reducing the multimodal NLP resource gap for Romanian. We translate the widely known Flickr30k dataset into Romanian and further extend it for visual question answering by leveraging open-source LLMs. We demonstrate the usefulness of our datasets by fine-tuning open-source VLMs on Romanian visual question answering. We select VLMs from three widely used model families: LLaMA 3.2, LLaVA 1.6, and Qwen2. For fine-tuning, we employ the parameter-efficient LoRA method. Our models show improved Romanian capabilities in visual QA, as well as on tasks they were not trained on, such as Romanian image description generation. The seven-billion-parameter Qwen2-VL-RoVQA obtains top scores on both tasks, with improvements of +6.05% and +2.61% in BERTScore F1 over its original version. Finally, the models show substantial reductions in grammatical errors compared to their original forms, indicating improvements not only in language understanding but also in Romanian fluency.
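The abstract names LoRA as the fine-tuning method but gives no configuration. As a rough illustration of the general idea only, the sketch below keeps a weight matrix frozen and adds a scaled low-rank update `B @ A`. The class name `LoRALinear`, the rank, the `alpha` value, and the tiny matrix sizes are all hypothetical choices made for this example, not settings taken from the paper.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


class LoRALinear:
    """Frozen weight W (out_dim x in_dim) plus a trainable low-rank update B @ A.

    Illustrative only: names and hyperparameters are assumptions for this sketch.
    """

    def __init__(self, weight, rank, alpha, seed=0):
        rng = random.Random(seed)
        out_dim, in_dim = len(weight), len(weight[0])
        self.weight = weight          # frozen pretrained weight, never updated
        self.scale = alpha / rank     # standard LoRA scaling factor
        # A starts small and random, B starts at zero, so the update is zero at first.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(in_dim)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(out_dim)]

    def effective_weight(self):
        """Return W + (alpha / rank) * (B @ A)."""
        delta = matmul(self.B, self.A)
        return [
            [w + self.scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(self.weight, delta)
        ]

    def forward(self, x):
        """Apply the adapted layer to a single input vector."""
        return [sum(w * xi for w, xi in zip(row, x)) for row in self.effective_weight()]

    def trainable_parameters(self):
        """Count only the adapter parameters (A and B)."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], rank=1, alpha=2)
initial_output = layer.forward([1.0, 1.0])
```

Because `B` is initialized to zero, the adapted layer reproduces the frozen layer exactly before any training step, and only the entries of `A` and `B` would be updated during fine-tuning.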

RoCoISLR: A Romanian Corpus for Isolated Sign Language Recognition

Nov 16, 2025

Abstract: Automatic sign language recognition plays a crucial role in bridging the communication gap between deaf communities and hearing individuals; however, most available datasets focus on American Sign Language. For Romanian Isolated Sign Language Recognition (RoISLR), no large-scale, standardized dataset exists, which limits research progress. In this work, we introduce a new corpus for RoISLR, named RoCoISLR, comprising over 9,000 video samples that span nearly 6,000 standardized glosses from multiple sources. We establish benchmark results by evaluating seven state-of-the-art video recognition models (I3D, SlowFast, Swin Transformer, TimeSformer, Uniformer, VideoMAE, and PoseConv3D) under consistent experimental setups, and compare their performance with that of the widely used WLASL2000 corpus. According to the results, transformer-based architectures outperform convolutional baselines; Swin Transformer achieved a Top-1 accuracy of 34.1%. Our benchmarks highlight the challenges associated with long-tail class distributions in low-resource sign languages, and RoCoISLR provides the initial foundation for systematic RoISLR research.
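For readers unfamiliar with the Top-1 metric reported above, the following is a minimal sketch of how top-k accuracy is commonly computed from per-class scores. The function name `top_k_accuracy` and the toy scores are illustrative assumptions; they are not taken from the paper's evaluation code.

```python
def top_k_accuracy(scores, labels, k=1):
    """Fraction of samples whose true label is among the k highest-scoring classes.

    scores: list of per-sample score lists (one score per class index).
    labels: list of true class indices, one per sample.
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    if not labels:
        raise ValueError("at least one sample is required")
    hits = 0
    for row, label in zip(scores, labels):
        # Rank class indices from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda i: row[i], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


# Toy example with three samples and three classes (made-up numbers).
toy_scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
toy_labels = [1, 1, 2]
top1 = top_k_accuracy(toy_scores, toy_labels, k=1)
top2 = top_k_accuracy(toy_scores, toy_labels, k=2)
```

With thousands of glosses and few samples per gloss, a Top-1 score counts a prediction as correct only when the single highest-scoring class matches the label, which is one reason long-tail distributions make this metric demanding.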

MuSaRoNews: A Multidomain, Multimodal Satire Dataset from Romanian News Articles

Apr 10, 2025

Abstract: Satire and fake news can both contribute to the spread of false information, even though they have different purposes (one is for amusement, the other is to misinform). However, it is not enough to rely purely on text to detect the incongruity between the surface meaning and the actual meaning of news articles, and other sources of information (e.g., visual) often provide an important clue for satire detection. This work introduces a multimodal corpus for satire detection in Romanian news articles named MuSaRoNews. Specifically, we gathered 117,834 public news articles from real and satirical news sources, composing the first multimodal corpus for satire detection in the Romanian language. We conducted experiments and showed that the use of both modalities improves performance.

SaRoHead: A Dataset for Satire Detection in Romanian Multi-Domain News Headlines

Apr 10, 2025

Abstract: The headline is an important part of a news article, influenced by expressiveness and connection to the exposed subject. Although most news outlets aim to present reality objectively, some publications prefer a humorous approach in which stylistic elements of satire, irony, and sarcasm blend to cover specific topics. Satire detection can be difficult because a headline aims to expose the main idea behind a news article. In this paper, we propose SaRoHead, the first corpus for satire detection in Romanian multi-domain news headlines. Our findings show that the clickbait used in some non-satirical headlines significantly influences the model.

Scaling Federated Learning Solutions with Kubernetes for Synthesizing Histopathology Images

Apr 05, 2025

Abstract: In the field of deep learning, large architectures often obtain the best performance for many tasks, but also require massive datasets. In the histological domain, tissue images are expensive to obtain and constitute sensitive medical information, raising concerns about data scarcity and privacy. Vision Transformers are state-of-the-art computer vision models that have proven helpful in many tasks, including image classification. In this work, we combine vision Transformers with generative adversarial networks to generate histopathological images related to colorectal cancer and test their quality by augmenting a training dataset, leading to improved classification accuracy. Then, we replicate this performance using the federated learning technique and a realistic Kubernetes setup with multiple nodes, simulating a scenario where the training dataset is split among several hospitals unable to share their information directly due to privacy concerns.
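The abstract does not say which aggregation rule the federated setup uses. Purely as an illustration of the setting it describes (several sites train locally and share only model parameters), here is a sketch of weighted parameter averaging in the style of FedAvg. The choice of FedAvg, the function name `federated_average`, and the toy numbers are assumptions made for this example.

```python
def federated_average(client_weights, client_sizes):
    """Average client parameter vectors, weighted by local dataset size.

    client_weights: list of flat parameter lists, one per client (e.g. per hospital).
    client_sizes:   number of local training samples held by each client.
    Assumed FedAvg-style rule; the paper's actual aggregation is not stated.
    """
    if len(client_weights) != len(client_sizes):
        raise ValueError("one dataset size is required per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total number of samples must be positive")
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Two hypothetical clients: the second holds three times as much data.
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
```

Only the parameter vectors cross the network in this scheme; the raw images stay at each site, which is the privacy property the abstract's hospital scenario relies on.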

UniBERTs: Adversarial Training for Language-Universal Representations

Mar 16, 2025

Abstract: This paper presents UniBERT, a compact multilingual language model that leverages an innovative training framework integrating three components: masked language modeling, adversarial training, and knowledge distillation. Pre-trained on a meticulously curated Wikipedia corpus spanning 107 languages, UniBERT is designed to reduce the computational demands of large-scale models while maintaining competitive performance across various natural language processing tasks. Comprehensive evaluations on four tasks (named entity recognition, natural language inference, question answering, and semantic textual similarity) demonstrate that our multilingual training strategy enhanced by an adversarial objective significantly improves cross-lingual generalization. Specifically, UniBERT models show an average relative improvement of 7.72% over traditional baselines, which achieved an average relative improvement of only 1.17%, with statistical analysis confirming the significance of these gains (p-value = 0.0181). This work highlights the benefits of combining adversarial training and knowledge distillation to build scalable and robust language models, thereby advancing the field of multilingual and cross-lingual natural language processing.

RoLargeSum: A Large Dialect-Aware Romanian News Dataset for Summary, Headline, and Keyword Generation

Dec 15, 2024

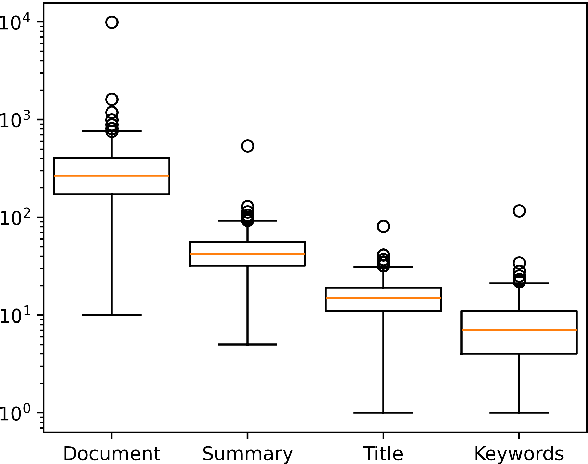

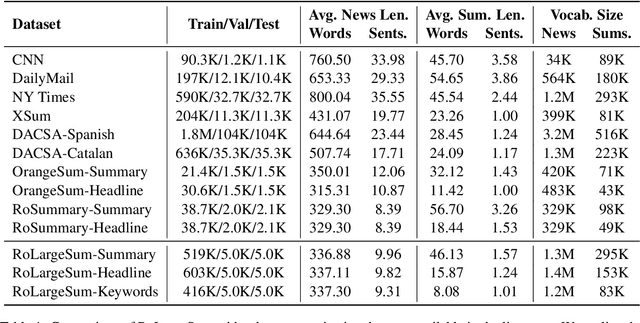

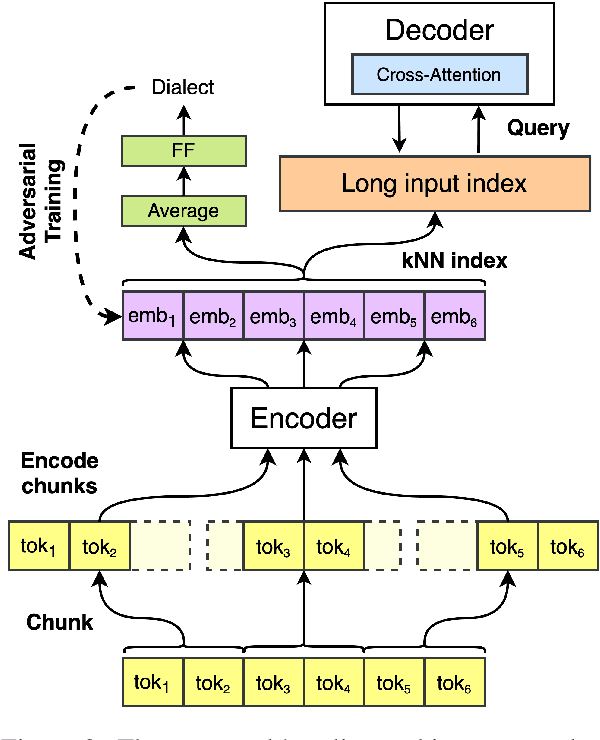

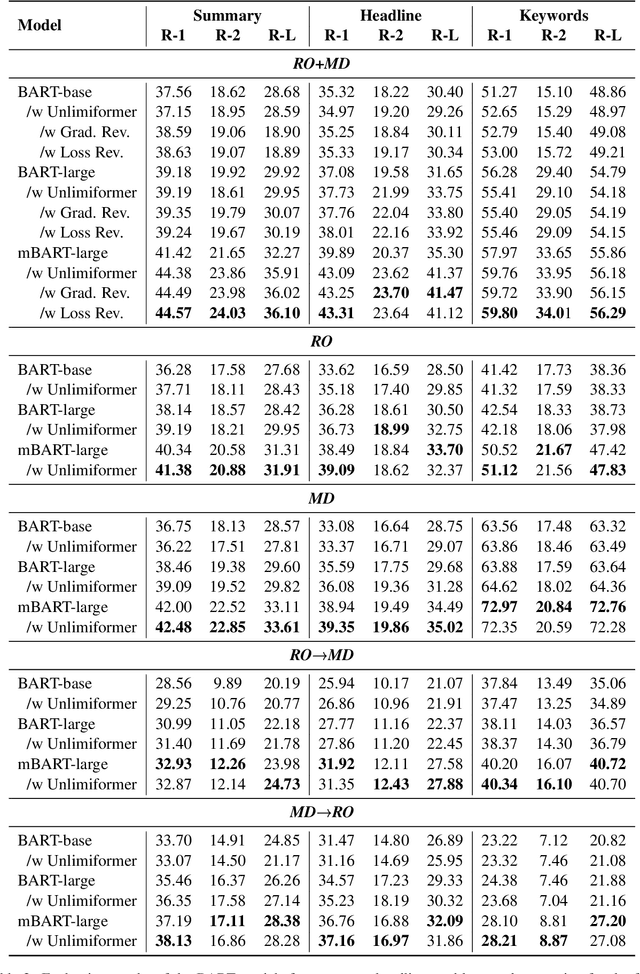

Abstract: Supervised automatic summarization methods require sufficiently large corpora of documents paired with their summaries. As with many tasks in natural language processing, most of the available summarization datasets are in English, posing challenges for developing summarization models in other languages. Thus, in this work, we introduce RoLargeSum, a novel large-scale summarization dataset for the Romanian language, crawled from various publicly available news websites in Romania and the Republic of Moldova and thoroughly cleaned to ensure a high-quality standard. RoLargeSum contains more than 615K news articles, together with their summaries, as well as their headlines, keywords, dialect, and other metadata that we found on the targeted websites. We further evaluated the performance of several BART variants and open-source large language models on RoLargeSum for benchmarking purposes. We manually evaluated the results of the best-performing system to gain insight into the potential pitfalls of this dataset and directions for future development.

GRAF: Graph Retrieval Augmented by Facts for Legal Question Answering

Dec 05, 2024

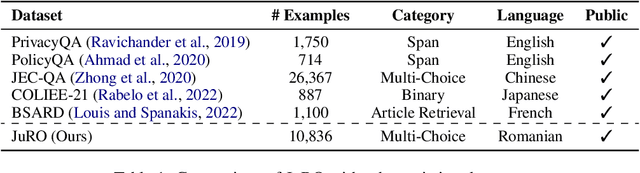

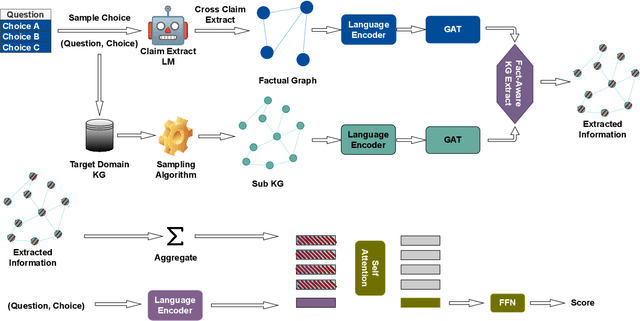

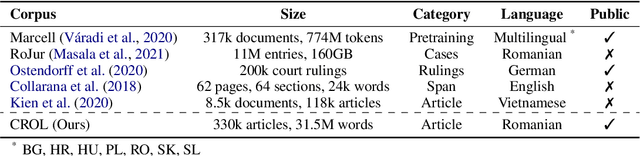

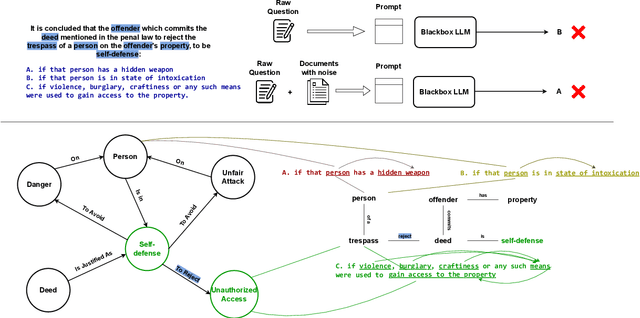

Abstract: Pre-trained Language Models (PLMs) have shown remarkable performance in recent years, setting a new paradigm for NLP research and industry. The legal domain has received some attention from the NLP community, partly due to its textual nature. Some tasks in this domain take the form of question answering (QA). This work explores legal-domain Multiple-Choice QA (MCQA) for a low-resource language. The contribution of this work is multi-fold. We first introduce JuRO, the first openly available Romanian legal MCQA dataset, comprising three different examinations and a total of 10,836 questions. Along with this dataset, we introduce CROL, an organized corpus of laws with a total of 93 distinct documents and their modifications from 763 time spans, which we leveraged in this work for Information Retrieval (IR) techniques. Moreover, we are the first to propose Law-RoG, a Knowledge Graph (KG) for the Romanian language, derived from the aforementioned corpus. Lastly, we propose a novel approach for MCQA, Graph Retrieval Augmented by Facts (GRAF), which achieves competitive results with generally accepted SOTA methods and even exceeds them in most settings.

Investigating Large Language Models for Complex Word Identification in Multilingual and Multidomain Setups

Nov 03, 2024

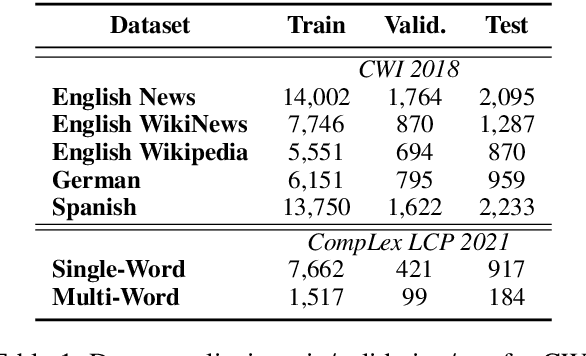

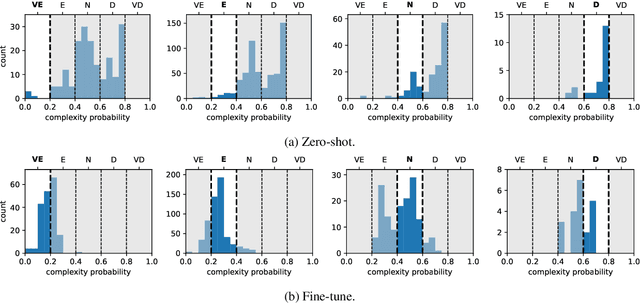

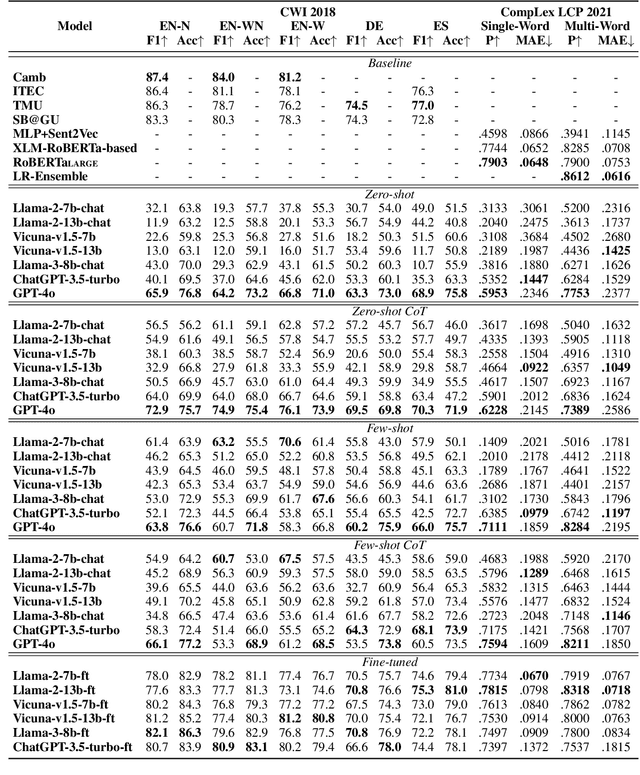

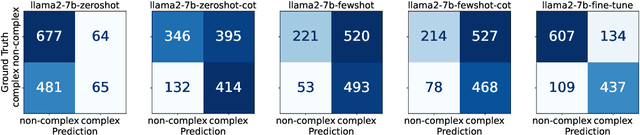

Abstract: Complex Word Identification (CWI) is an essential step in the lexical simplification task and has recently become a task on its own. Some variations of this binary classification task have emerged, such as lexical complexity prediction (LCP) and complexity evaluation of multi-word expressions (MWE). Large language models (LLMs) recently became popular in the Natural Language Processing community because of their versatility and capability to solve unseen tasks in zero/few-shot settings. Our work investigates LLM usage, specifically open-source models such as Llama 2, Llama 3, and Vicuna v1.5, and closed-source models such as ChatGPT-3.5-turbo and GPT-4o, in the CWI, LCP, and MWE settings. We evaluate zero-shot, few-shot, and fine-tuning settings and show that LLMs struggle in certain conditions or achieve results comparable to existing methods. In addition, we provide some views on meta-learning combined with prompt learning. In the end, we conclude that, in their current state, LLMs cannot outperform existing methods, which are usually much smaller, or do so only barely.

A Cross-Lingual Meta-Learning Method Based on Domain Adaptation for Speech Emotion Recognition

Oct 06, 2024

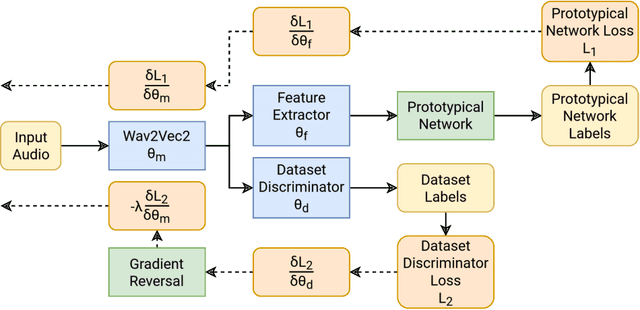

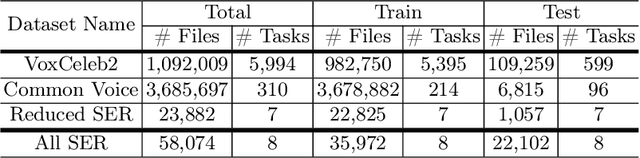

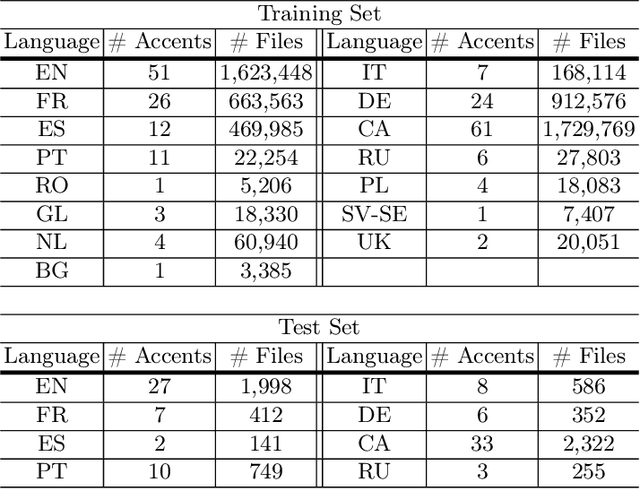

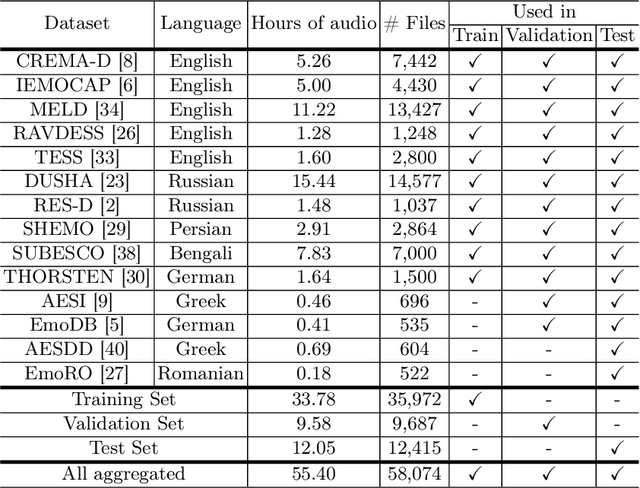

Abstract: Best-performing speech models are trained on large amounts of data in the language they are meant to work for. However, most languages have sparse data, making training models challenging. This shortage of data is even more prevalent in speech emotion recognition. Our work explores model performance under limited data, specifically for speech emotion recognition. Meta-learning specializes in improving few-shot learning. As a result, we employ meta-learning techniques on speech emotion recognition, accent recognition, and person identification tasks. To this end, we propose a series of improvements over the multistage meta-learning method. Unlike other works focusing on smaller models due to the high computational cost of meta-learning algorithms, we take a more practical approach. We incorporate a large pre-trained backbone and a prototypical network, making our methods more feasible and applicable. Our most notable contribution is an improved fine-tuning technique during meta-testing that significantly boosts the performance on out-of-distribution datasets. This result, together with incremental improvements from several other works, helped us achieve accuracy scores of 83.78% and 56.30% for Greek and Romanian speech emotion recognition datasets not included in the training or validation splits in the context of 4-way 5-shot learning.
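To make the prototypical-network and N-way K-shot terminology concrete, the sketch below shows the classification step in its simplest form: each class prototype is the mean of its support embeddings, and a query is assigned to the nearest prototype. It is a generic illustration under stated assumptions, not the authors' pipeline. The embeddings are made-up two-dimensional vectors standing in for the output of a pre-trained backbone, Euclidean distance is assumed, and the example is 2-way 2-shot for brevity rather than the 4-way 5-shot setting reported above.

```python
import math


def build_prototypes(support):
    """Compute one prototype per class as the mean of its support embeddings.

    support: dict mapping a class label to a list of embedding vectors.
    """
    prototypes = {}
    for label, vectors in support.items():
        dim = len(vectors[0])
        prototypes[label] = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
    return prototypes


def classify(query, prototypes):
    """Return the label whose prototype is closest to the query (Euclidean distance)."""
    return min(prototypes, key=lambda label: math.dist(query, prototypes[label]))


# Hypothetical support set: two emotion classes, two labeled examples each.
support_set = {
    "happy": [[1.0, 0.0], [0.8, 0.2]],
    "sad": [[0.0, 1.0], [0.2, 0.8]],
}
protos = build_prototypes(support_set)
predicted = classify([0.7, 0.3], protos)
```

In a 4-way 5-shot episode the same two functions would be called with four labels and five support vectors per label; no gradient step is needed to add a new class, which is what makes this classifier convenient for unseen languages.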
