Donghyun Na

SwitchLight: Co-design of Physics-driven Architecture and Pre-training Framework for Human Portrait Relighting

Feb 29, 2024

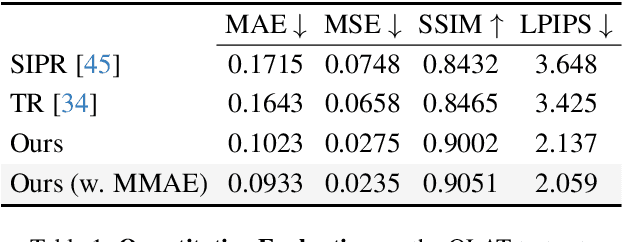

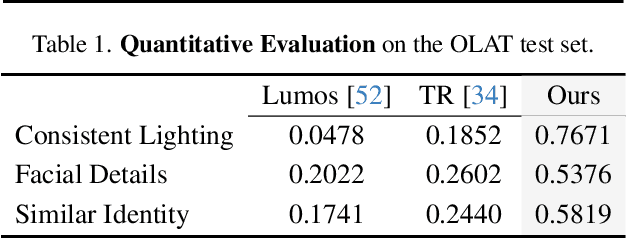

Abstract: We introduce a co-designed approach for human portrait relighting that combines a physics-guided architecture with a pre-training framework. Drawing on the Cook-Torrance reflectance model, we have meticulously configured the architecture design to precisely simulate light-surface interactions. Furthermore, to overcome the limitation of scarce high-quality lightstage data, we have developed a self-supervised pre-training strategy. This novel combination of accurate physical modeling and an expanded training dataset establishes a new benchmark in relighting realism.
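For readers unfamiliar with the Cook-Torrance model named in the abstract, the specular term can be sketched as follows. This is a minimal illustration, not the SwitchLight architecture: the abstract does not say which distribution, Fresnel, or geometry terms the paper uses, so the GGX distribution, Schlick Fresnel approximation, Smith-Schlick geometry term, and the parameter names `alpha` and `f0` are all assumptions chosen because they are common defaults.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    length = math.sqrt(dot(v, v))
    return [x / length for x in v]

def ggx_distribution(n_dot_h, alpha):
    # Assumed normal distribution term D (GGX / Trowbridge-Reitz).
    a2 = alpha * alpha
    denom = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)

def schlick_fresnel(v_dot_h, f0):
    # Assumed Fresnel term F (Schlick approximation).
    return f0 + (1.0 - f0) * (1.0 - v_dot_h) ** 5

def smith_schlick_g1(n_dot_x, k):
    # Assumed one-direction masking/shadowing factor.
    return n_dot_x / (n_dot_x * (1.0 - k) + k)

def cook_torrance_specular(normal, light, view, alpha, f0):
    """Specular BRDF value D * F * G / (4 (n.l) (n.v))."""
    n, l, v = normalize(normal), normalize(light), normalize(view)
    n_dot_l, n_dot_v = dot(n, l), dot(n, v)
    if n_dot_l <= 0.0 or n_dot_v <= 0.0:
        return 0.0  # light or viewer is below the surface
    h = normalize([a + b for a, b in zip(l, v)])  # half vector
    d = ggx_distribution(max(dot(n, h), 0.0), alpha)
    f = schlick_fresnel(max(dot(v, h), 0.0), f0)
    k = alpha / 2.0  # assumed roughness remapping for the geometry term
    g = smith_schlick_g1(n_dot_l, k) * smith_schlick_g1(n_dot_v, k)
    return d * f * g / (4.0 * n_dot_l * n_dot_v)

# Light and viewer both along the surface normal.
head_on = cook_torrance_specular([0, 0, 1], [0, 0, 1], [0, 0, 1], alpha=0.5, f0=0.04)
```

At head-on incidence the geometry term is 1 and the Fresnel term reduces to `f0`, so the value depends only on the distribution term and `f0`.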

Learning to Generalize to Unseen Tasks with Bilevel Optimization

Aug 05, 2019

Abstract: Recent metric-based meta-learning approaches, which learn a metric space that generalizes well over a combinatorial number of different classification tasks sampled from a task distribution, have been shown to be effective for few-shot classification of unseen classes. They are often trained episodically: over a large number of episodes with randomly sampled classes, they iteratively train a common metric space that reduces the distance between each class representative and the instances belonging to that class. However, this training is limited in that, while the main target is generalization to classes unseen during training, generalization is not explicitly considered during the meta-training phase. To tackle this issue, we propose a simple yet effective meta-learning framework for metric-based approaches, which we refer to as learning to generalize (L2G), that uses a bilevel optimization scheme to explicitly constrain the learning on a sampled classification task so that it reduces the classification error on a randomly sampled unseen classification task. This explicit learning aimed toward generalization allows the model to obtain a metric that separates unseen classes well. We validate our L2G framework on the mini-ImageNet and tiered-ImageNet datasets with two base meta-learning few-shot classification models, Prototypical Networks and Relation Networks. The results show that L2G significantly improves the performance of the two methods over episodic training. Further visualization shows that L2G obtains a metric space that clusters and separates unseen classes well.
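The episodic, metric-based objective described in the abstract can be sketched for the Prototypical Networks case as below. This is a minimal illustration that assumes embeddings are already computed and uses squared Euclidean distance; it shows only the per-episode loss of the base model, not the L2G bilevel update, whose details the abstract does not give. The function names are hypothetical.

```python
import math

def prototypes(support):
    """Class representative = mean embedding. support: label -> list of vectors."""
    protos = {}
    for label, vecs in support.items():
        dim = len(vecs[0])
        protos[label] = [sum(v[i] for v in vecs) / len(vecs) for i in range(dim)]
    return protos

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def class_probs(query, protos):
    """Softmax over negative squared distances to each prototype."""
    logits = {label: -sq_dist(query, p) for label, p in protos.items()}
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {label: math.exp(v - top) for label, v in logits.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}

def episode_loss(support, queries):
    """Mean negative log-likelihood of the true labels for one episode."""
    protos = prototypes(support)
    losses = [-math.log(class_probs(vec, protos)[label]) for vec, label in queries]
    return sum(losses) / len(losses)

# Toy 2-way, 2-shot episode with hand-made 2-D embeddings.
support = {"a": [[0.0, 0.0], [0.0, 2.0]], "b": [[4.0, 0.0], [4.0, 2.0]]}
queries = [([1.0, 1.0], "a"), ([3.0, 1.0], "b")]
loss = episode_loss(support, queries)
```

Minimizing this loss over many episodes pulls instances toward their own class representative, which is the behavior the abstract attributes to episodic training.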

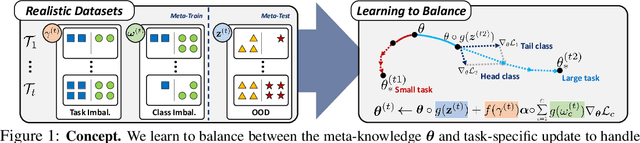

Learning to Balance: Bayesian Meta-Learning for Imbalanced and Out-of-distribution Tasks

May 30, 2019

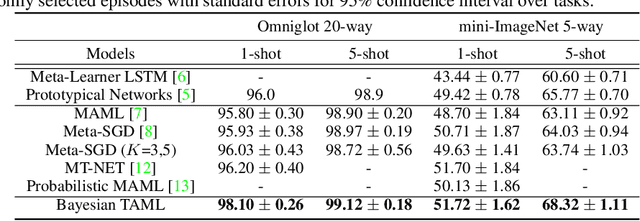

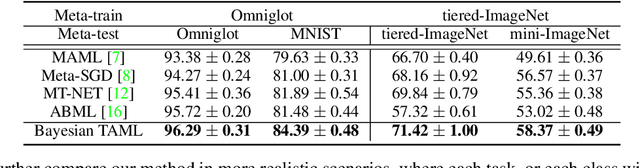

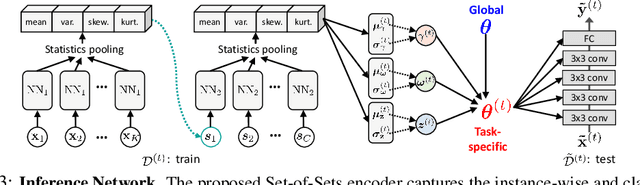

Abstract: While tasks could come with varying numbers of instances in realistic settings, the existing meta-learning approaches for few-shot classification assume even task distributions where the number of instances for each task and class is fixed. Because of this restriction, they learn to utilize the meta-knowledge equally across all tasks, even when the number of instances per task and class varies widely. Moreover, they do not consider distributional differences in unseen tasks at meta-test time, for which the meta-knowledge may have varying degrees of usefulness depending on the task relatedness. To overcome these limitations, we propose a novel meta-learning model that adaptively balances the effect of meta-learning and task-specific learning, as well as class-specific learning within each task. By learning the balancing variables, we can decide whether to obtain a solution close to the initial parameter or far from it. We formulate this objective in a Bayesian inference framework and solve it using variational inference. Our Bayesian Task-Adaptive Meta-Learning (Bayesian-TAML) significantly outperforms existing meta-learning approaches on benchmark datasets, both for few-shot classification and for realistic class- and task-imbalanced datasets, with especially large gains on the latter.
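The idea that a balancing variable decides how far the adapted solution moves from the initial parameter can be illustrated with a toy example. This sketch is an assumption-heavy simplification: it uses a single scalar parameter, a quadratic task loss, and a hypothetical scalar `gamma` that scales the inner-loop step size. The abstract does not specify how Bayesian-TAML parameterizes or infers its balancing variables, and no variational inference is shown here.

```python
def adapt(init, target, gamma, base_lr=0.1, steps=5):
    """Run a few gradient steps on the toy task loss (theta - target)**2.

    gamma is a hypothetical balancing variable: values below 1 keep the
    result near the meta-learned initialization, values above 1 let the
    task-specific data pull the parameter further away.
    """
    theta = init
    for _ in range(steps):
        grad = 2.0 * (theta - target)  # derivative of the quadratic loss
        theta -= base_lr * gamma * grad
    return theta

# Same initialization and task, different balancing values.
near_init = adapt(init=0.0, target=1.0, gamma=0.5)
far_from_init = adapt(init=0.0, target=1.0, gamma=2.0)
```

With a small `gamma` the adapted parameter stays closer to the initialization, which would suit a task with few instances; a large `gamma` moves it closer to the task optimum.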
