Diksha Goel

TempoNet: Learning Realistic Communication and Timing Patterns for Network Traffic Simulation

Jan 22, 2026

Abstract:Realistic network traffic simulation is critical for evaluating intrusion detection systems, stress-testing network protocols, and constructing high-fidelity environments for cybersecurity training. While attack traffic can often be layered into training environments using red-teaming or replay methods, generating authentic benign background traffic remains a core challenge -- particularly in simulating the complex temporal and communication dynamics of real-world networks. This paper introduces TempoNet, a novel generative model that combines multi-task learning with multi-mark temporal point processes to jointly model inter-arrival times and all packet- and flow-header fields. TempoNet captures fine-grained timing patterns and higher-order correlations such as host-pair behavior and seasonal trends, addressing key limitations of GAN-, LLM-, and Bayesian-based methods that fail to reproduce structured temporal variation. TempoNet produces temporally consistent, high-fidelity traces, validated on real-world datasets. Furthermore, we show that intrusion detection models trained on TempoNet-generated background traffic perform comparably to those trained on real data, validating its utility for real-world security applications.

Unveiling the Black Box: A Multi-Layer Framework for Explaining Reinforcement Learning-Based Cyber Agents

May 16, 2025

Abstract:Reinforcement Learning (RL) agents are increasingly used to simulate sophisticated cyberattacks, but their decision-making processes remain opaque, hindering trust, debugging, and defensive preparedness. In high-stakes cybersecurity contexts, explainability is essential for understanding how adversarial strategies are formed and evolve over time. In this paper, we propose a unified, multi-layer explainability framework for RL-based attacker agents that reveals both strategic (MDP-level) and tactical (policy-level) reasoning. At the MDP level, we model cyberattacks as Partially Observable Markov Decision Processes (POMDPs) to expose exploration-exploitation dynamics and phase-aware behavioural shifts. At the policy level, we analyse the temporal evolution of Q-values and use Prioritised Experience Replay (PER) to surface critical learning transitions and evolving action preferences. Evaluated across CyberBattleSim environments of increasing complexity, our framework offers interpretable insights into agent behaviour at scale. Unlike previous explainable RL methods, which are often post-hoc, domain-specific, or limited in depth, our approach is both agent- and environment-agnostic, supporting use cases ranging from red-team simulation to RL policy debugging. By transforming black-box learning into actionable behavioural intelligence, our framework enables both defenders and developers to better anticipate, analyse, and respond to autonomous cyber threats.

CAMP in the Odyssey: Provably Robust Reinforcement Learning with Certified Radius Maximization

Jan 29, 2025

Abstract:Deep reinforcement learning (DRL) has gained widespread adoption in control and decision-making tasks due to its strong performance in dynamic environments. However, DRL agents are vulnerable to noisy observations and adversarial attacks, and concerns about the adversarial robustness of DRL systems have emerged. Recent efforts have focused on addressing these robustness issues by establishing rigorous theoretical guarantees for the returns achieved by DRL agents in adversarial settings. Among these approaches, policy smoothing has proven to be an effective and scalable method for certifying the robustness of DRL agents. Nevertheless, existing certifiably robust DRL relies on policies trained with simple Gaussian augmentations, resulting in a suboptimal trade-off between certified robustness and certified return. To address this issue, we introduce a novel paradigm dubbed Certified-rAdius-Maximizing Policy (CAMP) training. CAMP is designed to enhance DRL policies, achieving better utility without compromising provable robustness. By leveraging the insight that the global certified radius can be derived from local certified radii based on training-time statistics, CAMP formulates a surrogate loss related to the local certified radius and optimizes the policy guided by this surrogate loss. We also introduce policy imitation as a novel technique to stabilize CAMP training. Experimental results demonstrate that CAMP significantly improves the robustness-return trade-off across various tasks. Based on the results, CAMP can achieve up to twice the certified expected return compared to that of baselines. Our code is available at https://github.com/NeuralSec/camp-robust-rl.

The Future of AI: Exploring the Potential of Large Concept Models

Jan 08, 2025

Abstract:The field of Artificial Intelligence (AI) continues to drive transformative innovations, with significant progress in conversational interfaces, autonomous vehicles, and intelligent content creation. Since the launch of ChatGPT in late 2022, the rise of Generative AI has marked a pivotal era, with the term Large Language Models (LLMs) becoming a ubiquitous part of daily life. LLMs have demonstrated exceptional capabilities in tasks such as text summarization, code generation, and creative writing. However, these models are inherently limited by their token-level processing, which restricts their ability to perform abstract reasoning, conceptual understanding, and efficient generation of long-form content. To address these limitations, Meta has introduced Large Concept Models (LCMs), representing a significant shift from traditional token-based frameworks. LCMs use concepts as foundational units of understanding, enabling more sophisticated semantic reasoning and context-aware decision-making. Given the limited academic research on this emerging technology, our study aims to bridge the knowledge gap by collecting, analyzing, and synthesizing existing grey literature to provide a comprehensive understanding of LCMs. Specifically, we (i) identify and describe the features that distinguish LCMs from LLMs, (ii) explore potential applications of LCMs across multiple domains, and (iii) propose future research directions and practical strategies to advance LCM development and adoption.

Machine Learning Driven Smishing Detection Framework for Mobile Security

Dec 09, 2024

Abstract:The increasing reliance on smartphones for communication, financial transactions, and personal data management has made them prime targets for cyberattacks, particularly smishing, a sophisticated variant of phishing conducted via SMS. Despite the growing threat, traditional detection methods often struggle with the informal and evolving nature of SMS language, which includes abbreviations, slang, and short forms. This paper presents an enhanced content-based smishing detection framework that leverages advanced text normalization techniques to improve detection accuracy. By converting nonstandard text into its standardized form, the proposed model enhances the efficacy of machine learning classifiers, particularly the Naive Bayesian classifier, in distinguishing smishing messages from legitimate ones. Our experimental results, validated on a publicly available dataset, demonstrate a detection accuracy of 96.2%, with a low False Positive Rate of 3.87% and False Negative Rate of 2.85%. This approach significantly outperforms existing methodologies, providing a robust solution to the increasingly sophisticated threat of smishing in the mobile environment.
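
As a rough illustration of the idea in this abstract (normalizing informal SMS text before classifying it with Naive Bayes), the sketch below uses only the Python standard library. It is not the paper's implementation: the `SLANG` lexicon, the four training messages, and the function names `normalize`, `train`, and `predict` are all hypothetical, and the classifier is a plain multinomial Naive Bayes with add-one smoothing.

```python
import math
import re
from collections import Counter

# Hypothetical abbreviation lexicon; a real system would need a far larger one.
SLANG = {"u": "you", "ur": "your", "plz": "please", "acct": "account"}


def normalize(message):
    """Lowercase, tokenize, and expand known abbreviations."""
    tokens = re.findall(r"[a-z0-9]+", message.lower())
    return [SLANG.get(token, token) for token in tokens]


def train(samples):
    """Count normalized tokens per label from (message, label) pairs."""
    word_counts = {}
    doc_counts = Counter()
    vocab = set()
    for message, label in samples:
        tokens = normalize(message)
        doc_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(tokens)
        vocab.update(tokens)
    return word_counts, doc_counts, vocab


def predict(model, message):
    """Return the label with the highest log-posterior (add-one smoothing)."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    best_label, best_score = None, -math.inf
    for label in doc_counts:
        total_words = sum(word_counts[label].values())
        score = math.log(doc_counts[label] / total_docs)
        for token in normalize(message):
            count = word_counts[label][token]
            score += math.log((count + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Toy, made-up training data for illustration only.
samples = [
    ("plz verify ur acct now", "smish"),
    ("ur acct is locked click link", "smish"),
    ("see u at lunch", "ham"),
    ("can u call me later", "ham"),
]
model = train(samples)
```

Because both training and prediction pass through `normalize`, a message written in standard English ("please verify your account") shares tokens with abbreviated training messages ("plz verify ur acct"), which is the effect the abstract attributes to text normalization.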

ChatNVD: Advancing Cybersecurity Vulnerability Assessment with Large Language Models

Dec 06, 2024

Abstract:The increasing frequency and sophistication of cybersecurity vulnerabilities in software systems underscore the urgent need for robust and effective methods of vulnerability assessment. However, existing approaches often rely on highly technical and abstract frameworks, which hinders understanding and increases the likelihood of exploitation, resulting in severe cyberattacks. Given the growing adoption of Large Language Models (LLMs) across diverse domains, this paper explores their potential application in cybersecurity, specifically for enhancing the assessment of software vulnerabilities. We propose ChatNVD, an LLM-based cybersecurity vulnerability assessment tool leveraging the National Vulnerability Database (NVD) to provide context-rich insights and streamline vulnerability analysis for cybersecurity professionals, developers, and non-technical users. We develop three variants of ChatNVD, utilizing three prominent LLMs: GPT-4o mini by OpenAI, Llama 3 by Meta, and Gemini 1.5 Pro by Google. To evaluate their efficacy, we conduct a comparative analysis of these models using a comprehensive questionnaire comprising common security vulnerability questions, assessing their accuracy in identifying and analyzing software vulnerabilities. This study provides valuable insights into the potential of LLMs to address critical challenges in understanding and mitigating software vulnerabilities.

Optimizing Cyber Defense in Dynamic Active Directories through Reinforcement Learning

Jun 28, 2024

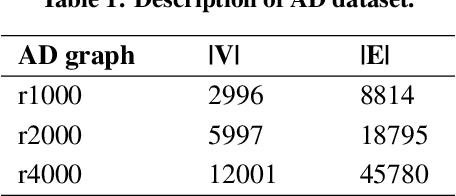

Abstract:This paper addresses a significant gap in Autonomous Cyber Operations (ACO) literature: the absence of effective edge-blocking ACO strategies in dynamic, real-world networks. It specifically targets the cybersecurity vulnerabilities of organizational Active Directory (AD) systems. Unlike the existing literature on edge-blocking defenses, which considers AD systems as static entities, our study recognizes their dynamic nature and develops advanced edge-blocking defenses through a Stackelberg game model between attacker and defender. We devise a Reinforcement Learning (RL)-based attack strategy and an RL-assisted Evolutionary Diversity Optimization-based defense strategy, where the attacker and defender improve each other's strategies via parallel gameplay. To address the computational challenges of training attacker-defender strategies on numerous dynamic AD graphs, we propose an RL Training Facilitator that prunes environments and neural networks to eliminate irrelevant elements, enabling efficient and scalable training for large graphs. We extensively train the attacker strategy, as a sophisticated attacker model is essential for a robust defense. Our empirical results demonstrate that our proposed approach enhances the defender's proficiency in hardening dynamic AD graphs while ensuring scalability for large-scale AD.

Evolving Reinforcement Learning Environment to Minimize Learner's Achievable Reward: An Application on Hardening Active Directory Systems

Apr 08, 2023

Abstract:We study a Stackelberg game between one attacker and one defender in a configurable environment. The defender picks a specific environment configuration. The attacker observes the configuration and attacks via Reinforcement Learning (RL trained against the observed environment). The defender's goal is to find the environment with minimum achievable reward for the attacker. We apply Evolutionary Diversity Optimization (EDO) to generate a diverse population of environments for training. Environments with clearly high rewards are killed off and replaced by new offspring to avoid wasting training time. Diversity not only improves training quality but also fits well with our RL scenario: RL agents tend to improve gradually, so a slightly worse environment earlier on may become better later. We demonstrate the effectiveness of our approach by focusing on a specific application, Active Directory (AD). AD is the default security management system for Windows domain networks. An AD environment describes an attack graph, where nodes represent computers/accounts/etc., and edges represent accesses. The attacker aims to find the best attack path to reach the highest-privilege node. The defender can change the graph by removing a limited number of edges (revoking accesses). Our approach generates better defensive plans than the existing approach and scales better.
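
The edge-blocking setting described in this abstract can be pictured on a toy attack graph. The sketch below is only an illustration under simplifying assumptions, not the paper's method: the attacker is a breadth-first search for the shortest path rather than an RL agent, the defender enumerates every edge subset of a given size rather than running EDO, and the graph, node names, and function names are hypothetical.

```python
import math
from collections import deque
from itertools import combinations


def shortest_attack_path(edges, entry, target):
    """Hop count of the shortest directed path, or math.inf if unreachable."""
    adjacency = {}
    for source, dest in edges:
        adjacency.setdefault(source, []).append(dest)
    dist = {entry: 0}
    queue = deque([entry])
    while queue:
        node = queue.popleft()
        if node == target:
            return dist[node]
        for nxt in adjacency.get(node, []):
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return math.inf


def best_block(edges, entry, target, budget):
    """Try every set of `budget` edges and keep the one that lengthens
    the attacker's shortest path the most (exhaustive, toy-scale only)."""
    best_removed = ()
    best_len = shortest_attack_path(edges, entry, target)
    for removed in combinations(edges, budget):
        remaining = [edge for edge in edges if edge not in removed]
        length = shortest_attack_path(remaining, entry, target)
        if length > best_len:
            best_removed, best_len = removed, length
    return best_removed, best_len


# Hypothetical attack graph: nodes are accounts/machines, edges are accesses.
edges = [
    ("user", "ws1"), ("ws1", "admin"),
    ("user", "ws2"), ("ws2", "srv"), ("srv", "admin"),
]
```

With a budget of one edge, the defender can only force the attacker onto the longer route; with two, the defender can cut both accesses out of `user` and make `admin` unreachable. Exhaustive search grows combinatorially with graph size and budget, which is why scalable search strategies matter in this setting.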

Defending Active Directory by Combining Neural Network based Dynamic Program and Evolutionary Diversity Optimisation

Apr 08, 2022

Abstract:Active Directory (AD) is the default security management system for Windows domain networks. We study a Stackelberg game model between one attacker and one defender on an AD attack graph. The attacker initially has access to a set of entry nodes. The attacker can expand this set by strategically exploring edges. Every edge has a detection rate and a failure rate. The attacker aims to maximize their chance of successfully reaching the destination before getting detected. The defender's task is to block a constant number of edges to decrease the attacker's chance of success. We show that the problem is #P-hard and, therefore, intractable to solve exactly. We convert the attacker's problem to an exponentially sized Dynamic Program that is approximated by a Neural Network (NN). Once trained, the NN provides an efficient fitness function for the defender's Evolutionary Diversity Optimisation (EDO). The diversity emphasis on the defender's solution provides a diverse set of training samples, which improves the training accuracy of our NN for modelling the attacker. We go back and forth between NN training and EDO. Experimental results show that for the R500 graph, our proposed EDO-based defense is less than 1% away from the optimal defense.
