Diego Navarro Navarro

PointDifformer: Robust Point Cloud Registration With Neural Diffusion and Transformer

Apr 22, 2024

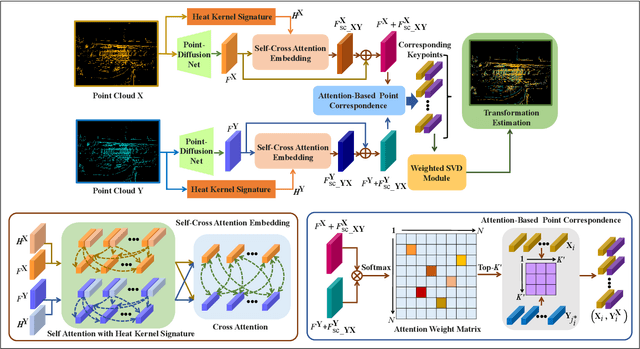

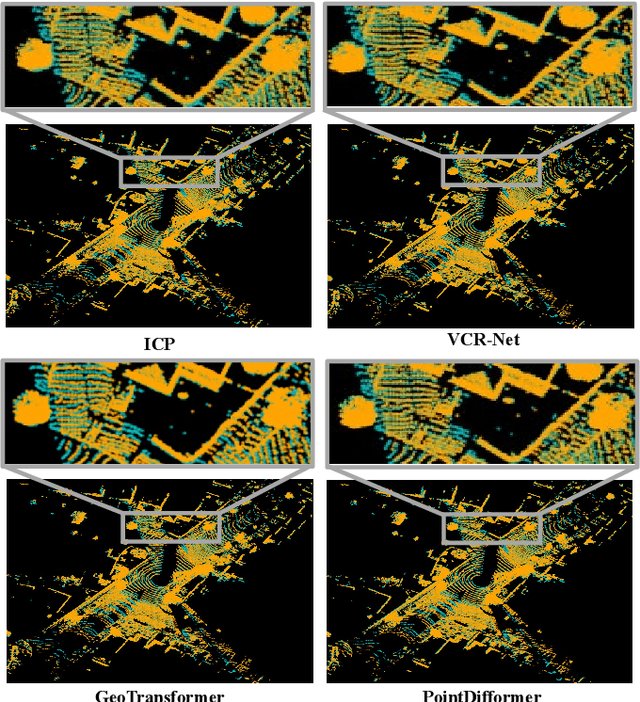

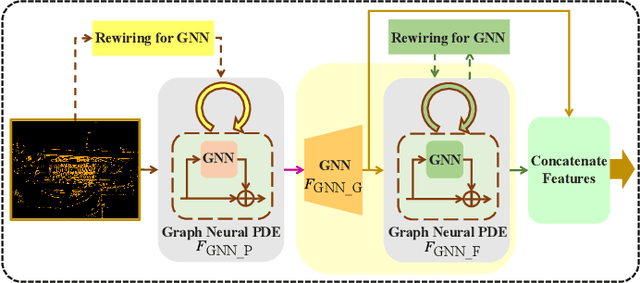

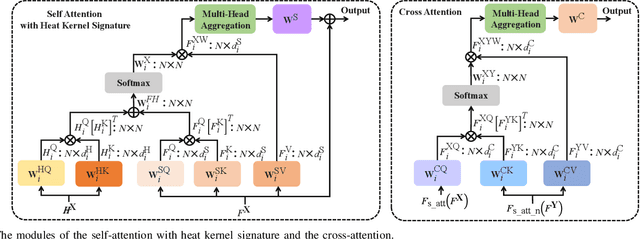

Abstract: Point cloud registration is a fundamental technique in 3-D computer vision with applications in graphics, autonomous driving, and robotics. However, registration under challenging conditions, where noise or perturbations are prevalent, can be difficult. We propose a robust point cloud registration approach that leverages graph neural partial differential equations (PDEs) and heat kernel signatures. Our method first uses graph neural PDE modules to extract high-dimensional features from point clouds by aggregating information from the 3-D point neighborhood, thereby enhancing the robustness of the feature representations. Then, we incorporate heat kernel signatures into an attention mechanism to efficiently obtain corresponding keypoints. Finally, a singular value decomposition (SVD) module with learnable weights is used to predict the transformation between two point clouds. Empirical experiments on a 3-D point cloud dataset demonstrate that our approach not only achieves state-of-the-art performance for point cloud registration but also exhibits better robustness to additive noise or 3-D shape perturbations.
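The final step of the abstract, recovering a transformation from weighted correspondences with an SVD, can be illustrated with the classical weighted Kabsch solution. This is only a sketch under assumptions: the function name `weighted_rigid_transform` is hypothetical, the weights here are fixed inputs rather than learned, and the paper's actual module may differ.

```python
import numpy as np

def weighted_rigid_transform(src, dst, weights):
    """Estimate R, t minimizing sum_i w_i * ||R @ src_i + t - dst_i||^2.

    Classical weighted Kabsch solution; in a learned pipeline the weights
    would come from the network (assumption), here they are plain inputs.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()                      # normalize weights to sum to 1

    src_centroid = w @ src               # weighted centroids, shape (3,)
    dst_centroid = w @ dst
    src_c = src - src_centroid           # centered point sets
    dst_c = dst - dst_centroid

    H = (src_c * w[:, None]).T @ dst_c   # 3x3 weighted cross-covariance
    U, _, Vt = np.linalg.svd(H)

    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_centroid - R @ src_centroid
    return R, t
```

With exact correspondences the estimate reproduces the true rotation and translation for any positive weights; with noisy matches, lower weights reduce the influence of unreliable pairs.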

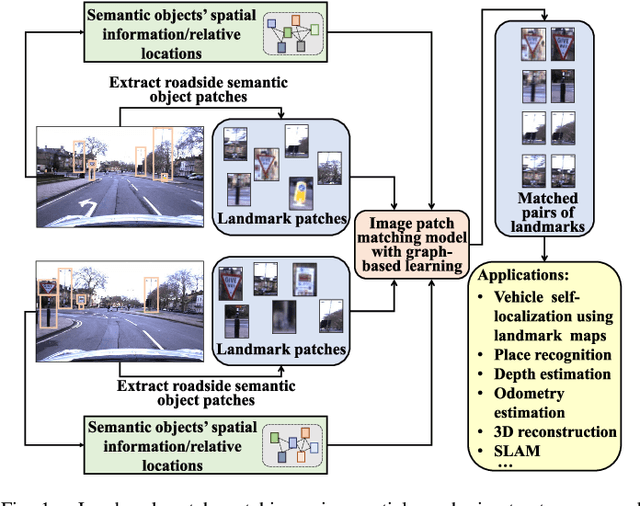

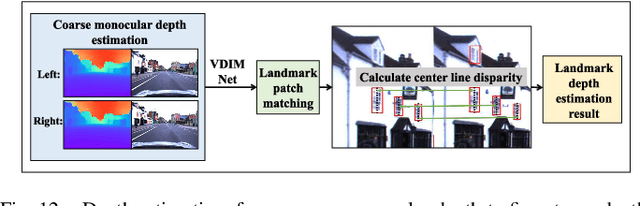

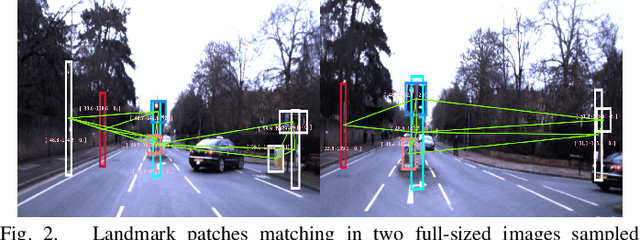

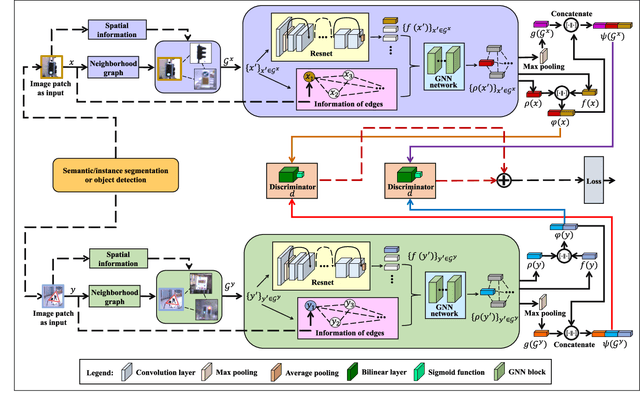

Image Patch-Matching with Graph-Based Learning in Street Scenes

Nov 08, 2023

Abstract: Matching landmark patches from a real-time image captured by an on-vehicle camera with landmark patches in an image database plays an important role in various computer perception tasks for autonomous driving. Current methods focus on local matching for regions of interest and do not take into account spatial neighborhood relationships among the image patches, which typically correspond to objects in the environment. In this paper, we construct a spatial graph with the graph vertices corresponding to patches and edges capturing the spatial neighborhood information. We propose a joint feature and metric learning model with graph-based learning. We provide a theoretical basis for the graph-based loss by showing that the information distance between the distributions conditioned on matched and unmatched pairs is maximized under our framework. We evaluate our model using several street-scene datasets and demonstrate that our approach achieves state-of-the-art matching results.
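One simple way to picture the spatial graph described above is to treat each patch as a vertex and connect it to its nearest neighbors in the image plane. The sketch below assumes a k-nearest-neighbor rule on patch centers; the abstract does not state how edges are chosen, so both the rule and the name `build_spatial_graph` are illustrative assumptions.

```python
import math

def build_spatial_graph(centers, k=2):
    """Return undirected edges linking each patch to its k nearest patches.

    centers: list of (x, y) patch-center coordinates in the image.
    The k-NN rule is an assumption made for illustration only.
    """
    edges = set()
    for i, ci in enumerate(centers):
        # Distances from patch i to every other patch, nearest first.
        dists = sorted(
            (math.dist(ci, cj), j) for j, cj in enumerate(centers) if j != i
        )
        for _, j in dists[:k]:
            edges.add((min(i, j), max(i, j)))  # store each edge once
    return sorted(edges)
```

For example, with four patch centers on a line at x = 0, 1, 2, 10 and `k=1`, the resulting edges chain neighboring patches together, so a distant patch is still attached to its closest neighbor.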

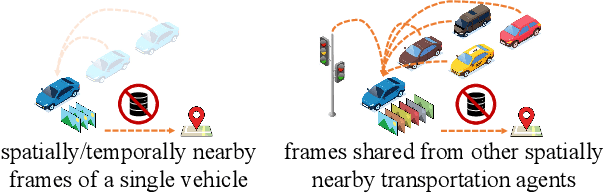

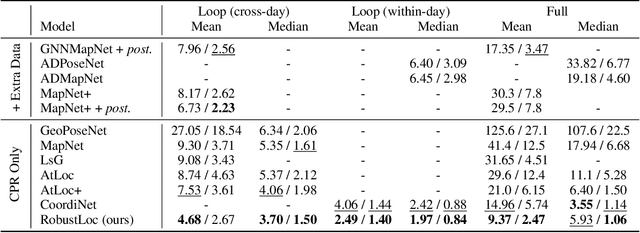

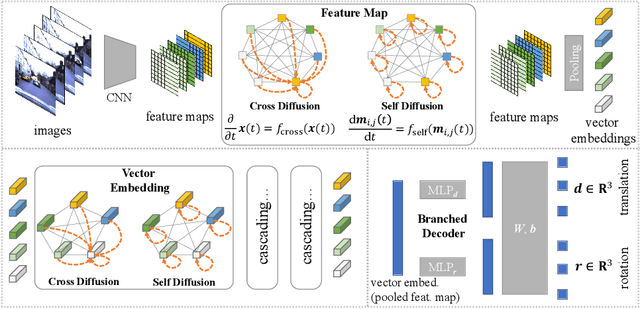

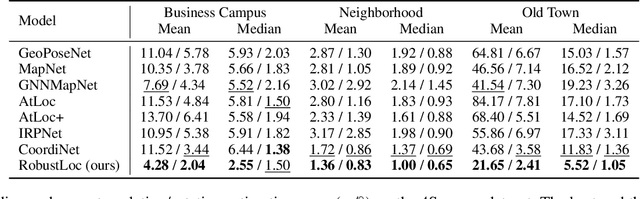

RobustLoc: Robust Camera Pose Regression in Challenging Driving Environments

Nov 23, 2022

Abstract: Camera relocalization has various applications in autonomous driving. Previous camera pose regression models consider only ideal scenarios where there is little environmental perturbation. To deal with challenging driving environments that may have changing seasons, weather, illumination, and the presence of unstable objects, we propose RobustLoc, which derives its robustness against perturbations from neural differential equations. Our model uses a convolutional neural network to extract feature maps from multi-view images, a robust neural differential equation diffusion block module to diffuse information interactively, and a branched pose decoder with multi-layer training to estimate the vehicle poses. Experiments demonstrate that RobustLoc surpasses current state-of-the-art camera pose regression models and achieves robust performance in various environments. Our code is released at: https://github.com/sijieaaa/RobustLoc
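The idea of diffusing information through a differential equation can be illustrated with plain heat diffusion on a graph, integrated with explicit Euler steps. This is a generic sketch, not RobustLoc's diffusion block: the function `diffuse`, the fixed adjacency matrix, and the step size are all assumptions, and the released repository should be consulted for the actual module.

```python
import numpy as np

def diffuse(features, adjacency, step=0.1, n_steps=10):
    """Integrate dX/dt = -L X with explicit Euler, L = D - A.

    features:  (n_nodes, n_channels) array of node features.
    adjacency: (n_nodes, n_nodes) symmetric, non-negative weight matrix.
    A learned model would parameterize the dynamics; here they are fixed.
    """
    A = np.asarray(adjacency, dtype=float)
    X = np.asarray(features, dtype=float)
    L = np.diag(A.sum(axis=1)) - A       # combinatorial graph Laplacian
    for _ in range(n_steps):
        X = X - step * (L @ X)           # each step averages toward neighbors
    return X
```

Because each step pulls a node's features toward those of its neighbors, an isolated perturbation at one node is spread out and damped, while the per-channel sum over nodes is preserved for a symmetric adjacency matrix.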

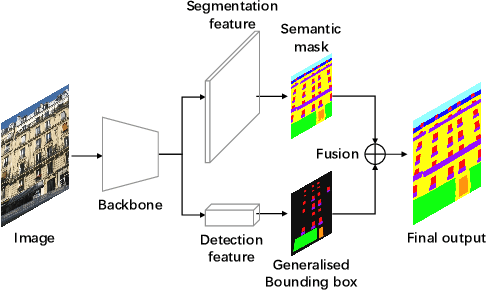

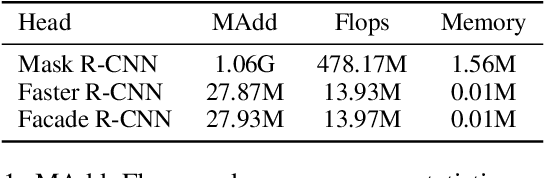

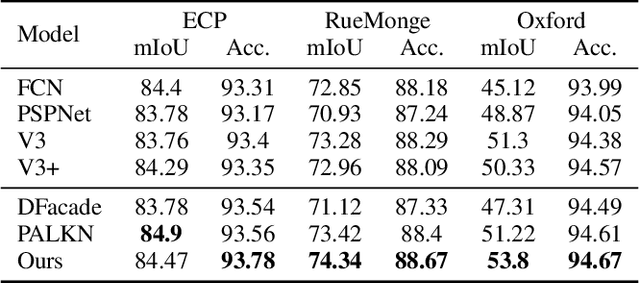

Building Facade Parsing R-CNN

May 12, 2022

Abstract: Building facade parsing, which predicts pixel-level labels for building facades, has applications in computer vision perception for autonomous vehicle (AV) driving. However, instead of a frontal view, an on-board camera of an AV captures a deformed view of the facade of the buildings on both sides of the road the AV is travelling on, due to the camera perspective. We propose Facade R-CNN, which includes a transconv module, generalized bounding box detection, and convex regularization, to perform parsing of deformed facade views. Experiments demonstrate that Facade R-CNN achieves better performance than the current state-of-the-art facade parsing models, which are primarily developed for frontal views. We also publish a new building facade parsing dataset derived from the Oxford RobotCar dataset, which we call the Oxford RobotCar Facade dataset. This dataset contains 500 street-view images from the Oxford RobotCar dataset augmented with accurate annotations of building facade objects. The published dataset is available at https://github.com/sijieaaa/Oxford-RobotCar-Facade
