Dennis Becker

Towards Robust and Efficient Communications for Urban Air Mobility

Sep 15, 2023

Abstract: For the realization of future urban air mobility, reliable information exchange based on robust and efficient communication between all airspace participants will be one of the key factors to ensure safe operations. Especially in dense urban scenarios, the direct and fast information exchange between drones based on Drone-to-Drone communications is a promising technology for enabling reliable collision avoidance systems. However, to mitigate collisions and to increase overall reliability, unmanned aircraft still lack a redundant, higher-level safety net to coordinate and monitor traffic, as is common in today's civil aviation. In addition, direct and fast information exchange based on ad hoc communication is needed to cope with the very short reaction times required to avoid collisions and with the high traffic densities. Therefore, we are developing a Drone-to-Drone (D2D) communication and surveillance system, called DroneCAST, which is specifically tailored to the requirements of a future urban airspace and will be part of a multi-link approach. In this work, we discuss challenges and expected safety-critical applications that will have to rely on communications for urban air mobility (UAM) and present our communication concept and necessary steps towards DroneCAST. As a first step towards an implementation, we equipped two drones with hardware prototypes of the experimental communication system and performed several flights around the model city to evaluate the performance of the hardware and to demonstrate different applications that will rely on robust and efficient communications.

The Emotional Dilemma: Influence of a Human-like Robot on Trust and Cooperation

Jul 06, 2023

Abstract: Increasing anthropomorphic robot behavioral design could affect trust and cooperation positively. However, studies have shown contradicting results and suggest a task-dependent relationship between robots that display emotions and trust. Therefore, this study analyzes the effect of robots that display human-like emotions on trust, cooperation, and participants' emotions. In the between-group study, participants play the coin entrustment game with an emotional and a non-emotional robot. The results show that the robot that displays emotions induces more anxiety than the neutral robot. Accordingly, the participants trust the emotional robot less and are less likely to cooperate. Furthermore, the perceived intelligence of a robot increases trust, while a desire to outcompete the robot can reduce trust and cooperation. Thus, the design of robots expressing emotions should be task dependent to avoid adverse effects that reduce trust and cooperation.

Integrating Uncertainty into Neural Network-based Speech Enhancement

May 15, 2023

Abstract: Supervised masking approaches in the time-frequency domain aim to employ deep neural networks to estimate a multiplicative mask to extract clean speech. This leads to a single estimate for each input without any guarantees or measures of reliability. In this paper, we study the benefits of modeling uncertainty in clean speech estimation. Prediction uncertainty is typically categorized into aleatoric uncertainty and epistemic uncertainty. The former refers to inherent randomness in data, while the latter describes uncertainty in the model parameters. In this work, we propose a framework to jointly model aleatoric and epistemic uncertainties in neural network-based speech enhancement. The proposed approach captures aleatoric uncertainty by estimating the statistical moments of the speech posterior distribution and explicitly incorporates the uncertainty estimate to further improve clean speech estimation. For epistemic uncertainty, we investigate two Bayesian deep learning approaches: Monte Carlo dropout and Deep ensembles to quantify the uncertainty of the neural network parameters. Our analyses show that the proposed framework promotes capturing practical and reliable uncertainty, while combining different sources of uncertainties yields more reliable predictive uncertainty estimates. Furthermore, we demonstrate the benefits of modeling uncertainty on speech enhancement performance by evaluating the framework on different datasets, exhibiting notable improvement over comparable models that fail to account for uncertainty.
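As an illustration of how the two kinds of uncertainty named in the abstract are commonly combined, the following minimal sketch applies the law of total variance to several stochastic forward passes (Monte Carlo dropout) or ensemble members. It is not taken from the paper: the function name `combine_uncertainty`, its arguments, and the assumption that each pass outputs a mean and a variance for a single time-frequency bin are hypothetical choices made for this example.

```python
from statistics import fmean, pvariance


def combine_uncertainty(means, variances):
    """Combine M forward passes (or ensemble members) for one time-frequency bin.

    means[i]     -- clean-speech mean predicted by pass/member i (assumed output)
    variances[i] -- aleatoric variance predicted by pass/member i (assumed output)

    Returns (prediction, aleatoric, epistemic, total).
    """
    if not means or len(means) != len(variances):
        raise ValueError("means and variances must be non-empty and equally long")

    prediction = fmean(means)      # averaged point estimate
    aleatoric = fmean(variances)   # average predicted data noise
    epistemic = pvariance(means)   # disagreement between passes/members
    total = aleatoric + epistemic  # law of total variance
    return prediction, aleatoric, epistemic, total


# Three hypothetical passes that agree on the noise level but not on the mean.
prediction, aleatoric, epistemic, total = combine_uncertainty(
    means=[1.0, 2.0, 3.0],
    variances=[0.5, 0.5, 0.5],
)
```

In this decomposition the epistemic term shrinks as the passes agree with each other, whereas the aleatoric term stays at the level of noise the model attributes to the data itself.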

* Accepted version

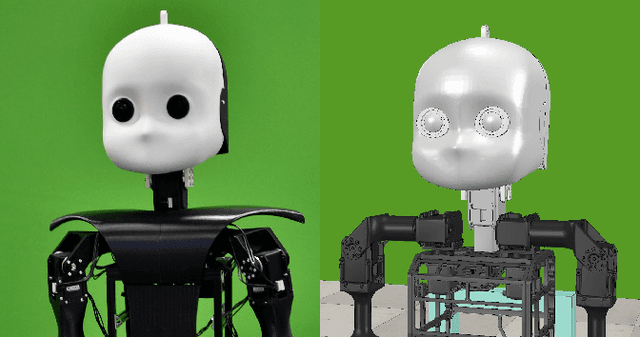

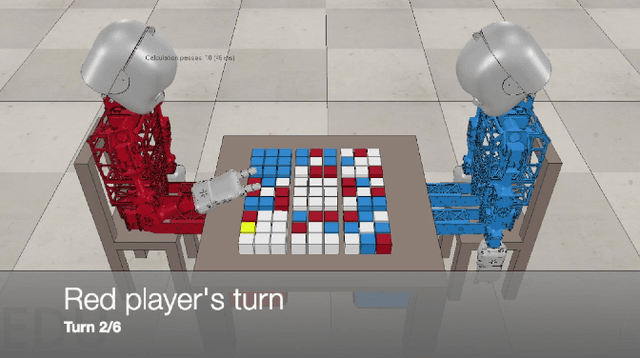

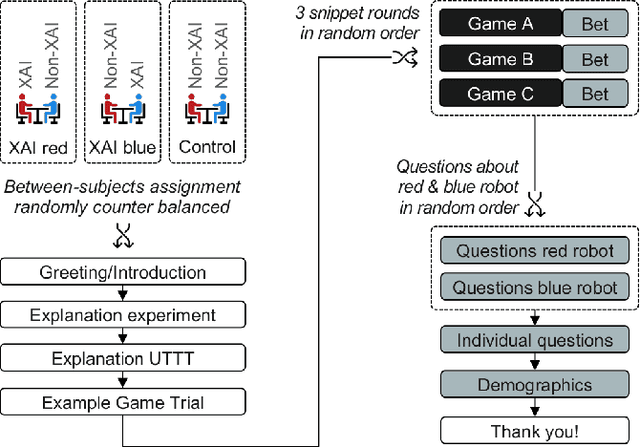

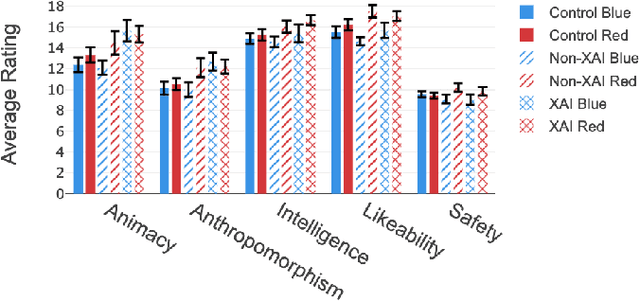

Explain yourself! Effects of Explanations in Human-Robot Interaction

Apr 09, 2022

Abstract: Recent developments in explainable artificial intelligence promise the potential to transform human-robot interaction: Explanations of robot decisions could affect user perceptions, justify their reliability, and increase trust. However, the effects on human perceptions of robots that explain their decisions have not been studied thoroughly. To analyze the effect of explainable robots, we conduct a study in which two simulated robots play a competitive board game. While one robot explains its moves, the other robot only announces them. Providing explanations for its actions was not sufficient to change the perceived competence, intelligence, likeability or safety ratings of the robot. However, the results show that the robot that explains its moves is perceived as more lively and human-like. This study demonstrates the need for and potential of explainable human-robot interaction and the wider assessment of its effects as a novel research direction.
