Dawn M. Nilsen

ReactEMG Stroke: Healthy-to-Stroke Few-shot Adaptation for sEMG-Based Intent Detection

Jan 29, 2026

Abstract:Surface electromyography (sEMG) is a promising control signal for assist-as-needed hand rehabilitation after stroke, but detecting intent from paretic muscles often requires lengthy, subject-specific calibration and remains brittle to variability. We propose a healthy-to-stroke adaptation pipeline that initializes an intent detector from a model pretrained on large-scale able-bodied sEMG, then fine-tunes it for each stroke participant using only a small amount of subject-specific data. Using a newly collected dataset from three individuals with chronic stroke, we compare adaptation strategies (head-only tuning, parameter-efficient LoRA adapters, and full end-to-end fine-tuning) and evaluate on held-out test sets that include realistic distribution shifts such as within-session drift, posture changes, and armband repositioning. Across conditions, healthy-pretrained adaptation consistently improves stroke intent detection relative to both zero-shot transfer and stroke-only training under the same data budget; the best adaptation methods improve average transition accuracy from 0.42 to 0.61 and raw accuracy from 0.69 to 0.78. These results suggest that transferring a reusable healthy-domain EMG representation can reduce calibration burden while improving robustness for real-time post-stroke intent detection.
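To make the trade-off between the three adaptation strategies more concrete, the sketch below counts trainable parameters under each one. The layer shapes, head size, and LoRA rank are hypothetical values chosen for illustration and are not taken from the paper; the sketch also assumes the head is trained alongside the LoRA adapters. The only fact relied on is that a rank-r LoRA adapter on a d_out x d_in weight adds r * (d_out + d_in) trainable parameters.

```python
from typing import List, Tuple

Shape = Tuple[int, int]  # (d_out, d_in) of a dense weight matrix


def trainable_params(strategy: str, backbone: List[Shape], head: Shape, rank: int = 8) -> int:
    """Count trainable weights (biases ignored) for one adaptation strategy."""
    head_params = head[0] * head[1]
    if strategy == "head_only":
        # Backbone frozen; only the classification head is updated.
        return head_params
    if strategy == "lora":
        # Backbone frozen; each weight W gets a low-rank update B @ A,
        # with B of shape (d_out, rank) and A of shape (rank, d_in).
        # Assumption: the head is also trained in this setting.
        adapters = sum(rank * (d_out + d_in) for d_out, d_in in backbone)
        return adapters + head_params
    if strategy == "full":
        # Every backbone weight and the head are updated.
        return sum(d_out * d_in for d_out, d_in in backbone) + head_params
    raise ValueError(f"unknown strategy: {strategy}")


# Hypothetical model: four 256x256 backbone layers and a 3-class head.
BACKBONE = [(256, 256)] * 4
HEAD = (3, 256)

for name in ("head_only", "lora", "full"):
    print(name, trainable_params(name, BACKBONE, HEAD))
```

With these illustrative sizes, LoRA trains roughly 7% of the weights that full fine-tuning does, which is one reason parameter-efficient methods are attractive when each participant contributes only a small amount of data.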

ReactEMG: Zero-Shot, Low-Latency Intent Detection via sEMG

Jun 24, 2025

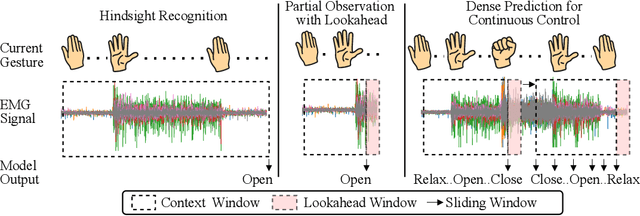

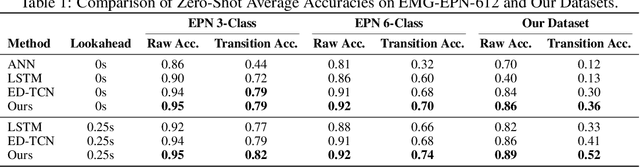

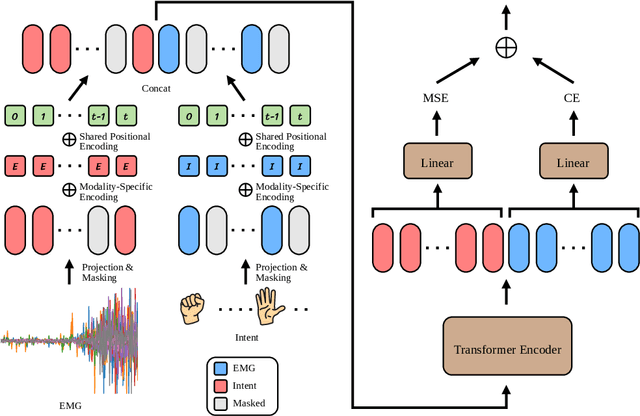

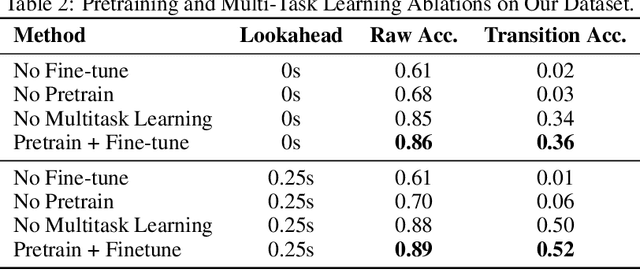

Abstract:Surface electromyography (sEMG) signals show promise for effective human-computer interfaces, particularly in rehabilitation and prosthetics. However, challenges remain in developing systems that respond quickly and reliably to user intent, across different subjects and without requiring time-consuming calibration. In this work, we propose a framework for EMG-based intent detection that addresses these challenges. Unlike traditional gesture recognition models that wait until a gesture is completed before classifying it, our approach uses a segmentation strategy to assign intent labels at every timestep as the gesture unfolds. We introduce a novel masked modeling strategy that aligns muscle activations with their corresponding user intents, enabling rapid onset detection and stable tracking of ongoing gestures. In evaluations against baseline methods, considering both accuracy and stability for device control, our approach surpasses state-of-the-art performance in zero-shot transfer conditions, demonstrating its potential for wearable robotics and next-generation prosthetic systems. Our project page is available at: https://reactemg.github.io
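The difference between labeling a whole gesture and labeling every timestep can be sketched as follows. This is a minimal illustration, not the paper's model or its evaluation metrics: the three intent classes, the probability values, and the switch count used as a crude stability proxy are all assumptions made for the example.

```python
from typing import List, Sequence

CLASSES = ["relax", "open", "close"]  # hypothetical intent set


def label_every_timestep(probs: Sequence[Sequence[float]]) -> List[str]:
    """Pick the most probable intent at each timestep (dense, segmentation-style output)."""
    return [CLASSES[max(range(len(p)), key=p.__getitem__)] for p in probs]


def raw_accuracy(pred: Sequence[str], truth: Sequence[str]) -> float:
    """Fraction of timesteps whose predicted label matches the ground truth."""
    return sum(p == t for p, t in zip(pred, truth)) / len(truth)


def count_switches(labels: Sequence[str]) -> int:
    """Number of label changes between consecutive timesteps (fewer means steadier output)."""
    return sum(a != b for a, b in zip(labels, labels[1:]))


# Made-up per-timestep class probabilities for a relax -> open gesture.
probs = [
    [0.8, 0.1, 0.1],
    [0.6, 0.3, 0.1],
    [0.2, 0.7, 0.1],
    [0.1, 0.8, 0.1],
    [0.5, 0.4, 0.1],  # a brief flicker back to "relax"
    [0.1, 0.8, 0.1],
]
truth = ["relax", "relax", "open", "open", "open", "open"]

pred = label_every_timestep(probs)
print(pred, raw_accuracy(pred, truth), count_switches(pred))
```

Here a single flickering timestep costs little raw accuracy but triples the number of label switches, which is why stability matters separately from accuracy when the output drives a device.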

Reciprocal Learning of Intent Inferral with Augmented Visual Feedback for Stroke

Dec 10, 2024

Abstract:Intent inferral, the process by which a robotic device predicts a user's intent from biosignals, offers an effective and intuitive way to control wearable robots. Classical intent inferral methods treat biosignal inputs as unidirectional ground truths for training machine learning models, where the internal state of the model is not directly observable by the user. In this work, we propose reciprocal learning, a bidirectional paradigm that facilitates human adaptation to an intent inferral classifier. Our paradigm consists of iterative, interwoven stages that alternate between updating machine learning models and guiding human adaptation with the use of augmented visual feedback. We demonstrate this paradigm in the context of controlling a robotic hand orthosis for stroke, where the device predicts open, close, and relax intents from electromyographic (EMG) signals and provides appropriate assistance. We use LED progress-bar displays to communicate to the user the predicted probabilities for open and close intents by the classifier. Our experiments with stroke subjects show reciprocal learning improving performance in a subset of subjects (two out of five) without negatively impacting performance on the others. We hypothesize that, during reciprocal learning, subjects can learn to reproduce more distinguishable muscle activation patterns and generate more separable biosignals.
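As a rough illustration of the feedback display, the sketch below maps a predicted class probability to a number of lit LEDs on a progress bar. The bar length of 10 LEDs, the rounding rule, and the function names are assumptions for the example; the abstract does not describe the actual display hardware or mapping.

```python
def leds_lit(probability: float, n_leds: int = 10) -> int:
    """Map a probability in [0, 1] to a count of lit LEDs, rounding half up."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be in [0, 1]")
    return int(probability * n_leds + 0.5)


def render_bar(probability: float, n_leds: int = 10) -> str:
    """Text stand-in for the LED bar: '#' is a lit LED, '.' is an unlit one."""
    lit = leds_lit(probability, n_leds)
    return "#" * lit + "." * (n_leds - lit)


# Hypothetical classifier output for one timestep.
p_open, p_close = 0.72, 0.18
print("open :", render_bar(p_open))
print("close:", render_bar(p_close))
```

Showing one bar per intent lets the user see how close the classifier is to each decision, which is the kind of visibility into model state that the reciprocal learning paradigm depends on.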

ChatEMG: Synthetic Data Generation to Control a Robotic Hand Orthosis for Stroke

Jun 17, 2024

Abstract:Intent inferral on a hand orthosis for stroke patients is challenging due to the difficulty of data collection from impaired subjects. Additionally, EMG signals exhibit significant variations across different conditions, sessions, and subjects, making it hard for classifiers to generalize. Traditional approaches require a large labeled dataset from the new condition, session, or subject to train intent classifiers; however, this data collection process is burdensome and time-consuming. In this paper, we propose ChatEMG, an autoregressive generative model that can generate synthetic EMG signals conditioned on prompts (i.e., a given sequence of EMG signals). ChatEMG enables us to collect only a small dataset from the new condition, session, or subject and expand it with synthetic samples conditioned on prompts from this new context. ChatEMG leverages a vast repository of previous data via generative training while still remaining context-specific via prompting. Our experiments show that these synthetic samples are classifier-agnostic and can improve intent inferral accuracy for different types of classifiers. We demonstrate that our complete approach can be integrated into a single patient session, including the use of the classifier for functional orthosis-assisted tasks. To the best of our knowledge, this is the first time an intent classifier trained partially on synthetic data has been deployed for functional control of an orthosis by a stroke survivor. Videos and additional information can be found at https://jxu.ai/chatemg.
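Prompt-conditioned autoregressive generation can be sketched with a deliberately tiny stand-in model. The bigram counter over integer tokens below is an assumption made for illustration and is not ChatEMG's architecture or tokenization; it only shows the general loop in which a model fit on prior data extends a short prompt taken from the new context.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def fit_bigram(sequences: List[List[int]]) -> Dict[int, Counter]:
    """Count which token follows which across all previously collected sequences."""
    counts: Dict[int, Counter] = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(model: Dict[int, Counter], prompt: List[int], n_new: int) -> List[int]:
    """Extend the prompt one token at a time, each step conditioned on what came before."""
    out = list(prompt)
    for _ in range(n_new):
        followers = model.get(out[-1])
        if not followers:
            break  # the last token was never seen with a successor
        out.append(followers.most_common(1)[0][0])  # greedy choice
    return out


# Hypothetical quantized signal levels standing in for prior EMG data.
history = [[0, 1, 2, 3, 0, 1, 2, 3]]
model = fit_bigram(history)

# A short prompt from the "new" session seeds the synthetic continuation.
synthetic = generate(model, prompt=[1, 2], n_new=4)
print(synthetic)
```

The prior data shapes what the model can produce, while the prompt anchors each synthetic sample to the new condition, session, or subject.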

Grasp Force Assistance via Throttle-based Wrist Angle Control on a Robotic Hand Orthosis for C6-C7 Spinal Cord Injury

Feb 12, 2024

Abstract:Individuals with hand paralysis resulting from C6-C7 spinal cord injuries frequently rely on tenodesis for grasping. However, tenodesis generates limited grasping force and demands constant exertion to maintain a grasp, leading to fatigue and sometimes pain. We introduce the MyHand-SCI, a wearable robot that provides grasping assistance through motorized exotendons. Our user-driven device enables independent, ipsilateral operation via a novel Throttle-based Wrist Angle control method, which allows users to maintain grasps without continued wrist extension. A pilot case study with a person with C6 spinal cord injury shows an improvement in functional grasping and grasping force, as well as a preserved ability to modulate grasping force while using our device, thus improving their ability to manipulate everyday objects. This research is a step towards developing effective and intuitive wearable assistive devices for individuals with spinal cord injury.

Volitional Control of the Paretic Hand Post-Stroke Increases Finger Stiffness and Resistance to Robot-Assisted Movement

Feb 12, 2024

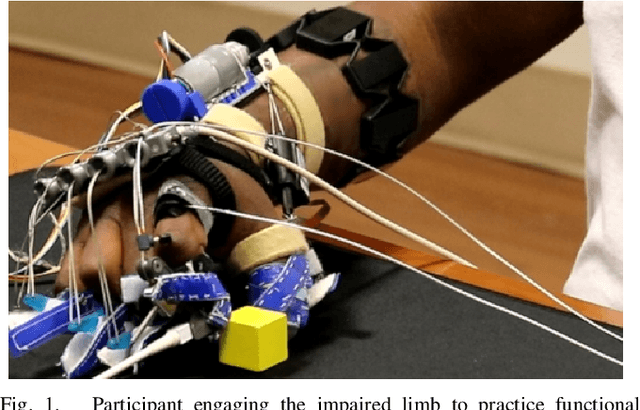

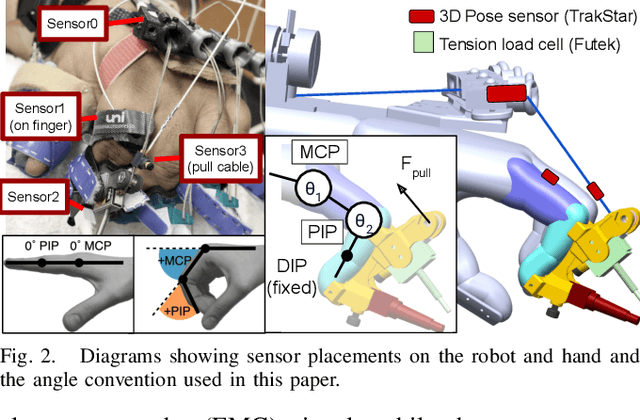

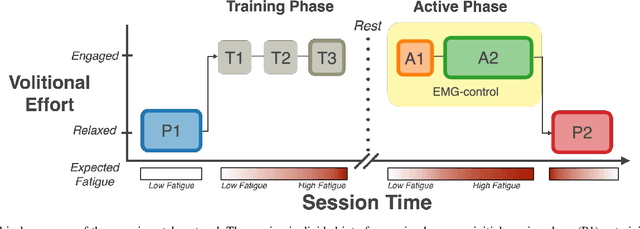

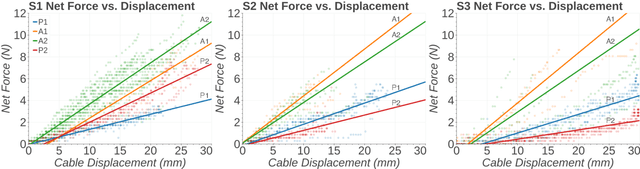

Abstract:Increased effort during use of the paretic arm and hand can provoke involuntary abnormal synergy patterns and amplify stiffness effects of muscle tone for individuals after stroke, which can add difficulty for user-controlled devices to assist hand movement during functional tasks. We study how volitional effort, exerted in an attempt to open or close the hand, affects resistance to robot-assisted movement at the finger level. We perform experiments with three chronic stroke survivors to measure changes in stiffness when the user is actively exerting effort to activate ipsilateral EMG-controlled robot-assisted hand movements, compared with when the fingers are passively stretched, as well as overall effects from sustained active engagement and use. Our results suggest that active engagement of the upper extremity increases muscle tone in the finger to a much greater degree than through passive-stretch or sustained exertion over time. Potential design implications of this work suggest that developers should anticipate higher levels of finger stiffness when relying on user-driven ipsilateral control methods for assistive or rehabilitative devices for stroke.

Towards Tenodesis-Modulated Control of an Assistive Hand Exoskeleton for SCI

Nov 28, 2023

Abstract:Restoration of hand function is one of the highest priorities for SCI populations. In this work, we present a prototype of a robotic assistive orthosis capable of implementing tenodesis user control. The underactuated device provides active grasping assistance while preserving free wrist mobility through the use of Bowden cables. This device enables force modulation during grasping, which was effectively leveraged by a participant with C6 SCI to demonstrate improved grasping abilities using the orthosis, scoring 11 on the Grasp and Release Test using the device compared to 1 without it.

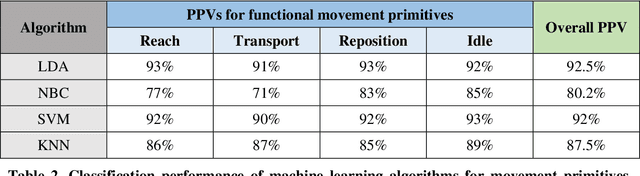

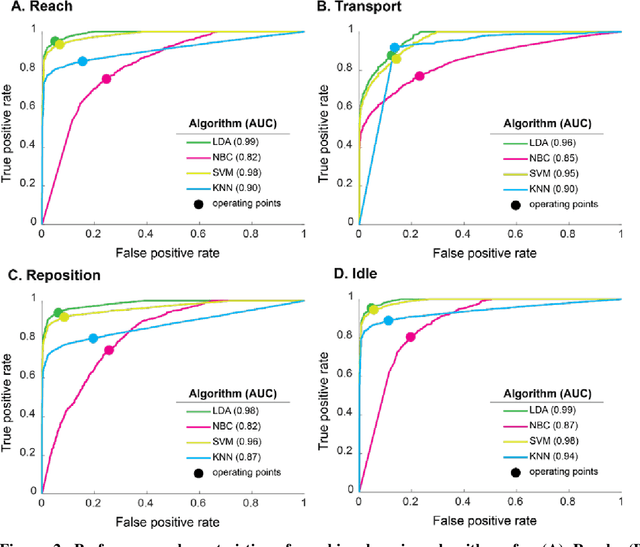

Pragmatic classification of movement primitives for stroke rehabilitation

Mar 11, 2019

Abstract:Rehabilitation training is the primary intervention to improve motor recovery after stroke, but a tool to measure functional training does not currently exist. To bridge this gap, we previously developed an approach to classify functional movement primitives using wearable sensors and a machine learning (ML) algorithm. We found that this approach had encouraging classification performance but had computational and practical limitations, such as training time, sensor cost, and magnetic drift. Here, we sought to refine this approach and determine the algorithm, sensor configurations, and data requirements needed to maximize computational and practical performance. Motion data had been previously collected from 6 stroke patients wearing 11 inertial measurement units (IMUs) as they moved objects on a target array. To identify optimal ML performance, we evaluated 4 algorithms that are commonly used in activity recognition (linear discriminant analysis (LDA), naïve Bayes, support vector machine, and k-nearest neighbors). We compared their classification accuracy, computational complexity, and tuning requirements. To identify optimal sensor configuration, we progressively sampled fewer sensors and compared classification accuracy. To identify optimal data requirements, we compared accuracy using data from IMUs versus accelerometers. We found that LDA had the highest classification accuracy (92%) of the algorithms tested. It also was the most pragmatic, with low training and testing times and modest tuning requirements. We found that 7 sensors on the paretic arm and back resulted in the best accuracy. Using this array, accelerometers had a lower accuracy (84%). We refined strategies to accurately and pragmatically quantify functional movement primitives in stroke patients. We propose that this optimized ML-sensor approach could be a means to quantify training dose after stroke.
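One possible way to "progressively sample fewer sensors" is greedy backward elimination, sketched below. The abstract does not state which search procedure the authors used, so this is an assumed technique, and the sensor names and the additive toy scoring function are hypothetical; in practice `score` would train and evaluate a classifier on the given subset.

```python
from typing import Callable, FrozenSet, List, Tuple

Subset = FrozenSet[str]


def backward_elimination(
    sensors: List[str], score: Callable[[Subset], float]
) -> List[Tuple[Subset, float]]:
    """Repeatedly drop the sensor whose removal leaves the highest score."""
    current: Subset = frozenset(sensors)
    path = [(current, score(current))]
    while len(current) > 1:
        # Evaluate every subset that has exactly one fewer sensor.
        candidates = [current - {s} for s in sorted(current)]
        current = max(candidates, key=score)
        path.append((current, score(current)))
    return path


# Toy stand-in for classification accuracy: each hypothetical sensor
# contributes a fixed number of percentage points.
WEIGHTS = {"hand": 40, "forearm": 30, "upper_arm": 20, "back": 5, "head": 0}


def toy_score(subset: Subset) -> float:
    return sum(WEIGHTS[s] for s in subset) / 100


path = backward_elimination(list(WEIGHTS), toy_score)
for subset, acc in path:
    print(len(subset), sorted(subset), acc)
```

Reading the printed path from top to bottom shows how far the array can shrink before the score starts to fall, which is the comparison a sensor-reduction study needs.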
