David Western

Window Stacking Meta-Models for Clinical EEG Classification

Jan 14, 2024

Abstract:Windowing is a common technique in EEG machine learning classification and other time series tasks. However, a challenge arises when employing this technique: computational expense inhibits learning global relationships across an entire recording or set of recordings. Furthermore, the labels inherited by windows from their parent recordings may not accurately reflect the content of that window in isolation. To resolve these issues, we introduce a multi-stage model architecture, incorporating meta-learning principles tailored to time-windowed data aggregation. We further tested two distinct strategies to alleviate these issues: lengthening the window and utilizing overlapping to augment data. Our methods, when tested on the Temple University Hospital Abnormal EEG Corpus (TUAB), dramatically boosted the benchmark accuracy from 89.8 percent to 99.0 percent. This breakthrough performance surpasses prior performance projections for this dataset and paves the way for clinical applications of machine learning solutions to EEG interpretation challenges. On a broader and more varied dataset from the Temple University Hospital EEG Corpus (TUEG), we attained an accuracy of 86.7%, nearing the assumed performance ceiling set by variable inter-rater agreement on such datasets.
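The windowing step this abstract refers to can be illustrated with a minimal sketch. It is not the authors' pipeline: the function name `make_windows`, the toy signal, and the chosen lengths and strides are all assumptions made for illustration. It shows how each window inherits its parent recording's label, and how a stride shorter than the window length produces overlapping windows and therefore more training examples.

```python
from typing import List, Sequence, Tuple


def make_windows(
    signal: Sequence[float], label: int, length: int, stride: int
) -> List[Tuple[List[float], int]]:
    """Cut one recording into fixed-length windows (hypothetical helper).

    Every window inherits the label of the whole recording.
    A stride smaller than the window length yields overlapping windows.
    """
    if length <= 0 or stride <= 0:
        raise ValueError("length and stride must be positive")
    windows = []
    # Stop early enough that the last window is still full length.
    for start in range(0, len(signal) - length + 1, stride):
        windows.append((list(signal[start:start + length]), label))
    return windows


# Toy single-channel "recording" of 10 samples, labelled abnormal (1).
recording = list(range(10))
non_overlapping = make_windows(recording, label=1, length=4, stride=4)
overlapping = make_windows(recording, label=1, length=4, stride=2)
```

With these toy values, halving the stride doubles the number of windows taken from the same recording, and every one of them carries the recording-level label regardless of what it contains.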

Diegetic Graphical User Interfaces and Intuitive Control of Assistive Robots via Eye-gaze

Jan 08, 2024

Abstract:Individuals with tetraplegia and similar forms of paralysis suffer physically and emotionally due to a lack of autonomy. To help regain part of this autonomy, assistive robotic arms have been shown to increase living independence. However, users with paralysis pose unique challenging conditions for the control of these devices. In this article, we present the use of Diegetic Graphical User Interfaces, a novel, intuitive, and computationally inexpensive approach for gaze-controlled interfaces applied to robots. By using symbols paired with fiducial markers, interactive buttons can be defined in the real world which the user can trigger via gaze, and which can be embedded easily into the environment. We apply this system to pilot a 3-degree-of-freedom robotic arm for precision pick-and-place tasks. The interface is placed directly on the robot to allow intuitive and direct interaction, eliminating the need for context-switching between external screens, menus, and the robot. After calibration and a brief habituation period, twenty-one participants from multiple backgrounds, ages and eye-sight conditions completed the Yale-CMU-Berkeley (YCB) Block Pick and Place Protocol to benchmark the system, achieving a mean score of 13.71 out of the maximum 16.00 points. Good usability and user experience were reported (System Usability Score of 75.36) while achieving a low task workload measure (NASA-TLX of 44.76). Results show that users can employ multiple interface elements to perform actions with minimal practice and with a small cognitive load. To our knowledge, this is the first easily reconfigurable screenless system that enables robot control entirely via gaze for Cartesian robot control without the need for eye or face gestures.

Scope and Arbitration in Machine Learning Clinical EEG Classification

Mar 11, 2023

Abstract:A key task in clinical EEG interpretation is to classify a recording or session as normal or abnormal. In machine learning approaches to this task, recordings are typically divided into shorter windows for practical reasons, and these windows inherit the label of their parent recording. We hypothesised that window labels derived in this manner can be misleading: for example, windows without evident abnormalities can be labelled 'abnormal', disrupting the learning process and degrading performance. We explored two separable approaches to mitigate this problem: increasing the window length and introducing a second-stage model to arbitrate between the window-specific predictions within a recording. Evaluating these methods on the Temple University Hospital Abnormal EEG Corpus, we significantly improved state-of-the-art average accuracy from 89.8 percent to 93.3 percent. This result defies previous estimates of the upper limit for performance on this dataset and represents a major step towards clinical translation of machine learning approaches to this problem.
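The idea of a second stage that arbitrates between window-level predictions can be sketched as follows. This is only an illustration under stated assumptions: the abstract does not specify the second-stage model, so a simple learned threshold (a decision stump) on the fraction of windows scored abnormal stands in for it, and all names and probability values below are made up.

```python
from typing import Sequence


def abnormal_fraction(window_probs: Sequence[float], cutoff: float = 0.5) -> float:
    """Share of windows that a first-stage model scored as abnormal."""
    return sum(p >= cutoff for p in window_probs) / len(window_probs)


def fit_stump(fractions: Sequence[float], labels: Sequence[int]) -> float:
    """Pick the threshold on the abnormal fraction that maximises training accuracy.

    Stand-in for a second-stage model; ties keep the lowest threshold.
    """
    best_threshold, best_correct = 0.0, -1
    for t in sorted(set(fractions)):
        correct = sum((f >= t) == bool(y) for f, y in zip(fractions, labels))
        if correct > best_correct:
            best_threshold, best_correct = t, correct
    return best_threshold


def predict_recording(window_probs: Sequence[float], threshold: float) -> int:
    """Recording-level decision: 1 = abnormal, 0 = normal."""
    return int(abnormal_fraction(window_probs) >= threshold)


# Made-up first-stage outputs: one list of window probabilities per recording.
train_probs = [
    [0.1, 0.2, 0.1, 0.3],  # normal
    [0.2, 0.6, 0.1, 0.2],  # normal, with one spurious window
    [0.1, 0.9, 0.8, 0.2],  # abnormal, but half the windows look normal
    [0.9, 0.8, 0.7, 0.9],  # abnormal
]
train_labels = [0, 0, 1, 1]

threshold = fit_stump([abnormal_fraction(p) for p in train_probs], train_labels)
mostly_clean = predict_recording([0.1, 0.2, 0.9, 0.1, 0.2, 0.1, 0.1, 0.3], threshold)
mixed = predict_recording([0.1, 0.9, 0.8, 0.2, 0.7, 0.1], threshold)
```

The third training recording shows the labelling problem the abstract describes: half of its windows look normal in isolation even though the recording is abnormal, so the recording-level decision is left to the second stage rather than to any single window.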

A Corroborative Approach to Verification and Validation of Human-Robot Teams

Aug 15, 2018

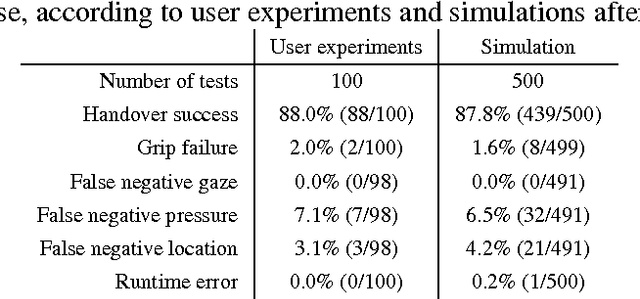

Abstract:We present an approach for the verification and validation (V&V) of robot assistants in the context of human-robot interactions (HRI), to demonstrate their trustworthiness through corroborative evidence of their safety and functional correctness. Key challenges include the complex and unpredictable nature of the real world in which assistant and service robots operate, the limitations on available V&V techniques when used individually, and the consequent lack of confidence in the V&V results. Our approach, called corroborative V&V, addresses these challenges by combining several different V&V techniques; in this paper we use formal verification (model checking), simulation-based testing, and user validation in experiments with a real robot. We demonstrate our corroborative V&V approach through a handover task, the most critical part of a complex cooperative manufacturing scenario, for which we propose some safety and liveness requirements to verify and validate. We construct formal models, simulations and an experimental test rig for the HRI. To capture requirements we use temporal logic properties, assertion checkers and textual descriptions. This combination of approaches allows V&V of the HRI task at different levels of modelling detail and thoroughness of exploration, thus overcoming the individual limitations of each technique. Should the resulting V&V evidence present discrepancies, an iterative process between the different V&V techniques takes place until corroboration between the V&V techniques is gained from refining and improving the assets (i.e., system and requirement models) to represent the HRI task in a more truthful manner. Therefore, corroborative V&V affords a systematic approach to 'meta-V&V,' in which different V&V techniques can be used to corroborate and check one another, increasing the level of certainty in the results of V&V.

Systematic and Realistic Testing in Simulation of Control Code for Robots in Collaborative Human-Robot Interactions

Jul 13, 2016

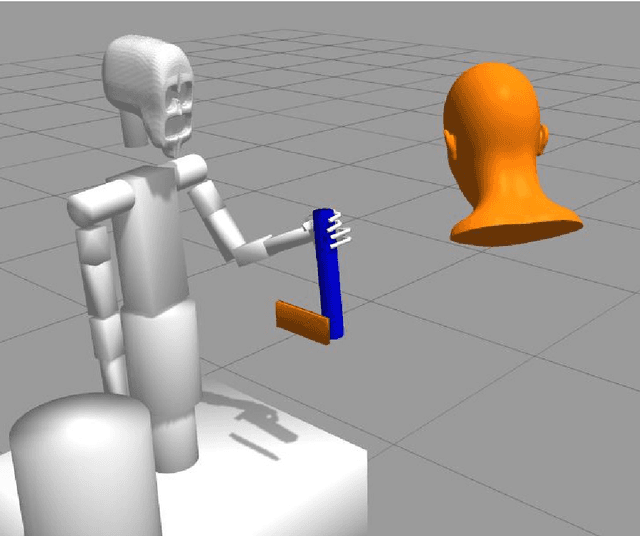

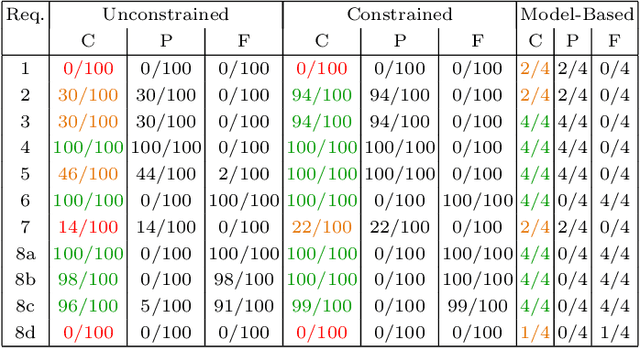

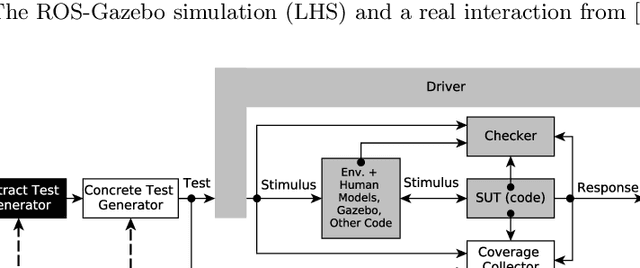

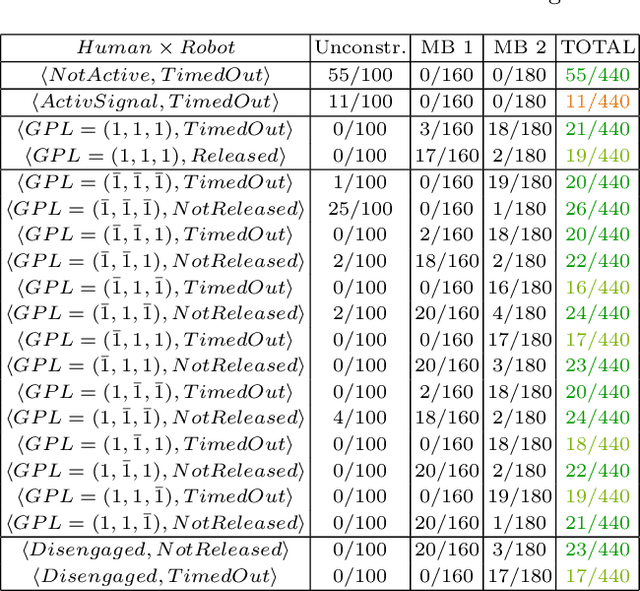

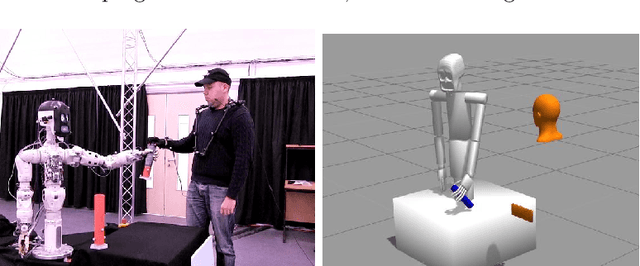

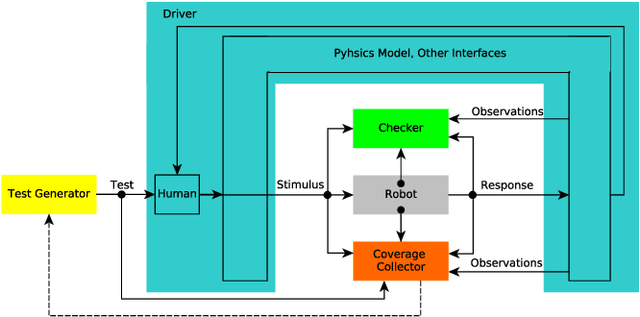

Abstract:Industries such as flexible manufacturing and home care will be transformed by the presence of robotic assistants. Assurance of safety and functional soundness for these robotic systems will require rigorous verification and validation. We propose testing in simulation using Coverage-Driven Verification (CDV) to guide the testing process in an automatic and systematic way. We use a two-tiered test generation approach, where abstract test sequences are computed first and then concretized (e.g., data and variables are instantiated), to reduce the complexity of the test generation problem. To demonstrate the effectiveness of our approach, we developed a testbench for robotic code, running in ROS-Gazebo, that implements an object handover as part of a human-robot interaction (HRI) task. Tests are generated to stimulate the robot's code in a realistic manner, through stimulating the human, environment, sensors, and actuators in simulation. We compare the merits of unconstrained, constrained and model-based test generation in achieving thorough exploration of the code under test, and interesting combinations of human-robot interactions. Our results show that CDV combined with systematic test generation achieves a very high degree of automation in simulation-based verification of control code for robots in HRI.
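The two-tiered test generation described above (abstract sequences first, concrete values second) can be sketched roughly as below. Everything in the sketch is hypothetical: the step names, the graph of allowed orderings, and the parameter ranges are invented for illustration and are not taken from the paper's testbench.

```python
import random
from typing import Dict, List, Tuple

# Tier 1 input: hypothetical graph of abstract human actions in a handover.
ABSTRACT_GRAPH: Dict[str, List[str]] = {
    "start": ["activate_robot"],
    "activate_robot": ["gaze_at_object", "gaze_away"],
    "gaze_at_object": ["apply_pressure", "no_pressure"],
    "gaze_away": ["apply_pressure", "no_pressure"],
    "apply_pressure": [],
    "no_pressure": [],
}

# Tier 2 input: hypothetical value ranges used to instantiate each step.
PARAM_RANGES: Dict[str, Dict[str, Tuple[float, float]]] = {
    "activate_robot": {"delay_s": (0.0, 2.0)},
    "gaze_at_object": {"duration_s": (0.5, 3.0)},
    "gaze_away": {"duration_s": (0.5, 3.0)},
    "apply_pressure": {"force_n": (1.0, 10.0)},
    "no_pressure": {},
}


def abstract_sequences(graph, node="start", prefix=()):
    """Tier 1: enumerate every path from `node` to a step with no successors."""
    successors = graph[node]
    if not successors:
        return [list(prefix)]
    sequences = []
    for nxt in successors:
        sequences.extend(abstract_sequences(graph, nxt, prefix + (nxt,)))
    return sequences


def concretize(sequence, rng):
    """Tier 2: attach sampled parameter values to each abstract step."""
    concrete = []
    for step in sequence:
        params = {
            name: round(rng.uniform(lo, hi), 2)
            for name, (lo, hi) in PARAM_RANGES[step].items()
        }
        concrete.append((step, params))
    return concrete


abstract_tests = abstract_sequences(ABSTRACT_GRAPH)
rng = random.Random(42)  # fixed seed so generated tests are reproducible
concrete_tests = [concretize(seq, rng) for seq in abstract_tests]
```

Separating the tiers keeps the ordering problem small and discrete, while the numeric detail is filled in afterwards; the same abstract sequence can be concretized many times with different seeds.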

Coverage-Driven Verification - An approach to verify code for robots that directly interact with humans

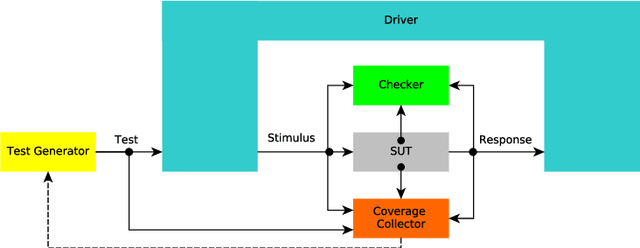

Sep 16, 2015

Abstract:Collaborative robots could transform several industries, such as manufacturing and healthcare, but they present a significant challenge to verification. The complex nature of their working environment necessitates testing in realistic detail under a broad range of circumstances. We propose the use of Coverage-Driven Verification (CDV) to meet this challenge. By automating the simulation-based testing process as far as possible, CDV provides an efficient route to coverage closure. We discuss the need, practical considerations, and potential benefits of transferring this approach from microelectronic design verification to the field of human-robot interaction. We demonstrate the validity and feasibility of the proposed approach by constructing a custom CDV testbench and applying it to the verification of an object handover task.
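As a rough illustration of a coverage-driven loop, the sketch below generates random stimuli, runs a toy stand-in for the code under test, checks one assertion, and stops once every coverage bin has been hit. It is a minimal sketch under assumptions, not the testbench from the paper: the function `robot_releases`, the three boolean stimuli, and the single safety assertion are all hypothetical.

```python
import itertools
import random
from typing import List, Tuple


def robot_releases(gaze_on_object: bool, pressure_applied: bool, location_ok: bool) -> bool:
    """Toy stand-in for the robot code under test (hypothetical)."""
    return gaze_on_object and pressure_applied and location_ok


def run_cdv(seed: int = 0, max_tests: int = 1000) -> Tuple[int, float, List[tuple]]:
    """Generate random tests until all coverage bins are hit or the budget runs out."""
    rng = random.Random(seed)
    # Coverage model: every combination of the three boolean stimuli.
    bins = set(itertools.product([False, True], repeat=3))
    covered = set()
    failures = []
    tests_run = 0
    while covered != bins and tests_run < max_tests:
        stimulus = tuple(rng.random() < 0.5 for _ in range(3))
        released = robot_releases(*stimulus)
        # Assertion checker: the object is never released without pressure.
        if released and not stimulus[1]:
            failures.append(stimulus)
        covered.add(stimulus)
        tests_run += 1
    return tests_run, len(covered) / len(bins), failures


tests_run, coverage, failures = run_cdv(seed=0)
```

The loop stops on coverage closure rather than after a fixed number of tests, which is the property the abstract highlights; a real testbench would replace the toy function with a simulation run and use a richer coverage model.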
