Anthony G. Pipe

A Corroborative Approach to Verification and Validation of Human-Robot Teams

Aug 15, 2018

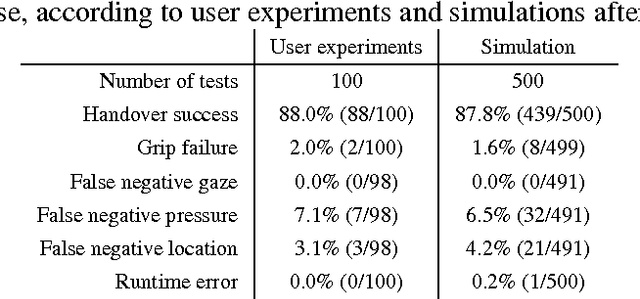

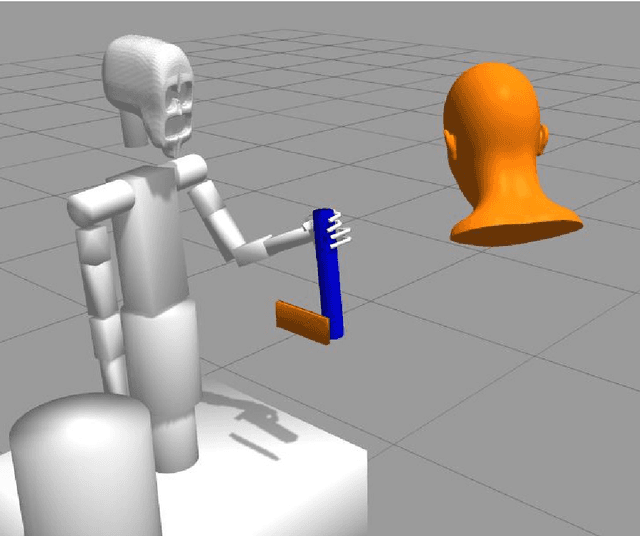

Abstract: We present an approach for the verification and validation (V&V) of robot assistants in the context of human-robot interactions (HRI), to demonstrate their trustworthiness through corroborative evidence of their safety and functional correctness. Key challenges include the complex and unpredictable nature of the real world in which assistant and service robots operate, the limitations on available V&V techniques when used individually, and the consequent lack of confidence in the V&V results. Our approach, called corroborative V&V, addresses these challenges by combining several different V&V techniques; in this paper we use formal verification (model checking), simulation-based testing, and user validation in experiments with a real robot. We demonstrate our corroborative V&V approach through a handover task, the most critical part of a complex cooperative manufacturing scenario, for which we propose some safety and liveness requirements to verify and validate. We construct formal models, simulations and an experimental test rig for the HRI. To capture requirements we use temporal logic properties, assertion checkers and textual descriptions. This combination of approaches allows V&V of the HRI task at different levels of modelling detail and thoroughness of exploration, thus overcoming the individual limitations of each technique. Should the resulting V&V evidence present discrepancies, an iterative process between the different V&V techniques takes place until corroboration between the V&V techniques is gained from refining and improving the assets (i.e., system and requirement models) to represent the HRI task in a more truthful manner. Therefore, corroborative V&V affords a systematic approach to 'meta-V&V,' in which different V&V techniques can be used to corroborate and check one another, increasing the level of certainty in the results of V&V.

Model-based Test Generation for Robotic Software: Automata versus Belief-Desire-Intention Agents

Dec 12, 2016

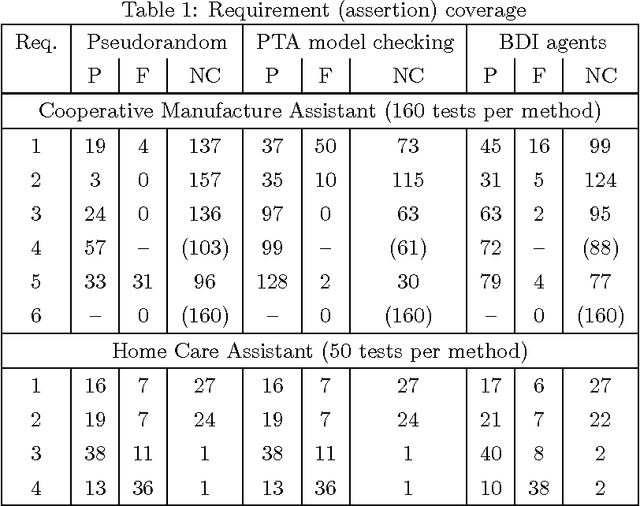

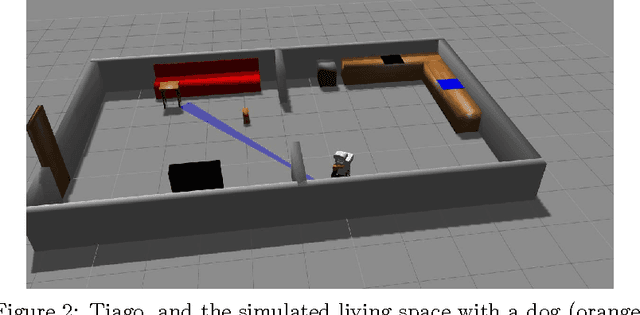

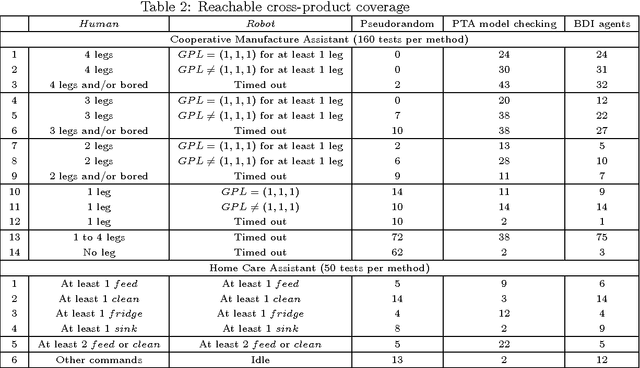

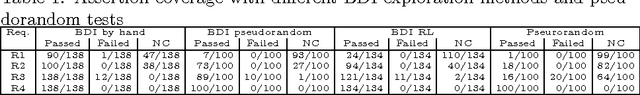

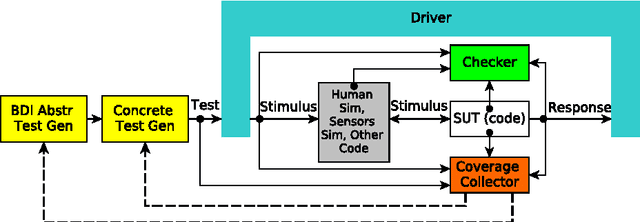

Abstract: Robotic code needs to be verified to ensure its safety and functional correctness, especially when the robot is interacting with people. Testing real code in simulation is a viable option. However, generating tests that cover rare scenarios, as well as exercising most of the code, is a challenge amplified by the complexity of the interactions between the environment and the software. Model-based test generation methods can automate otherwise manual processes and facilitate reaching rare scenarios during testing. In this paper, we compare using Belief-Desire-Intention (BDI) agents as models for test generation with more conventional automata-based techniques that exploit model checking, in terms of practicality, performance, transferability to different scenarios, and exploration ('coverage'), through two case studies: a cooperative manufacturing task, and a home care scenario. The results highlight the advantages of using BDI agents for test generation. BDI agents naturally emulate the agency present in Human-Robot Interactions (HRIs), and are thus more expressive than automata. The performance of the BDI-based test generation is at least as high, and the achieved coverage is higher or equivalent, compared to test generation based on model checking automata.

Systematic and Realistic Testing in Simulation of Control Code for Robots in Collaborative Human-Robot Interactions

Jul 13, 2016

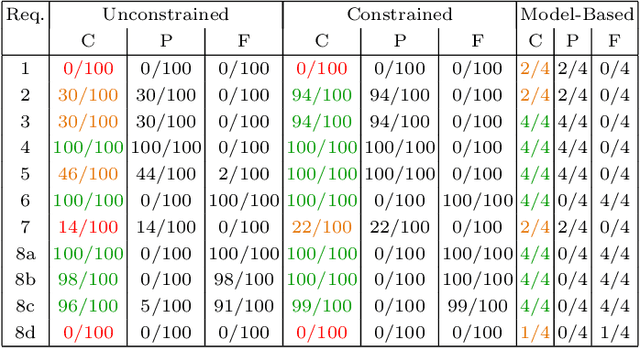

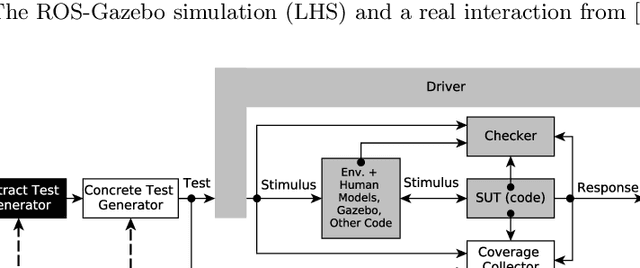

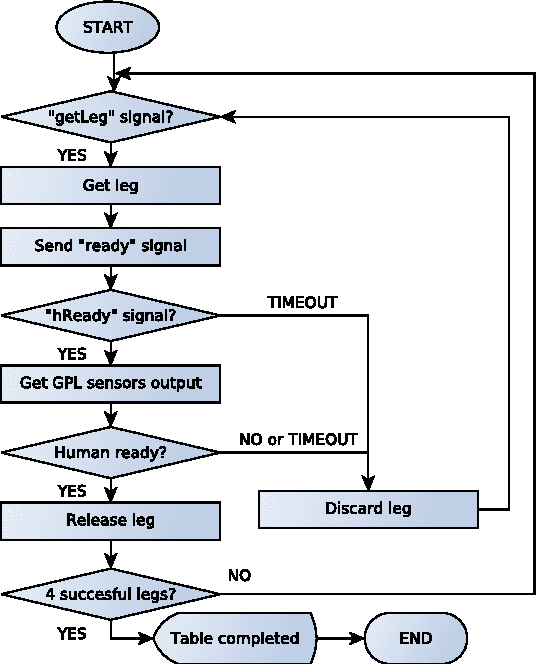

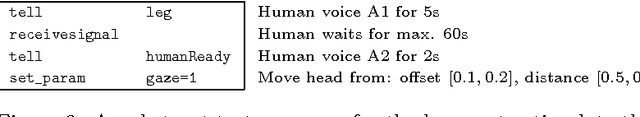

Abstract: Industries such as flexible manufacturing and home care will be transformed by the presence of robotic assistants. Assurance of safety and functional soundness for these robotic systems will require rigorous verification and validation. We propose testing in simulation using Coverage-Driven Verification (CDV) to guide the testing process in an automatic and systematic way. We use a two-tiered test generation approach, where abstract test sequences are computed first and then concretized (e.g., data and variables are instantiated), to reduce the complexity of the test generation problem. To demonstrate the effectiveness of our approach, we developed a testbench for robotic code, running in ROS-Gazebo, that implements an object handover as part of a human-robot interaction (HRI) task. Tests are generated to stimulate the robot's code in a realistic manner, through stimulating the human, environment, sensors, and actuators in simulation. We compare the merits of unconstrained, constrained and model-based test generation in achieving thorough exploration of the code under test, and interesting combinations of human-robot interactions. Our results show that CDV combined with systematic test generation achieves a very high degree of automation in simulation-based verification of control code for robots in HRI.
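The two-tiered idea in this abstract (compute an abstract test sequence first, then concretize it by instantiating data) can be illustrated with a minimal sketch. Everything named here is an assumption made for illustration: the action names, the parameter ranges, and the random selection strategy are hypothetical and are not taken from the paper or its ROS-Gazebo testbench.

```python
import random

# Hypothetical abstract actions for a simulated human in a handover task.
ABSTRACT_ACTIONS = ["activate_robot", "offer_hand", "gaze_at_object",
                    "apply_pressure", "wait"]

# Hypothetical parameter ranges used to concretize each abstract action.
PARAM_RANGES = {
    "activate_robot": {},
    "offer_hand": {"x_m": (0.2, 0.6), "y_m": (-0.3, 0.3)},
    "gaze_at_object": {"duration_s": (0.5, 3.0)},
    "apply_pressure": {"force_n": (0.0, 5.0)},
    "wait": {"duration_s": (0.1, 2.0)},
}


def abstract_test(rng, length):
    """Tier 1: choose a sequence of abstract actions (no data yet)."""
    return [rng.choice(ABSTRACT_ACTIONS) for _ in range(length)]


def concretize(rng, sequence):
    """Tier 2: instantiate each abstract action with concrete values."""
    return [
        (action, {name: rng.uniform(low, high)
                  for name, (low, high) in PARAM_RANGES[action].items()})
        for action in sequence
    ]


def generate_tests(seed, n_tests, length):
    """Generate reproducible concrete tests from a fixed seed."""
    rng = random.Random(seed)
    return [concretize(rng, abstract_test(rng, length)) for _ in range(n_tests)]


tests = generate_tests(seed=0, n_tests=3, length=4)
```

Separating the tiers keeps the sequence-level search small: the abstract tier only decides which actions occur and in what order, while the numeric values are filled in afterwards.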

Intelligent Agent-Based Stimulation for Testing Robotic Software in Human-Robot Interactions

Jul 13, 2016

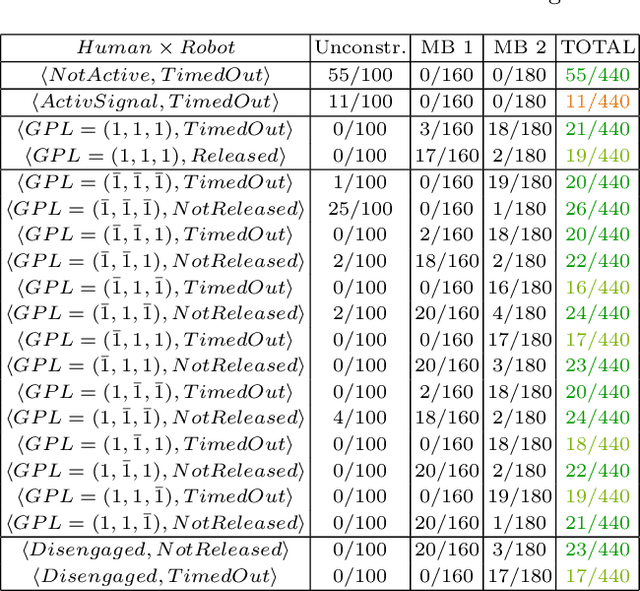

Abstract: The challenges of robotic software testing extend beyond conventional software testing. Valid, realistic and interesting tests need to be generated for multiple programs and hardware running concurrently, deployed into dynamic environments with people. We investigate the use of Belief-Desire-Intention (BDI) agents as models for test generation, in the domain of human-robot interaction (HRI) in simulations. These models provide rational agency, causality, and a reasoning mechanism for planning, which emulate both intelligent and adaptive robots, as well as smart testing environments directed by humans. We introduce reinforcement learning (RL) to automate the exploration of the BDI models using a reward function based on coverage feedback. Our approach is evaluated using a collaborative manufacture example, where the robotic software under test is stimulated indirectly via a simulated human co-worker. We conclude that BDI agents provide intuitive models for test generation in the HRI domain. Our results demonstrate that RL can fully automate BDI model exploration, leading to very effective coverage-directed test generation.
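As a rough illustration of exploring a model with a coverage-based reward, the sketch below runs tabular Q-learning over a tiny, hypothetical state graph and pays a reward only when a step reaches a state that has not been covered before. The abstract does not specify the RL algorithm, the reward definition, or the BDI model, so the graph, the hyperparameters, and the choice of Q-learning are all assumptions; a plain transition table stands in for a real BDI agent.

```python
import random

# Hypothetical model of a simulated human co-worker: state -> {action: next state}.
# Terminal states have no outgoing actions.
TRANSITIONS = {
    "idle": {"signal_ready": "ready", "stay_idle": "idle_timeout"},
    "ready": {"offer_hand": "handover", "look_away": "no_gaze"},
    "handover": {},
    "no_gaze": {},
    "idle_timeout": {},
}
START = "idle"


def explore(episodes=2000, epsilon=0.3, alpha=0.5, gamma=0.9, seed=0):
    """Epsilon-greedy Q-learning where reward = 1 for newly covered states."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s, acts in TRANSITIONS.items() for a in acts}
    covered = {START}
    for _ in range(episodes):
        state = START
        while TRANSITIONS[state]:  # stop at terminal states
            actions = list(TRANSITIONS[state])
            if rng.random() < epsilon:
                action = rng.choice(actions)  # explore
            else:
                action = max(actions, key=lambda a: q[(state, a)])  # exploit
            nxt = TRANSITIONS[state][action]
            # Coverage feedback: reward only the first visit to a state.
            reward = 1.0 if nxt not in covered else 0.0
            covered.add(nxt)
            future = max((q[(nxt, a)] for a in TRANSITIONS[nxt]), default=0.0)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = nxt
    return q, covered


q_table, covered = explore()
```

Because the reward vanishes once a state is covered, the learned values decay over time; in a realistic setting the coverage model would be much larger and come from the code under test rather than from the model's own states.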

Believing in BERT: Using expressive communication to enhance trust and counteract operational error in physical Human-Robot Interaction

Jun 22, 2016

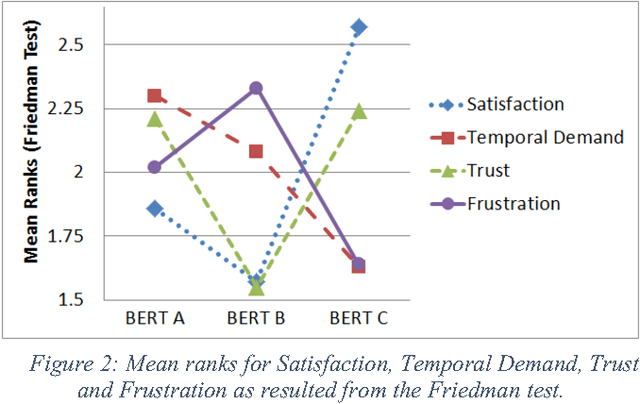

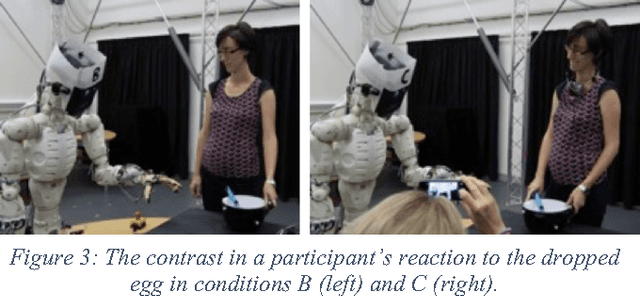

Abstract: Strategies are necessary to mitigate the impact of unexpected behavior in collaborative robotics, and research to develop solutions is lacking. Our aim here was to explore the benefits of an affective interaction, as opposed to a more efficient, less error-prone but non-communicative one. The experiment took the form of an omelet-making task, with a wide range of participants interacting directly with BERT2, a humanoid robot assistant. Having significant implications for design, results suggest that efficiency is not the most important aspect of performance for users; a personable, expressive robot was found to be preferable over a more efficient one, despite a considerable trade-off in time taken to perform the task. Our findings also suggest that a robot exhibiting human-like characteristics may make users reluctant to 'hurt its feelings'; they may even lie in order to avoid this.
