David Roberts

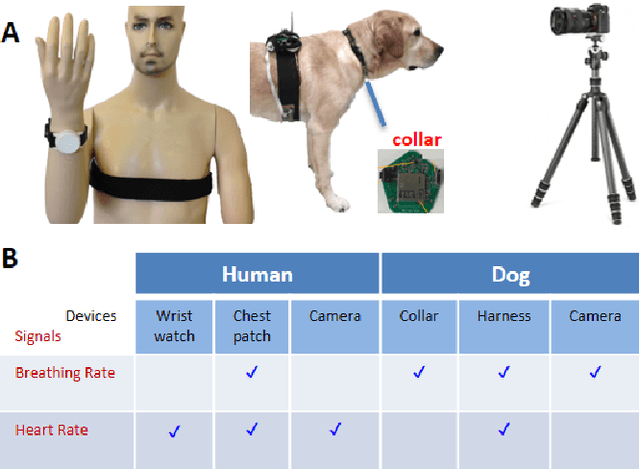

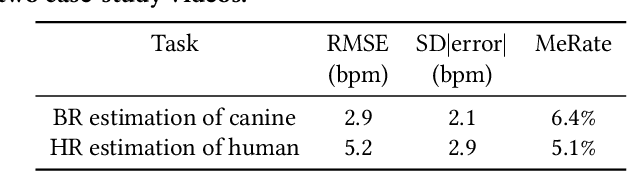

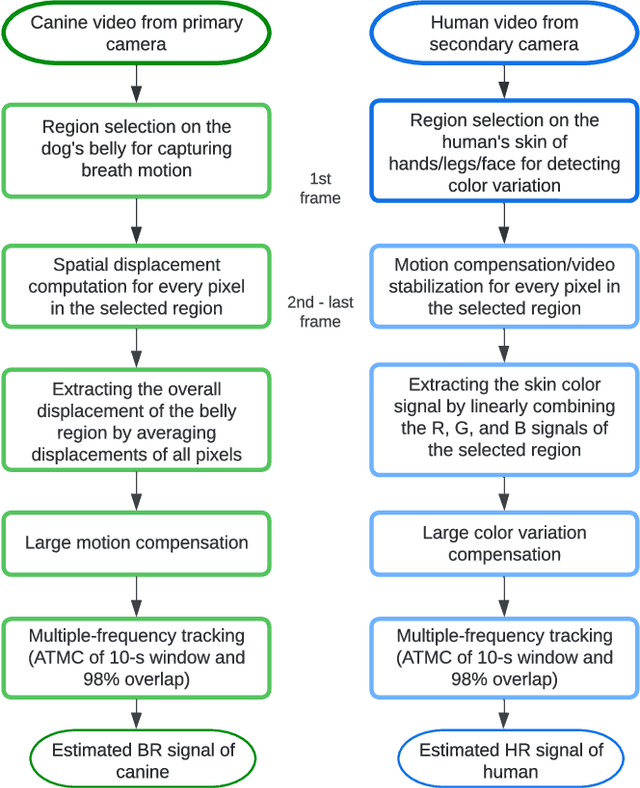

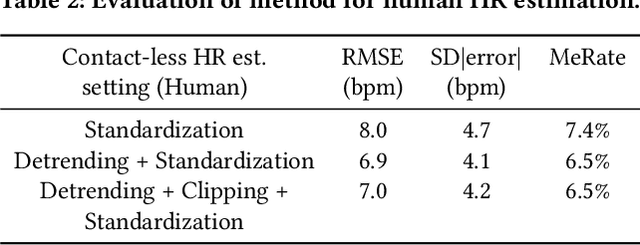

Contact-Free Simultaneous Sensing of Human Heart Rate and Canine Breathing Rate for Animal Assisted Interactions

Nov 11, 2022

Abstract: Animal Assisted Interventions (AAIs) involve pleasant interactions between humans and animals and can potentially benefit both types of participants. Research in this field may help to uncover universal insights about cross-species bonding, dynamic affect detection, and the influence of environmental factors on dyadic interactions. However, experiments evaluating these outcomes are limited to methodologies that are qualitative, subjective, and cumbersome due to the ergonomic challenges of attaching sensors to the body. Current approaches in AAIs also face challenges when translating beyond controlled clinical environments or research contexts, and they often neglect measurements from the animal during the interaction. Here, we present our preliminary effort toward a contact-free approach to facilitate AAI assessment via the physiological sensing of humans and canines using consumer-grade cameras. This initial effort focuses on verifying the technological feasibility of remotely sensing the heart rate signal of the human subject and the breathing rate signal of the dog subject while they are interacting. Our custom-designed vision-based algorithms tolerate small amounts of motion, such as patting and involuntary body shaking or movement. The experimental results show that the physiological measurements obtained by our algorithms were consistent with those provided by the standard reference devices. With further validation and expansion to other physiological parameters, the presented approach offers great promise for many scenarios, from the AAI research space to veterinary, surgical, and clinical applications.

Interactive Learning from Policy-Dependent Human Feedback

Jan 21, 2017

Abstract: For agents and robots to become more useful, they must be able to quickly learn from non-technical users. This paper investigates the problem of interactively learning behaviors communicated by a human teacher using positive and negative feedback. Much previous work on this problem has assumed that the feedback people provide for a decision depends on the behavior they are teaching and is independent of the learner's current policy. We present empirical results that show this assumption to be false: whether human trainers give positive or negative feedback for a decision is influenced by the learner's current policy. We argue that policy-dependent feedback, in addition to being commonplace, enables useful training strategies from which agents should benefit. Based on this insight, we introduce Convergent Actor-Critic by Humans (COACH), an algorithm for learning from policy-dependent feedback that converges to a local optimum. Finally, we demonstrate that COACH can successfully learn multiple behaviors on a physical robot, even with noisy image features.
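The abstract does not spell out the update rule, so the following is only an illustrative sketch of the general idea, not the authors' implementation. It assumes a stateless softmax policy over three actions and treats the trainer's scalar feedback as the advantage term in an ordinary policy-gradient step. The names `feedback_update`, `simulated_trainer`, and `train`, the learning rate, and the simulated trainer itself are all hypothetical. The trainer is deliberately policy-dependent: its praise for the target action shrinks as the learner already favors that action.

```python
import math
import random


def softmax(prefs):
    """Convert action preferences into a probability distribution."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def feedback_update(prefs, action, feedback, lr=0.5):
    """One policy-gradient step with human feedback in place of the advantage.

    For a softmax policy, d/d prefs[k] of log pi(action) is
    1[k == action] - pi(k).
    """
    probs = softmax(prefs)
    return [
        p + lr * feedback * ((1.0 if k == action else 0.0) - probs[k])
        for k, p in enumerate(prefs)
    ]


def simulated_trainer(action, probs, target=0):
    """Hypothetical policy-dependent trainer (an assumption for illustration).

    Praise for the target action fades as the learner already prefers it;
    any other action receives a fixed negative signal.
    """
    if action == target:
        return 1.0 - probs[target]
    return -1.0


def train(steps=200, seed=0):
    """Run the feedback loop and return the final action probabilities."""
    rng = random.Random(seed)
    prefs = [0.0, 0.0, 0.0]
    for _ in range(steps):
        probs = softmax(prefs)
        action = rng.choices(range(len(prefs)), weights=probs)[0]
        feedback = simulated_trainer(action, probs)
        prefs = feedback_update(prefs, action, feedback)
    return softmax(prefs)


if __name__ == "__main__":
    print(train())
```

Because the feedback multiplies the log-probability gradient, praise that diminishes as the policy improves simply shrinks the step size rather than pushing the learner away from the behavior it has just acquired.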
