Mushfiqur Rahman

SpectraSentinel: LightWeight Dual-Stream Real-Time Drone Detection, Tracking and Payload Identification

Jul 30, 2025

Abstract: The proliferation of drones in civilian airspace has raised urgent security concerns, necessitating robust real-time surveillance systems. In response to the 2025 VIP Cup challenge tasks (drone detection, tracking, and payload identification), we propose a dual-stream drone monitoring framework. Our approach deploys independent You Only Look Once v11-nano (YOLOv11n) object detectors on parallel infrared (thermal) and visible (RGB) data streams, deliberately avoiding early fusion. This separation allows each model to be specifically optimized for the distinct characteristics of its input modality, addressing the unique challenges posed by small aerial objects in diverse environmental conditions. We customize data preprocessing and augmentation strategies per domain (for example, limiting color jitter for IR imagery) and fine-tune training hyperparameters to enhance detection performance under conditions of heavy noise, low light, and motion blur. The resulting lightweight YOLOv11n models demonstrate high accuracy in distinguishing drones from birds and in classifying payload types, all while maintaining real-time performance. This report details the rationale for a dual-modality design, the specialized training pipelines, and the architectural optimizations that collectively enable efficient and accurate drone surveillance across RGB and IR channels.

UAV-Assisted Coverage Hole Detection Using Reinforcement Learning in Urban Cellular Networks

Mar 09, 2025

Abstract: Deployment of cellular networks in urban areas requires addressing various challenges. For example, high-rise buildings with varying geometrical shapes and heights contribute to signal attenuation, reflection, diffraction, and scattering effects. This creates a high possibility of coverage holes (CHs) in the proximity of the buildings. Detecting these CHs is critical for network operators to ensure quality of service, as customers in such areas experience weak or no signal reception. To address this challenge, we propose an approach that uses an autonomous vehicle, such as an unmanned aerial vehicle (UAV), to detect CHs, minimizing drive-test effort and reducing human labor. The UAV leverages reinforcement learning (RL) to find CHs using stored local building maps, its current location, and measured signal strengths. As the UAV moves, it dynamically updates its knowledge of the signal environment and its direction to a nearby CH while avoiding collisions with buildings. We created a wide range of testing scenarios using building maps from OpenStreetMap and signal strength data generated by NVIDIA Sionna raytracing simulations. The results demonstrate that the RL-based approach performs better than non-machine learning, geometry-based methods in detecting CHs in urban areas. Additionally, even with a limited number of UAV measurements, the method achieves performance close to theoretical upper bounds that assume complete knowledge of all signal strengths.

Individualized Deepfake Detection Exploiting Traces Due to Double Neural-Network Operations

Dec 13, 2023

Abstract: In today's digital landscape, journalists urgently require tools to verify the authenticity of facial images and videos depicting specific public figures before incorporating them into news stories. Existing deepfake detectors are not optimized for this detection task when an image is associated with a specific and identifiable individual. This study focuses on the deepfake detection of facial images of individual public figures. We propose to condition the detector on the identity of the identified individual, given the advantages revealed by our theory-driven simulations. While most detectors in the literature rely on perceptible or imperceptible artifacts present in deepfake facial images, we demonstrate that the detection performance can be improved by exploiting the idempotency property of neural networks. In our approach, the training process involves double neural-network operations where we pass an authentic image through a deepfake simulating network twice. Experimental results show that the proposed method improves the area under the curve (AUC) from 0.92 to 0.94 and reduces its standard deviation by 17%. For evaluating the detection performance of individual public figures, a facial image dataset with individuals' names is required, a criterion not met by the current deepfake datasets. To address this, we curated a dataset comprising 32k images featuring 45 public figures, which we intend to release to the public after the paper is published.
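
The abstract does not spell out how the idempotency trace is measured, so the following is only a toy illustration of the general idea, not the authors' detector. It assumes a hypothetical stand-in "network" (`simulate_network`, plain quantization) that is exactly idempotent; real networks are at best approximately so. The pixel values and the `step` parameter are likewise made up for the example.

```python
def simulate_network(pixels, step=8):
    """Toy stand-in for a deepfake-simulating network (an assumption).

    It snaps each value to a multiple of `step`. Quantization is exactly
    idempotent: applying it twice gives the same result as applying it once.
    """
    return [step * round(p / step) for p in pixels]


def residual(pixels, step=8):
    """Mean absolute change caused by one more pass through the toy network."""
    out = simulate_network(pixels, step)
    return sum(abs(a - b) for a, b in zip(pixels, out)) / len(pixels)


authentic = [3, 17, 42, 100, 201, 250]    # hypothetical pixel values
fake = simulate_network(authentic)        # has already been through the network once

residual_authentic = residual(authentic)  # the first pass visibly changes the input
residual_fake = residual(fake)            # a second pass leaves the input unchanged

print(residual_authentic > residual_fake)
```

In this sketch an input that has already been through the network is a fixed point of it, while an authentic input is not, so the size of the change under one extra pass can serve as a separating feature.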

Bengali Document Layout Analysis with Detectron2

Aug 26, 2023

Abstract: Document digitization is vital for preserving historical records, efficient document management, and advancing OCR (Optical Character Recognition) research. Document Layout Analysis (DLA) involves segmenting documents into meaningful units like text boxes, paragraphs, images, and tables. Challenges arise when dealing with diverse layouts, historical documents, and unique scripts like Bengali, hindered by the lack of comprehensive Bengali DLA datasets. We improved the accuracy of the DLA model for Bengali documents by utilizing advanced Mask R-CNN models available in the Detectron2 library. Our evaluation involved three variants: Mask R-CNN R-50, R-101, and X-101, both with and without pretrained weights from PubLayNet, on the BaDLAD dataset, which contains human-annotated Bengali documents in four categories: text boxes, paragraphs, images, and tables. Results show the effectiveness of these models in accurately segmenting Bengali documents. We discuss speed-accuracy tradeoffs and underscore the significance of pretrained weights. Our findings expand the applicability of Mask R-CNN in document layout analysis, efficient document management, and OCR research while suggesting future avenues for fine-tuning and data augmentation.

Contact-Free Simultaneous Sensing of Human Heart Rate and Canine Breathing Rate for Animal Assisted Interactions

Nov 11, 2022

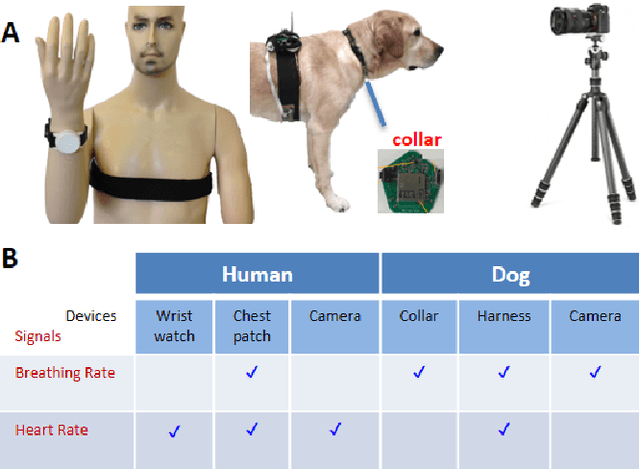

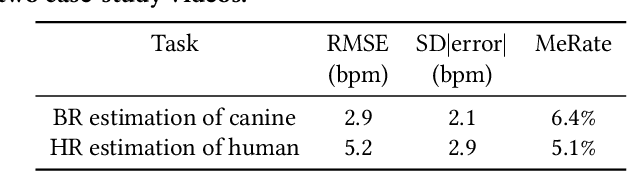

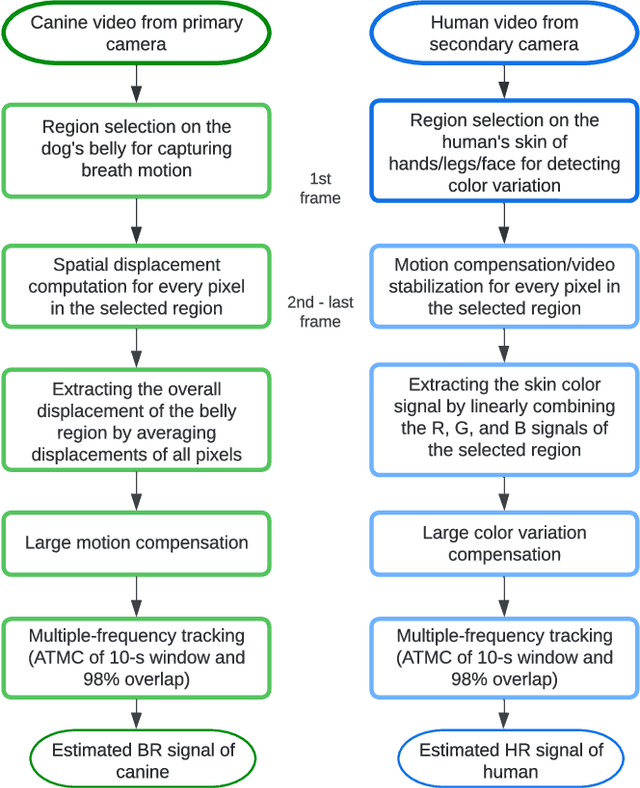

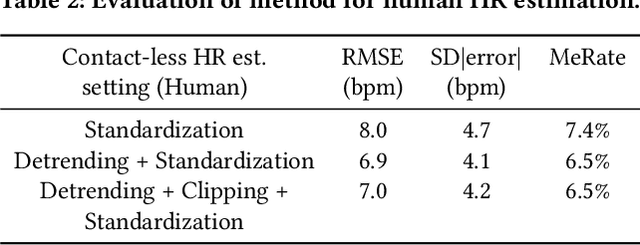

Abstract: Animal Assisted Interventions (AAIs) involve pleasant interactions between humans and animals and can potentially benefit both types of participants. Research in this field may help to uncover universal insights about cross-species bonding, dynamic affect detection, and the influence of environmental factors on dyadic interactions. However, experiments evaluating these outcomes are limited to methodologies that are qualitative, subjective, and cumbersome due to the ergonomic challenges related to attaching sensors to the body. Current approaches in AAIs also face challenges when translating beyond controlled clinical environments or research contexts. These also often neglect the measurements from the animal throughout the interaction. Here, we present our preliminary effort toward a contact-free approach to facilitate AAI assessment via the physiological sensing of humans and canines using consumer-grade cameras. This initial effort focuses on verifying the technological feasibility of remotely sensing the heart rate signal of the human subject and the breathing rate signal of the dog subject while they are interacting. Small amounts of motion such as patting and involuntary body shaking or movement can be tolerated with our custom designed vision-based algorithms. The experimental results show that the physiological measurements obtained by our algorithms were consistent with those provided by the standard reference devices. With further validation and expansion to other physiological parameters, the presented approach offers great promise for many scenarios from the AAI research space to veterinary, surgical, and clinical applications.