Dasol Hong

CoCoA-Mix: Confusion-and-Confidence-Aware Mixture Model for Context Optimization

Jun 09, 2025

Abstract:Prompt tuning, which adapts vision-language models by freezing model parameters and optimizing only the prompt, has proven effective for task-specific adaptations. The core challenge in prompt tuning is improving specialization for a specific task and generalization for unseen domains. However, frozen encoders often produce misaligned features, leading to confusion between classes and limiting specialization. To overcome this issue, we propose a confusion-aware loss (CoA-loss) that improves specialization by refining the decision boundaries between confusing classes. Additionally, we mathematically demonstrate that a mixture model can enhance generalization without compromising specialization. This is achieved using confidence-aware weights (CoA-weights), which adjust the weights of each prediction in the mixture model based on its confidence within the class domains. Extensive experiments show that CoCoA-Mix, a mixture model with CoA-loss and CoA-weights, outperforms state-of-the-art methods by enhancing specialization and generalization. Our code is publicly available at https://github.com/url-kaist/CoCoA-Mix.
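The general idea of a weighted mixture of predictions can be illustrated with a minimal sketch. This is not the CoCoA-Mix implementation: the function name `mix_predictions` and the use of one scalar weight per predictor are assumptions made for illustration, and the abstract does not specify how CoA-weights are computed. The sketch only shows that normalized weights turn several class-probability vectors into one valid distribution.

```python
from typing import List


def mix_predictions(probs: List[List[float]], weights: List[float]) -> List[float]:
    """Convex combination of per-predictor class probabilities.

    probs[k][c] is predictor k's probability for class c.
    weights[k] is a non-negative confidence score for predictor k
    (hypothetical; how such scores are derived is not covered here).
    """
    if not probs or len(probs) != len(weights):
        raise ValueError("need one weight per predictor")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    # Normalize so the mixture stays a probability distribution.
    norm = [w / total for w in weights]
    n_classes = len(probs[0])
    return [sum(w * p[c] for w, p in zip(norm, probs)) for c in range(n_classes)]


# Two predictors over two classes; the first is trusted three times as much.
mixed = mix_predictions([[0.9, 0.1], [0.2, 0.8]], [3.0, 1.0])
```

Because the weights are normalized, the mixed vector sums to one whenever each input vector does.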

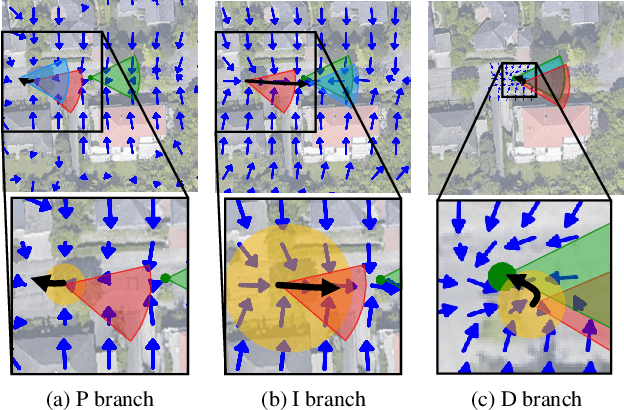

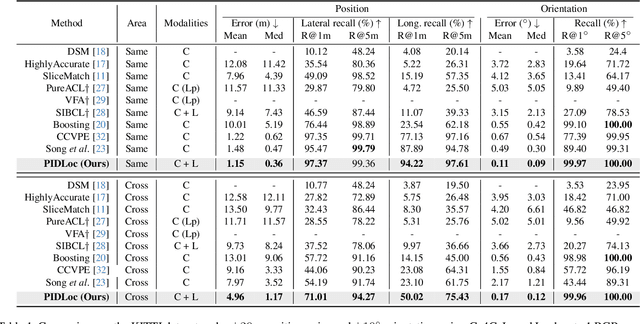

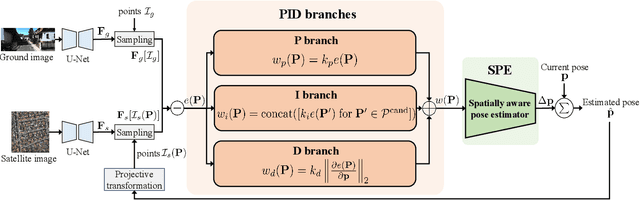

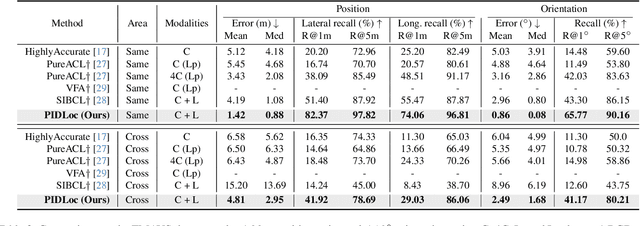

PIDLoc: Cross-View Pose Optimization Network Inspired by PID Controllers

Mar 04, 2025

Abstract:Accurate localization is essential for autonomous driving, but GNSS-based methods struggle in challenging environments such as urban canyons. Cross-view pose optimization offers an effective solution by directly estimating vehicle pose using satellite-view images. However, existing methods primarily rely on cross-view features at a given pose, neglecting fine-grained contexts for precision and global contexts for robustness against large initial pose errors. To overcome these limitations, we propose PIDLoc, a novel cross-view pose optimization approach inspired by the proportional-integral-derivative (PID) controller. Using RGB images and LiDAR, the PIDLoc comprises the PID branches to model cross-view feature relationships and the spatially aware pose estimator (SPE) to estimate the pose from these relationships. The PID branches leverage feature differences for local context (P), aggregated feature differences for global context (I), and gradients of feature differences for precise pose adjustment (D) to enhance localization accuracy under large initial pose errors. Integrated with the PID branches, the SPE captures spatial relationships within the PID-branch features for consistent localization. Experimental results demonstrate that the PIDLoc achieves state-of-the-art performance in cross-view pose estimation for the KITTI dataset, reducing position error by $37.8\%$ compared with the previous state-of-the-art.
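For readers unfamiliar with the controller that inspires the branch names, the three classic discrete PID terms can be sketched as follows. This is the textbook controller formulation, not the PIDLoc network: the function name `pid_terms`, the scalar error sequence, and the step size `dt` are assumptions for illustration. In the abstract's analogy, the error plays the role of a cross-view feature difference.

```python
from typing import List, Tuple


def pid_terms(errors: List[float], dt: float = 1.0) -> Tuple[float, float, float]:
    """Return the (P, I, D) terms for a sequence of error values.

    P: the current error (local information).
    I: the accumulated error over all steps (global information).
    D: the finite-difference rate of change of the error.
    """
    if len(errors) < 2:
        raise ValueError("need at least two error samples for the D term")
    if dt <= 0:
        raise ValueError("dt must be positive")
    p = errors[-1]
    i = sum(errors) * dt
    d = (errors[-1] - errors[-2]) / dt
    return p, i, d


# Error shrinking over three steps.
p, i, d = pid_terms([4.0, 2.0, 1.0])
```

A negative D term, as in this usage example, indicates that the error is decreasing between the last two steps.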

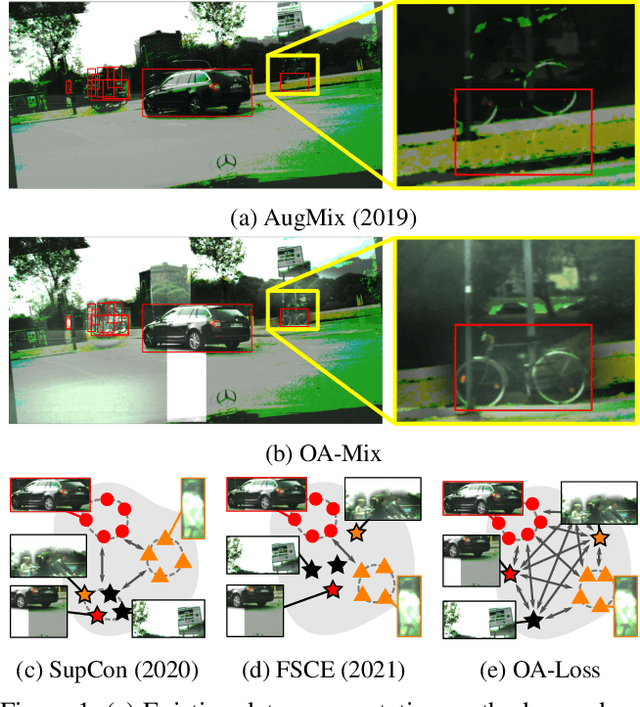

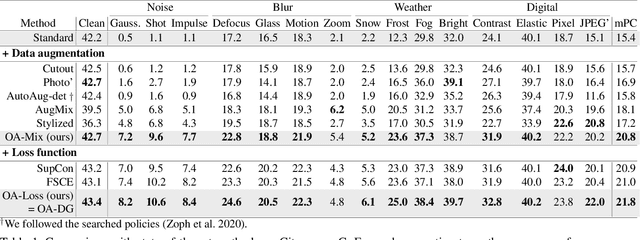

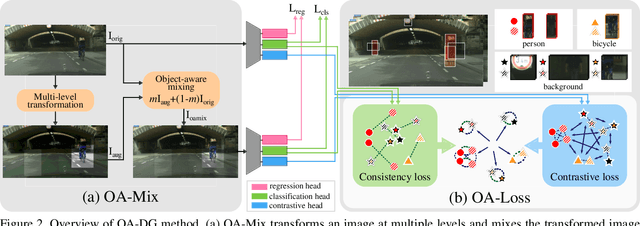

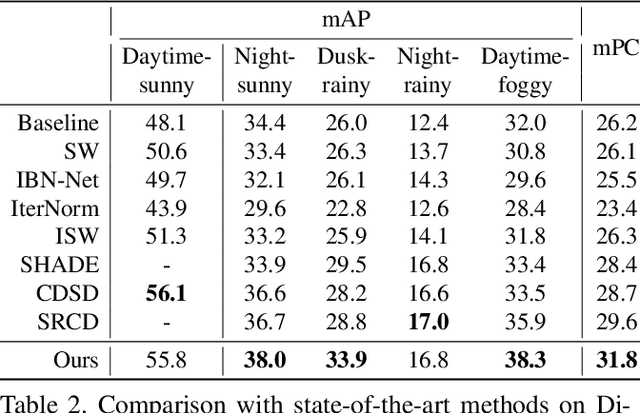

Object-Aware Domain Generalization for Object Detection

Dec 19, 2023

Abstract:Single-domain generalization (S-DG) aims to generalize a model to unseen environments with a single-source domain. However, most S-DG approaches have been conducted in the field of classification. When these approaches are applied to object detection, the semantic features of some objects can be damaged, which can lead to imprecise object localization and misclassification. To address these problems, we propose an object-aware domain generalization (OA-DG) method for single-domain generalization in object detection. Our method consists of data augmentation and training strategy, which are called OA-Mix and OA-Loss, respectively. OA-Mix generates multi-domain data with multi-level transformation and object-aware mixing strategy. OA-Loss enables models to learn domain-invariant representations for objects and backgrounds from the original and OA-Mixed images. Our proposed method outperforms state-of-the-art works on standard benchmarks. Our code is available at https://github.com/WoojuLee24/OA-DG.

X-MAS: Extremely Large-Scale Multi-Modal Sensor Dataset for Outdoor Surveillance in Real Environments

Dec 30, 2022

Abstract:In the robotics and computer vision communities, extensive studies have been conducted on surveillance tasks, including human detection, tracking, and motion recognition with a camera. Additionally, deep learning algorithms are widely utilized in the aforementioned tasks, as in other computer vision tasks. Existing public datasets are insufficient to develop learning-based methods that handle various surveillance tasks in outdoor and extreme situations such as harsh weather and low illuminance conditions. Therefore, we introduce a new large-scale outdoor surveillance dataset named eXtremely large-scale Multi-modAl Sensor dataset (X-MAS) containing more than 500,000 image pairs and the first-person view data annotated by well-trained annotators. Moreover, a single pair contains multi-modal data (e.g., an IR image, an RGB image, a thermal image, a depth image, and a LiDAR scan). To the best of our knowledge, this is the first large-scale first-person view outdoor multi-modal dataset focusing on surveillance tasks. We present an overview of the proposed dataset with statistics and present methods of exploiting our dataset with deep learning-based algorithms. The latest information on the dataset and our study is available at https://github.com/lge-robot-navi, and the dataset will be available for download through a server.
