Danny Tran

Whole-Body Conditioned Egocentric Video Prediction

Jun 26, 2025

Abstract:We train models to Predict Ego-centric Video from human Actions (PEVA), given the past video and an action represented by the relative 3D body pose. By conditioning on kinematic pose trajectories, structured by the joint hierarchy of the body, our model learns to simulate how physical human actions shape the environment from a first-person point of view. We train an auto-regressive conditional diffusion transformer on Nymeria, a large-scale dataset of real-world egocentric video and body pose capture. We further design a hierarchical evaluation protocol with increasingly challenging tasks, enabling a comprehensive analysis of the model's embodied prediction and control abilities. Our work represents an initial attempt to tackle the challenges of modeling complex real-world environments and embodied agent behaviors with video prediction from the perspective of a human.

Navigation World Models

Dec 04, 2024

Abstract:Navigation is a fundamental skill of agents with visual-motor capabilities. We introduce a Navigation World Model (NWM), a controllable video generation model that predicts future visual observations based on past observations and navigation actions. To capture complex environment dynamics, NWM employs a Conditional Diffusion Transformer (CDiT), trained on a diverse collection of egocentric videos of both human and robotic agents, and scaled up to 1 billion parameters. In familiar environments, NWM can plan navigation trajectories by simulating them and evaluating whether they achieve the desired goal. Unlike supervised navigation policies with fixed behavior, NWM can dynamically incorporate constraints during planning. Experiments demonstrate its effectiveness in planning trajectories from scratch or by ranking trajectories sampled from an external policy. Furthermore, NWM leverages its learned visual priors to imagine trajectories in unfamiliar environments from a single input image, making it a flexible and powerful tool for next-generation navigation systems.

Efficient Mitigation of Bus Bunching through Setter-Based Curriculum Learning

May 23, 2024Abstract:Curriculum learning has been growing in the domain of reinforcement learning as a method of improving training efficiency for various tasks. It involves modifying the difficulty (lessons) of the environment as the agent learns, in order to encourage more optimal agent behavior and higher reward states. However, most curriculum learning methods currently involve discrete transitions of the curriculum or predefined steps by the programmer or using automatic curriculum learning on only a small subset training such as only on an adversary. In this paper, we propose a novel approach to curriculum learning that uses a Setter Model to automatically generate an action space, adversary strength, initialization, and bunching strength. Transportation and traffic optimization is a well known area of study, especially for reinforcement learning based solutions. We specifically look at the bus bunching problem for the context of this study. The main idea of the problem is to minimize the delays caused by inefficient bus timings for passengers arriving and departing from a system of buses. While the heavy exploration in the area makes innovation and improvement with regards to performance marginal, it simultaneously provides an effective baseline for developing new generalized techniques. Our group is particularly interested in examining curriculum learning and its effect on training efficiency and overall performance. We decide to try a lesser known approach to curriculum learning, in which the curriculum is not fixed or discretely thresholded. Our method for automated curriculum learning involves a curriculum that is dynamically chosen and learned by an adversary network made to increase the difficulty of the agent's training, and defined by multiple forms of input. Our results are shown in the following sections of this paper.

EgoPet: Egomotion and Interaction Data from an Animal's Perspective

Apr 15, 2024

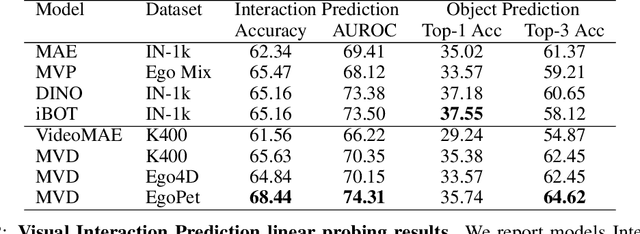

Abstract:Animals perceive the world to plan their actions and interact with other agents to accomplish complex tasks, demonstrating capabilities that are still unmatched by AI systems. To advance our understanding and reduce the gap between the capabilities of animals and AI systems, we introduce a dataset of pet egomotion imagery with diverse examples of simultaneous egomotion and multi-agent interaction. Current video datasets separately contain egomotion and interaction examples, but rarely both at the same time. In addition, EgoPet offers a radically distinct perspective from existing egocentric datasets of humans or vehicles. We define two in-domain benchmark tasks that capture animal behavior, and a third benchmark to assess the utility of EgoPet as a pretraining resource to robotic quadruped locomotion, showing that models trained from EgoPet outperform those trained from prior datasets.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge