Daniela Pfeiffer


Uncertainty-guided Generation of Dark-field Radiographs

Jan 22, 2026

Abstract: X-ray dark-field radiography provides diagnostic information complementary to conventional attenuation imaging by visualizing microstructural tissue changes through small-angle scattering. However, the limited availability of such data makes it challenging to develop robust deep learning models. In this work, we present the first framework for generating dark-field images directly from standard attenuation chest X-rays using an Uncertainty-Guided Progressive Generative Adversarial Network. The model incorporates both aleatoric and epistemic uncertainty to improve interpretability and reliability. Experiments demonstrate high structural fidelity of the generated images, with quantitative metrics improving consistently across the progressive stages. Furthermore, out-of-distribution evaluation confirms that the proposed model generalizes well. Our results indicate that uncertainty-guided generative modeling enables realistic dark-field image synthesis and provides a reliable foundation for future clinical applications.
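The abstract does not specify how the two uncertainty types are modeled. A common formulation, assumed here and not necessarily the authors' method, treats aleatoric uncertainty as a per-pixel variance predicted alongside the generated image and trained with a Gaussian negative log-likelihood, and estimates epistemic uncertainty as the variance over several stochastic forward passes (for example Monte Carlo dropout). The function names below are hypothetical.

```python
import numpy as np


def heteroscedastic_nll(target, mean, log_var):
    """Mean per-pixel Gaussian negative log-likelihood with a predicted variance.

    `log_var` is a per-pixel log-variance map output by the network; it serves
    as the aleatoric (data) uncertainty estimate. The constant 0.5*log(2*pi)
    is omitted because it does not affect optimization.
    """
    target = np.asarray(target, dtype=float)
    mean = np.asarray(mean, dtype=float)
    log_var = np.asarray(log_var, dtype=float)
    return float(np.mean(0.5 * np.exp(-log_var) * (target - mean) ** 2 + 0.5 * log_var))


def epistemic_from_samples(samples):
    """Mean prediction and per-pixel variance over T stochastic forward passes.

    `samples` has shape (T, H, W). The spread across passes (e.g. with dropout
    kept active at inference) is used as the epistemic (model) uncertainty.
    """
    samples = np.asarray(samples, dtype=float)
    return samples.mean(axis=0), samples.var(axis=0)


# Usage with synthetic stand-in data (no real model involved).
rng = np.random.default_rng(0)
target = rng.random((8, 8))
samples = target + 0.05 * rng.standard_normal((20, 8, 8))
mean, epistemic_var = epistemic_from_samples(samples)
loss = heteroscedastic_nll(target, mean, log_var=np.zeros_like(mean))
```

Predicting the log-variance rather than the variance keeps the predicted variance positive without an explicit constraint.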

Beam Geometry and Input Dimensionality: Impact on Sparse-Sampling Artifact Correction for Clinical CT with U-Nets

Aug 25, 2025

Abstract: This study investigates how beam geometry and input-data dimensionality affect sparse-sampling streak artifact correction with U-Nets for clinical CT scans, as a means of incorporating volumetric context into artifact reduction to improve model performance. A total of 22 subjects were retrospectively selected (01.2016-12.2018) from the Technical University of Munich's research hospital, TUM Klinikum rechts der Isar. Sparsely-sampled CT volumes were simulated with the Astra toolbox for parallel, fan, and cone beam geometries, with 2048-view scans serving as the full-view reference. 2D and 3D U-Nets were trained and validated on 14 subjects and tested on the remaining 8. For the dimensionality study, in addition to the 512×512 2D CT images, the CT scans were further pre-processed into so-called '2.5D' and 3D data: each CT volume was divided into 64×64×64 voxel blocks. The 3D data consist of the individual blocks. For the 2.5D data, an axial, a coronal, and a sagittal cut through the center of each block yielded three 64×64 2D patches, which were stacked into a single 64×64×3 image. Model performance was assessed with the mean squared error (MSE) and the structural similarity index measure (SSIM). For all geometries, the 2D U-Net trained on axial 2D slices achieved the best MSE and SSIM values, outperforming the 2.5D and 3D input data.
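The block splitting and 2.5D construction described above can be sketched in NumPy. This is an illustration under stated assumptions, not the study's pre-processing code: arrays are assumed to be ordered (Z, Y, X) with Z as the axial direction, incomplete edge blocks are simply dropped, and because a 64-voxel block has no single central index, index 32 is used as the "center". The function names are hypothetical.

```python
import numpy as np


def split_into_blocks(volume, size=64):
    """Split a (Z, Y, X) volume into non-overlapping size**3 blocks.

    Trailing voxels that do not fill a complete block are discarded.
    Blocks are returned in z, y, x order with x varying fastest.
    """
    nz, ny, nx = (d // size for d in volume.shape)
    v = volume[: nz * size, : ny * size, : nx * size]
    blocks = v.reshape(nz, size, ny, size, nx, size).transpose(0, 2, 4, 1, 3, 5)
    return blocks.reshape(-1, size, size, size)


def to_25d(block):
    """Stack the central axial, coronal and sagittal slices into one (size, size, 3) image."""
    c = block.shape[0] // 2  # index 32 for a 64-voxel block (assumed "center")
    axial = block[c, :, :]     # fixed z
    coronal = block[:, c, :]   # fixed y
    sagittal = block[:, :, c]  # fixed x
    return np.stack([axial, coronal, sagittal], axis=-1)


# Usage on a dummy volume: 2 x 2 x 3 = 12 blocks.
volume = np.zeros((128, 128, 192), dtype=np.float32)
blocks = split_into_blocks(volume)            # 3D inputs, shape (12, 64, 64, 64)
inputs_25d = np.stack([to_25d(b) for b in blocks])  # 2.5D inputs, shape (12, 64, 64, 3)
```

Stacking the three orthogonal cuts as channels lets a 2D network see some through-plane context at 2D cost, which is the trade-off the 2.5D variant is meant to test.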

Deformable Image Registration of Dark-Field Chest Radiographs for Local Lung Signal Change Assessment

Jan 18, 2025

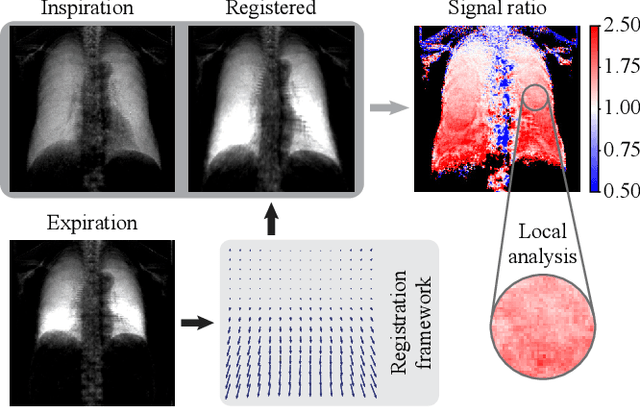

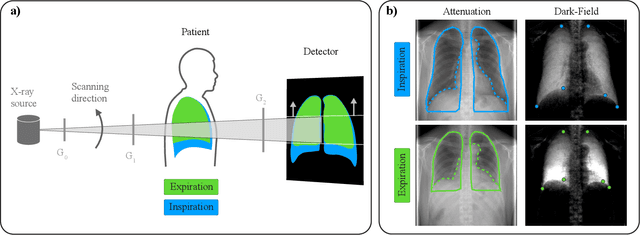

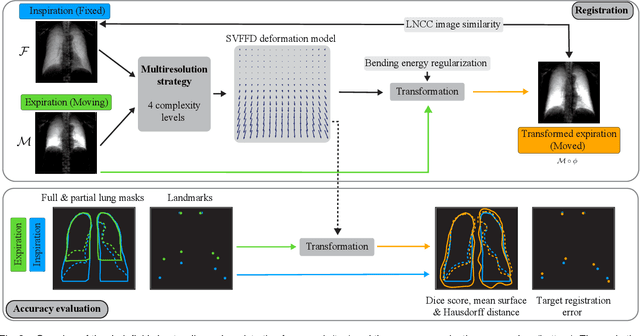

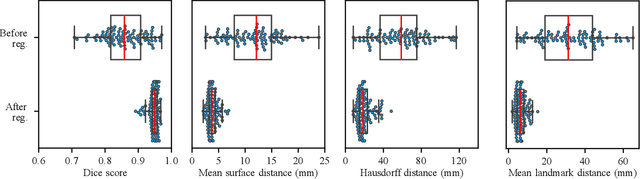

Abstract: Dark-field radiography of the human chest has been demonstrated to have promising potential for analyzing lung microstructure and diagnosing respiratory diseases. However, previous studies of dark-field chest radiographs evaluated the lung signal only in the inspiratory breathing state. Our work aims to add a new perspective to these previous assessments by locally comparing dark-field lung information between different respiratory states. To this end, we discuss suitable image registration methods for dark-field chest radiographs to enable consistent spatial alignment of the lung in distinct breathing states. Using full inspiration and expiration scans from a clinical chronic obstructive pulmonary disease study, we assess the performance of the proposed registration framework and outline applicable evaluation approaches. Our regional characterization of lung dark-field signal changes between the breathing states provides a proof of principle that dynamic radiography-based lung function assessment approaches may benefit from considering registered dark-field images in addition to standard plain chest radiographs.
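Once a registration method has produced a dense displacement field, the expiration image can be resampled onto the inspiration geometry and signals compared region by region. The sketch below assumes such a field is already available (how it is estimated is not shown and is not taken from the study), uses SciPy's `map_coordinates` for bilinear resampling, and defines the regional change as the relative difference of mean signal inside a mask. Function names and the change definition are hypothetical.

```python
import numpy as np
from scipy.ndimage import map_coordinates


def warp(moving, displacement):
    """Resample `moving` with a dense displacement field of shape (2, H, W).

    Output pixel (y, x) takes the value of `moving` at
    (y + dy[y, x], x + dx[y, x]), using bilinear interpolation and
    nearest-edge padding outside the image.
    """
    h, w = moving.shape
    grid_y, grid_x = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    coords = np.stack([grid_y + displacement[0], grid_x + displacement[1]])
    return map_coordinates(moving, coords, order=1, mode="nearest")


def regional_change(inspiration, expiration_warped, mask):
    """Relative change of the mean signal from inspiration to (registered) expiration."""
    insp = inspiration[mask].mean()
    exp = expiration_warped[mask].mean()
    return (exp - insp) / insp
```

Evaluating the change only after warping is what makes the comparison "local": without alignment, a mask drawn on the inspiration image would cover different anatomy in the expiration image.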

Influence of Medical Foreign Bodies on Dark-Field Chest Radiographs: First experiences

Aug 20, 2024

Abstract: Objectives: To evaluate the effects and artifacts introduced by medical foreign bodies in clinical dark-field chest radiographs and to assess their influence on the evaluation of pulmonary tissue, compared with conventional radiographs. Material & Methods: This retrospective study analyzed data from subjects enrolled in clinical trials conducted between 2018 and 2021 on chronic obstructive pulmonary disease (COPD) and COVID-19 patients. All patients underwent radiography with an in-house developed clinical prototype for grating-based dark-field chest radiography, which simultaneously delivers a conventional and a dark-field radiograph. Two radiologists independently reviewed the data from these clinical studies to identify patients with foreign bodies. Subsequently, the effects and artifacts attributable to distinct foreign bodies and their impact on the assessment of pulmonary tissue were analyzed. Results: Overall, 30 subjects with foreign bodies were included in this study (mean age, 64 years ± 11 [standard deviation]; 15 men). Foreign bodies composed of materials lacking microstructure exhibited a diminished dark-field signal or no discernible signal. Foreign bodies with a microstructure, in our investigation the kyphoplasty cement, produced a positive dark-field signal. Since most foreign bodies lack microstructural features, dark-field imaging revealed fewer foreign-body signals and artifacts than conventional radiographs. Conclusion: Dark-field radiography improves the assessment of pulmonary tissue overlaid by foreign bodies compared with conventional radiography. Reduced interfering signals result in fewer overlapping radiopaque artifacts within the investigated regions. This mitigates the impact on the image quality and interpretability of the radiographs, as well as the projection-related limitations of radiography compared to CT.

Improving Image Quality of Sparse-view Lung Cancer CT Images with a Convolutional Neural Network

Jul 28, 2023

Abstract: Purpose: To improve the image quality of sparse-view computed tomography (CT) images with a U-Net for lung cancer detection and to determine the best trade-off between number of views, image quality, and diagnostic confidence. Methods: CT images from 41 subjects (34 with lung cancer, seven healthy) were retrospectively selected (01.2016-12.2018) and forward projected onto 2048-view sinograms. Six corresponding sparse-view CT data subsets at varying levels of undersampling were reconstructed from the sinograms using filtered backprojection with 16, 32, 64, 128, 256, and 512 views, respectively. A dual-frame U-Net was trained and evaluated for each subsampling level on 8,658 images from 22 diseased subjects. For a single-blinded reader study, one representative image per scan was selected from 19 subjects (12 diseased, seven healthy). The selected slices, at all levels of subsampling and with and without post-processing by the U-Net model, were presented to three readers. Image quality and diagnostic confidence were rated on pre-defined scales. Subjective nodule segmentation was evaluated using sensitivity (Se) and the Dice similarity coefficient (DSC) with 95% confidence intervals (CI). Results: The 64-view images yielded Se = 0.89 and DSC = 0.81 [0.75, 0.86], while their counterparts post-processed with the U-Net had improved metrics (Se = 0.94, DSC = 0.85 [0.82, 0.87]). Fewer views led to image quality insufficient for diagnostic purposes. With more views, no substantial differences were observed between the sparse-view and post-processed images. Conclusion: The number of projection views can be reduced from 2048 to 64 while maintaining image quality and radiologist confidence at a satisfactory level.
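The sparse-view simulation (forward projection at 2048 views, keeping every k-th view, then filtered backprojection) and the Dice similarity coefficient can be sketched as follows. The projector used in the study is not named in this abstract, so scikit-image's parallel-beam `radon`/`iradon` is substituted here as an assumption; the helper names are hypothetical. Keeping every 32nd of 2048 evenly spaced views gives the 64-view setting.

```python
import numpy as np
from skimage.transform import radon, iradon


def sparse_view_fbp(image, n_full=2048, n_sparse=64):
    """Forward-project at n_full angles, keep every k-th view, reconstruct with FBP.

    Parallel-beam stand-in; `image` must be zero outside the inscribed circle.
    """
    if n_full % n_sparse:
        raise ValueError("n_full must be a multiple of n_sparse")
    theta_full = np.linspace(0.0, 180.0, n_full, endpoint=False)
    sinogram = radon(image, theta=theta_full, circle=True)
    step = n_full // n_sparse  # e.g. 2048 // 64 = 32
    return iradon(sinogram[:, ::step], theta=theta_full[::step],
                  filter_name="ramp", circle=True)


def dice(pred, ref):
    """Dice similarity coefficient 2|A and B| / (|A| + |B|) for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    ref = np.asarray(ref, dtype=bool)
    denom = pred.sum() + ref.sum()
    if denom == 0:
        return 1.0  # both masks empty: treated as perfect agreement
    return 2.0 * np.logical_and(pred, ref).sum() / denom
```

Sweeping `n_sparse` over 16, 32, ..., 512 reproduces the structure of the undersampling levels; the U-Net post-processing step is not part of this sketch.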

Improving Automated Hemorrhage Detection in Sparse-view Computed Tomography via Deep Convolutional Neural Network based Artifact Reduction

Mar 16, 2023

Abstract: Intracranial hemorrhage poses a serious health problem requiring rapid and often intensive medical treatment. For diagnosis, a cranial computed tomography (CCT) scan is usually performed. However, the associated radiation exposure raises concerns about increased health risks. The most important strategy to reduce this potential risk is to keep the radiation dose as low as possible while remaining consistent with the diagnostic task. Sparse-view CT can reduce dose effectively by reducing the total number of views acquired, albeit at the expense of image quality. In this work, we use a U-Net architecture to reduce artifacts in sparse-view CCTs, predicting fully sampled reconstructions from sparse-view ones. We evaluate hemorrhage detectability in the predicted CCTs with a hemorrhage classification convolutional neural network that was trained on fully sampled CCTs to detect and classify different hemorrhage sub-types. Our results suggest that the U-Net can substantially improve the accuracy of automated hemorrhage classification and detection in sparse-view CCTs. This demonstrates the feasibility of rapid automated hemorrhage detection on low-dose CT data to assist radiologists in routine clinical practice.

Optimizing Convolutional Neural Networks for Chronic Obstructive Pulmonary Disease Detection in Clinical Computed Tomography Imaging

Mar 13, 2023

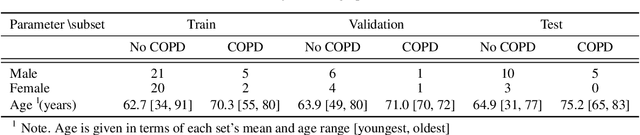

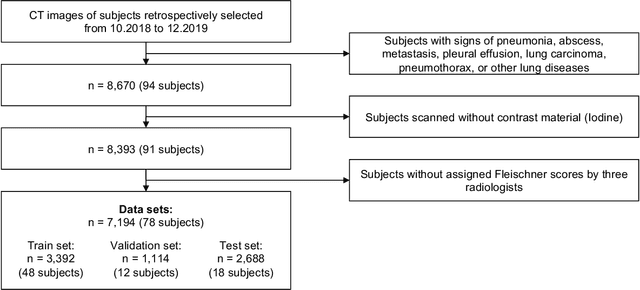

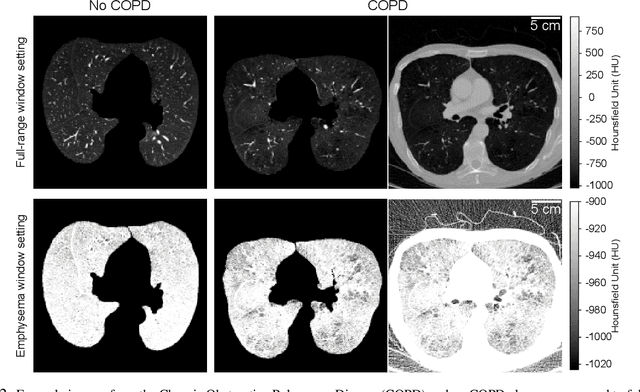

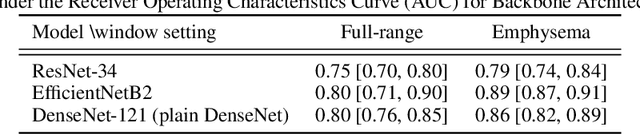

Abstract: Chronic Obstructive Pulmonary Disease (COPD) is a leading cause of death worldwide, yet early detection and treatment can prevent the progression of the disease. In contrast to the conventional method of detecting COPD with spirometry tests, X-ray Computed Tomography (CT) scans of the chest provide a measure of morphological changes in the lung. It has been shown that automated detection of COPD can be performed with deep learning models. However, the potential of incorporating optimal window setting selection, typically carried out by clinicians during examination of CT scans for COPD, is generally overlooked in deep learning approaches. We aim to optimize the binary classification of COPD with densely connected convolutional neural networks (DenseNets) through implementation of manual and automated Window-Setting Optimization (WSO) steps. Our dataset consisted of 78 CT scans from the Klinikum rechts der Isar research hospital. Repeated inference on the test set showed that without WSO, the plain DenseNet resulted in a mean slice-level AUC of 0.80 ± 0.05. With input images manually adjusted to the emphysema window setting, the plain DenseNet model predicted COPD with a mean AUC of 0.86 ± 0.04. By automating the WSO through addition of a customized layer to the DenseNet, an optimal window setting in the proximity of the emphysema window setting was learned and a mean AUC of 0.82 ± 0.04 was achieved. Detection of COPD with DenseNet models was optimized by WSO of CT data to the emphysema window setting range, demonstrating the importance of implementing optimal window setting selection in the deep learning pipeline.
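A window setting maps Hounsfield units (HU) in the range [level − width/2, level + width/2] to display intensities and clips everything outside. The sketch shows a hard window for manual WSO and a smooth sigmoid variant of the kind often used when level and width are meant to be learned, since it has non-zero gradients everywhere. This is an assumption for illustration: the abstract does not describe the customized layer, and the level/width values below are placeholders, not the emphysema window used in the study. Function names are hypothetical.

```python
import numpy as np


def apply_window(hu, level, width):
    """Hard window: map HU in [level - width/2, level + width/2] linearly to [0, 1], clip outside."""
    lower = level - width / 2.0
    return np.clip((np.asarray(hu, dtype=float) - lower) / width, 0.0, 1.0)


def soft_window(hu, level, width, steepness=8.0):
    """Smooth window: sigmoid centred at `level` with slope proportional to 1/width.

    sigmoid(z) is computed as 0.5 * (1 + tanh(z / 2)) to avoid overflow in exp.
    With the default steepness, the output is about 0.02 and 0.98 at the window
    edges. In a deep learning framework, `level` and `width` could be trainable.
    """
    z = steepness * (np.asarray(hu, dtype=float) - level) / width
    return 0.5 * (1.0 + np.tanh(z / 2.0))


# Illustrative values only (placeholder level/width in HU).
slice_hu = np.array([[-1000.0, -600.0], [0.0, 400.0]])
manual = apply_window(slice_hu, level=-600.0, width=1500.0)
learnable = soft_window(slice_hu, level=-600.0, width=1500.0)
```

The hard window has zero gradient with respect to level and width for clipped pixels, which is why a smooth approximation is a natural choice when the window is learned end to end.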

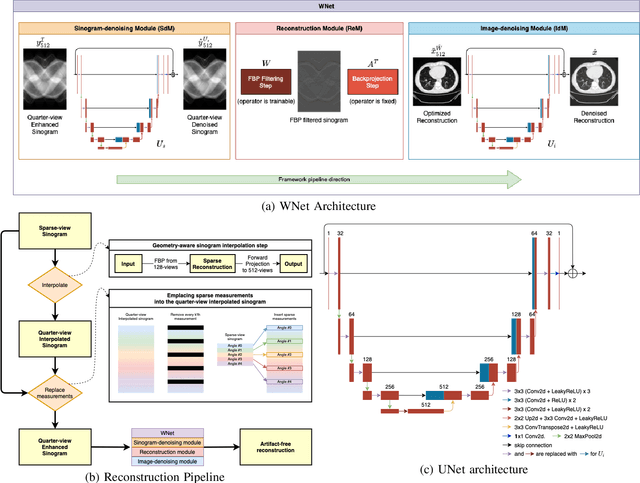

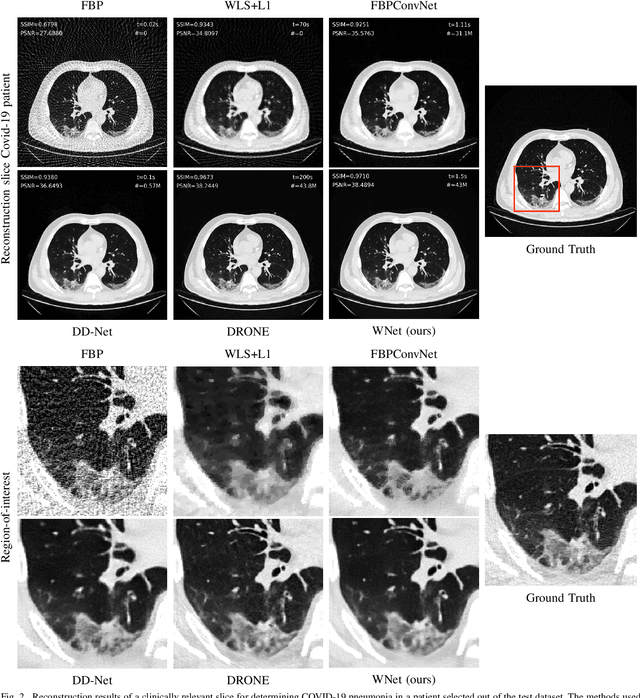

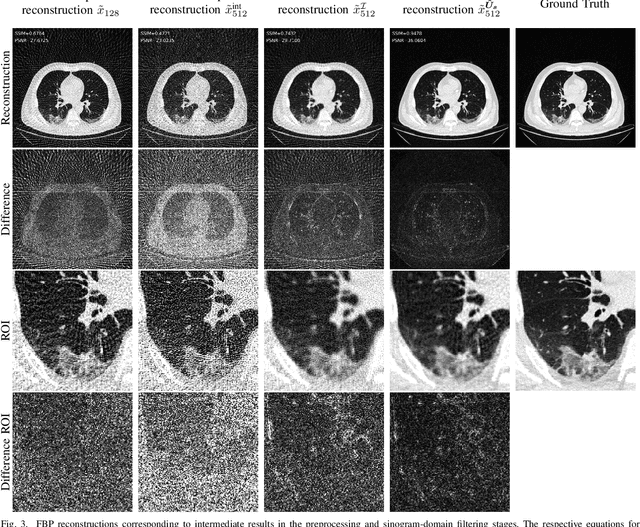

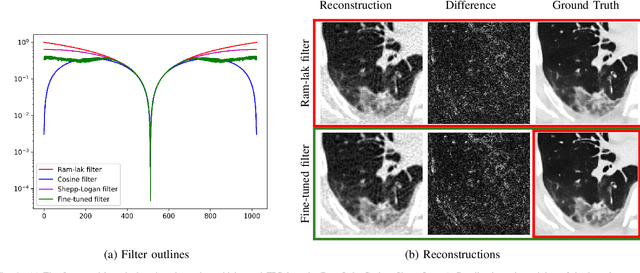

WNet: A data-driven dual-domain denoising model for sparse-view computed tomography with a trainable reconstruction layer

Jul 01, 2022

Abstract: Deep learning based solutions are being successfully implemented for a wide variety of applications. Most notably, clinical use cases have gained increasing interest and have been the main driver behind some of the cutting-edge data-driven algorithms proposed in recent years. For applications like sparse-view tomographic reconstruction, where the amount of measurement data is kept small to shorten acquisition times and lower radiation dose, the need to reduce streaking artifacts has prompted the development of data-driven denoising algorithms whose main goal is to obtain diagnostically viable images from only a subset of the full-scan data. We propose WNet, a data-driven dual-domain denoising model that contains a trainable reconstruction layer for sparse-view artifact denoising. Two encoder-decoder networks perform denoising in the sinogram and reconstruction domains simultaneously, while a third layer implementing the filtered backprojection algorithm sits between them and performs the reconstruction. We investigate the performance of the network on sparse-view chest CT scans and highlight the added benefit of a trainable reconstruction layer over the more conventional fixed ones. We train and test our network on two clinically relevant datasets and compare the obtained results with three different types of sparse-view CT denoising and reconstruction algorithms.
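One way to make a filtered backprojection layer trainable is to expose the frequency response of its filter as a parameter, initialized to the ramp filter. The sketch below shows only the forward pass in NumPy: it filters the sinogram with an arbitrary response and then backprojects with scikit-image's `iradon` using `filter_name=None` (no built-in filtering). This is an assumed parameterization for illustration, not necessarily how WNet's layer is built; in a deep learning framework, `response` would be the trainable tensor. Function names are hypothetical.

```python
import numpy as np
from skimage.transform import iradon, radon


def padded_size(n_det):
    """Zero-pad the detector axis to a power of two >= 2 * n_det to limit wrap-around."""
    return max(64, int(2 ** np.ceil(np.log2(2 * n_det))))


def ramp_response(n_det):
    """Initial frequency response: a ramp, roughly 2 * |f| with f in cycles per sample."""
    return 2.0 * np.abs(np.fft.fftfreq(padded_size(n_det)))


def fbp_with_response(sinogram, theta, response):
    """Filter each projection with `response` in the Fourier domain, then backproject."""
    n_det = sinogram.shape[0]
    padded = np.pad(sinogram, ((0, response.shape[0] - n_det), (0, 0)))
    spectrum = np.fft.fft(padded, axis=0) * response[:, None]
    filtered = np.real(np.fft.ifft(spectrum, axis=0))[:n_det]
    # filter_name=None: iradon only backprojects, so the filtering above is the only one applied.
    return iradon(filtered, theta=theta, filter_name=None, circle=True)


# Usage: a disk phantom reconstructed with the ramp-initialized response.
yy, xx = np.mgrid[:64, :64]
phantom = (((yy - 32) ** 2 + (xx - 32) ** 2) < 15 ** 2).astype(float)
theta = np.linspace(0.0, 180.0, 180, endpoint=False)
sino = radon(phantom, theta=theta, circle=True)
recon = fbp_with_response(sino, theta, ramp_response(sino.shape[0]))
```

Initializing at the ramp means the layer starts as standard FBP, and training can only move it toward a filter that suits the sparse-view data better.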

Per-Pixel Lung Thickness and Lung Capacity Estimation on Chest X-Rays using Convolutional Neural Networks

Oct 27, 2021

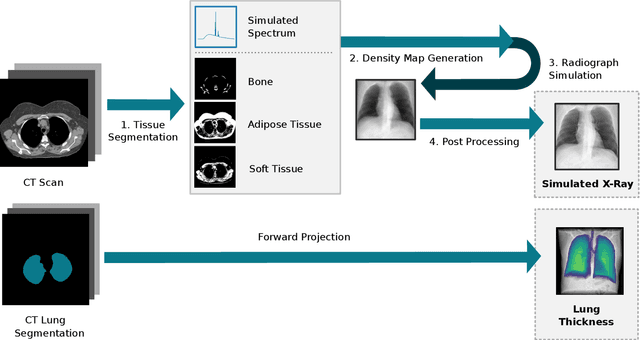

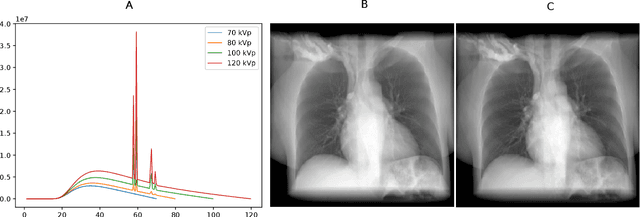

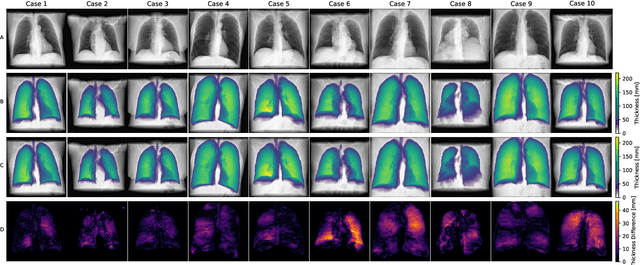

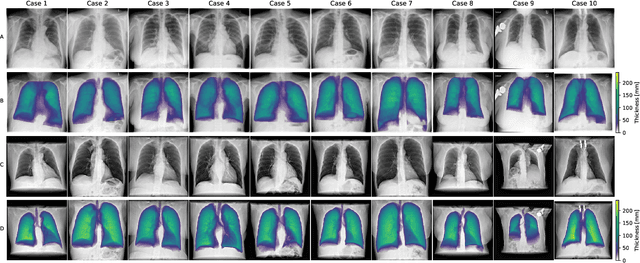

Abstract: Estimating lung depth on x-ray images could both provide accurate opportunistic lung volume estimation during clinical routine and improve image contrast in modern structural chest imaging techniques such as x-ray dark-field imaging. We present a method based on a convolutional neural network that allows per-pixel lung thickness estimation and subsequent total lung capacity estimation. The network was trained and validated using 5250 simulated radiographs generated from 525 real CT scans. Furthermore, the model trained on simulated data can be applied to real radiographs. For 35 patients, quantitative and qualitative evaluation was performed on standard clinical radiographs, with each patient's ground-truth total lung volume defined from the corresponding CT scan. The mean absolute error between the lung volume estimated on the 35 real radiographs and the ground-truth volume was 0.73 liters. Additionally, we predicted the lung thicknesses on a synthetic dataset of 131 radiographs, where the mean absolute error was 0.27 liters. The results show that it is possible to transfer knowledge obtained from a simulation model to real x-ray images.
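A per-pixel thickness map turns into a volume by summing thickness times pixel area, V = Δx · Δy · Σ t_ij, and the reported error is a mean absolute error over patients. The sketch assumes thickness in millimetres and a pixel spacing already expressed in the patient plane (no magnification correction); these are assumptions, as the abstract does not give the exact integration. Function names are hypothetical.

```python
import numpy as np


def lung_volume_liters(thickness_mm, pixel_spacing_mm):
    """Integrate a per-pixel lung-thickness map (mm) into a volume in liters.

    V = dx * dy * sum(t); 1 liter = 1e6 mm^3.
    `pixel_spacing_mm` is (row spacing, column spacing) in the patient plane.
    """
    dy, dx = pixel_spacing_mm
    return float(np.sum(thickness_mm) * dx * dy / 1e6)


def mean_absolute_error(pred, ref):
    """Mean absolute error between predicted and reference values (e.g. volumes in liters)."""
    return float(np.mean(np.abs(np.asarray(pred, dtype=float) - np.asarray(ref, dtype=float))))


# Usage: a 100 x 100 mm region with 100 mm uniform thickness holds 1 liter.
volume = lung_volume_liters(np.full((100, 100), 100.0), (1.0, 1.0))
```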
