Daniel W. Apley

Bootstrapped Control Limits for Score-Based Concept Drift Control Charts

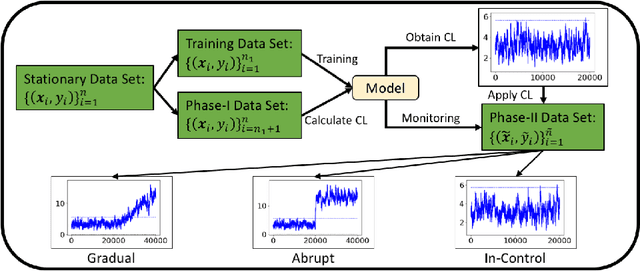

Jul 22, 2025

Abstract: Monitoring for changes in a predictive relationship represented by a fitted supervised learning model (aka concept drift detection) is a widespread problem, e.g., for retrospective analysis to determine whether the predictive relationship was stable over the training data, for prospective analysis to determine when it is time to update the predictive model, for quality control of processes whose behavior can be characterized by a predictive relationship, etc. A general and powerful Fisher score-based concept drift approach has recently been proposed, in which concept drift detection reduces to detecting changes in the mean of the model's score vector using a multivariate exponentially weighted moving average (MEWMA). To implement the approach, the initial data must be split into two subsets. The first subset serves as the training sample to which the model is fit, and the second subset serves as an out-of-sample test set from which the MEWMA control limit (CL) is determined. In this paper, we develop a novel bootstrap procedure for computing the CL. Our bootstrap CL provides much more accurate control of false-alarm rate, especially when the sample size and/or false-alarm rate is small. It also allows the entire initial sample to be used for training, resulting in a more accurate fitted supervised learning model. We show that a standard nested bootstrap (inner loop accounting for future data variability and outer loop accounting for training sample variability) substantially underestimates variability and develop a 632-like correction that appropriately accounts for this. We demonstrate the advantages with numerical examples.
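To make the idea of a bootstrapped control limit concrete, the sketch below shows a deliberately simplified version: a plain, single-level bootstrap for a scalar EWMA chart. It is not the nested, 632-corrected procedure the abstract describes, and it ignores training-sample variability entirely. The function names (`ewma_max`, `bootstrap_control_limit`), the horizon, and the simulated scores are all assumptions made for illustration.

```python
import random

def ewma_max(values, lam):
    """Largest absolute EWMA value reached over a sequence, starting from zero."""
    z, peak = 0.0, 0.0
    for v in values:
        z = lam * v + (1.0 - lam) * z
        peak = max(peak, abs(z))
    return peak

def bootstrap_control_limit(scores, lam=0.1, horizon=200, alpha=0.05,
                            n_boot=500, seed=0):
    """Plain (non-nested) bootstrap estimate of an EWMA control limit.

    Returns the (1 - alpha) quantile of the maximum |EWMA| over `horizon`
    resampled in-control scores, so that roughly a fraction alpha of
    in-control runs of that length would raise a false alarm.
    """
    rng = random.Random(seed)
    mean = sum(scores) / len(scores)
    centered = [s - mean for s in scores]
    # Each bootstrap replicate simulates one future in-control run.
    peaks = sorted(
        ewma_max(rng.choices(centered, k=horizon), lam)
        for _ in range(n_boot)
    )
    index = min(n_boot - 1, int((1.0 - alpha) * n_boot))
    return peaks[index]

# Hypothetical in-control scores standing in for a fitted model's score values.
data_rng = random.Random(1)
in_control_scores = [data_rng.gauss(0.0, 1.0) for _ in range(300)]
limit = bootstrap_control_limit(in_control_scores)
```

A smaller `alpha` selects a higher quantile of the simulated maxima and therefore a wider limit, which is where accurate tail estimation becomes harder with small samples.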

A Framework for Supervised and Unsupervised Segmentation and Classification of Materials Microstructure Images

Feb 10, 2025

Abstract: Microstructure of materials is often characterized through image analysis to understand processing-structure-properties linkages. We propose a largely automated framework that integrates unsupervised and supervised learning methods to classify micrographs according to microstructure phase/class and, for multiphase microstructures, segment them into different homogeneous regions. With the advance of manufacturing and imaging techniques, the ultra-high resolution of imaging that reveals the complexity of microstructures and the rapidly increasing quantity of images (i.e., micrographs) enable and necessitate a more powerful and automated framework to extract materials characteristics and knowledge. The framework we propose can be used to gradually build a database of microstructure classes relevant to a particular process or group of materials, which can help in analyzing and discovering/identifying new materials. The framework has three steps: (1) segmentation of multiphase micrographs through a recently developed score-based method so that different microstructure homogeneous regions can be identified in an unsupervised manner; (2) identification and classification of homogeneous regions of micrographs through an uncertainty-aware supervised classification network trained using the segmented micrographs from Step 1 with their identified labels verified via the built-in uncertainty quantification and minimal human inspection; (3) supervised segmentation (more powerful than the segmentation in Step 1) of multiphase microstructures through a segmentation network trained with micrographs and the results from Steps 1-2 using a form of data augmentation. This framework can iteratively characterize/segment new homogeneous or multiphase materials while expanding the database to enhance performance. The framework is demonstrated on various sets of materials and texture images.

Emerging Microelectronic Materials by Design: Navigating Combinatorial Design Space with Scarce and Dispersed Data

Dec 23, 2024

Abstract: The increasing demands of sustainable energy, electronics, and biomedical applications call for next-generation functional materials with unprecedented properties. Of particular interest are emerging materials that display exceptional physical properties, making them promising candidates in energy-efficient microelectronic devices. As the conventional Edisonian approach becomes significantly outpaced by growing societal needs, emerging computational modeling and machine learning (ML) methods are employed for the rational design of materials. However, the complex physical mechanisms, cost of first-principles calculations, and the dispersity and scarcity of data pose challenges to both physics-based and data-driven materials modeling. Moreover, the combinatorial composition-structure design space is high-dimensional and often disjoint, making design optimization nontrivial. In this Account, we review a team effort toward establishing a framework that integrates data-driven and physics-based methods to address these challenges and accelerate materials design. We begin by presenting our integrated materials design framework and its three components in a general context. We then provide an example of applying this materials design framework to metal-insulator transition (MIT) materials, a specific type of emerging materials with practical importance in next-generation memory technologies. We identify multiple new materials which may display this property and propose pathways for their synthesis. Finally, we identify some outstanding challenges in data-driven materials design, such as materials data quality issues and property-performance mismatch. We seek to raise awareness of these overlooked issues hindering materials design, thus stimulating efforts toward developing methods to mitigate the gaps.

Interpretable Architecture Neural Networks for Function Visualization

Mar 03, 2023

Abstract: In many scientific research fields, understanding and visualizing a black-box function in terms of the effects of all the input variables is of great importance. Existing visualization tools do not allow one to visualize the effects of all the input variables simultaneously. Although one can select one or two of the input variables to visualize via a 2D or 3D plot while holding other variables fixed, this presents an oversimplified and incomplete picture of the model. To overcome this shortcoming, we present a new visualization approach using an interpretable architecture neural network (IANN) to visualize the effects of all the input variables directly and simultaneously. We propose two interpretable structures, each of which can be conveniently represented by a specific IANN, and we discuss a number of possible extensions. We also provide a Python package to implement our proposed method. The supplemental materials are available online.

Fully Bayesian inference for latent variable Gaussian process models

Nov 04, 2022

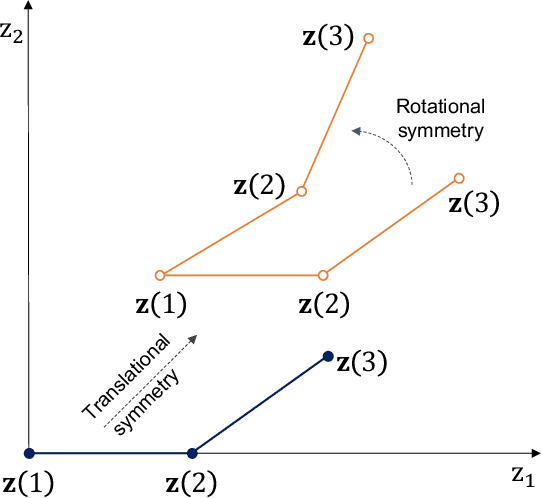

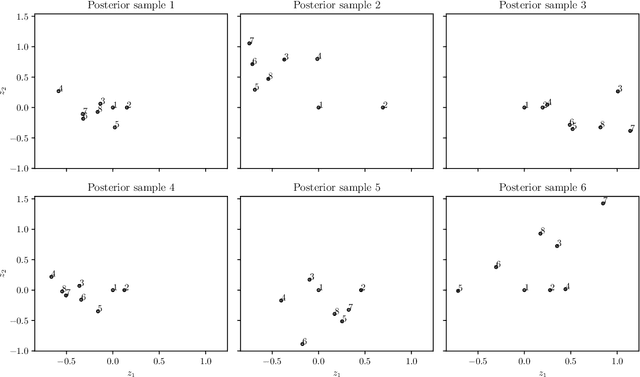

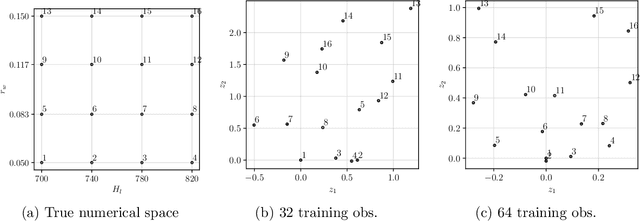

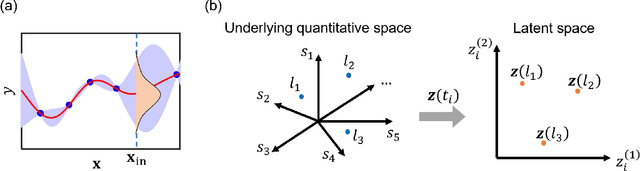

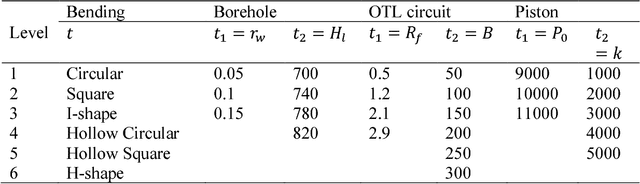

Abstract: Real engineering and scientific applications often involve one or more qualitative inputs. Standard Gaussian processes (GPs), however, cannot directly accommodate qualitative inputs. The recently introduced latent variable Gaussian process (LVGP) overcomes this issue by first mapping each qualitative factor to underlying latent variables (LVs), and then using any standard GP covariance function over these LVs. The LVs are estimated similarly to the other GP hyperparameters through maximum likelihood estimation, and then plugged into the prediction expressions. However, this plug-in approach will not account for uncertainty in estimation of the LVs, which can be significant especially with limited training data. In this work, we develop a fully Bayesian approach for the LVGP model and for visualizing the effects of the qualitative inputs via their LVs. We also develop approximations for scaling up LVGPs and fully Bayesian inference for the LVGP hyperparameters. We conduct numerical studies comparing plug-in inference against fully Bayesian inference over a few engineering models and material design applications. In contrast to previous studies on standard GP modeling that have largely concluded that a fully Bayesian treatment offers limited improvements, our results show that for LVGP modeling it offers significant improvements in prediction accuracy and uncertainty quantification over the plug-in approach.

Uncertainty-aware Mixed-variable Machine Learning for Materials Design

Jul 11, 2022

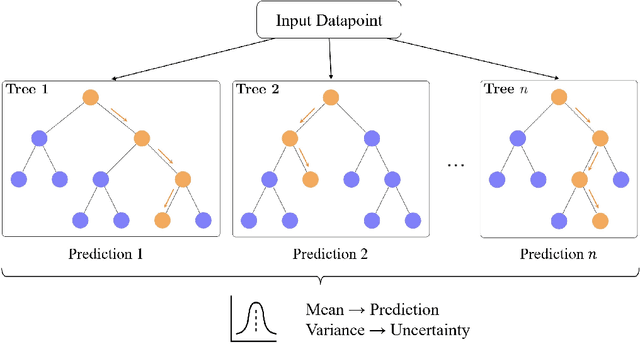

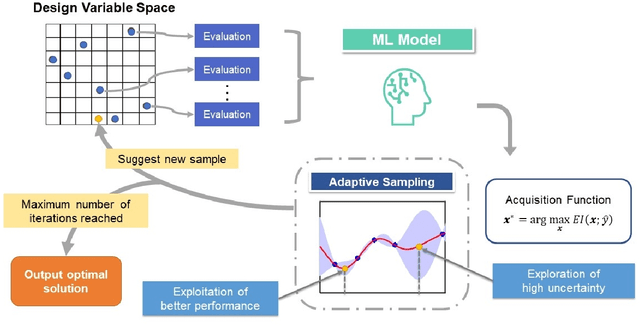

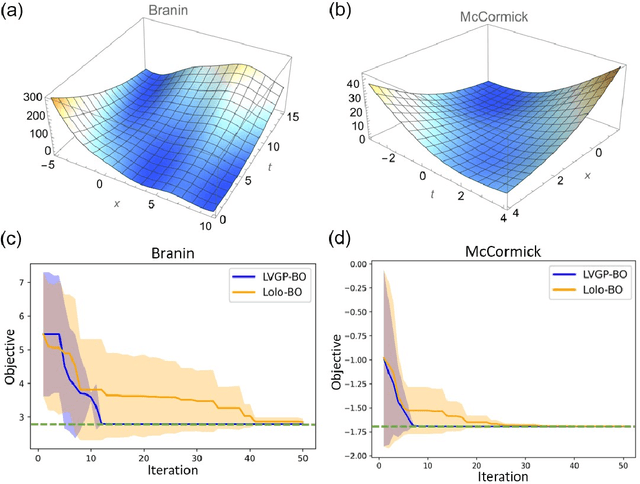

Abstract: Data-driven design shows the promise of accelerating materials discovery but is challenging due to the prohibitive cost of searching the vast design space of chemistry, structure, and synthesis methods. Bayesian Optimization (BO) employs uncertainty-aware machine learning models to select promising designs to evaluate, hence reducing the cost. However, BO with mixed numerical and categorical variables, which is of particular interest in materials design, has not been well studied. In this work, we survey frequentist and Bayesian approaches to uncertainty quantification of machine learning with mixed variables. We then conduct a systematic comparative study of their performances in BO using a popular representative model from each group, the random forest-based Lolo model (frequentist) and the latent variable Gaussian process model (Bayesian). We examine the efficacy of the two models in the optimization of mathematical functions, as well as properties of structural and functional materials, where we observe performance differences as related to problem dimensionality and complexity. By investigating the machine learning models' predictive and uncertainty estimation capabilities, we provide interpretations of the observed performance differences. Our results provide practical guidance on choosing between frequentist and Bayesian uncertainty-aware machine learning models for mixed-variable BO in materials design.
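As a rough illustration of how an uncertainty-aware model can steer BO toward promising designs, the sketch below scores candidates with the expected improvement acquisition function for a minimization problem. The abstract does not say which acquisition function the study uses, so expected improvement is an assumption here, as are the candidate names and their predicted means and standard deviations.

```python
import math

def expected_improvement(mean, std, best):
    """Expected improvement for minimization under a Gaussian prediction."""
    if std <= 0.0:
        # No predictive uncertainty: improvement is deterministic.
        return max(best - mean, 0.0)
    u = (best - mean) / std
    pdf = math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(u / math.sqrt(2.0)))
    return (best - mean) * cdf + std * pdf

# Hypothetical surrogate predictions: name -> (predicted mean, predicted std).
candidates = {
    "design_a": (1.10, 0.05),
    "design_b": (1.20, 0.60),
    "design_c": (0.95, 0.01),
}
best_so_far = 1.0  # lowest objective value observed so far (assumed)

scores = {
    name: expected_improvement(m, s, best_so_far)
    for name, (m, s) in candidates.items()
}
next_design = max(scores, key=scores.get)
```

In this toy setting the candidate with the worst predicted mean but the largest predicted uncertainty is selected, which shows why the quality of a model's uncertainty estimates, and not only its predictive accuracy, affects BO performance.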

Concept Drift Monitoring and Diagnostics of Supervised Learning Models via Score Vectors

Dec 12, 2020

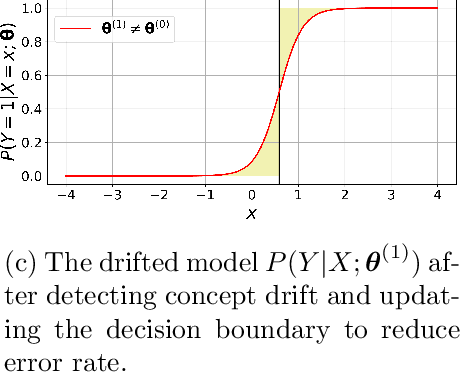

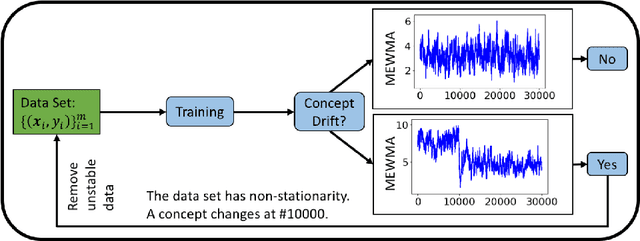

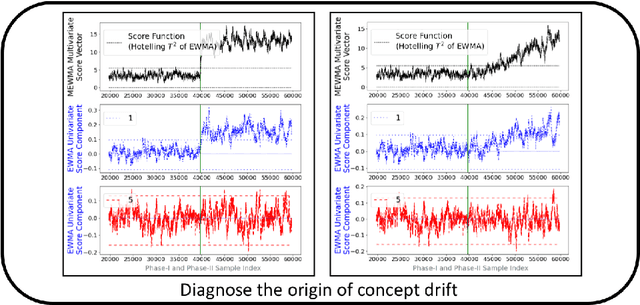

Abstract: Supervised learning models are one of the most fundamental classes of models. Viewing supervised learning from a probabilistic perspective, the set of training data to which the model is fitted is usually assumed to follow a stationary distribution. However, this stationarity assumption is often violated in a phenomenon called concept drift, which refers to changes over time in the predictive relationship between covariates $\mathbf{X}$ and a response variable $Y$ and can render trained models suboptimal or obsolete. We develop a comprehensive and computationally efficient framework for detecting, monitoring, and diagnosing concept drift. Specifically, we monitor the Fisher score vector, defined as the gradient of the log-likelihood for the fitted model, using a form of multivariate exponentially weighted moving average, which monitors for general changes in the mean of a random vector. In spite of the substantial performance advantages that we demonstrate over popular error-based methods, a score-based approach has not been previously considered for concept drift monitoring. Advantages of the proposed score-based framework include applicability to any parametric model, more powerful detection of changes as shown in theory and experiments, and inherent diagnostic capabilities for helping to identify the nature of the changes.
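The score-monitoring idea can be sketched for the simplest parametric model, a straight-line regression with unit-variance Gaussian errors, where the score of each observation is the residual times (1, x). The code below is a minimal illustration under those assumptions, not the authors' implementation: the function names, the smoothing constant, the asymptotic MEWMA covariance, and the simulated drift (slope changing from 2.0 to 3.5) are all chosen for the example.

```python
import random

def score(x, y, b0, b1):
    """Gradient of the Gaussian log-likelihood w.r.t. (b0, b1), unit variance."""
    r = y - (b0 + b1 * x)
    return (r, r * x)

def fit_line(xs, ys):
    """Ordinary least squares fit of y = b0 + b1 * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b1 = sxy / sxx
    return my - b1 * mx, b1

def inverse_cov(scores):
    """Inverse of the 2x2 sample covariance matrix of the score vectors."""
    n = len(scores)
    m0 = sum(s[0] for s in scores) / n
    m1 = sum(s[1] for s in scores) / n
    c00 = sum((s[0] - m0) ** 2 for s in scores) / (n - 1)
    c11 = sum((s[1] - m1) ** 2 for s in scores) / (n - 1)
    c01 = sum((s[0] - m0) * (s[1] - m1) for s in scores) / (n - 1)
    det = c00 * c11 - c01 * c01
    return ((c11 / det, -c01 / det), (-c01 / det, c00 / det))

def mewma_t2(scores, inv_cov, lam=0.05):
    """MEWMA T^2 statistics using the asymptotic covariance lam/(2-lam)*Sigma."""
    z0 = z1 = 0.0
    scale = (2.0 - lam) / lam
    out = []
    for s0, s1 in scores:
        z0 = lam * s0 + (1.0 - lam) * z0
        z1 = lam * s1 + (1.0 - lam) * z1
        quad = (z0 * (inv_cov[0][0] * z0 + inv_cov[0][1] * z1)
                + z1 * (inv_cov[1][0] * z0 + inv_cov[1][1] * z1))
        out.append(scale * quad)
    return out

def simulate(rng, n, slope):
    """Draw n points from y = 1 + slope * x + noise (hypothetical process)."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [1.0 + slope * x + rng.gauss(0.0, 0.5) for x in xs]
    return xs, ys

rng = random.Random(0)
train_x, train_y = simulate(rng, 500, slope=2.0)
b0, b1 = fit_line(train_x, train_y)
inv_cov = inverse_cov([score(x, y, b0, b1) for x, y in zip(train_x, train_y)])

# Monitoring stream: 200 stable observations, then 200 with a changed slope.
stable_x, stable_y = simulate(rng, 200, slope=2.0)
drift_x, drift_y = simulate(rng, 200, slope=3.5)
stream = list(zip(stable_x + drift_x, stable_y + drift_y))
t2 = mewma_t2([score(x, y, b0, b1) for x, y in stream], inv_cov)
```

While the relationship is stable the scores have mean near zero and the statistic stays small; after the slope changes, the mean of the second score component shifts and the statistic grows. An alarm would be raised when it exceeds a control limit, which this sketch does not compute.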

A Latent Variable Approach to Gaussian Process Modeling with Qualitative and Quantitative Factors

Jun 19, 2018

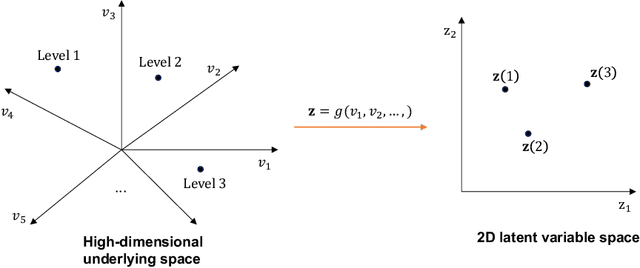

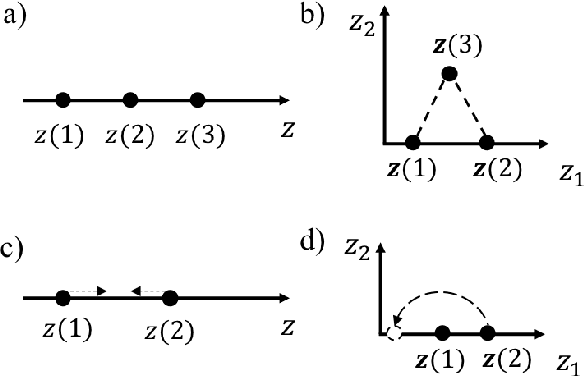

Abstract: Computer simulations often involve both qualitative and numerical inputs. Existing Gaussian process (GP) methods for handling this mainly assume a different response surface for each combination of levels of the qualitative factors and relate them via a multiresponse cross-covariance matrix. We introduce a substantially different approach that maps each qualitative factor to an underlying numerical latent variable (LV), with the mapped value for each level estimated similarly to the covariance lengthscale parameters. This provides a parsimonious GP parameterization that treats qualitative factors the same as numerical variables and views them as affecting the response via similar physical mechanisms. This has strong physical justification, as the effects of a qualitative factor in any physics-based simulation model must always be due to some underlying numerical variables. Even when the underlying variables are many, sufficient dimension reduction arguments imply that their effects can be represented by a low-dimensional LV. This conjecture is supported by the superior predictive performance observed across a variety of examples. Moreover, the mapped LVs provide substantial insight into the nature and effects of the qualitative factors.
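The latent-variable mapping can be illustrated with a small correlation function: each level of a qualitative factor is assigned a point in a low-dimensional latent space, and a standard squared-exponential correlation is then applied to the numerical inputs and the latent points together. The sketch below assumes a two-dimensional latent space and hand-picked latent coordinates; in the approach described above these coordinates would be estimated from data, and the level names and `lvgp_kernel` function are hypothetical.

```python
import math

def lvgp_kernel(x, t, x2, t2, latent, lengthscale=1.0):
    """Squared-exponential correlation over numerical inputs and latent-mapped levels.

    x, x2  : sequences of numerical inputs
    t, t2  : levels of a single qualitative factor
    latent : dict mapping each level to its latent coordinates
    """
    d2 = sum(((a - b) / lengthscale) ** 2 for a, b in zip(x, x2))
    za, zb = latent[t], latent[t2]
    # Distance between levels is measured in the latent space.
    d2 += sum((a - b) ** 2 for a, b in zip(za, zb))
    return math.exp(-d2)

# Hypothetical latent coordinates for three levels of a "material" factor.
latent = {
    "steel": (0.0, 0.0),
    "aluminum": (0.3, 0.1),
    "rubber": (2.0, 1.5),
}

x = (0.5,)
similar = lvgp_kernel(x, "steel", x, "aluminum", latent)
dissimilar = lvgp_kernel(x, "steel", x, "rubber", latent)
```

Levels placed close together in the latent space produce highly correlated responses, while distant levels are nearly uncorrelated, so a plot of the estimated latent coordinates indicates which levels behave alike.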
