Daniel Steinberg

Deviations in Representations Induced by Adversarial Attacks

Nov 07, 2022

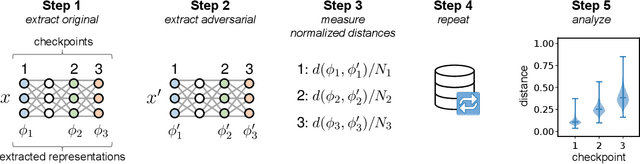

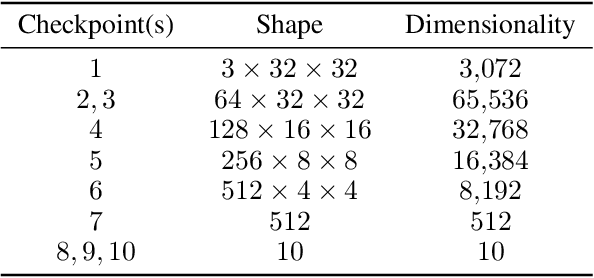

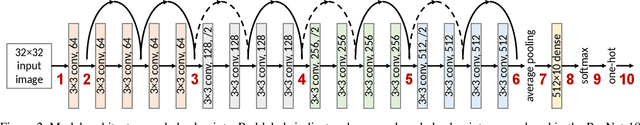

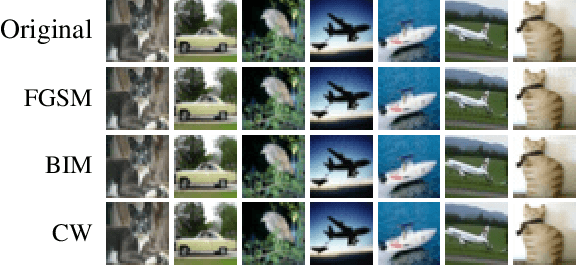

Abstract: Deep learning has been a popular topic and has achieved success in many areas. It has drawn the attention of researchers and machine learning practitioners alike, with developed models deployed to a variety of settings. Along with its achievements, research has shown that deep learning models are vulnerable to adversarial attacks. This finding brought about a new direction in research, whereby algorithms were developed to attack and defend vulnerable networks. Our interest is in understanding how these attacks effect change on the intermediate representations of deep learning models. We present a method for measuring and analyzing the deviations in representations induced by adversarial attacks, progressively across a selected set of layers. Experiments are conducted using an assortment of attack algorithms, on the CIFAR-10 dataset, with plots created to visualize the impact of adversarial attacks across different layers in a network.
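The abstract does not state which deviation measure the paper uses, so the following is only a minimal sketch of the general idea: run clean and perturbed inputs through the same network and compare their activations layer by layer. The toy ReLU network, the mean relative L2 distance, the random sign perturbation standing in for a real attack, and the names `forward_with_activations` and `layerwise_deviation` are all assumptions made for illustration.

```python
import numpy as np

def forward_with_activations(x, weights):
    """Run a toy ReLU MLP and return the activations of every layer."""
    activations = []
    h = x
    for w in weights:
        h = np.maximum(h @ w, 0.0)
        activations.append(h)
    return activations

def layerwise_deviation(clean_acts, adv_acts, eps=1e-12):
    """Mean relative L2 distance between clean and perturbed activations, per layer.

    This distance is an assumed stand-in, not the measure from the paper.
    """
    scores = []
    for c, a in zip(clean_acts, adv_acts):
        diff = np.linalg.norm(a - c, axis=1)
        base = np.linalg.norm(c, axis=1) + eps
        scores.append(float(np.mean(diff / base)))
    return scores

rng = np.random.default_rng(0)
# Hypothetical three-layer network with random weights.
weights = [
    rng.normal(size=(32, 64)) / np.sqrt(32),
    rng.normal(size=(64, 64)) / np.sqrt(64),
    rng.normal(size=(64, 10)) / np.sqrt(64),
]
x_clean = rng.normal(size=(8, 32))
# Stand-in for an attack: a random sign perturbation with a small L-infinity budget.
x_adv = x_clean + 0.05 * np.sign(rng.normal(size=x_clean.shape))

deviations = layerwise_deviation(
    forward_with_activations(x_clean, weights),
    forward_with_activations(x_adv, weights),
)
for i, d in enumerate(deviations, start=1):
    print(f"layer {i}: mean relative deviation = {d:.4f}")
```

Plotting `deviations` against layer index gives a per-layer profile of the kind the abstract describes; with a trained model and a real attack algorithm, the same comparison would apply to the selected layers.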

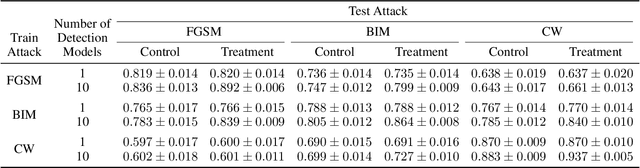

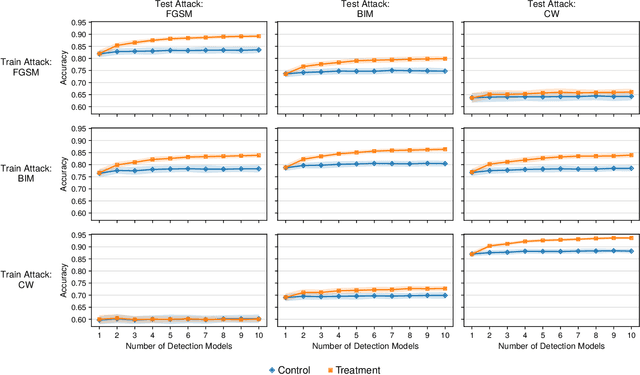

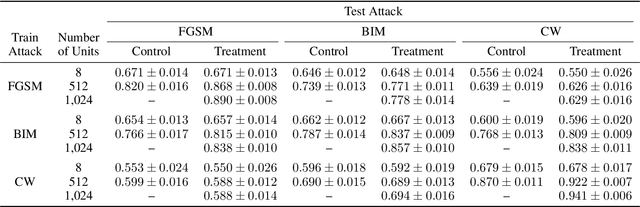

Measuring the Contribution of Multiple Model Representations in Detecting Adversarial Instances

Nov 13, 2021

Abstract: Deep learning models have been used for a wide variety of tasks. They are prevalent in computer vision, natural language processing, speech recognition, and other areas. While these models have worked well under many scenarios, it has been shown that they are vulnerable to adversarial attacks. This has led to a proliferation of research into ways that such attacks could be identified and/or defended against. Our goal is to explore the contribution that can be attributed to using multiple underlying models for the purpose of adversarial instance detection. Our paper describes two approaches that incorporate representations from multiple models for detecting adversarial examples. We devise controlled experiments for measuring the detection impact of incrementally utilizing additional models. For many of the scenarios we consider, the results show that performance increases with the number of underlying models used for extracting representations.

Visualizing Representations of Adversarially Perturbed Inputs

May 28, 2021

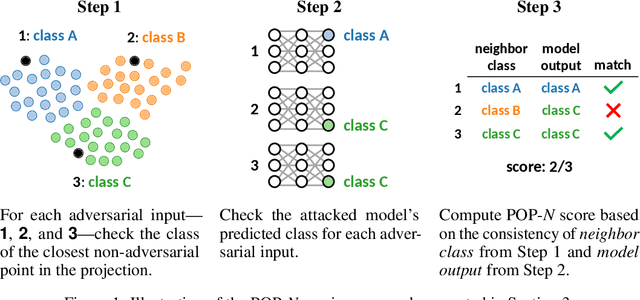

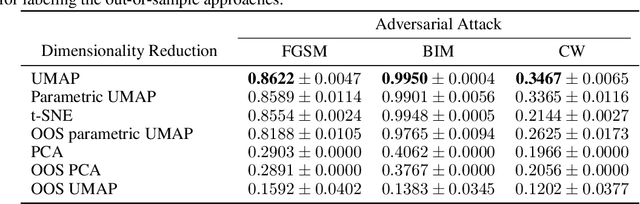

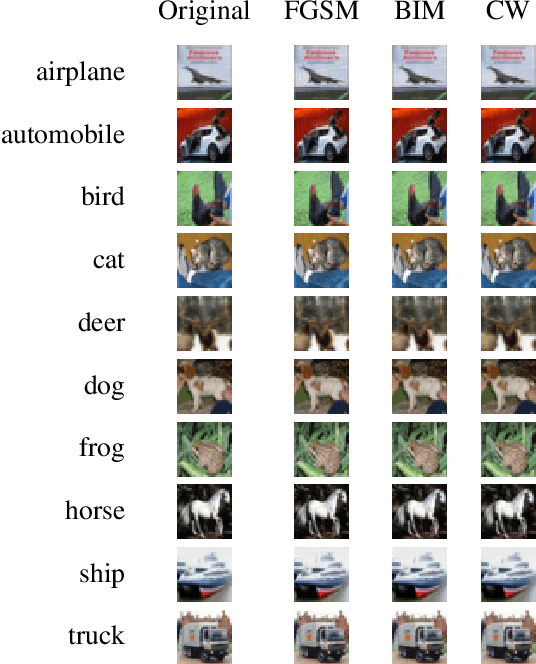

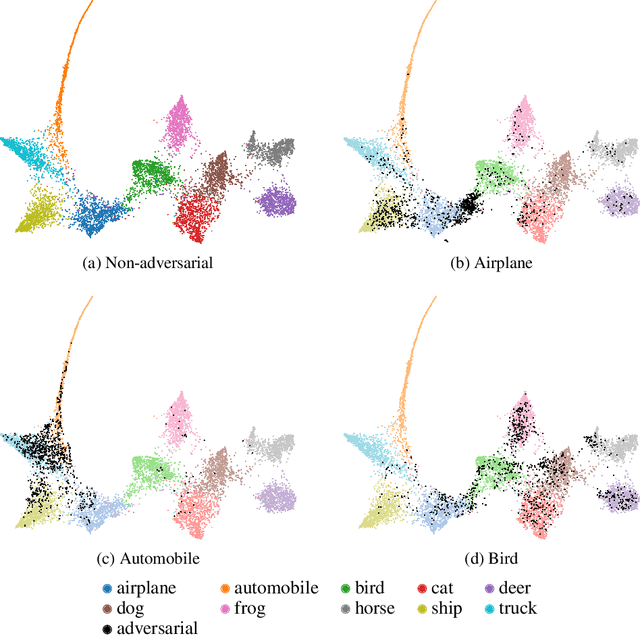

Abstract: It has been shown that deep learning models are vulnerable to adversarial attacks. We seek to further understand the consequence of such attacks on the intermediate activations of neural networks. We present an evaluation metric, POP-N, which scores the effectiveness of projecting data to N dimensions under the context of visualizing representations of adversarially perturbed inputs. We conduct experiments on CIFAR-10 to compare the POP-2 score of several dimensionality reduction algorithms across various adversarial attacks. Finally, we utilize the 2D data corresponding to high POP-2 scores to generate example visualizations.

Fast Fair Regression via Efficient Approximations of Mutual Information

Feb 14, 2020

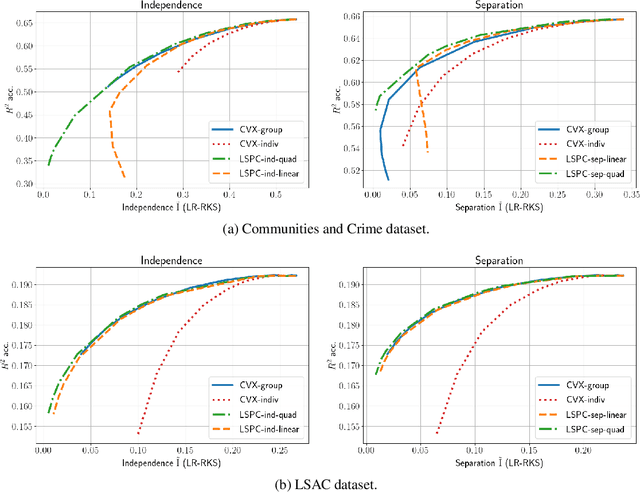

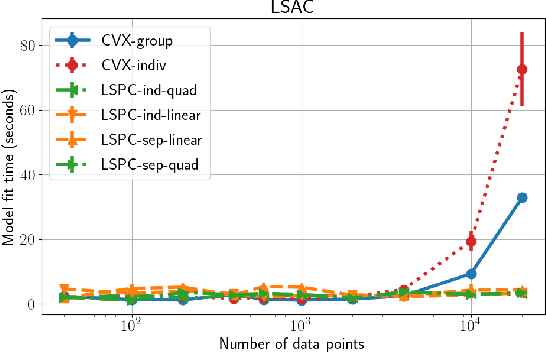

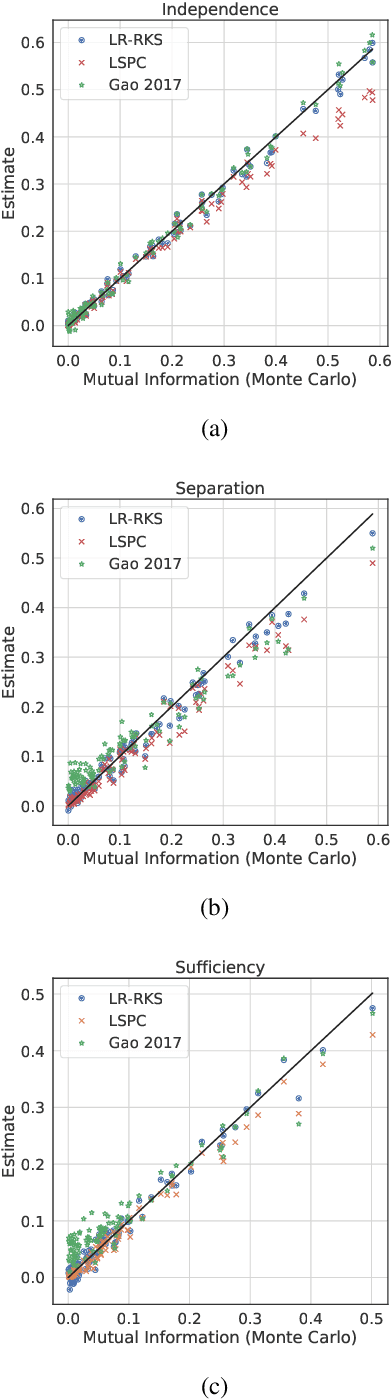

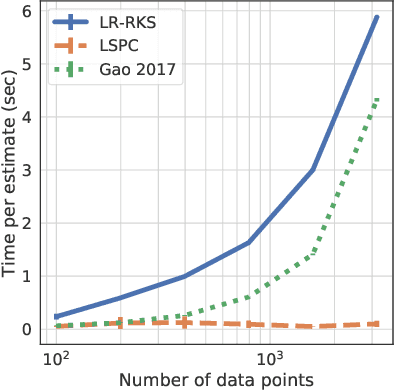

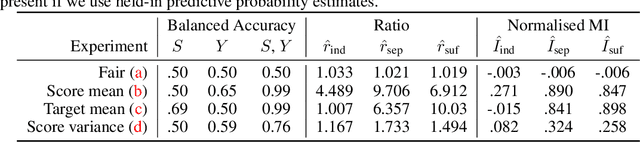

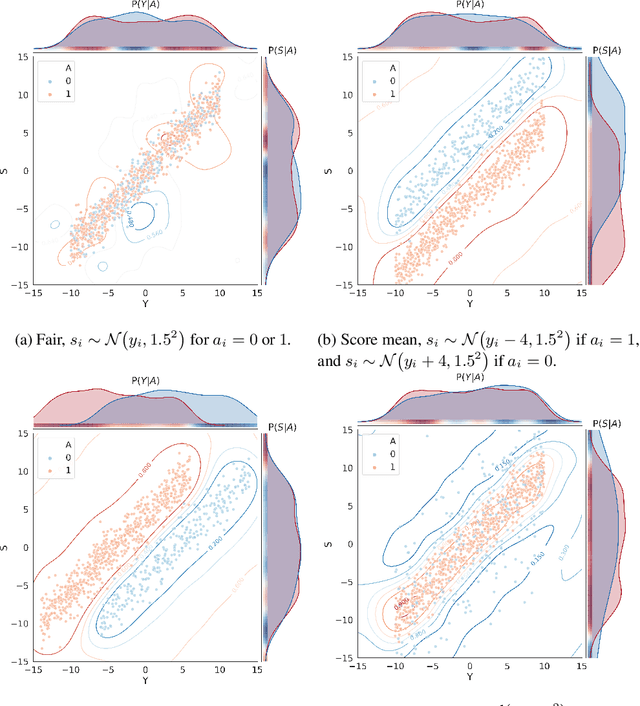

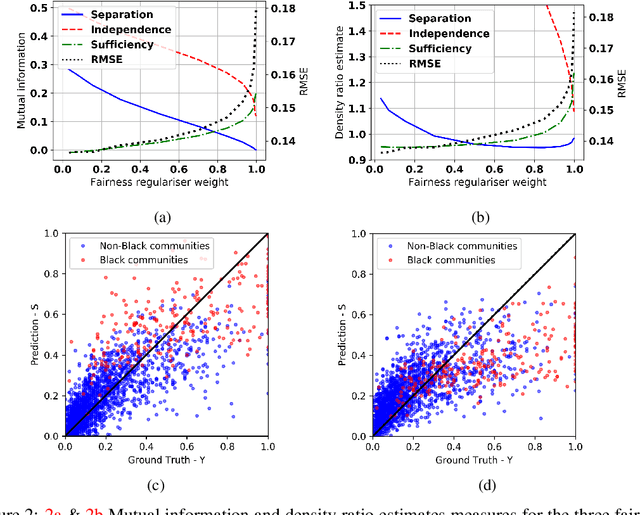

Abstract: Most work in algorithmic fairness to date has focused on discrete outcomes, such as deciding whether to grant someone a loan or not. In these classification settings, group fairness criteria such as independence, separation and sufficiency can be measured directly by comparing rates of outcomes between subpopulations. Many important problems, however, require the prediction of a real-valued outcome, such as a risk score or insurance premium. In such regression settings, measuring group fairness criteria is computationally challenging, as it requires estimating information-theoretic divergences between conditional probability density functions. This paper introduces fast approximations of the independence, separation and sufficiency group fairness criteria for regression models from their (conditional) mutual information definitions, and uses such approximations as regularisers to enforce fairness within a regularised risk minimisation framework. Experiments on real-world datasets indicate that, in spite of its superior computational efficiency, our algorithm still displays state-of-the-art accuracy/fairness tradeoffs.
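To make the idea of a mutual-information regulariser concrete, here is a minimal sketch for the independence criterion only. It is not the paper's fast approximation, which the abstract does not specify. It assumes a binary protected attribute `a`, uses the identity I(S; A) = E[log p(a|s) - log p(a)], and estimates p(a|s) with a small logistic regression fitted by gradient descent. The names `fit_logistic`, `independence_mi`, `regularised_risk`, and the weight `lam` are hypothetical.

```python
import numpy as np

def fit_logistic(s, a, steps=500, lr=0.5):
    """Fit p(a=1 | s) with 1-D logistic regression; return fitted probabilities."""
    z = (s - s.mean()) / (s.std() + 1e-12)  # standardise the score
    w, b = 0.0, 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(w * z + b)))
        w -= lr * np.mean((p - a) * z)
        b -= lr * np.mean(p - a)
    return 1.0 / (1.0 + np.exp(-(w * z + b)))

def independence_mi(s, a):
    """Plug-in estimate of I(S; A) = E[log p(a|s) - log p(a)] for binary a."""
    p_cond = np.clip(fit_logistic(s, a), 1e-6, 1 - 1e-6)
    p_marg = np.clip(a.mean(), 1e-6, 1 - 1e-6)
    log_ratio = np.where(
        a == 1,
        np.log(p_cond) - np.log(p_marg),
        np.log(1 - p_cond) - np.log(1 - p_marg),
    )
    return float(np.mean(log_ratio))

def regularised_risk(y, s, a, lam=1.0):
    """Squared-error risk plus lam times the estimated I(S; A) (assumed form)."""
    return float(np.mean((y - s) ** 2)) + lam * independence_mi(s, a)

rng = np.random.default_rng(0)
n = 2000
a = rng.integers(0, 2, size=n).astype(float)  # synthetic protected attribute
y = 2.0 * a + rng.normal(size=n)              # synthetic real-valued target
s_dependent = 2.0 * a + 0.5 * rng.normal(size=n)  # score that leaks a
s_independent = rng.normal(size=n)                # score unrelated to a

mi_dep = independence_mi(s_dependent, a)
mi_ind = independence_mi(s_independent, a)
print(f"I(S; A) estimate, dependent score:   {mi_dep:.3f}")
print(f"I(S; A) estimate, independent score: {mi_ind:.3f}")
```

A score that leaks the protected attribute gets a clearly larger penalty than one that does not, which is the behaviour a fairness regulariser needs. Inside a training loop the penalty would have to be differentiable in the model parameters, which this sketch does not attempt.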

Fairness Measures for Regression via Probabilistic Classification

Jan 16, 2020

Abstract: Algorithmic fairness involves expressing notions such as equity, or reasonable treatment, as quantifiable measures that a machine learning algorithm can optimise. Most work in the literature to date has focused on classification problems where the prediction is categorical, such as accepting or rejecting a loan application. This is in part because classification fairness measures are easily computed by comparing the rates of outcomes, leading to behaviours such as ensuring that the same fraction of eligible men are selected as eligible women. But such measures are computationally difficult to generalise to the continuous regression setting for problems such as pricing, or allocating payments. The difficulty arises from estimating conditional densities (such as the probability density that a system will over-charge by a certain amount). For the regression setting we introduce tractable approximations of the independence, separation and sufficiency criteria by observing that they factorise as ratios of different conditional probabilities of the protected attributes. We introduce and train machine learning classifiers, distinct from the predictor, as a mechanism to estimate these probabilities from the data. This naturally leads to model-agnostic, tractable approximations of the criteria, which we explore experimentally.
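The abstract does not spell out the factorisation, but one way to see it follows from Bayes' rule. The notation below is an assumption: $S$ is the regression score, $Y$ the target, $A$ the protected attribute, and the three criteria are taken in their usual form as $S \perp A$ (independence), $S \perp A \mid Y$ (separation) and $Y \perp A \mid S$ (sufficiency).

```latex
\begin{align*}
\text{Independence:} \quad
  \frac{p(s \mid a)}{p(s)} &= \frac{p(a \mid s)}{p(a)}, \\[4pt]
\text{Separation:} \quad
  \frac{p(s \mid a, y)}{p(s \mid y)} &= \frac{p(a \mid s, y)}{p(a \mid y)}, \\[4pt]
\text{Sufficiency:} \quad
  \frac{p(y \mid a, s)}{p(y \mid s)} &= \frac{p(a \mid y, s)}{p(a \mid s)}.
\end{align*}
```

Each criterion holds exactly when its ratio equals 1 everywhere. The left-hand sides involve densities of the continuous quantities $S$ or $Y$, which are hard to estimate, whereas the right-hand sides involve only probabilities of $A$, which a probabilistic classifier can estimate from data.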
