Daniel Mitropolsky

Simulated Language Acquisition in a Biologically Realistic Model of the Brain

Jul 15, 2025

Abstract:Despite tremendous progress in neuroscience, we do not have a compelling narrative for the precise way whereby the spiking of neurons in our brain results in high-level cognitive phenomena such as planning and language. We introduce a simple mathematical formulation of six basic and broadly accepted principles of neuroscience: excitatory neurons, brain areas, random synapses, Hebbian plasticity, local inhibition, and inter-area inhibition. We implement a simulated neuromorphic system based on this formalism, which is capable of basic language acquisition: Starting from a tabula rasa, the system learns, in any language, the semantics of words, their syntactic role (verb versus noun), and the word order of the language, including the ability to generate novel sentences, through the exposure to a modest number of grounded sentences in the same language. We discuss several possible extensions and implications of this result.
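As a rough illustration of how some of the principles listed in this abstract can fit together, here is a minimal Python sketch of one round of firing from a set of active neurons into a downstream area. It is not the paper's formalism: the function names, the parameters `p`, `k`, and `beta`, and the multiplicative weight update are all assumptions made for illustration. Local inhibition is modelled as "only the `k` most strongly driven neurons fire", and inter-area inhibition and recurrent synapses are left out.

```python
import random

def k_cap(inputs, k):
    """Local inhibition (assumed form): only the k neurons with the largest input fire."""
    order = sorted(range(len(inputs)), key=lambda i: inputs[i], reverse=True)
    return set(order[:k])

def make_synapses(n_pre, n_post, p, rng):
    """Random synapses: each directed connection exists independently with probability p."""
    return {(i, j): 1.0
            for i in range(n_pre) for j in range(n_post)
            if rng.random() < p}

def project_step(weights, active_pre, n_post, k, beta):
    """One round: active presynaptic neurons fire, k winners are chosen, weights adapt."""
    inputs = [0.0] * n_post
    for (i, j), w in weights.items():
        if i in active_pre:
            inputs[j] += w
    winners = k_cap(inputs, k)
    # Hebbian plasticity (assumed multiplicative rule): strengthen every synapse
    # from a neuron that fired to a neuron that fired right after it.
    for (i, j) in weights:
        if i in active_pre and j in winners:
            weights[(i, j)] *= 1.0 + beta
    return winners

rng = random.Random(0)
synapses = make_synapses(n_pre=30, n_post=50, p=0.2, rng=rng)
stimulus = set(range(10))
first = project_step(synapses, stimulus, n_post=50, k=5, beta=0.1)
second = project_step(synapses, stimulus, n_post=50, k=5, beta=0.1)
```

In this stripped-down feedforward setting the winner set cannot change after the first round, because only the winners' inputs grow. A fuller model with synapses inside the downstream area would behave less trivially.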

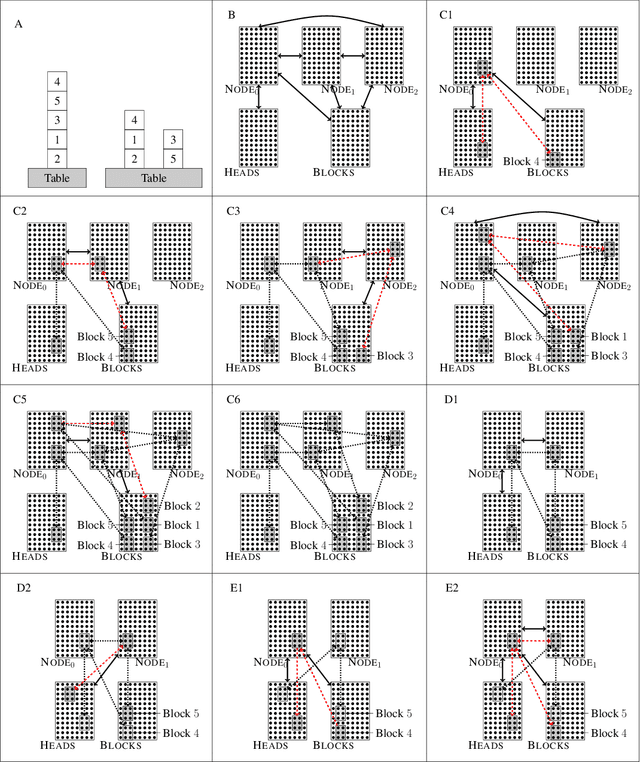

Coin-Flipping In The Brain: Statistical Learning with Neuronal Assemblies

Jun 11, 2024

Abstract:How intelligence arises from the brain is a central problem in science. A crucial aspect of intelligence is dealing with uncertainty -- developing good predictions about one's environment, and converting these predictions into decisions. The brain itself seems to be noisy at many levels, from chemical processes which drive development and neuronal activity to trial variability of responses to stimuli. One hypothesis is that the noise inherent to the brain's mechanisms is used to sample from a model of the world and generate predictions. To test this hypothesis, we study the emergence of statistical learning in NEMO, a biologically plausible computational model of the brain based on stylized neurons and synapses, plasticity, and inhibition, and giving rise to assemblies -- a group of neurons whose coordinated firing is tantamount to recalling a location, concept, memory, or other primitive item of cognition. We show in theory and simulation that connections between assemblies record statistics, and ambient noise can be harnessed to make probabilistic choices between assemblies. This allows NEMO to create internal models such as Markov chains entirely from the presentation of sequences of stimuli. Our results provide a foundation for biologically plausible probabilistic computation, and add theoretical support to the hypothesis that noise is a useful component of the brain's mechanism for cognition.
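The idea that connection strengths can record statistics, and that noise can then turn them into probabilistic choices, can be sketched in a few lines of Python. This is a toy abstraction, not NEMO: each assembly is reduced to a single symbol, the weight update `beta`, the Gaussian noise, and the names `learn_transitions` and `noisy_choice` are all assumptions made for illustration, and the resulting choice frequencies are not claimed to match the true transition probabilities.

```python
import random
from collections import defaultdict

def learn_transitions(sequence, beta=0.2):
    """Strengthen the connection a -> b each time b follows a in the sequence."""
    weights = defaultdict(lambda: defaultdict(lambda: 1.0))
    for a, b in zip(sequence, sequence[1:]):
        weights[a][b] *= 1.0 + beta  # assumed Hebbian-style multiplicative update
    return weights

def noisy_choice(weights, current, states, noise_sd, rng):
    """Pick the state whose connection weight plus random noise is largest."""
    return max(states,
               key=lambda s: weights[current][s] + rng.gauss(0.0, noise_sd))

rng = random.Random(1)
weights = learn_transitions(list("ABABABAC"))  # A->B seen three times, A->C once
samples = [noisy_choice(weights, "A", "ABC", noise_sd=0.5, rng=rng)
           for _ in range(2000)]
```

With `noise_sd=0.0` the strongest connection always wins. With noise, more frequently observed transitions are chosen more often, while rarer ones still occur.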

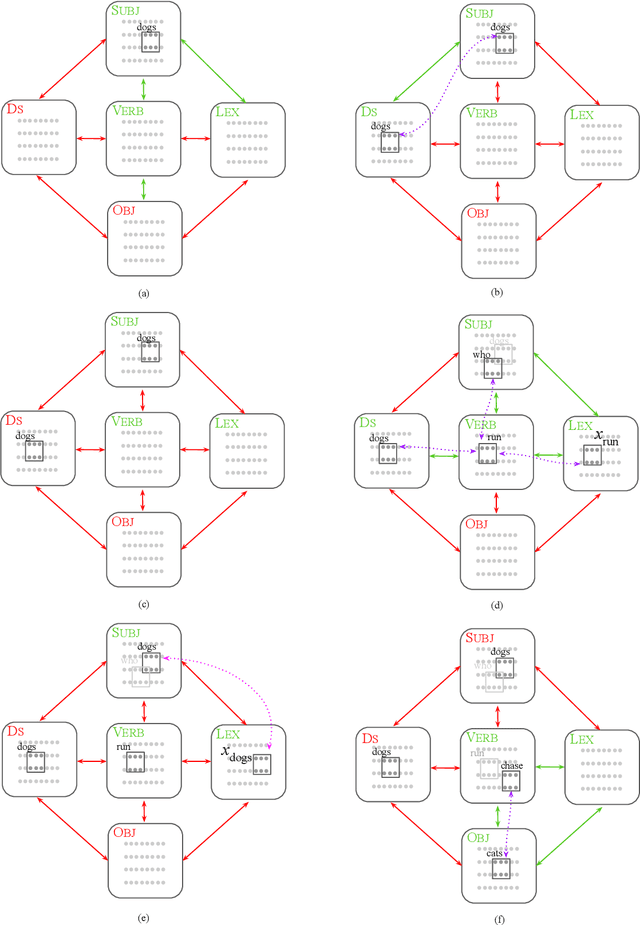

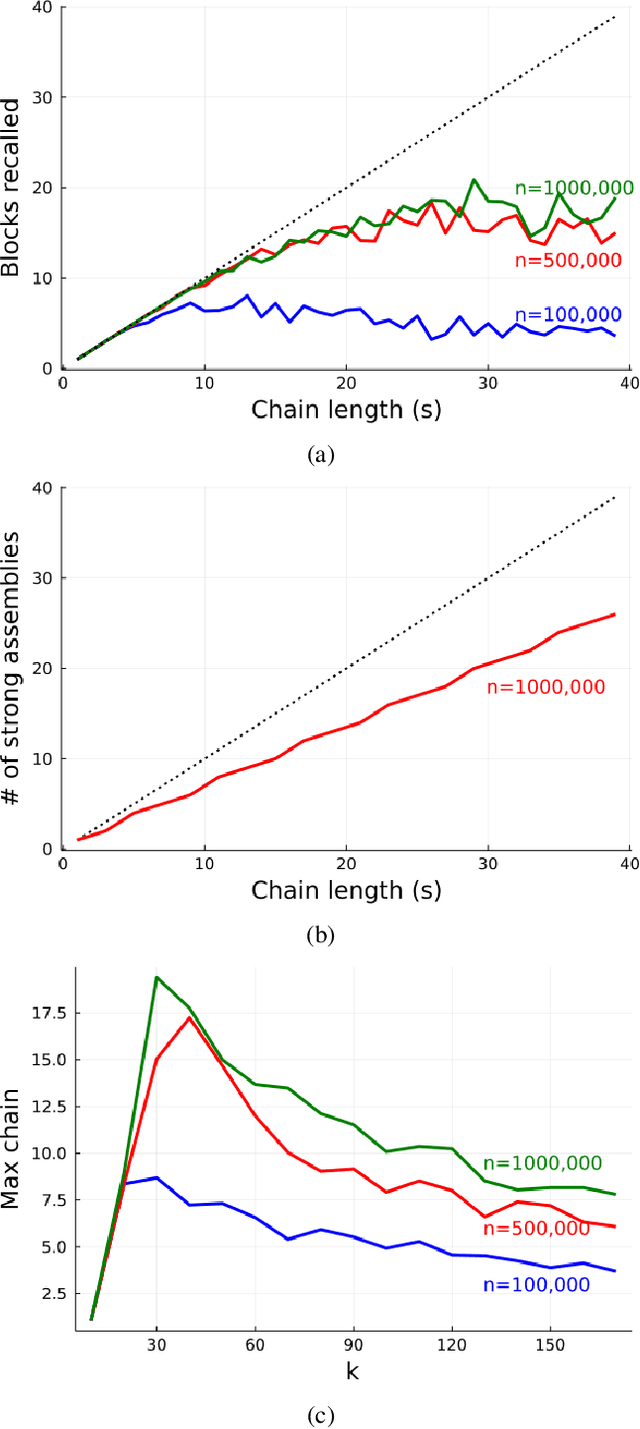

The Architecture of a Biologically Plausible Language Organ

Jun 27, 2023

Abstract:We present a simulated biologically plausible language organ, made up of stylized but realistic neurons, synapses, brain areas, plasticity, and a simplified model of sensory perception. We show through experiments that this model succeeds in an important early step in language acquisition: the learning of nouns, verbs, and their meanings, from the grounded input of only a modest number of sentences. Learning in this system is achieved through Hebbian plasticity, and without backpropagation. Our model goes beyond a parser previously designed in a similar environment, with the critical addition of a biologically plausible account for how language can be acquired in the infant's brain, not just processed by a mature brain.

Center-Embedding and Constituency in the Brain and a New Characterization of Context-Free Languages

Jun 27, 2022

Abstract:A computational system implemented exclusively through the spiking of neurons was recently shown capable of syntax, that is, of carrying out the dependency parsing of simple English sentences. We address two of the most important questions left open by that work: constituency (the identification of key parts of the sentence such as the verb phrase) and the processing of dependent sentences, especially center-embedded ones. We show that these two aspects of language can also be implemented by neurons and synapses in a way that is compatible with what is known, or widely believed, about the structure and function of the language organ. Surprisingly, the way we implement center embedding points to a new characterization of context-free languages.

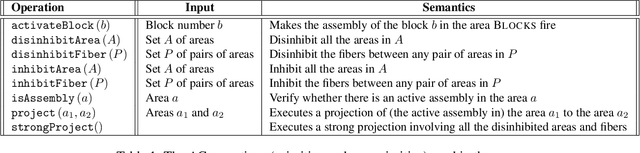

Planning with Biological Neurons and Synapses

Dec 16, 2021

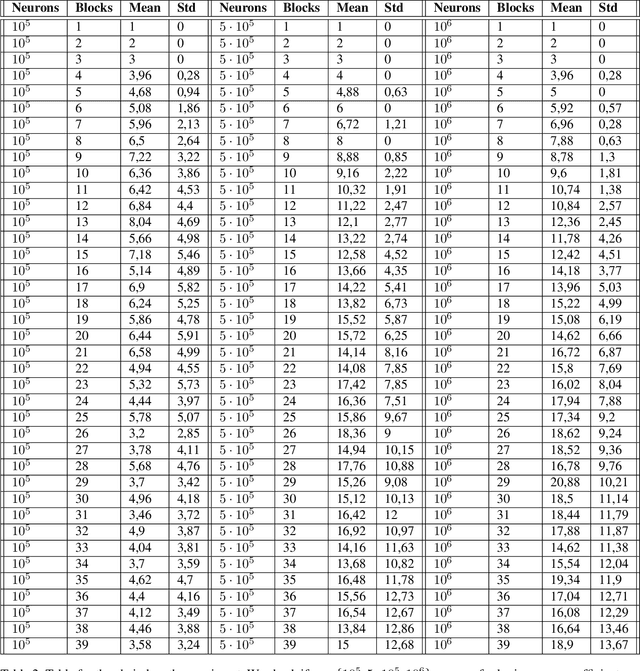

Abstract:We revisit the planning problem in the blocks world, and we implement a known heuristic for this task. Importantly, our implementation is biologically plausible, in the sense that it is carried out exclusively through the spiking of neurons. Even though much has been accomplished in the blocks world over the past five decades, we believe that this is the first algorithm of its kind. The input is a sequence of symbols encoding an initial set of block stacks as well as a target set, and the output is a sequence of motion commands such as "put the top block in stack 1 on the table". The program is written in the Assembly Calculus, a recently proposed computational framework meant to model computation in the brain by bridging the gap between neural activity and cognitive function. Its elementary objects are assemblies of neurons (stable sets of neurons whose simultaneous firing signifies that the subject is thinking of an object, concept, word, etc.), its commands include project and merge, and its execution model is based on widely accepted tenets of neuroscience. A program in this framework essentially sets up a dynamical system of neurons and synapses that eventually, with high probability, accomplishes the task. The purpose of this work is to establish empirically that reasonably large programs in the Assembly Calculus can execute correctly and reliably; and that rather realistic -- if idealized -- higher cognitive functions, such as planning in the blocks world, can be implemented successfully by such programs.
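To make the input and output described in this abstract concrete, here is a small Python sketch of the blocks-world task in ordinary (non-neural) code. It is not the Assembly Calculus program, and the abstract does not say which heuristic the paper implements. The strategy below (dismantle every stack, then rebuild the target bottom-up), the tuple command format, and the names `plan` and `execute` are assumptions made for illustration. Stacks are lists ordered bottom to top, and the initial and target configurations are assumed to contain the same blocks.

```python
def plan(initial, target):
    """Naive strategy: move every non-bottom block to the table, then rebuild."""
    commands = []
    for idx, stack in enumerate(initial):
        for _ in range(len(stack) - 1):
            # Corresponds to "put the top block in stack idx on the table".
            commands.append(("to_table", idx))
    for stack in target:
        for below, block in zip(stack, stack[1:]):
            commands.append(("put_on", block, below))
    return commands

def execute(initial, commands):
    """Apply the commands to a copy of the initial stacks and return the result."""
    stacks = [list(s) for s in initial]
    for cmd in commands:
        if cmd[0] == "to_table":
            # The moved block becomes a new one-block stack on the table.
            stacks.append([stacks[cmd[1]].pop()])
        else:
            _, block, below = cmd
            src = next(s for s in stacks if s and s[-1] == block)
            dst = next(s for s in stacks if s and s[-1] == below)
            src.pop()
            dst.append(block)
    return sorted(s for s in stacks if s)

initial = [["a", "b", "c"], ["d"]]
target = [["c", "a"], ["d", "b"]]
commands = plan(initial, target)
result = execute(initial, commands)
```

Here `commands` contains two table moves followed by two stacking moves, and `result` equals the target configuration.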

A Biologically Plausible Parser

Aug 04, 2021

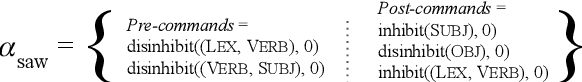

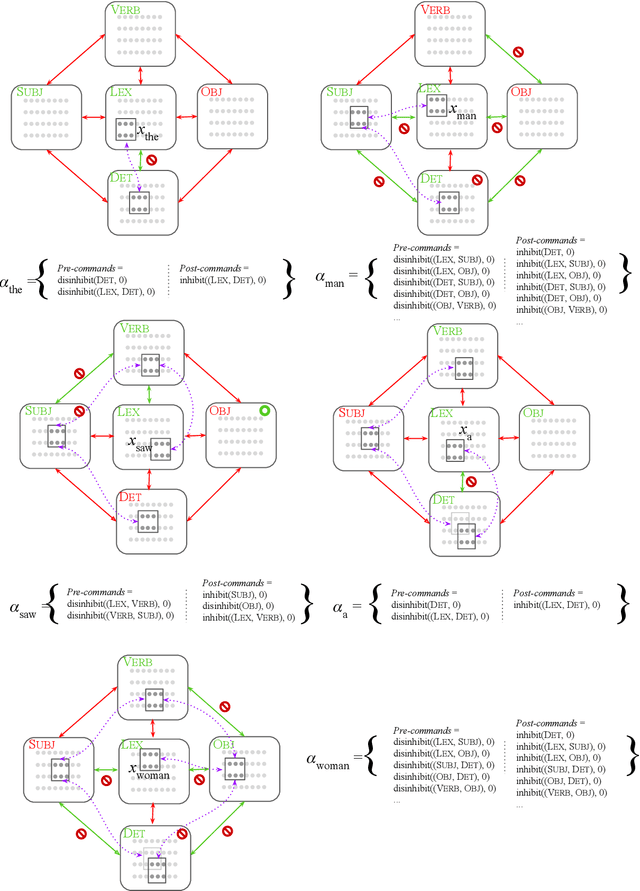

Abstract:We describe a parser of English effectuated by biologically plausible neurons and synapses, and implemented through the Assembly Calculus, a recently proposed computational framework for cognitive function. We demonstrate that this device is capable of correctly parsing reasonably nontrivial sentences. While our experiments entail rather simple sentences in English, our results suggest that the parser can be extended beyond what we have implemented, to several directions encompassing much of language. For example, we present a simple Russian version of the parser, and discuss how to handle recursion, embedding, and polysemy.
