Daniel Dylewsky

Neural models for prediction of spatially patterned phase transitions: methods and challenges

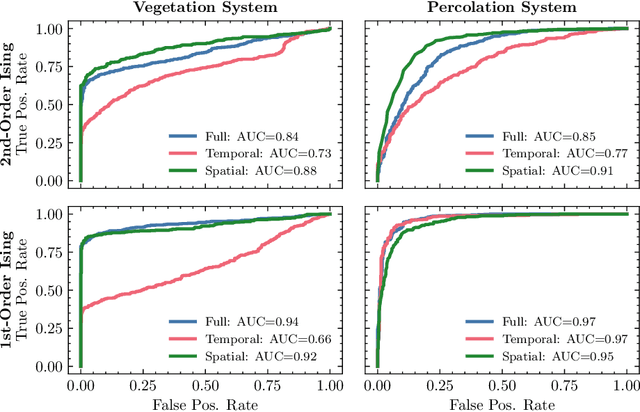

May 14, 2025

Abstract:Dryland vegetation ecosystems are known to be susceptible to critical transitions between alternative stable states when subjected to external forcing. Such transitions are often discussed through the framework of bifurcation theory, but the spatial patterning of vegetation, which is characteristic of drylands, leads to dynamics that are much more complex and diverse than local bifurcations. Recent methodological developments in Early Warning Signal (EWS) detection have shown promise in identifying dynamical signatures of oncoming critical transitions, with particularly strong predictive capabilities being demonstrated by deep neural networks. However, a machine learning model trained on synthetic examples is only useful if it can effectively transfer to a test case of practical interest. These models' capacity to generalize in this manner has been demonstrated for bifurcation transitions, but it is not as well characterized for high-dimensional phase transitions. This paper explores the successes and shortcomings of neural EWS detection for spatially patterned phase transitions, and shows how these models can be used to gain insight into where and how EWS-relevant information is encoded in spatiotemporal dynamics. A few paradigmatic test systems are used to illustrate how the capabilities of such models can be probed in a number of ways, with particular attention to the performances of a number of proposed statistical indicators for EWS and to the supplementary task of distinguishing between abrupt and continuous transitions. Results reveal that model performance often changes dramatically when training and test data sources are interchanged, which offers new insight into the criteria for model generalization.

Predicting discrete-time bifurcations with deep learning

Mar 16, 2023

Abstract:Many natural and man-made systems are prone to critical transitions -- abrupt and potentially devastating changes in dynamics. Deep learning classifiers can provide an early warning signal (EWS) for critical transitions by learning generic features of bifurcations (dynamical instabilities) from large simulated training data sets. So far, classifiers have only been trained to predict continuous-time bifurcations, ignoring rich dynamics unique to discrete-time bifurcations. Here, we train a deep learning classifier to provide an EWS for the five local discrete-time bifurcations of codimension-1. We test the classifier on simulation data from discrete-time models used in physiology, economics and ecology, as well as experimental data of spontaneously beating chick-heart aggregates that undergo a period-doubling bifurcation. The classifier outperforms commonly used EWS under a wide range of noise intensities and rates of approach to the bifurcation. It also predicts the correct bifurcation in most cases, with particularly high accuracy for the period-doubling, Neimark-Sacker and fold bifurcations. Deep learning as a tool for bifurcation prediction is still in its nascence and has the potential to transform the way we monitor systems for critical transitions.
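
For readers unfamiliar with the conventional indicators that such classifiers are benchmarked against, the sketch below computes two widely used ones, rolling variance and rolling lag-1 autocorrelation, on a toy series. It is an illustration only, not the paper's classifier or its benchmark: the AR(1) toy model, the helper names `rolling_ews` and `simulate_ar1`, and all parameter values are assumptions chosen for this example.

```python
import numpy as np

def rolling_ews(x, window):
    """Rolling variance and lag-1 autocorrelation of a 1D series."""
    x = np.asarray(x, dtype=float)
    n = len(x) - window + 1
    var = np.empty(n)
    ac1 = np.empty(n)
    for i in range(n):
        w = x[i:i + window]
        w = w - w.mean()                      # remove the window mean
        denom = np.sum(w ** 2)
        var[i] = denom / window
        ac1[i] = np.sum(w[:-1] * w[1:]) / denom if denom > 0 else 0.0
    return var, ac1

def simulate_ar1(phis, sigma=0.1, seed=0):
    """Toy discrete-time system x[t] = phi[t] * x[t-1] + noise.

    As phi drifts toward 1 the fixed point at 0 loses stability,
    mimicking a slow approach to a transition (hypothetical setup).
    """
    rng = np.random.default_rng(seed)
    x = np.zeros(len(phis))
    for t in range(1, len(phis)):
        x[t] = phis[t] * x[t - 1] + sigma * rng.normal()
    return x

phis = np.linspace(0.1, 0.97, 4000)   # slowly weakening stability
x = simulate_ar1(phis)
var, ac1 = rolling_ews(x, window=500)
print(f"variance: {var[0]:.4f} -> {var[-1]:.4f}")
print(f"lag-1 AC: {ac1[0]:.2f} -> {ac1[-1]:.2f}")
```

Both indicators rise as the multiplier approaches 1, which is the critical slowing down that such indicators are designed to detect.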

Universal Early Warning Signals of Phase Transitions in Climate Systems

May 31, 2022

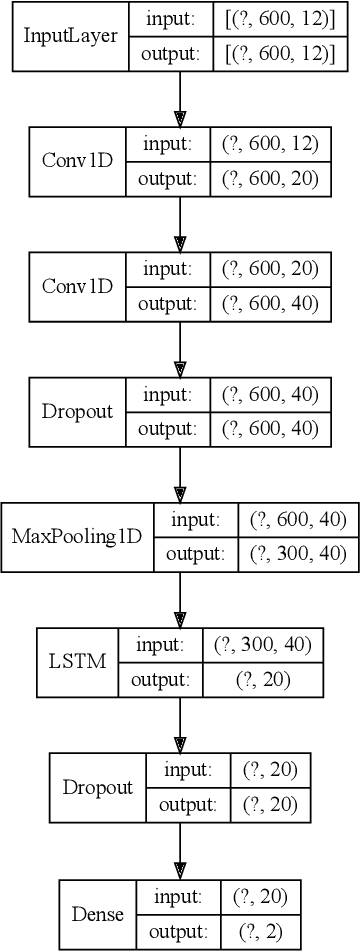

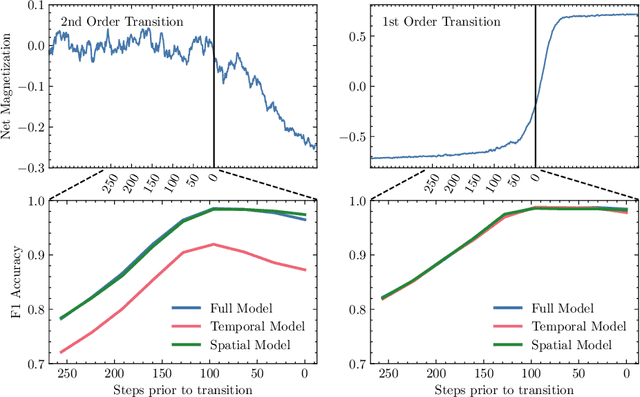

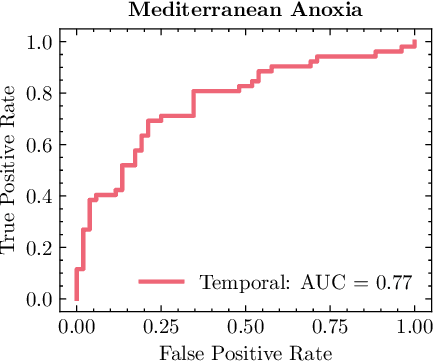

Abstract:The potential for complex systems to exhibit tipping points in which an equilibrium state undergoes a sudden and potentially irreversible shift is well established, but prediction of these events using standard forecast modeling techniques is quite difficult. This has led to the development of an alternative suite of methods that seek to identify signatures of critical phenomena in data, which are expected to occur in advance of many classes of dynamical bifurcation. Crucially, the manifestations of these critical phenomena are generic across a variety of systems, meaning that data-intensive deep learning methods can be trained on (abundant) synthetic data and plausibly prove effective when transferred to (more limited) empirical data sets. This paper provides a proof of concept for this approach as applied to lattice phase transitions: a deep neural network trained exclusively on 2D Ising model phase transitions is tested on a number of real and simulated climate systems with considerable success. Its accuracy frequently surpasses that of conventional statistical indicators, with performance shown to be consistently improved by the inclusion of spatial indicators. Tools such as this may offer valuable insight into climate tipping events, as remote sensing measurements provide increasingly abundant data on complex geospatially-resolved Earth systems.

Dynamic mode decomposition for forecasting and analysis of power grid load data

Oct 08, 2020

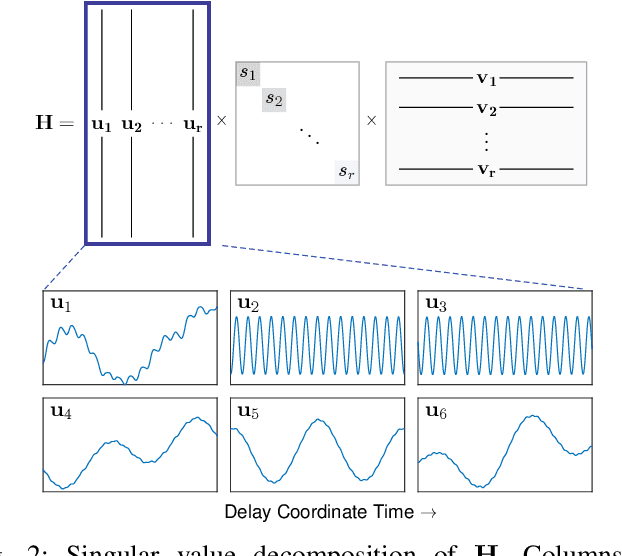

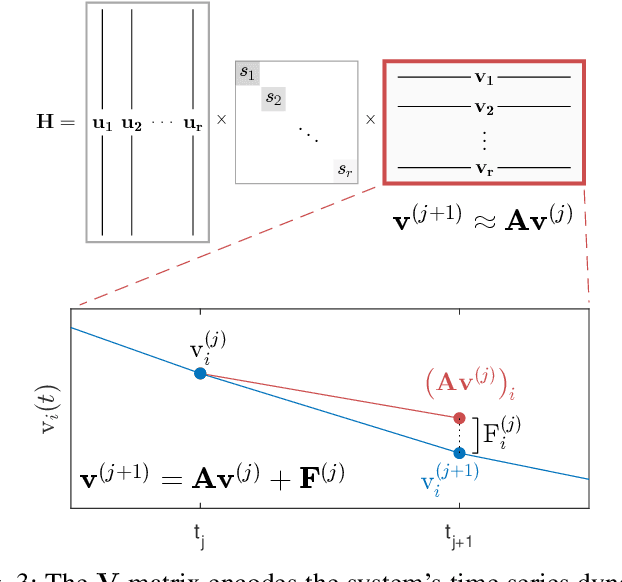

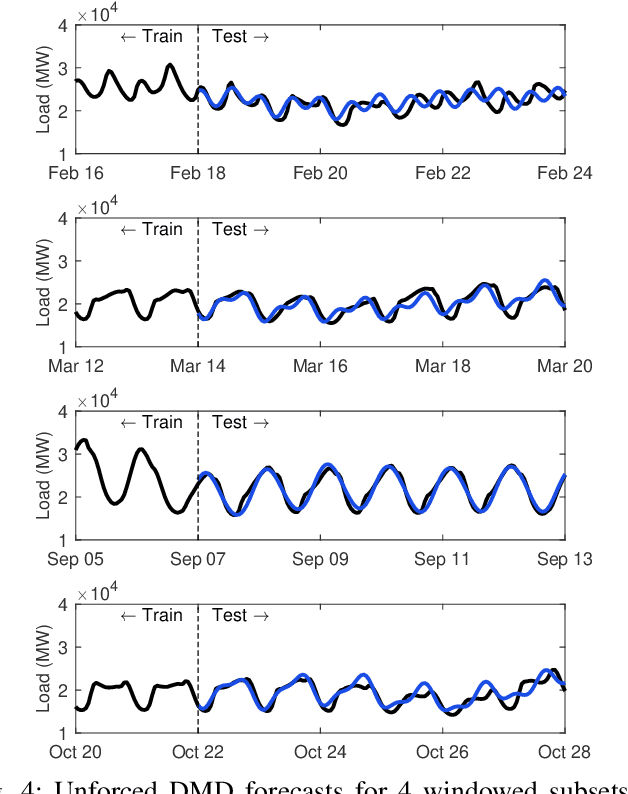

Abstract:Time series forecasting remains a central challenge problem in almost all scientific disciplines, including load modeling in power systems engineering. The ability to produce accurate forecasts has major implications for real-time control, pricing, maintenance, and security decisions. We introduce a novel load forecasting method in which observed dynamics are modeled as a forced linear system using Dynamic Mode Decomposition (DMD) in time delay coordinates. Central to this approach is the insight that grid load, like many observables on complex real-world systems, has an "almost-periodic" character, i.e., a continuous Fourier spectrum punctuated by dominant peaks, which capture regular (e.g., daily or weekly) recurrences in the dynamics. The forecasting method presented takes advantage of this property by (i) regressing to a deterministic linear model whose eigenspectrum maps onto those peaks, and (ii) simultaneously learning a stochastic Gaussian process regression (GPR) process to actuate this system. Our forecasting algorithm is compared against state-of-the-art forecasting techniques not using additional explanatory variables and is shown to produce superior performance. Moreover, its use of linear intrinsic dynamics offers a number of desirable properties in terms of interpretability and parsimony.
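
The deterministic part of this idea, DMD in time-delay coordinates recovering the dominant spectral peaks, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's implementation: it omits the Gaussian process forcing entirely, the synthetic "load" signal with daily and weekly periods is made up, and the helper names `hankel` and `dmd` and the choices of 200 delays and rank 4 are hypothetical.

```python
import numpy as np

def hankel(x, delays):
    """Stack time-delayed copies of a 1D series into a Hankel matrix."""
    x = np.asarray(x, dtype=float)
    cols = len(x) - delays + 1
    return np.stack([x[i:i + cols] for i in range(delays)])

def dmd(H, rank):
    """Exact DMD of a snapshot matrix, truncated to the given rank."""
    X, Y = H[:, :-1], H[:, 1:]                 # snapshot pairs x_k -> x_{k+1}
    U, s, Vh = np.linalg.svd(X, full_matrices=False)
    U, s, Vh = U[:, :rank], s[:rank], Vh[:rank]
    YV = (Y @ Vh.conj().T) / s                 # Y V S^{-1}
    A_tilde = U.conj().T @ YV                  # reduced linear operator
    eigvals, W = np.linalg.eig(A_tilde)
    modes = YV @ W                             # DMD modes in delay space
    return eigvals, modes

# Synthetic hourly signal with a daily (24 h) and weekly (168 h) cycle.
t = np.arange(1000)
x = np.sin(2 * np.pi * t / 24) + 0.5 * np.sin(2 * np.pi * t / 168)

eigvals, modes = dmd(hankel(x, delays=200), rank=4)
periods = np.sort(2 * np.pi / np.abs(np.angle(eigvals)))
print(periods)   # periods in hours implied by the DMD eigenvalues
```

Each pair of complex-conjugate eigenvalues on the unit circle corresponds to one spectral peak, so the eigenvalue angles give the recurrence periods of the linear model.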

Sparse Identification of Slow Timescale Dynamics

Jun 01, 2020

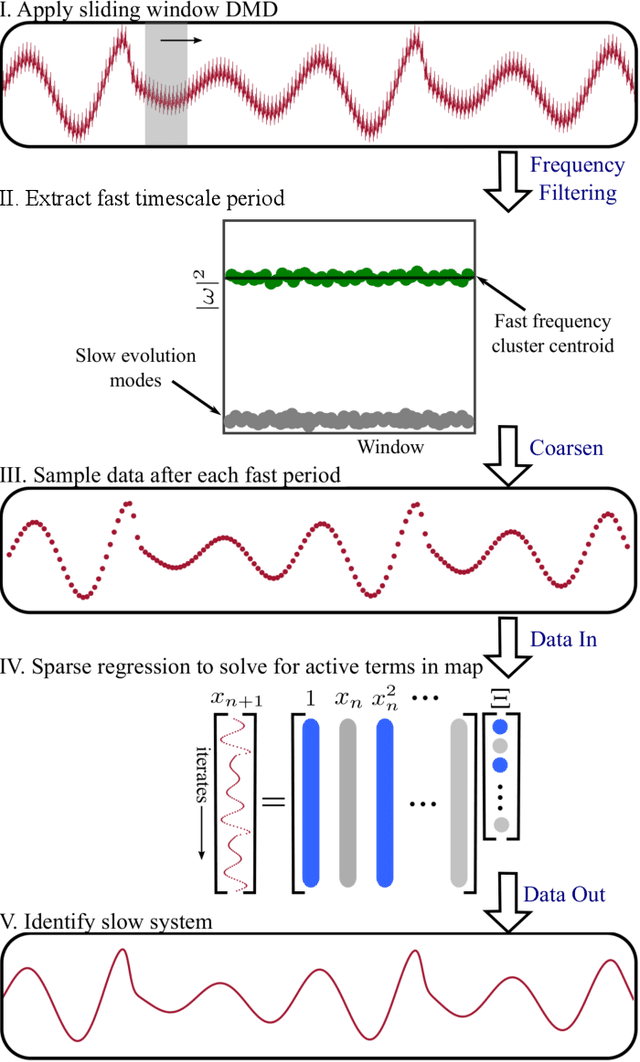

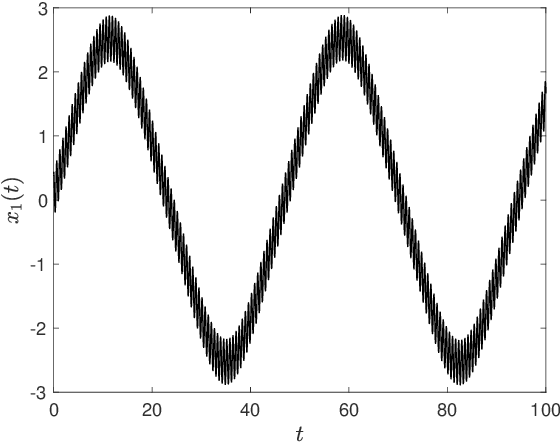

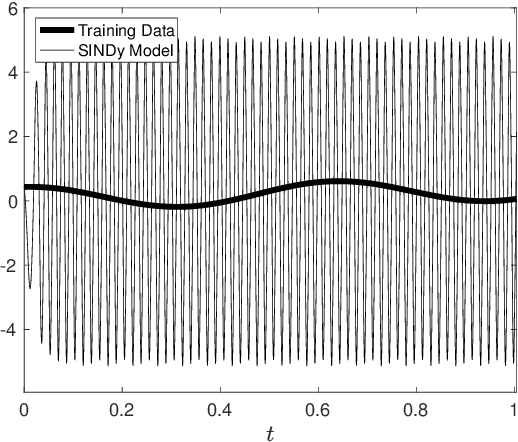

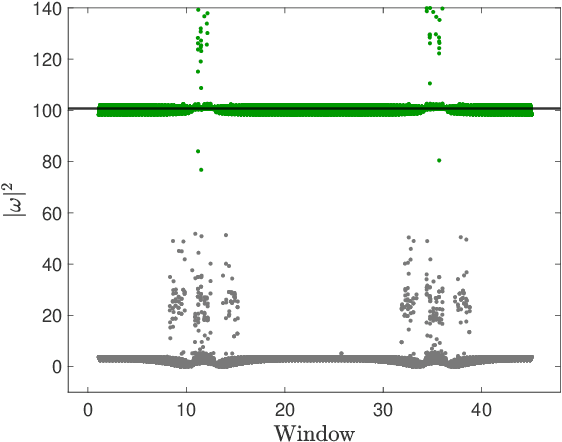

Abstract:Multiscale phenomena that evolve on multiple distinct timescales are prevalent throughout the sciences. It is often the case that the governing equations of the persistent and approximately periodic fast scales are prescribed, while the emergent slow scale evolution is unknown. Yet the coarse-grained, slow scale dynamics is often of greatest interest in practice. In this work we present an accurate and efficient method for extracting the slow timescale dynamics from a signal exhibiting multiple timescales. The method relies on tracking the signal at evenly-spaced intervals with length given by the period of the fast timescale, which is discovered using clustering techniques in conjunction with the dynamic mode decomposition. Sparse regression techniques are then used to discover a mapping which describes iterations from one data point to the next. We show that for sufficiently disparate timescales this discovered mapping can be used to discover the continuous-time slow dynamics, thus providing a novel tool for extracting dynamics on multiple timescales.
