Dan Brown

Mind the Gap: Conformative Decoding to Improve Output Diversity of Instruction-Tuned Large Language Models

Jul 28, 2025

Abstract: Instruction-tuning large language models (LLMs) reduces the diversity of their outputs, which has implications for many tasks, particularly for creative tasks. This paper investigates the "diversity gap" for a writing prompt narrative generation task. This gap emerges as measured by current diversity metrics for various open-weight and open-source LLMs. The results show significant decreases in diversity due to instruction-tuning. We explore the diversity loss at each fine-tuning stage for the OLMo and OLMo 2 models to further understand how output diversity is affected. The results indicate that DPO has the most substantial impact on diversity. Motivated by these findings, we present a new decoding strategy, conformative decoding, which guides an instruct model using its more diverse base model to reintroduce output diversity. We show that conformative decoding typically increases diversity and even maintains or improves quality.

Can Large Language Models Outperform Non-Experts in Poetry Evaluation? A Comparative Study Using the Consensual Assessment Technique

Feb 26, 2025

Abstract: The Consensual Assessment Technique (CAT) evaluates creativity through holistic expert judgments. We investigate the use of two advanced Large Language Models (LLMs), Claude-3-Opus and GPT-4o, to evaluate poetry with a methodology inspired by the CAT. Using a dataset of 90 poems, we found that these LLMs can surpass the results achieved by non-expert human judges at matching a ground truth based on publication venue, particularly when assessing smaller subsets of poems. Claude-3-Opus performed slightly better than GPT-4o. We show that LLMs are viable tools for accurately assessing poetry, paving the way for their broader application in other creative domains.

Is Temperature the Creativity Parameter of Large Language Models?

May 01, 2024

Abstract: Large language models (LLMs) are applied to all sorts of creative tasks, and their outputs vary from beautiful, to peculiar, to pastiche, into plain plagiarism. The temperature parameter of an LLM regulates the amount of randomness, leading to more diverse outputs; therefore, it is often claimed to be the creativity parameter. Here, we investigate this claim using a narrative generation task with a predetermined fixed context, model and prompt. Specifically, we present an empirical analysis of the LLM output for different temperature values using four necessary conditions for creativity in narrative generation: novelty, typicality, cohesion, and coherence. We find that temperature is weakly correlated with novelty, and unsurprisingly, moderately correlated with incoherence, but there is no relationship with either cohesion or typicality. However, the influence of temperature on creativity is far more nuanced and weak than suggested by the "creativity parameter" claim; overall results suggest that the LLM generates slightly more novel outputs as temperatures get higher. Finally, we discuss ideas to allow more controlled LLM creativity, rather than relying on chance via changing the temperature parameter.
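
As a rough illustration of what "temperature regulates the amount of randomness" means in practice, the sketch below applies the standard temperature-scaled softmax to a small set of made-up logits. The function names and the example logits are assumptions chosen for illustration; this is not the experimental setup of the paper, only the general mechanism by which higher temperatures flatten the next-token distribution.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Divide logits by the temperature, then apply a numerically stable softmax."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum to avoid overflow in exp
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy in nats; higher means a flatter, more random distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


# Hypothetical logits for three candidate tokens (illustrative values only).
logits = [2.0, 1.0, 0.1]
low = softmax_with_temperature(logits, 0.5)   # sharper distribution
high = softmax_with_temperature(logits, 2.0)  # flatter distribution

print("T=0.5:", [round(p, 3) for p in low], "entropy:", round(entropy(low), 3))
print("T=2.0:", [round(p, 3) for p in high], "entropy:", round(entropy(high), 3))
```

Raising the temperature moves probability mass away from the most likely token, which is why sampling becomes more varied; whether that variation amounts to creativity is the question the abstract addresses.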

Bits of Grass: Does GPT already know how to write like Whitman?

May 10, 2023

Abstract: This study examines the ability of GPT-3.5, GPT-3.5-turbo (ChatGPT) and GPT-4 models to generate poems in the style of specific authors using zero-shot and many-shot prompts (which use the maximum context length of 8192 tokens). We assess the performance of models that are not fine-tuned for generating poetry in the style of specific authors, via automated evaluation. Our findings indicate that without fine-tuning, even when provided with the maximum number of 17 poem examples (8192 tokens) in the prompt, these models do not generate poetry in the desired style.

Large Music Recommendation Studies for Small Teams

Jan 31, 2023

Abstract: Running live music recommendation studies without direct industry partnerships can be a prohibitively daunting task, especially for small teams. In order to help future researchers interested in such evaluations, we present a number of struggles we faced in the process of generating our own such evaluation system alongside potential solutions. These problems span the topics of users, data, computation, and application architecture.

Automated Time-frequency Domain Audio Crossfades using Graph Cuts

Jan 31, 2023

Abstract: The problem of transitioning smoothly from one audio clip to another arises in many music consumption scenarios, especially as music consumption has moved from professionally curated and live-streamed radios to personal playback devices and services. We present the first steps toward a new method of automatically transitioning from one audio clip to another by discretizing the frequency spectrum into bins and then finding transition times for each bin. We phrase the problem as one of graph flow optimization; specifically min-cut/max-flow.

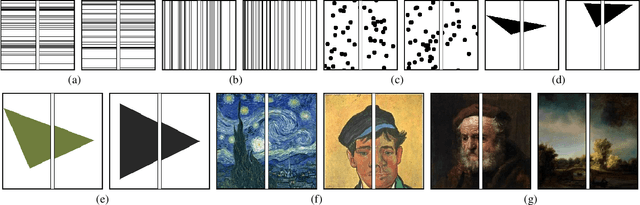

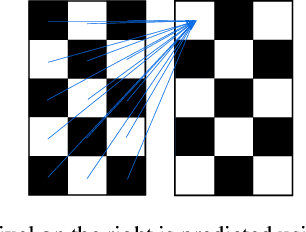

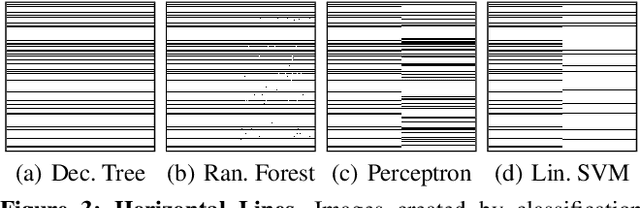

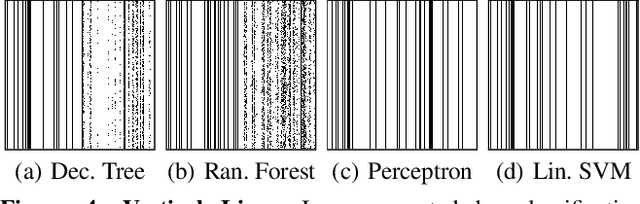

Shallow Art: Art Extension Through Simple Machine Learning

Oct 21, 2019

Abstract: Shallow Art presents, implements, and tests the use of simple single-output classification and regression models for the purpose of art generation. Various machine learning algorithms are trained on collections of computer-generated images, artworks from Vincent van Gogh, and artworks from Rembrandt van Rijn. These models are then provided half of an image and asked to complete the missing side. The resulting images are displayed, and we explore implications for computational creativity.

* 5 pages, 9 figures, presented at the 10th International Conference on Computational Creativity (ICCC 2019)
