Anna Jordanous

Emovectors: assessing emotional content in jazz improvisations for creativity evaluation

Dec 09, 2025

Abstract:Music improvisation is fascinating to study, being essentially a live demonstration of a creative process. In jazz, musicians often improvise across predefined chord progressions (leadsheets). How do we assess the creativity of jazz improvisations? And can we capture this in automated metrics for creativity for current LLM-based generative systems? Demonstration of emotional involvement is closely linked with creativity in improvisation. Analysing musical audio, can we detect emotional involvement? This study hypothesises that if an improvisation contains more evidence of emotion-laden content, it is more likely to be recognised as creative. An embeddings-based method is proposed for capturing the emotional content in musical improvisations, using a psychologically-grounded classification of musical characteristics associated with emotions. Resulting 'emovectors' are analysed to test the above hypothesis, comparing across multiple improvisations. Capturing emotional content in this quantifiable way can contribute towards new metrics for creativity evaluation that can be applied at scale.

Mind the Gap: Conformative Decoding to Improve Output Diversity of Instruction-Tuned Large Language Models

Jul 28, 2025

Abstract:Instruction-tuning large language models (LLMs) reduces the diversity of their outputs, which has implications for many tasks, particularly for creative tasks. This paper investigates the "diversity gap" for a writing prompt narrative generation task. This gap emerges as measured by current diversity metrics for various open-weight and open-source LLMs. The results show significant decreases in diversity due to instruction-tuning. We explore the diversity loss at each fine-tuning stage for the OLMo and OLMo 2 models to further understand how output diversity is affected. The results indicate that DPO has the most substantial impact on diversity. Motivated by these findings, we present a new decoding strategy, conformative decoding, which guides an instruct model using its more diverse base model to reintroduce output diversity. We show that conformative decoding typically increases diversity and even maintains or improves quality.

Is Temperature the Creativity Parameter of Large Language Models?

May 01, 2024

Abstract:Large language models (LLMs) are applied to all sorts of creative tasks, and their outputs vary from beautiful, to peculiar, to pastiche, into plain plagiarism. The temperature parameter of an LLM regulates the amount of randomness, leading to more diverse outputs; therefore, it is often claimed to be the creativity parameter. Here, we investigate this claim using a narrative generation task with a predetermined fixed context, model and prompt. Specifically, we present an empirical analysis of the LLM output for different temperature values using four necessary conditions for creativity in narrative generation: novelty, typicality, cohesion, and coherence. We find that temperature is weakly correlated with novelty, and unsurprisingly, moderately correlated with incoherence, but there is no relationship with either cohesion or typicality. However, the influence of temperature on creativity is far more nuanced and weak than suggested by the "creativity parameter" claim; overall results suggest that the LLM generates slightly more novel outputs as temperatures get higher. Finally, we discuss ideas to allow more controlled LLM creativity, rather than relying on chance via changing the temperature parameter.
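
For readers unfamiliar with how temperature "regulates the amount of randomness", the following is a minimal sketch of standard temperature-scaled softmax sampling. It is not taken from the paper: the function names (`softmax_with_temperature`, `entropy`, `sample_token`) and the example logits are hypothetical, chosen only to illustrate the general mechanism.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities after dividing by a temperature > 0."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy in nats; higher means a flatter, more random distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def sample_token(logits, temperature, rng):
    """Draw one token index from the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


# Hypothetical next-token scores, for illustration only.
example_logits = [2.0, 1.0, 0.5, 0.1]
rng = random.Random(0)

for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(example_logits, t)
    print(f"T={t}: probs={[round(p, 3) for p in probs]}, "
          f"entropy={entropy(probs):.3f}, sample={sample_token(example_logits, t, rng)}")
```

Raising the temperature flattens the distribution, so less likely tokens are sampled more often. This shows only that randomness increases; whether that randomness amounts to creativity is the question the abstract examines.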

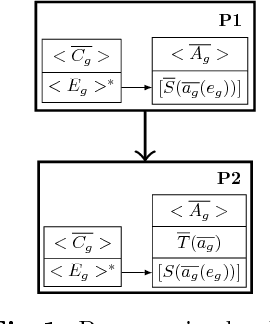

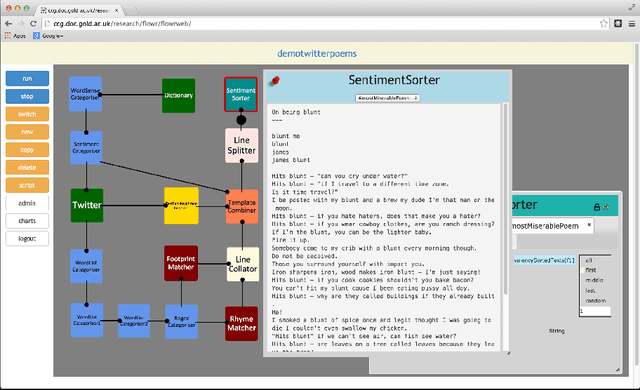

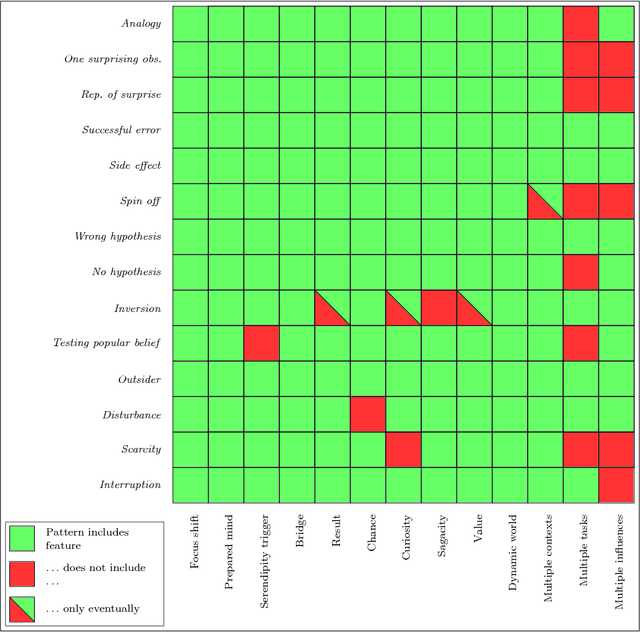

Modelling serendipity in a computational context

May 16, 2017

Abstract:Building on a survey of previous theories of serendipity and creativity, we advance a sequential model of serendipitous occurrences. We distinguish between serendipity as a service and serendipity in the system itself, clarify the role of invention and discovery, and provide a measure for the serendipity potential of a system. While a system can arguably not be guaranteed to be serendipitous, it can have a high potential for serendipity. Practitioners can use these theoretical tools to evaluate a computational system's potential for unexpected behaviour that may have a beneficial outcome. In addition to qualitative features of serendipity potential, the model also includes quantitative ratings that can guide development work. We show how the model is used in three case studies of existing and hypothetical systems, in the context of evolutionary computing, automated programming, and (next-generation) recommender systems. From this analysis, we extract recommendations for practitioners working with computational serendipity, and outline future directions for research.

Modelling Creativity: Identifying Key Components through a Corpus-Based Approach

Sep 12, 2016

Abstract:Creativity is a complex, multi-faceted concept encompassing a variety of related aspects, abilities, properties and behaviours. If we wish to study creativity scientifically, then a tractable and well-articulated model of creativity is required. Such a model would be of great value to researchers investigating the nature of creativity and in particular, those concerned with the evaluation of creative practice. This paper describes a unique approach to developing a suitable model of how creative behaviour emerges that is based on the words people use to describe the concept. Using techniques from the field of statistical natural language processing, we identify a collection of fourteen key components of creativity through an analysis of a corpus of academic papers on the topic. Words are identified which appear significantly often in connection with discussions of the concept. Using a measure of lexical similarity to help cluster these words, a number of distinct themes emerge, which collectively contribute to a comprehensive and multi-perspective model of creativity. The components provide an ontology of creativity: a set of building blocks which can be used to model creative practice in a variety of domains. The components have been employed in two case studies to evaluate the creativity of computational systems and have proven useful in articulating achievements of this work and directions for further research.

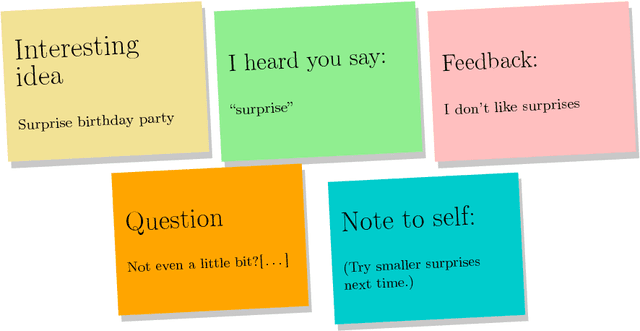

Implementing feedback in creative systems: A workshop approach

May 26, 2015

Abstract:One particular challenge in AI is the computational modelling and simulation of creativity. Feedback and learning from experience are key aspects of the creative process. Here we investigate how we could implement feedback in creative systems using a social model. From the field of creative writing we borrow the concept of a Writers Workshop as a model for learning through feedback. The Writers Workshop encourages examination, discussion and debates of a piece of creative work using a prescribed format of activities. We propose a computational model of the Writers Workshop as a roadmap for incorporation of feedback in artificial creativity systems. We argue that the Writers Workshop setting describes the anatomy of the creative process. We support our claim with a case study that describes how to implement the Writers Workshop model in a computational creativity system. We present this work using patterns other people can follow to implement similar designs in their own systems. We conclude by discussing the broader relevance of this model to other aspects of AI.
