Da He

De-Noising of Photoacoustic Microscopy Images by Deep Learning

Jan 12, 2022

Abstract: As a hybrid imaging technology, photoacoustic microscopy (PAM) suffers from noise due to the maximum permissible exposure of laser intensity, attenuation of ultrasound in tissue, and the inherent noise of the transducer. De-noising is a post-processing step that reduces noise so that PAM image quality can be recovered. However, previous de-noising techniques usually rely heavily on mathematical priors and manually selected parameters, resulting in unsatisfactory and slow de-noising across different noisy images, which greatly hinders practical and clinical applications. In this work, we propose a deep learning-based method to remove complex noise from PAM images without mathematical priors or manual selection of settings for different input images. An attention-enhanced generative adversarial network is used to extract image features and remove various types of noise. The proposed method is demonstrated on both synthetic and real datasets, including phantom (leaf veins) and in vivo (mouse ear blood vessels and zebrafish pigment) experiments. The results show that, compared with previous PAM de-noising methods, our method exhibits good performance in recovering images qualitatively and quantitatively. In addition, a de-noising time of 0.016 s is achieved for an image with $256\times256$ pixels. Our approach is effective and practical for the de-noising of PAM images.

Super-resolution-based Change Detection Network with Stacked Attention Module for Images with Different Resolutions

Feb 27, 2021

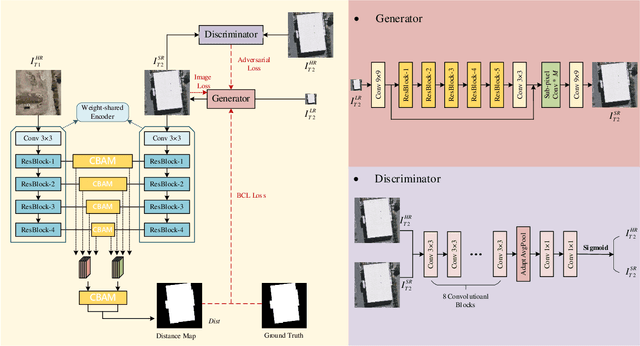

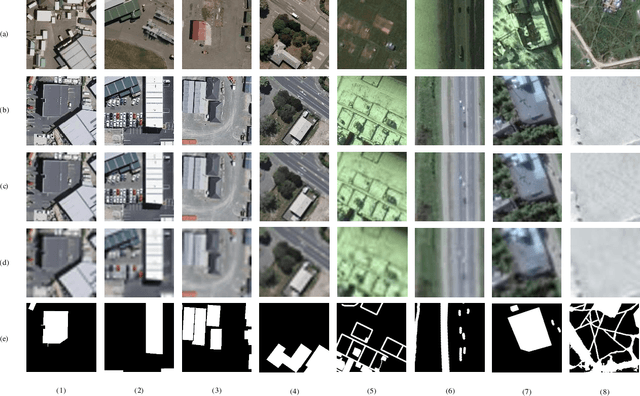

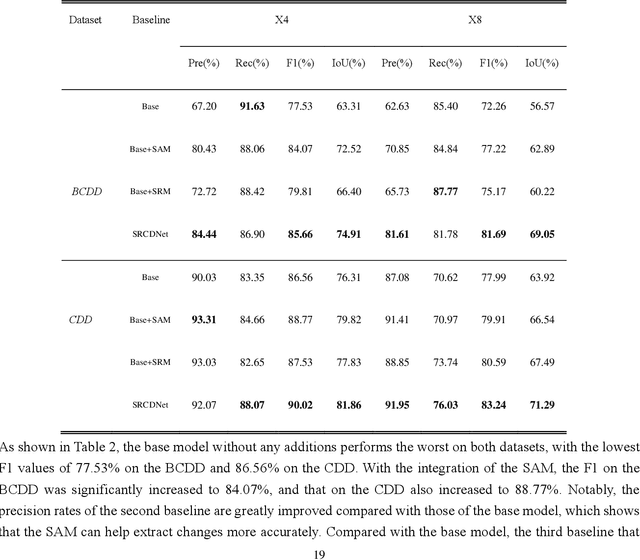

Abstract: Change detection, which aims to distinguish surface changes based on bi-temporal images, plays a vital role in ecological protection and urban planning. Since high-resolution (HR) images typically cannot be acquired continuously over time, bi-temporal images with different resolutions are often adopted for change detection in practical applications. Traditional subpixel-based methods for change detection using images with different resolutions may lead to substantial error accumulation when HR images are employed, because of intraclass heterogeneity and interclass similarity. Therefore, it is necessary to develop a novel method for change detection using images with different resolutions that is more suitable for HR images. To this end, we propose a super-resolution-based change detection network (SRCDNet) with a stacked attention module. The SRCDNet employs a super-resolution (SR) module containing a generator and a discriminator to directly learn SR images through adversarial learning and overcome the resolution difference between bi-temporal images. To enhance the useful information in multi-scale features, a stacked attention module consisting of five convolutional block attention modules (CBAMs) is integrated into the feature extractor. The final change map is obtained through a metric learning-based change decision module, wherein a distance map between bi-temporal features is calculated. The experimental results demonstrate the superiority of the proposed method, which not only outperforms all baselines (with the highest F1 scores of 87.40% on the building change detection dataset and 92.94% on the change detection dataset) but also obtains the best accuracies in experiments performed with images having a 4x and 8x resolution difference. The source code of SRCDNet will be available at https://github.com/liumency/SRCDNet.
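The metric-learning decision step described in the abstract above can be illustrated with a minimal sketch. It assumes the distance map is a per-pixel Euclidean distance between the two feature maps and that a fixed threshold turns it into a binary change map; the abstract specifies neither, and the function names, toy features, and threshold value here are hypothetical, not taken from SRCDNet.

```python
import math


def distance_map(feat_a, feat_b):
    """Per-pixel Euclidean distance between two feature maps.

    Each feature map is a list of rows; each pixel is a list of channel values.
    """
    return [
        [math.dist(pixel_a, pixel_b) for pixel_a, pixel_b in zip(row_a, row_b)]
        for row_a, row_b in zip(feat_a, feat_b)
    ]


def change_mask(dist, threshold=1.0):
    """Mark a pixel as changed (1) when its distance exceeds the threshold.

    The threshold value is an illustrative assumption.
    """
    return [[1 if d > threshold else 0 for d in row] for row in dist]


# Toy 2x2 feature maps with 2 channels per pixel (made-up values).
feat_t1 = [[[0.0, 0.0], [1.0, 1.0]],
           [[2.0, 0.0], [0.5, 0.5]]]
feat_t2 = [[[0.0, 0.0], [1.0, 1.0]],
           [[2.0, 3.0], [3.5, 4.5]]]

dist = distance_map(feat_t1, feat_t2)
mask = change_mask(dist)
print(mask)
```

In this toy input only the bottom row differs between the two dates, so only those pixels are flagged as changed.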

Adherent Mist and Raindrop Removal from a Single Image Using Attentive Convolutional Network

Sep 03, 2020

Abstract: Temperature difference-induced mist adhering to a windshield, camera lens, etc. is often inhomogeneous and obscure, and can easily obstruct vision and severely degrade the image. Together with adherent raindrops, it poses considerable challenges to various vision systems but has not received enough attention. Recent methods for similar problems typically use hand-crafted priors to generate spatial attention maps. In this work, we propose to jointly remove adherent mist and raindrops from a single image using attentive convolutional neural networks. We apply classification activation map attention to our model to strengthen the spatial attention without hand-crafted priors. In addition, smoothed dilated convolution is adopted to obtain a large receptive field without loss of spatial information, and a dual attention module is utilized for efficiently selecting channels and spatial features. Our experiments show that our method achieves state-of-the-art performance and demonstrate that this underrated practical problem is critical to high-level vision scenes.

Photoacoustic Microscopy with Sparse Data Enabled by Convolutional Neural Networks for Fast Imaging

Jun 08, 2020

Abstract: Photoacoustic microscopy (PAM) has been a promising biomedical imaging technology in recent years. However, the point-by-point scanning mechanism results in low-speed imaging, which limits the application of PAM. Reducing the sampling density naturally shortens image acquisition time, but at the cost of image quality. In this work, we propose a method using convolutional neural networks (CNNs) to improve the quality of sparse PAM images, thereby speeding up image acquisition while keeping good image quality. The CNN model utilizes both squeeze-and-excitation blocks and residual blocks to achieve the enhancement, which is a mapping from a 1/4 or 1/16 low-sampling sparse PAM image to a latent fully-sampled image. A perceptual loss function is applied to preserve image fidelity. The model is mainly trained and validated on PAM images of leaf veins. The experiments show the effectiveness of our proposed method, which significantly outperforms existing methods quantitatively and qualitatively. Our model is also tested using in vivo PAM images of blood vessels of mouse ears and eyes. The results show that the model can enhance the quality of sparse PAM images of blood vessels in several respects, which may help fast PAM and facilitate its clinical applications.
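To make the 1/4 and 1/16 sampling ratios concrete, here is a minimal sketch that assumes sparse acquisition keeps every 2nd or every 4th scan point along both axes of a regular grid. The abstract does not state the sampling pattern, so the uniform grid, the `subsample` helper, and the toy image are illustrative assumptions.

```python
def subsample(image, step):
    """Keep every `step`-th pixel along both axes.

    The result holds 1 / step**2 of the original pixels when the image
    dimensions are multiples of `step`.
    """
    return [row[::step] for row in image[::step]]


# Toy 8x8 "fully sampled" image whose pixel value encodes its position.
full = [[r * 8 + c for c in range(8)] for r in range(8)]

quarter = subsample(full, 2)    # 4x4 grid: 1/4 of the scan points
sixteenth = subsample(full, 4)  # 2x2 grid: 1/16 of the scan points

print(len(quarter) * len(quarter[0]), len(sixteenth) * len(sixteenth[0]))
```

Under this assumption, the enhancement network would take an image like `quarter` or `sixteenth` as input and estimate the full grid.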

Adaptive Weighting Depth-variant Deconvolution of Fluorescence Microscopy Images with Convolutional Neural Network

Jul 07, 2019

Abstract: Fluorescence microscopy plays an important role in biomedical research. The depth-variant point spread function (PSF) of a fluorescence microscope produces low-quality images, especially in the out-of-focus regions of thick specimens. Traditional deconvolution to restore the out-of-focus images is usually insufficient since a depth-invariant PSF is assumed. This article aims at handling fluorescence microscopy images with a learning-based depth-variant PSF while reducing artifacts. We propose adaptive weighting depth-variant deconvolution (AWDVD) with a defocus level prediction convolutional neural network (DelpNet) to restore the out-of-focus images. Depth-variant PSFs of image patches can be obtained by DelpNet and applied in the subsequent deconvolution. AWDVD is adopted for a whole image which is patch-wise deconvolved and appropriately cropped before deconvolution. DelpNet achieves an accuracy of 98.2%, outperforming the best previous result on the same microscopy dataset. Image patches of 11 defocus levels after deconvolution are validated with maximum improvements in the peak signal-to-noise ratio and structural similarity index of 6.6 dB and 11%, respectively. The adaptive weighting of the patch-wise deconvolved image can eliminate patch boundary artifacts and improve deconvolved image quality. The proposed method can accurately estimate the depth-variant PSF and effectively recover out-of-focus microscopy images. To the best of our knowledge, this is the first study of handling out-of-focus microscopy images using a learning-based depth-variant PSF. Addressing one of the most common blurs in fluorescence microscopy, the novel method provides a practical technology to improve image quality.
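For readers unfamiliar with the peak signal-to-noise ratio quoted above, the following sketch computes it with the standard definition, PSNR = 10 log10(MAX^2 / MSE). The 8-bit peak value of 255 and the toy pixel values are assumptions for illustration; the abstract does not state the bit depth or the evaluation code used.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Images are lists of rows of pixel intensities; `max_value` is the
    assumed peak intensity (255 for 8-bit data).
    """
    squared_errors = [
        (a - b) ** 2
        for row_ref, row_res in zip(reference, restored)
        for a, b in zip(row_ref, row_res)
    ]
    mse = sum(squared_errors) / len(squared_errors)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


# Toy 2x2 example with made-up intensities.
reference = [[100, 100], [100, 100]]
restored = [[110, 90], [100, 100]]
value = psnr(reference, restored)
print(round(value, 2))
```

Because PSNR is logarithmic in the mean squared error, a 6.6 dB gain corresponds to the error falling by a factor of 10^0.66, roughly 4.6.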
