Cristina Perfecto

Vehicular Cooperative Perception Through Action Branching and Federated Reinforcement Learning

Dec 07, 2020

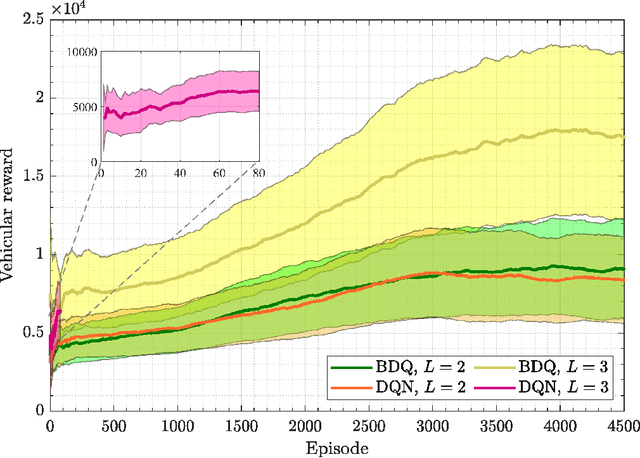

Abstract:Cooperative perception plays a vital role in extending a vehicle's sensing range beyond its line-of-sight. However, exchanging raw sensory data under limited communication resources is infeasible. Towards enabling an efficient cooperative perception, vehicles need to address the following fundamental question: What sensory data needs to be shared?, at which resolution?, and with which vehicles? To answer this question, in this paper, a novel framework is proposed to allow reinforcement learning (RL)-based vehicular association, resource block (RB) allocation, and content selection of cooperative perception messages (CPMs) by utilizing a quadtree-based point cloud compression mechanism. Furthermore, a federated RL approach is introduced in order to speed up the training process across vehicles. Simulation results show the ability of the RL agents to efficiently learn the vehicles' association, RB allocation, and message content selection while maximizing vehicles' satisfaction in terms of the received sensory information. The results also show that federated RL improves the training process, where better policies can be achieved within the same amount of time compared to the non-federated approach.

Cross Layer Optimization and Distributed Reinforcement Learning Approach for Tile-Based 360 Degree Wireless Video Streaming

Nov 12, 2020

Abstract:Wirelessly streaming high quality 360 degree videos is still a challenging problem. When there are many users watching different 360 degree videos and competing for the computing and communication resources, the streaming algorithm at hand should maximize the average quality of experience (QoE) while guaranteeing a minimum rate for each user. In this paper, we propose a \emph{cross layer} optimization approach that maximizes the available rate to each user and efficiently uses it to maximize users' QoE. Particularly, we consider a tile based 360 degree video streaming, and we optimize a QoE metric that balances the tradeoff between maximizing each user's QoE and ensuring fairness among users. We show that the problem can be decoupled into two interrelated subproblems: (i) a physical layer subproblem whose objective is to find the download rate for each user, and (ii) an application layer subproblem whose objective is to use that rate to find a quality decision per tile such that the user's QoE is maximized. We prove that the physical layer subproblem can be solved optimally with low complexity and an actor-critic deep reinforcement learning (DRL) is proposed to leverage the parallel training of multiple independent agents and solve the application layer subproblem. Extensive experiments reveal the robustness of our scheme and demonstrate its significant performance improvement compared to several baseline algorithms.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge